AI for Healthcare: Pt 1, The Rise of AI

The history of AI in medicine. AI for healthcare is just getting started.

The Rise of AI in Medicine

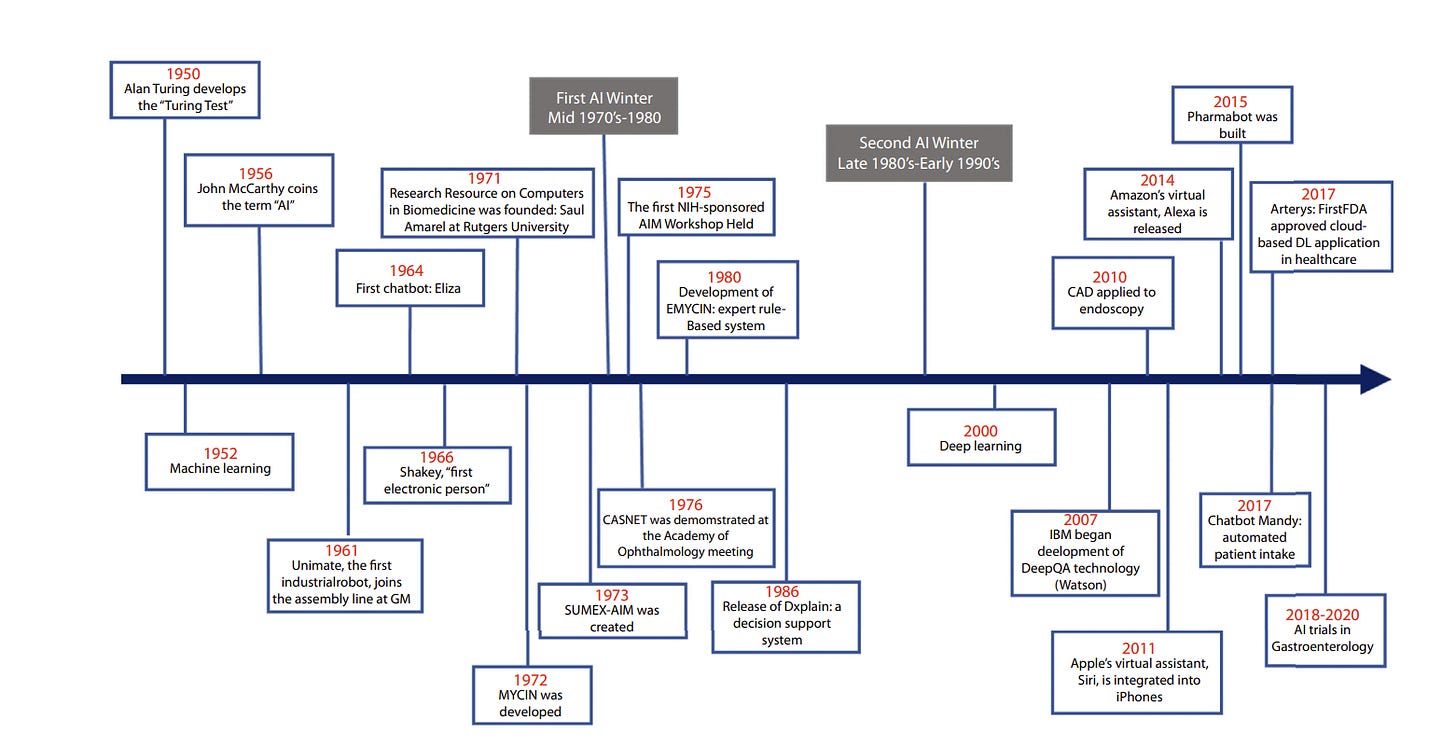

Since the dawn of AI, applying AI to medicine to improve our healthcare has been a futuristic dream. Before the machine learning era of the 2000s, there were various attempts to apply technology and AI to encode medical knowledge. However, as with expert systems in general, rule-based medical decision systems were limited and not scalable.

As machine learning matured in the 2000s, it became possible to develop complex models over large datasets, and that capability was soon applied to medicine. Researchers built machine-learning models that analyzed medical data, such as electronic health records (EHRs) or medical images, to derive useful classification models.

The rise of deep learning and the breakthrough of AlexNet in 2012 led to wider and more impressive applications of AI for diagnostics, particularly in assisting radiologists by analyzing MRI and CT scan images for disease detection. By 2015, deep learning models began outperforming human experts in specific medical imaging tasks.

IBM’s Watson generated a lot of AI hype in 2011 by winning on Jeopardy! IBM parlayed that into announcing Watson for medical uses, specifically diagnosing cancer. Unfortunately, the “Watson Oncology” system fell short of its ambitious goals. By 2017, the typical postmortem was that “IBM’s hubris and hype have crashed into reality.”

IBM’s AI marketing hype outpaced their technology. Watson was a pre-deep-learning NLP-based question-answering system that required human training and feature engineering to reach conclusions. Their AI simply wasn’t up to the task of medical diagnosis. They sold off their Watson Health unit to a private equity company and what was once Watson Health is now Merative.

As NLP improved in the 2010s, NLP-based chatbots were applied to medical use cases. One example is Pharmabot (2015), a pediatric medicine consultant bot. As image and text classification improved, AI systems demonstrated comparable or superior performance to human experts in various medical tasks.

For example, Arterys developed CardioAI, which was able to analyze cardiac magnetic resonance images in a matter of seconds, providing information such as cardiac ejection fraction. It became the first FDA-approved clinical cloud-based DL application in healthcare in 2017. Similarly, in 2018, the FDA approved IDx-DR, the first AI-based medical device for detecting diabetic retinopathy.

A 2020 review, “History of Artificial Intelligence in Medicine,” showed that AI in healthcare had evolved from basic prototypes and concepts to sophisticated working AI systems, able to improve diagnostic accuracy, workflow efficiency, and patient outcomes. However, most of the promise of AI was still in the future.

AI Algorithms in Healthcare

The 2020 review paper noted:

“AI algorithms and their applications will need further study and validation. Furthermore, additional clinical data will be needed to demonstrate its efficacy, value, and impact on patient care and outcome. Finally, we will need to develop cost-effective AI models and products to allow physicians, practices, and hospitals to incorporate AI into daily clinical use.”

So, medical use cases need: Algorithms and models that work; clinical data to train and prove out models; and cost-effective user-friendly AI applications that healthcare practitioners can use daily.

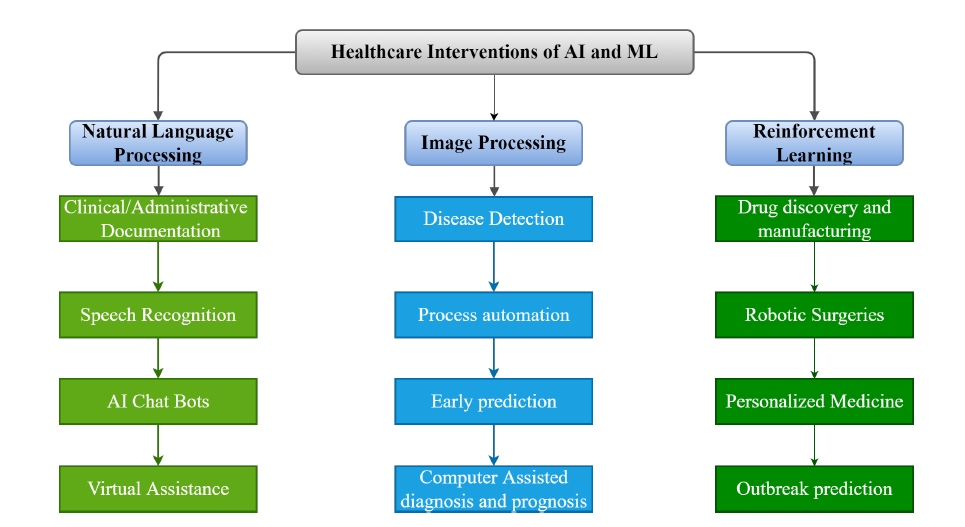

In the pre-ChatGPT AI era, three types of AI algorithms were the basis of most AI medical applications:

NLP (Natural Language Processing) based applications, including chatbots, virtual assistants, document-understanding tools that analyze EHRs (electronic health records), and text-based classification models.

Image processing tools, based on CNNs (convolutional neural networks), that could detect diseases or predict condition progression based on image recognition, for example in radiology.

RL (Reinforcement Learning) based applications, which have been quite varied: Surgical robotics; drug discovery, such as with AlphaFold and successors; personalized medicine, such as RL-based personalized drug design; and designing optimal treatment plans, e.g., for sepsis.

Ramping Up Medical AI with LLMs

The arrival of ChatGPT has opened a new era for AI in medicine. We can use the power of LLMs to understand and reason over medical information more easily than ever before.

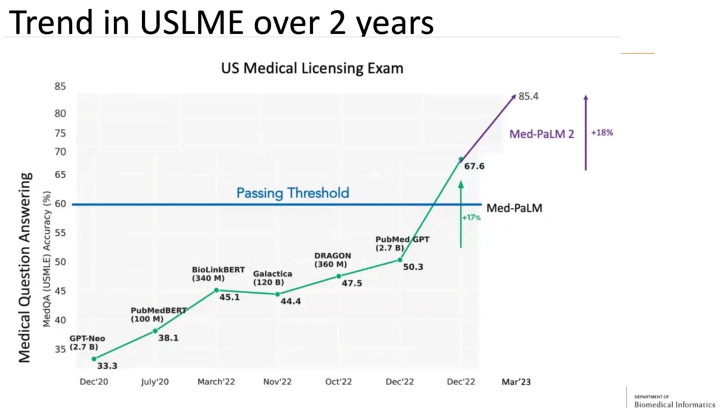

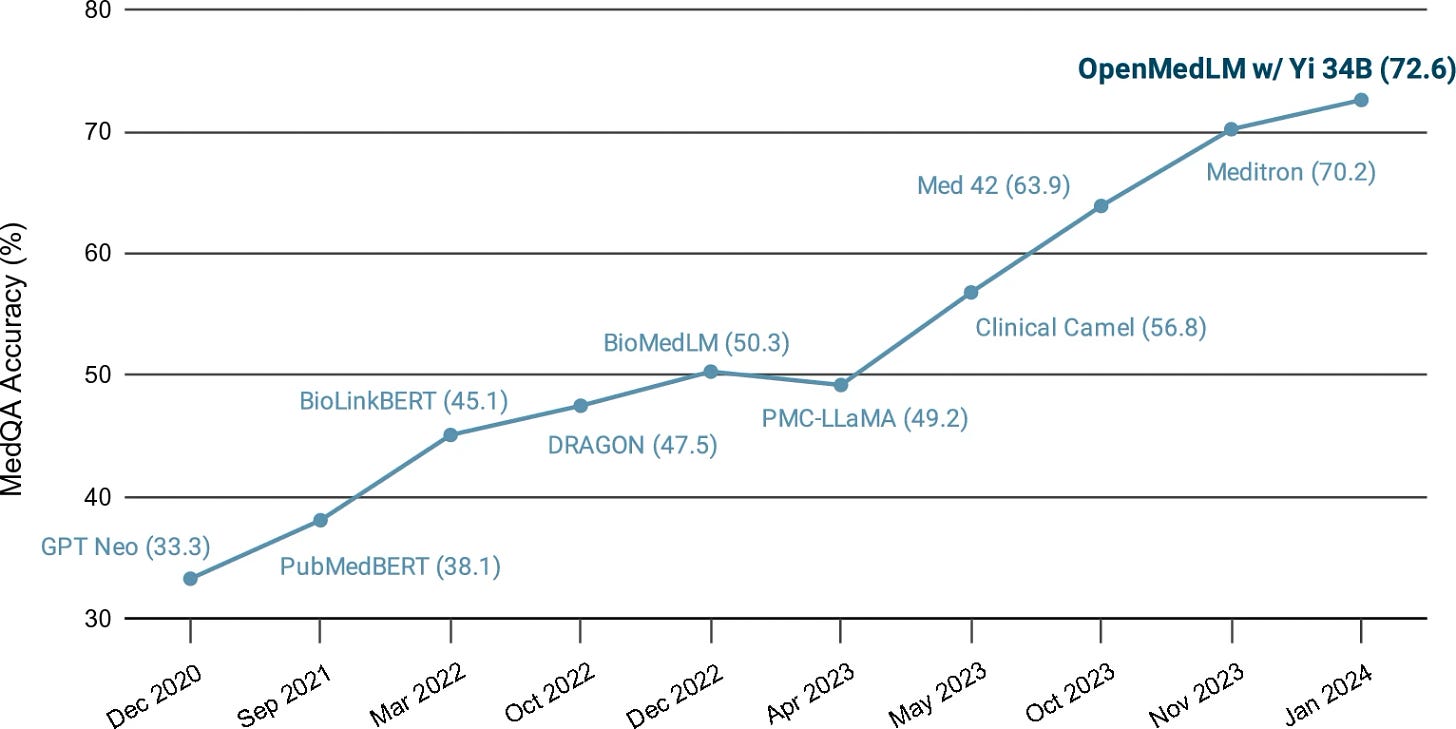

This can be illustrated by how AI models have gotten better at answering questions from the US Medical Licensing Examination (USMLE). The chart below shows the progress from the early days of BERT-based QA systems to Google’s Med-PaLM and Med-PaLM 2, which scored up to 86.5% on the MedQA dataset.

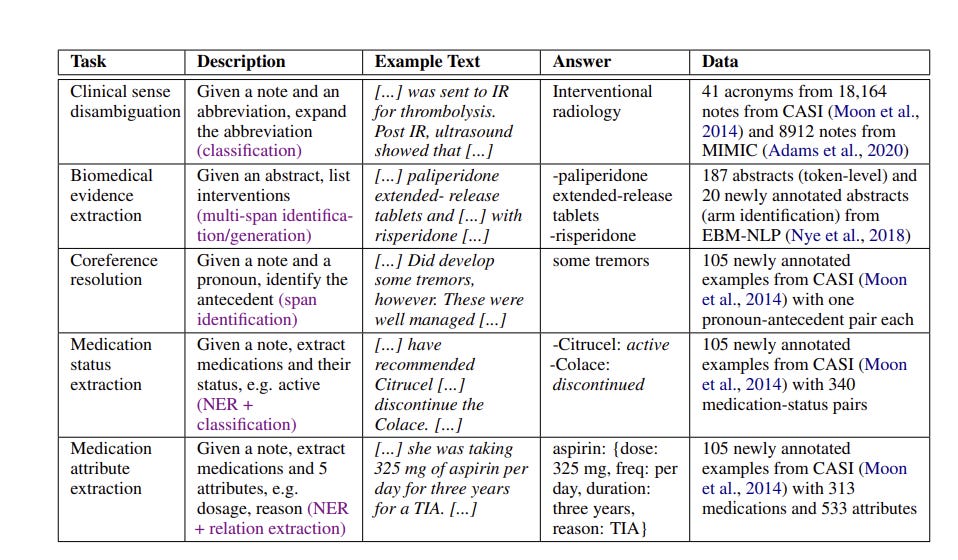

In 2022, it was observed that “Large Language Models are Few-Shot Clinical Information Extractors.” Specifically, GPT-3 was able to correctly respond to several text-based clinical queries without requiring retraining or many examples. LLMs can support medical tasks that previously required difficult bespoke solutions.
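To make "few-shot" concrete, here is a minimal sketch of how such a prompt could be assembled. It is not the method from the cited paper: the `Example` class, the `build_few_shot_prompt` helper, the instruction text, and the clinical snippets are all made up for illustration, and the resulting string would still need to be sent to whatever LLM you use.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked example shown to the model (contents are invented)."""
    note: str     # short clinical text snippet
    answer: str   # the extraction we want the model to imitate


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Concatenate an instruction, a few solved examples, and the new query.

    The prompt ends with an open "Medications:" slot for the model to fill in.
    """
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts += [f"Note: {ex.note}", f"Medications: {ex.answer}", ""]
    parts += [f"Note: {query}", "Medications:"]
    return "\n".join(parts)


# Hypothetical examples; real use would draw on de-identified clinical text.
examples = [
    Example("Pt started on metformin 500 mg BID.", "metformin"),
    Example("Continue lisinopril; hold aspirin before surgery.", "lisinopril, aspirin"),
]

prompt = build_few_shot_prompt(
    "List the medications mentioned in each clinical note.",
    examples,
    "Prescribed atorvastatin 20 mg nightly.",
)
print(prompt)
```

The point of the pattern is that the task is specified entirely in the prompt: swapping the instruction and the handful of examples retargets the same model to a different extraction task, with no retraining.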

Seeing the promise of LLMs in medicine, AI researchers have pursued several avenues to improve and analyze LLM results on MedQA and other datasets, as well as develop better training datasets and benchmarks more relevant to clinical tasks and settings.

The paper “OpenMedLM: prompt engineering can out-perform fine-tuning in medical question-answering with open-source large language models” from June 2024 built OpenMedLM on the open-source Yi 34B model. The authors achieved 72.6% accuracy on MedQA and 81.7% accuracy on the MMLU medical subset, all without creating a fine-tuned model, relying instead on prompting strategies: zero-shot, few-shot, chain-of-thought, and ensemble/self-consistency voting. This outperformed the prior SOTA open medical LLM, MEDITRON-70B.
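Of the prompting strategies listed above, self-consistency voting is the easiest to show in code: sample several answers to the same question and keep the most common one. The sketch below illustrates the general idea only and is not the OpenMedLM implementation. The `self_consistency_vote` function and the canned sampler are hypothetical; in practice `sample_answer` would call an LLM with a chain-of-thought prompt at non-zero temperature and parse out the final answer letter.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def self_consistency_vote(
    sample_answer: Callable[[str], str],
    question: str,
    n_samples: int = 5,
) -> tuple[str, float]:
    """Sample n answers for one question and return the majority answer
    together with the fraction of samples that agreed with it."""
    answers = [sample_answer(question) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Stand-in for an LLM: replays a fixed sequence of answer letters.
# (Assumption for illustration; a real sampler would query a model.)
_canned = cycle(["B", "A", "B", "C", "B"])


def fake_sampler(question: str) -> str:
    return next(_canned)


answer, agreement = self_consistency_vote(
    fake_sampler,
    "Which option is the most likely diagnosis? (A/B/C/D)",
    n_samples=5,
)
print(answer, agreement)
```

The agreement fraction is a useful by-product: a low value signals that the sampled reasoning paths disagree, which may be a reason to flag the question for review rather than trust the majority.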

The paper “Aligning Large Language Models for Clinical Tasks” proposed an alignment prompting strategy, 'expand-guess-refine', for medical question-answering, which improved performance to 70.63% on USMLE questions while using ChatGPT.

The paper “Gemini Goes to Med School” found that Gemini 1.0 lagged behind GPT-4V and Med-PaLM 2 on MedQA tasks.

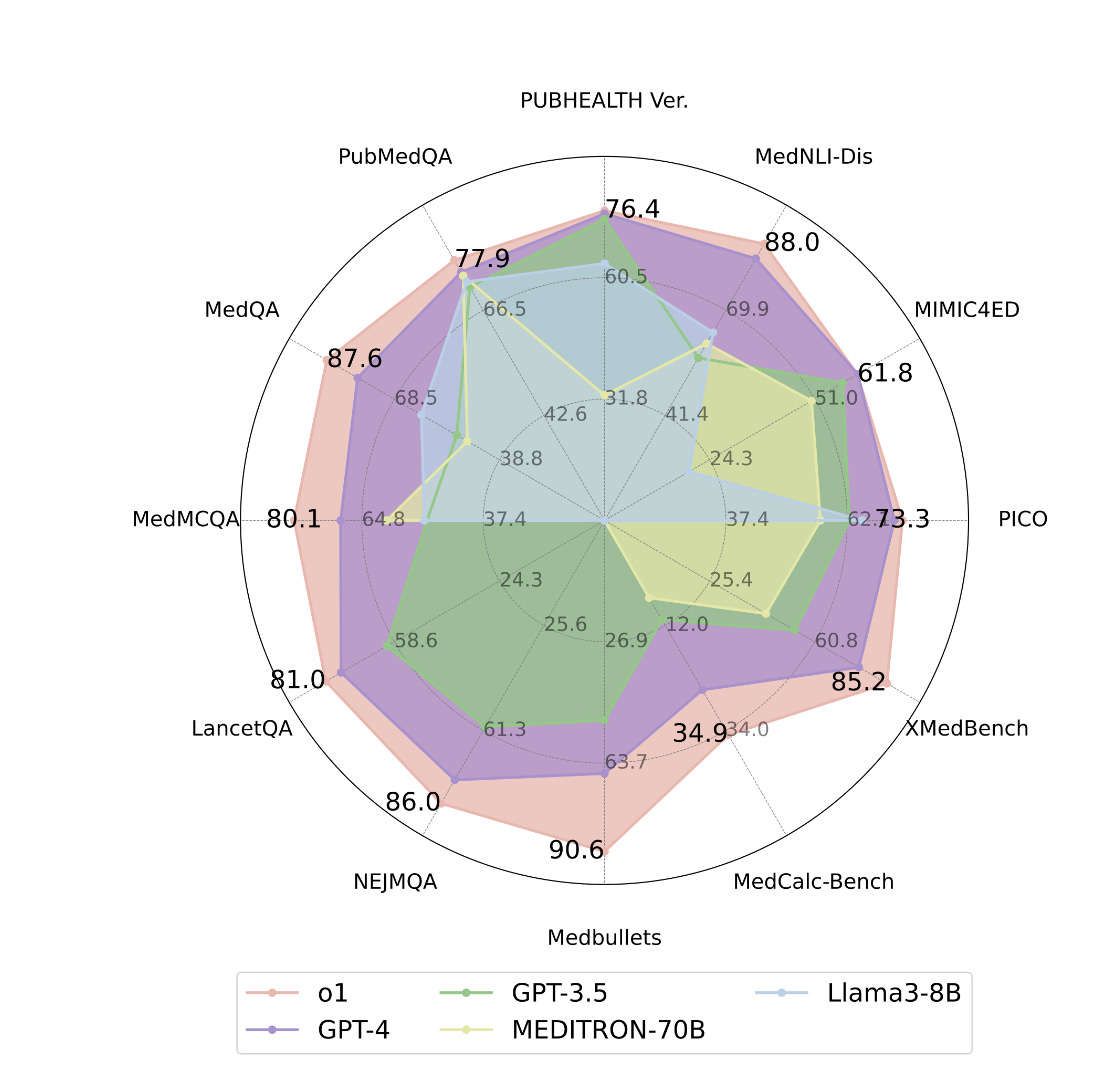

The recent paper “A Preliminary Study of o1 in Medicine: Are We Closer to an AI Doctor?” examines the new o1 model from OpenAI for medical uses. The authors evaluated o1 against GPT-4 and other LLMs across 19 benchmarks, several of which they say “offer greater clinical relevance compared to standard medical QA benchmarks such as MedQA.” They found that o1 surpasses the previous GPT-4 in accuracy by an average of 6.2% across 19 datasets, so they answered their own question affirmatively.

The o1 model is SOTA, but there is still room to improve. What if we combined a SOTA general reasoning LLM, like o1, with the kind of specialized prompting and fine-tuning that produced Med-PaLM 2? Or added a RAG-based system to improve further?

AI in Healthcare Is Just Getting Started

To summarize the prior section: LLMs have greatly advanced in their ability to reason, retrieve knowledge, and understand medical information. The o1 model and medically fine-tuned versions of leading models are near human-level in answering medical questions.

The advance of LLMs leads to the opportunity to apply them to clinical applications. Many text-based medical tasks, like error checking, summarization, classification, and risk score predictions from test readings, have gone from impossible to challenging; others have gone from difficult to trivial.

Just as a lot more goes into making a good doctor than a medical student passing a test, there’s a lot more to AI in healthcare than being able to answer test questions on a medical exam. Getting AI to provide useful assistance in a real clinical setting is a long, arduous process, and for many applications of AI in healthcare, the process is just starting.

Healthcare is a broad, complex industry and medicine has many specialties and procedures. With so much opportunity, AI has the potential to drastically improve healthcare, but its impact has yet to be fully felt.

That said, AI has already been applied to many areas of healthcare: Biomedical research, analyzing patient-doctor interactions, automated diagnosis from image and patient data, precision medicine, patient management, chatbots for patient communication, drug discovery, drug interaction assessment, and more.

The use of AI in healthcare is growing rapidly. We will cover some of these current and upcoming AI applications in medicine in our next installment.

References

This list is partial. Other papers referenced or mentioned are linked inline in the text.

History of artificial intelligence in medicine. Kaul, Vivek et al. Gastrointestinal Endoscopy, Volume 92, Issue 4, 807–812. https://www.giejournal.org/article/S0016-5107(20)34466-7/fulltext

Artificial Intelligence and Machine Learning Based Intervention in Medical Infrastructure: A Review and Future Trends. Kamlesh Kumar, Prince Kumar, Dipankar Deb, Mihaela-Ligia Unguresan and Vlad Muresan. Healthcare 2023, 11(2), 207; https://doi.org/10.3390/healthcare11020207

Reinforcement Learning for Systems Pharmacology-Oriented and Personalized Drug Design. Ryan K. Tan, Yang Liu, and Lei Xie. Expert Opin Drug Discov. 2022 Aug; 17(8): 849–863. https://ncbi.nlm.nih.gov/pmc/articles/PMC9824901/

Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging. Reabal Najjar. Diagnostics (Basel). 2023 Sep; 13(17): 2760. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10487271