AI Week In Review 24.10.05

ChatGPT Canvas, Open NotebookLM, OpenAI Realtime API. Copilot Voice, Vision, Personalization, and Think Deeper. Gemini 1.5 Flash-8B, Pika 1.5, Meta's Movie Gen, Flux 1.1 Pro, Liquid AI's LFMs, Depth Pro.

Top Tools & Hacks

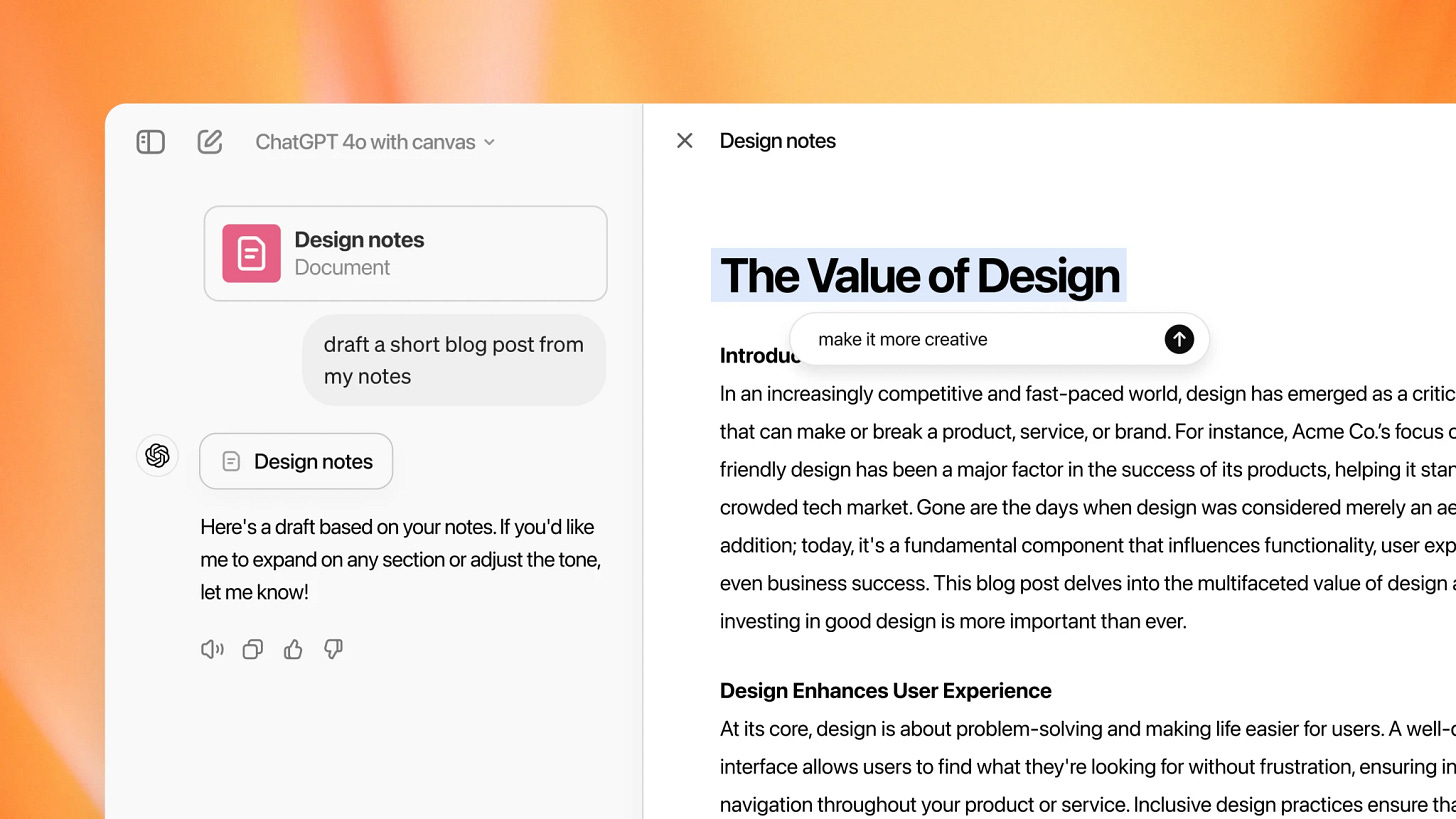

OpenAI launched a new interface for ChatGPT called 'Canvas', which offers a workspace for writing and coding alongside the ChatGPT window. The Canvas feature, built on GPT-4o, lets users modify and compare chatbot responses directly in a side panel, similar to Claude Artifacts. Canvas is rolling out gradually to ChatGPT Plus, Teams, Enterprise, and Edu users before becoming available to all.

OpenAI calls Canvas “a new way of working with ChatGPT to write and code” and says it trained GPT-4o to collaborate as a creative partner in writing and coding. The Canvas interface provides an output panel and controls to edit outputs, adjust the length, change the reading level, or polish the writing. A dedicated workspace interface is a huge step up from the chatbot for AI-human collaborative work.

We’ve mentioned Google’s NotebookLM as our prior tool-of-the-week. More controls are coming to NotebookLM’s “Audio Overviews” podcast generation feature, including personalization and episode length.

Now Gabriel Chua has developed an open-source alternative to Google’s NotebookLM called Open NotebookLM. This tool was “built in an afternoon” and converts documents into personalized podcasts using Llama 3.1. It is available on HuggingFace Spaces to try out, and the code repo is on GitHub.

AI Tech and Product Releases

OpenAI announced new developer tools and APIs at its 2024 DevDay. OpenAI did not bring news on anticipated AI models or the GPT Store, but the event was truly developer-focused, with four major items to advance OpenAI’s AI ecosystem:

OpenAI launched the Realtime API, which lets developers build low-latency speech-to-speech interactions on GPT-4o. It combines speech recognition, text processing, and text-to-speech over a single WebSocket connection. The Realtime API is the most important DevDay release because it gives AI application developers a way to add “Advanced Voice Mode” capability.
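As a rough illustration of what "a single WebSocket connection" means in practice, the sketch below builds the JSON messages a client might send over that one socket: audio chunks going in, then a request for a spoken reply. The event names (`input_audio_buffer.append`, `input_audio_buffer.commit`, `response.create`) follow the beta documentation at launch and are an assumption here; they may have changed, and the helper function names are hypothetical. No network call is made.

```python
import base64
import json


def audio_append_event(pcm_bytes: bytes) -> str:
    """Wrap a chunk of raw audio as a JSON text frame (audio is base64-encoded)."""
    return json.dumps({
        "type": "input_audio_buffer.append",  # assumed beta event name
        "audio": base64.b64encode(pcm_bytes).decode("ascii"),
    })


def audio_commit_event() -> str:
    """Signal that the buffered audio forms one complete user turn."""
    return json.dumps({"type": "input_audio_buffer.commit"})  # assumed beta event name


def response_create_event(instructions: str) -> str:
    """Ask the model to respond with both text and audio."""
    return json.dumps({
        "type": "response.create",  # assumed beta event name
        "response": {
            "modalities": ["text", "audio"],
            "instructions": instructions,
        },
    })


# One user turn: every message travels over the same socket, in order.
turn = [
    audio_append_event(b"\x00\x01\x02\x03"),  # placeholder bytes, not real audio
    audio_commit_event(),
    response_create_event("Answer briefly and in a friendly tone."),
]
for frame in turn:
    print(json.loads(frame)["type"])
```

The design point is that the client never calls separate transcription, chat, and speech endpoints; it streams audio in and receives audio back on the same connection.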

OpenAI added vision to their fine-tuning API. Developers can now fine-tune GPT-4o with images and text to improve vision capabilities. This tuning can be useful for specific image recognition workflows.

Prompt Caching offers a 50% discount on recently processed input tokens. While the discount is smaller than competitors’ prompt caching offerings, and caches are cleared after 10 minutes, it is applied automatically, so users don’t have to change their API calls. It helps most when a session asks repeated questions about the same document or code base.
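To get a feel for what the 50% discount means for a repeated-questions session, here is a minimal sketch of the arithmetic. The per-token price, the token counts, and the function name are hypothetical placeholders, not OpenAI's actual pricing; only the 50% discount comes from the announcement.

```python
def input_cost(total_tokens: int, cached_tokens: int,
               price_per_million: float, cached_discount: float = 0.5) -> float:
    """Estimate input cost when some tokens are billed at the cached rate."""
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    uncached = total_tokens - cached_tokens
    billable = uncached + cached_tokens * (1 - cached_discount)
    return billable * price_per_million / 1_000_000


# Hypothetical session: a 20,000-token document plus a 100-token question,
# asked five times in a row within the cache window.
PRICE = 2.50          # assumed dollars per million input tokens
DOC, QUESTION = 20_000, 100

first_call = input_cost(DOC + QUESTION, 0, PRICE)       # nothing cached yet
later_call = input_cost(DOC + QUESTION, DOC, PRICE)     # document prefix cached
with_cache = first_call + 4 * later_call
without_cache = 5 * first_call
print(f"with caching: ${with_cache:.4f}, without: ${without_cache:.4f}")
```

The first call pays full price; savings only appear on later calls that reuse the same prefix before the cache is cleared.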

OpenAI added Model Distillation to its API, with stored completions and evaluations supported and integrated with its existing fine-tuning offering. This makes it much easier to fine-tune a smaller model to approach the capabilities of a larger teacher model.
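Conceptually, distillation here means collecting a large model's answers and using them as fine-tuning data for a smaller one. The sketch below shows only the data-preparation step, done by hand: it turns (prompt, teacher answer) pairs into chat-format JSONL, one `{"messages": [...]}` object per line. The function name and the sample pairs are hypothetical, and the stored-completions feature is meant to handle this collection for you.

```python
import json
from typing import Iterable, Optional, Tuple


def teacher_pairs_to_jsonl(pairs: Iterable[Tuple[str, str]],
                           system_prompt: Optional[str] = None) -> str:
    """Convert (user prompt, teacher answer) pairs into chat fine-tuning JSONL."""
    lines = []
    for prompt, teacher_answer in pairs:
        messages = []
        if system_prompt:
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": prompt})
        # The teacher's output becomes the target the student learns to imitate.
        messages.append({"role": "assistant", "content": teacher_answer})
        lines.append(json.dumps({"messages": messages}))
    return "\n".join(lines)


# Hypothetical teacher outputs collected from the larger model.
samples = [
    ("Summarize: The cat sat on the mat.", "A cat sat on a mat."),
    ("Translate to French: Good morning.", "Bonjour."),
]
print(teacher_pairs_to_jsonl(samples, system_prompt="You are a concise assistant."))
```

The resulting file would then be uploaded as training data for the smaller model, and the evaluation tooling would compare the student against the teacher.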

OpenAI emphasized empowering developers with new tools, cementing OpenAI as the leading AI model provider for AI application developers. There were deeper reviews of OpenAI DevDay from Latent Space and Simon Willison, who headlined his review: “Let’s build developer tools, not digital God.”

OpenAI also launched Whisper-large-v3-turbo, an updated version of its speech-to-text model that is 8X faster and has better multilingual support.

Microsoft released major updates to Copilot, with many new features making it more versatile and user-friendly:

Copilot Voice allows users to interact with Copilot using voice commands and spoken audio responses.

Think Deeper gives Copilot the ability to reason through more complex problems, using a customized version of OpenAI's o1 model.

Copilot Vision can analyze text and images on web pages you’re viewing and answer queries (e.g., “What’s the recipe for the food in this picture?”)

Personalization tailors Copilot to your preferences and past interactions with Microsoft services.

Microsoft continues to advance its AI capabilities across its product line, including Copilot, Windows 11, and Bing. Windows 11 has been updated with improved Phone Link integration and new AI-powered tools for Paint and Photos. Recall is also back. Additionally, a revamped AI-powered Windows Search enhances search functionality. And Bing is getting AI-powered search summaries with Bing Generative Search.

Google released production Gemini 1.5 Flash-8B with free access via Google AI Studio and the Gemini API. Gemini 1.5 Flash-8B is optimized for speed and efficiency and suitable for tasks like chat, transcription, and long context language translation. The Gemini 8B release performs very well, matching prior versions of Flash on benchmarks.

Google has also been incrementally rolling out AI features across its product suite:

Google has introduced Gmail Q&A for iOS users, allowing users to interact with the Gemini chatbot within the app to answer detailed questions about emails and summarize content.

Google is integrating ads into AI Overviews, its AI-generated summaries for certain search queries, and adding links to relevant web pages within these summaries.

Google is enhancing its Lens app to give near-real-time answers about a user’s surroundings: users can capture video via the Google app and ask Gemini questions about the objects in it.

Google is intensifying its AI efforts in India by integrating its Gemini AI model into search, visual recognition, and language processing to better serve the diverse linguistic needs of Indians. This move includes launching the Gemini Live AI assistant with support for Hindi and eight other Indian languages, new AI features for Maps and merchants, and a Gemini-powered video search feature.

Cohere introduced significant updates to its fine-tuning service for enterprise customers, supporting their latest Command R 08-2024 model with enhancements to improve customization, control, and transparency in adapting AI models for specific tasks.

Pika has released version 1.5, featuring physics-defying special effects called ‘Pikaffects.’ The new effects include options like 'explode it', 'melt it', and 'cake-ify it', which transform imagery into dynamic visuals. Pika 1.5 aims to push the boundaries of creativity in AI-powered video content creation. Both free and paid users can now access these advanced features, which include more lifelike movements and cinematic shot styles.

Meta has unveiled Movie Gen, a text-to-video AI model that generates realistic videos and sets a new high bar for AI video generation. The Movie Gen model can create up to 16 seconds of video at 16 FPS or 10 seconds at 24 FPS, with sound but no voice.
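The two duration limits are easier to compare as frame counts. A quick sketch of the arithmetic (the function name is just for illustration):

```python
def frame_count(seconds: int, fps: int) -> int:
    """Total frames in a clip of the given length and frame rate."""
    return seconds * fps


long_clip = frame_count(16, 16)   # 16 seconds at 16 FPS
smooth_clip = frame_count(10, 24)  # 10 seconds at 24 FPS
print(long_clip, smooth_clip)  # 256 240
```

Both settings land at roughly 250 frames, which suggests the limit is on frames generated rather than on seconds, though that reading is an inference from the numbers and not something Meta states here.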

Meta is withholding public release due to safety concerns around potential misuse. The model will not be integrated into Meta apps like Instagram until next year, but CEO Mark Zuckerberg demonstrated the technology by altering his workout environment through various whimsical scenarios, including Zuck in gladiator armor and a setting where he leg presses chicken nuggets surrounded by French fries.

Black Forest Labs (BFL) released Flux 1.1 Pro, a faster text-to-image model available through a paid API and partner platforms like together.ai and Replicate. Flux 1.1 Pro offers six times the generation speed of earlier models while improving image quality to state-of-the-art levels, with the highest Elo scores. BFL also launched a beta API for its Flux image generation models, giving developers features like content moderation and resolution constraints so they can integrate image generation into their applications.

Nvidia recently announced NVLM 1.0, a new family of multimodal LLMs, and launched its flagship 72B model, NVLM-D-72B, which matches the performance of GPT-4 and Claude 3.5 across various vision and language tasks. NVLM-1.0-D-72B model weights and code are open-source and available via HuggingFace.

AI-powered video editing app Captions introduced a new tool that automates content scheduling and video generation for websites, focusing initially on Instagram Reels and TikTok. The tool analyzes site data to create relevant videos, helping businesses like cafes or clinics build an engaging online presence without extensive video creation skills.

Liquid AI has unveiled its first multimodal AI models, called Liquid Foundation Models (LFMs). Liquid AI is a startup co-founded by former MIT researchers to develop the novel non-transformer-based LFM models, which the company claims have superior performance and memory efficiency compared to transformer-based LLMs. Available in three sizes, these new LFM models are designed for diverse applications across various industries while minimizing memory usage.

In release-retraction news, Sahil Chaudhary, the CEO of Glaive AI, gave a post-mortem on the Reflection 70B model release debacle. While Chaudhary has committed to being more transparent, questions remain about the authenticity of the training data and initial claims.

AI Research News

Our AI research weekly roundup for this week covered AI for Healthcare, including medical LLMs, grounding medical LLMs with RAG and rule alignment, medical datasets, evaluations of LLMs in clinical settings, and ML models for diagnostics.

Apple's AI research team has developed Depth Pro, a new model that generates detailed 3D depth maps from single 2D images in under a second, marking a significant advancement in monocular depth estimation.

Published in the paper “Depth Pro: Sharp Monocular Metric Depth in Less Than a Second,” this technology bypasses the traditional requirement for camera metadata such as intrinsics and can produce highly accurate depth maps. Depth Pro model weights and code are open-source and released on GitHub.

AI Business

OpenAI raised $6.6 billion in the largest venture capital funding round to date, valuing the company at $157 billion. Thrive Capital led this funding round, with participation from Microsoft, Nvidia, and others. OpenAI will use the funds to “scale the benefits of AI.” OpenAI needs to change its corporate structure to a full for-profit structure, a controversial step, so there may be more drama to come.

Voyage AI, backed by Snowflake, secured $20 million in funding to enhance enterprise RAG. Voyage AI aims to improve Retrieval Augmented Generation (RAG) for the enterprise, with more accurate embedding models that will be integrated into Snowflake’s Cortex AI service.

Another OpenAI departure: Tim Brooks, co-lead on OpenAI’s video generator Sora, has left for Google DeepMind to work on video generation technologies and “world simulators.” Brooks’ departure comes as Sora faces technical setbacks and is yet to be released to the public, leaving rivals like Luma and Runway to lead in this space.

The voice and audio AI startup ElevenLabs is nearing a new funding round that could value the company at up to $3 billion. This would triple its valuation from January. Its annualized revenue has grown to $80 million from $25 million at the end of last year.

Meta recently clarified that images and videos shared with its AI through Ray-Ban Meta smart glasses can be used for training AI models in the U.S. and Canada, according to its Privacy Policy. However, photos and videos captured on the glasses are not used unless explicitly submitted to AI by the user.

Policy

California Governor Gavin Newsom vetoed SB 1047, a bill that would have imposed strict regulations on AI development, including the requirement for a “kill switch” and third-party safety audits.

Gov. Newsom signed AB 2013, which requires companies releasing AI models to disclose the sources of their training data by January 2026. This includes disclosing any copyrighted materials included and how they were procured or licensed.

Meanwhile, a federal judge temporarily blocked California's new AI deepfake law (AB 2839) over First Amendment concerns. Judge John Mendez ruled that the new law, which aims to compel individuals to remove election-related deepfakes from social media, is overly broad and could infringe on protected speech.

AI Opinions and Articles

Despite building a company around the concept, OpenAI’s Sam Altman questioned the meaning of artificial general intelligence (AGI) in his DevDay chat with OpenAI’s CPO. As recorded by Simon Willison:

Sam says they’re trying to avoid the term now because it has become so over-loaded. Instead, they think about their new five steps framework. …

Sam: Most people looking back in history won’t agree when AGI happened. The Turing test wooshed past and nobody cared.

Now, even renowned AI researcher Fei-Fei Li admits she doesn’t fully understand AGI. During Credo AI’s summit, Li discussed her confusion about AGI and emphasized the importance of focusing on practical applications.