AI Agent Ecosystem Expands - Mistral's Agents API

Mistral announces Agents API to support agentic AI. LangChain talks about the AI agent future. MCP wins. Google A2A Protocol may join MCP as a standard protocol to deliver multi-agent AI systems.

Introduction

To make AI agents successful, developers need a robust ecosystem of AI infrastructure to support AI application development. We’ve tracked progress in the AI ecosystem since the inception of “AI Changes Everything” two years ago, and it feels like progress has accelerated in this area in the past 6 months.

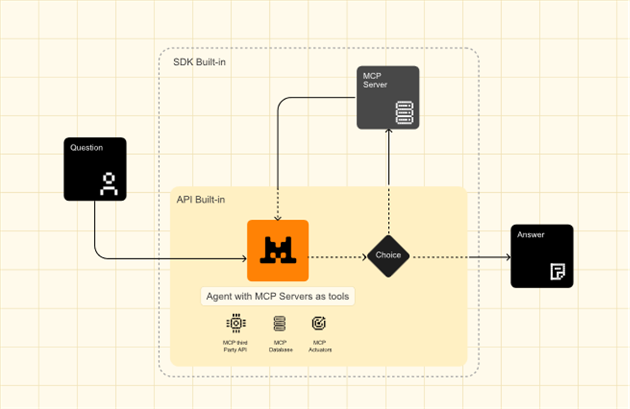

One sign of this progress is the success of MCP. Anthropic introduced Model Context Protocol (MCP) six months ago, and it has been embraced as the open standard for integrating external data sources and tools into an agent. Both Microsoft and Google recently touted MCP support in their own AI agent releases. Just in the last few weeks, Anthropic, OpenAI, and now Mistral have incorporated MCP support into their APIs.

MCP is becoming the essential standard protocol for AI agents, like TCP/IP is for the internet.

Some recent developments offer hints about the future of AI agents:

LangChain’s Interrupt 2025 conference highlighted LangChain's support for AI agent development.

Mistral released its Agents API, which supports building agentic AI with Mistral AI models.

Google’s A2A (Agent2Agent) Protocol, released in April, is gaining traction for the next stage in AI agents: multi-agent AI systems.

LangChain’s Harrison Chase Talks the Future of Agents

Harrison Chase began LangChain as an open-source project in late 2022, just before the ChatGPT release, as a framework for integrating AI models into applications. LangChain was supporting the development of AI agents before it was cool.

LangChain has grown into a company that supports prototyping and production deployment of AI applications with their AI frameworks: LangChain, the original open-source prompt-chaining toolkit, which has expanded to support AI application development in Python and JavaScript; LangGraph, their framework for building AI agents; and LangSmith, their platform for evaluating and monitoring production AI applications.

Earlier this month, they held their first developer conference, Interrupt 2025, billed as “The AI Agent Conference.” Harrison Chase gave the keynote and shared his perspective on AI agents.

Observing that production use of AI agents is growing in areas like code generation, customer service, and AI-driven search tools, he made several key points about where AI agents stand today:

Agents use many models: AI agents rely on multiple LLMs to leverage the unique strengths of each.

Reliable agents start with the right context: Effective agents depend on carefully managed context, so developers need control over what goes into it, through prompting, agent orchestration, and agent flow control.

Building agents is a team sport: Agent development is collaborative, requiring diverse skills.
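To make “control the context” concrete, here is a minimal Python sketch of one common tactic: always keep the system prompt, then fill the remaining budget with the most recent messages. The `Message` type, the word-count stand-in for a tokenizer, and the budget value are illustrative assumptions, not anything LangChain prescribes.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "user", "assistant", or "tool"
    content: str


def approx_tokens(text: str) -> int:
    # Assumption: whitespace word count as a crude stand-in for a real tokenizer.
    return len(text.split())


def build_context(system: Message, history: list[Message], budget: int) -> list[Message]:
    """Keep the system prompt, then as many recent messages as fit the budget."""
    remaining = budget - approx_tokens(system.content)
    kept: list[Message] = []
    for msg in reversed(history):  # newest first, so recent turns win
        cost = approx_tokens(msg.content)
        if cost > remaining:
            break  # stop at the first message that would overflow the budget
        kept.append(msg)
        remaining -= cost
    return [system] + kept[::-1]  # restore chronological order


system = Message("system", "You are a concise support agent.")
history = [
    Message("user", "My order 1234 never arrived."),
    Message("assistant", "Sorry to hear that. Let me check."),
    Message("user", "Any update?"),
]
context = build_context(system, history, budget=16)
print([m.role for m in context])
```

With a 16-word budget the oldest user turn is dropped; a real agent might summarize it instead of discarding it.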

He sees agent development as the job of the Agent Engineer (we have used the term AI Engineer), an interdisciplinary role combining skills in prompting, engineering, product, and machine learning. Looking ahead, he expects agent building to become democratized, while taking agents into production remains a challenge:

AI Observability is Unique: Observability for agents differs from traditional methods because agents deal with large, unstructured, and often multimodal data.

Everyone Will Be an Agent Builder: The push to democratize agent building, empowering individuals from various backgrounds to create AI agents, will expand. Tools will make AI agent building even easier.

Deployment is the Next Major Hurdle: Agents have unique deployment characteristics—they can be long-running, bursty, and prone to flakiness, often requiring human-in-the-loop interactions over long time periods.
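The flakiness and human-in-the-loop concerns above can be sketched in a few lines of Python: retry a failing step with exponential backoff, and gate sensitive actions behind an approval callback. `flaky_step` and `reviewer` are hypothetical stand-ins for a real tool call and a human reviewer; a production platform would also persist state so a paused run could resume much later.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(step: Callable[[], T], attempts: int = 3, base_delay: float = 0.1) -> T:
    """Run a flaky step, retrying with exponential backoff plus random jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return step()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last failure
            # Wait base_delay, 2*base_delay, 4*base_delay, ... plus jitter.
            time.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    raise AssertionError("unreachable")  # the loop always returns or raises


def run_with_approval(action: str, approve: Callable[[str], bool]) -> str:
    """Human-in-the-loop gate: execute the action only if the reviewer approves."""
    if not approve(action):
        return f"skipped: {action}"
    return f"executed: {action}"  # placeholder for actually performing the action


calls = {"n": 0}


def flaky_step() -> str:
    # Hypothetical tool call that fails twice with a transient error, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


def reviewer(action: str) -> bool:
    # Hypothetical stand-in for a human: approve anything that is not destructive.
    return "delete" not in action


result = with_retries(flaky_step, attempts=3, base_delay=0.01)
print(result, calls["n"])
print(run_with_approval("send refund email", reviewer))
print(run_with_approval("delete customer account", reviewer))
```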

His keynote doubled as a sales pitch for LangChain’s tools for agent engineers: LangGraph pre-builts, LangGraph Studio, and the Open Agent Platform (an open-source, no-code solution) help democratize agent building; LangGraph Platform addresses deployment challenges with APIs for streaming, memory, and horizontal scaling; and LangSmith provides observability into the unique concerns of AI agents.

One takeaway is the importance of AI agent orchestration for multi-agent AI systems.

Mistral’s Agents API

Mistral has just released its Agents API, joining other leading AI companies that offer in-house API support for agentic AI. The API is designed for building agents on top of Mistral's AI models. It operates as a cloud-based service, accessed primarily through Mistral's client library, much as developers use OpenAI's Responses API.

Key Features of the Mistral Agents API:

Persistent Memory: Maintains memory across conversations, addressing a common gap in other frameworks.

Built-in Tools: Includes tools for code execution, web search (likely Bing or Brave), image generation (using Black Forest Labs’ FLUX models), document RAG, and custom tool integration.

Agentic Orchestration: Supports complex workflows such as agent hand-offs and sequential and parallel execution.
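Persistent memory can be pictured as a store that keeps each conversation's history under an ID, so a later request only needs that ID to continue. This is a hypothetical, in-process sketch (`ConversationStore` is not part of Mistral's SDK); it assumes the service, rather than the client, owns the history.

```python
import uuid


class ConversationStore:
    """Hypothetical server-side memory: history outlives any single request."""

    def __init__(self) -> None:
        self._history: dict[str, list[dict[str, str]]] = {}

    def start(self, user_input: str) -> str:
        """Open a new conversation and return its ID."""
        conv_id = str(uuid.uuid4())
        self._history[conv_id] = [{"role": "user", "content": user_input}]
        return conv_id

    def append(self, conv_id: str, role: str, content: str) -> None:
        """Add a turn to an existing conversation."""
        if conv_id not in self._history:
            raise KeyError(f"unknown conversation: {conv_id}")
        self._history[conv_id].append({"role": role, "content": content})

    def history(self, conv_id: str) -> list[dict[str, str]]:
        """Return a copy of the stored turns so callers cannot alter memory."""
        return [dict(turn) for turn in self._history[conv_id]]


store = ConversationStore()
cid = store.start("What is MCP?")
store.append(cid, "assistant", "An open protocol for connecting agents to tools and data.")
# A later request needs only the ID; earlier turns are still available.
store.append(cid, "user", "How does A2A relate to it?")
turns = store.history(cid)
print(len(turns), turns[0]["content"])
```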

The API also offers the flexibility to integrate custom tools, expanding its utility.
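Custom tools are typically declared to the model as JSON Schema function definitions; when the model requests a call, the application runs the function and returns the result. The sketch below assumes that function-calling format; the `get_stock_price` tool, its fictional tickers and prices, and the `dispatch` helper are hypothetical, chosen to echo the financial agent in Mistral's cookbook.

```python
import json

# Tool declaration in the JSON Schema "function" format used for function calling.
# The tool itself is hypothetical.
STOCK_TOOL = {
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Return the latest price for a stock ticker symbol.",
        "parameters": {
            "type": "object",
            "properties": {"ticker": {"type": "string", "description": "Ticker, e.g. ACME"}},
            "required": ["ticker"],
        },
    },
}

# Fictional tickers and prices so the sketch runs offline; a real tool would
# query a market-data service.
_FAKE_PRICES = {"ACME": 101.5, "INITECH": 42.0}


def get_stock_price(ticker: str) -> dict:
    price = _FAKE_PRICES.get(ticker.upper())
    if price is None:
        return {"error": f"unknown ticker: {ticker}"}
    return {"ticker": ticker.upper(), "price": price}


# Map tool names the model may request to local Python functions.
REGISTRY = {"get_stock_price": get_stock_price}


def dispatch(name: str, arguments_json: str) -> str:
    """Run a model-requested tool call and return the result as a JSON string."""
    func = REGISTRY.get(name)
    if func is None:
        return json.dumps({"error": f"no tool named {name}"})
    args = json.loads(arguments_json)  # tool-call arguments typically arrive as JSON text
    return json.dumps(func(**args))


print(dispatch("get_stock_price", '{"ticker": "acme"}'))
```

In a full agent loop, the model would see `STOCK_TOOL`, respond with a call naming `get_stock_price`, and the application would send the `dispatch` result back to the model as a tool message.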

A key strength of Mistral's offering is this orchestration layer: one agent can hand a task off to another, and agent steps can run sequentially or in parallel, giving developers finer control over agent behavior.
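The three orchestration patterns can be sketched with Python's `asyncio`: a sequential pipeline, a parallel fan-out, and a hand-off where a triage rule picks the agent that takes over. The agents are hypothetical async functions, and a keyword match stands in for the model-driven routing a real hand-off would use; this shows the control flow only, not Mistral's API.

```python
import asyncio
from typing import Awaitable, Callable

Agent = Callable[[str], Awaitable[str]]


async def researcher(task: str) -> str:
    await asyncio.sleep(0)  # hypothetical agent; stands in for a model or tool call
    return f"notes({task})"


async def writer(notes: str) -> str:
    await asyncio.sleep(0)  # hypothetical agent
    return f"draft({notes})"


async def sequential(task: str, steps: list[Agent]) -> str:
    """Sequential execution: each agent's output becomes the next agent's input."""
    result = task
    for step in steps:
        result = await step(result)
    return result


async def parallel(tasks: list[str], agent: Agent) -> list[str]:
    """Parallel execution: independent sub-tasks run concurrently."""
    return list(await asyncio.gather(*(agent(t) for t in tasks)))


async def handoff(task: str, routes: dict[str, Agent], default: Agent) -> str:
    """Hand-off: a triage rule (a keyword match here) picks the agent that takes over."""
    for keyword, agent in routes.items():
        if keyword in task.lower():
            return await agent(task)
    return await default(task)


async def main() -> None:
    print(await sequential("A2A adoption", [researcher, writer]))
    print(await parallel(["MCP", "A2A"], researcher))
    print(await handoff("Write a summary", {"write": writer}, default=researcher))


asyncio.run(main())
```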

To demonstrate the API’s capabilities, Mistral has released a cookbook of practical applications. Examples include a GitHub coding agent built on the Devstral model, a financial agent for stock price analysis, and a multi-agent system for earnings call analysis that integrates Mistral OCR for document processing.

Functionally, this is similar to other AI vendors’ APIs; the OpenAI, Gemini, and Anthropic frameworks can be used to build similar AI agent applications. However, Mistral’s Agents API natively supports Mistral foundation models, making it valuable for organizations that specifically want to build Mistral-based AI agents.

Google’s A2A Protocol

Google supports AI agent development with the Agent Development Kit, Agentspace, and Firebase Studio, as well as its Agent2Agent (A2A) Protocol.

Google's A2A Protocol defines how agents communicate with each other at the service level through an exposed API, standardizing communication between different AI agents. It targets a current pain point, interoperability between agents built on different frameworks, and aims to enable capability discovery and standardize task management.

While this sounds like MCP, A2A and MCP are complementary, with A2A handling agent discovery and inter-agent communication and MCP standardizing access to external data, APIs, and tools from an agent.

Inspired by Anthropic's Model Context Protocol, A2A uses JSON-RPC for its interactions. Communication revolves around tasks, which represent work assigned to an agent. Key components include the client (which initiates the call), the remote agent (which is called), and a server that facilitates communication. Agents can also exchange artifacts, which are data such as text, binary files, or images. A central element is the Agent Card, a JSON file that describes an agent's capabilities, such as its name, description, URL, provider, version, and skills.
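A minimal Python sketch of these pieces: an Agent Card with the fields listed above, a capability check a client might run before delegating, and a JSON-RPC request that hands the remote agent a task. All card values are hypothetical, and the `tasks/send` method name and message layout follow an early A2A draft; treat them as assumptions and check the current A2A specification.

```python
import json
import uuid

# A minimal Agent Card using the fields described above. All values are hypothetical.
agent_card = {
    "name": "Earnings Call Analyst",
    "description": "Summarizes earnings call transcripts.",
    "url": "https://agents.example.com/earnings",
    "provider": {"organization": "Example Corp"},
    "version": "0.1.0",
    "skills": [
        {"id": "summarize-call", "name": "Summarize call",
         "description": "Produce a bullet summary of a transcript."},
    ],
}


def has_skill(card: dict, skill_id: str) -> bool:
    # Capability discovery: the client inspects the card before delegating work.
    return any(skill["id"] == skill_id for skill in card.get("skills", []))


def send_task_request(text: str, request_id: int = 1) -> dict:
    """Client-side JSON-RPC 2.0 request asking a remote agent to work on a task.

    Assumption: method name and params layout follow an early A2A draft.
    """
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),  # task ID chosen by the client
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


if has_skill(agent_card, "summarize-call"):
    request = send_task_request("Summarize the Q1 call transcript.")
    print(json.dumps(request, indent=2))
```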

With competing alternatives for multi-agent orchestration, this raises the question: Will A2A succeed as a standard protocol, or will another protocol or framework (such as LangGraph, which can define agent swarms) dominate? The jury is still out, but Google has been adding partners to the A2A ecosystem.

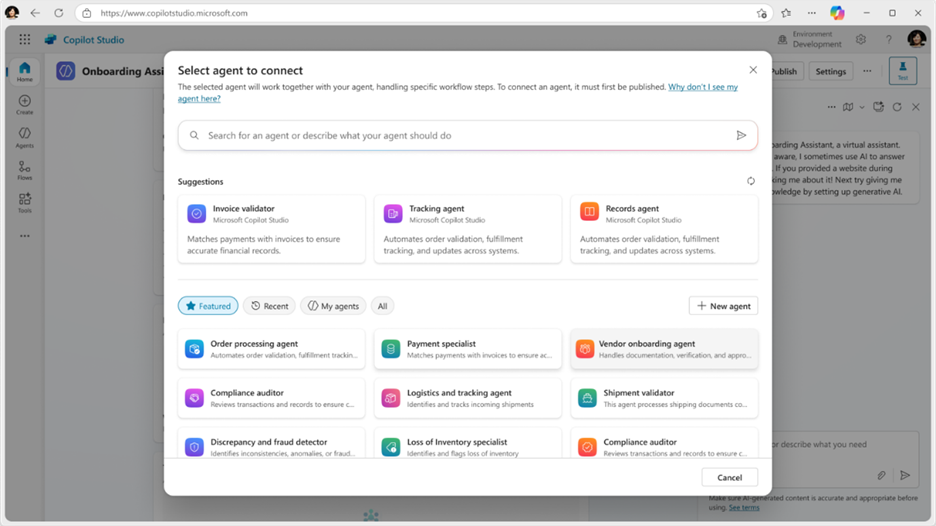

For example, Google announced Microsoft as an A2A partner in early May, and Microsoft will use A2A to support multi-agent coordination in agentic AI applications:

As agents take on more sophisticated roles, they need access not only to diverse models and tools but also to one another. That is why we are committed to advancing open protocols like Agent2Agent (A2A), coming soon to Azure AI Foundry and Copilot Studio, which will enable agents to collaborate across clouds, platforms, and organizational boundaries.

For end users, it looks like this: Microsoft’s Copilot Studio now supports coordinating multiple specialized agents, each responsible for a discrete task such as data analysis, scheduling, or decision routing. The agents communicate through a shared orchestration layer to tackle complex, multi-step workflows.
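A shared orchestration layer can be sketched as a coordinator that runs specialized agents in order over a common state, each agent handling one discrete task and merging its output back in. The agents, the threshold, and the `Orchestrator` class are hypothetical illustrations of the pattern, not Copilot Studio's implementation.

```python
from typing import Any, Callable

# Shared state that every agent can read from and write to.
State = dict[str, Any]
AgentFn = Callable[[State], State]


def data_analyst(state: State) -> State:
    # Hypothetical: summarize numbers already placed in shared state.
    values = state["sales"]
    return {"average_sales": sum(values) / len(values)}


def decision_router(state: State) -> State:
    # Hypothetical rule: escalate when average sales fall below a threshold.
    return {"route": "escalate" if state["average_sales"] < 100 else "routine"}


def scheduler(state: State) -> State:
    # Hypothetical: pick a meeting slot whose urgency depends on the route.
    return {"meeting": "today" if state["route"] == "escalate" else "next week"}


class Orchestrator:
    """Shared orchestration layer: runs registered agents over a common state."""

    def __init__(self) -> None:
        self.agents: dict[str, AgentFn] = {}

    def register(self, task: str, agent: AgentFn) -> None:
        self.agents[task] = agent

    def run(self, plan: list[str], state: State) -> State:
        for task in plan:
            # Each specialized agent handles one step and merges its output.
            state = {**state, **self.agents[task](state)}
        return state


orch = Orchestrator()
orch.register("analyze", data_analyst)
orch.register("route", decision_router)
orch.register("schedule", scheduler)
final = orch.run(["analyze", "route", "schedule"], {"sales": [80, 90, 110]})
print(final)
```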

Conclusion – The Agentic AI Stack

The AI agent infrastructure ecosystem is maturing, making building an AI application easier than ever and expanding support for deployment of AI agents into production.

Many of us have noticed a ‘design convergence’ as the major AI labs develop a common set of APIs to support agentic AI. Simon Willison expressed it this way on X:

It's interesting how the major LLM API vendors are converging on the following features:

Code execution: Python in a sandbox

Web search - like Anthropic, Mistral seem to use Brave

Document library aka hosted RAG

Image generation (FLUX for Mistral)

Model Context Protocol

Some call it the LLM OS stack. I call it the agentic AI stack, since these are the common components and features of an agentic AI application. AI vendors are competing not just on AI models but on the supporting ecosystem for building agentic AI. This is why Mistral has delivered its Agents API.

Model Context Protocol (MCP) represents a shift from explicitly programming AI to defining capabilities and letting the AI determine how to use tools. Now that models can handle tool calling robustly, more tools and capabilities can be usefully plugged in, creating a virtuous cycle of more powerful AI systems.
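The shift from scripting calls to declaring capabilities shows up in how an MCP server exposes tools: each tool advertises a name, a description, and an `inputSchema`, and the model chooses when to call it. This stdlib-only sketch answers `tools/list` and `tools/call` JSON-RPC requests; the `add` tool is hypothetical, and the official MCP Python SDK offers a higher-level decorator (`FastMCP` with `@mcp.tool()`) for the same idea.

```python
import json
from typing import Any, Callable

# Registry of declared tools, keyed by tool name.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(input_schema: dict) -> Callable:
    """Declare a function as a tool: say what it does, not when to call it."""
    def decorator(func: Callable) -> Callable:
        TOOLS[func.__name__] = {
            "name": func.__name__,
            "description": (func.__doc__ or "").strip(),
            "inputSchema": input_schema,
            "handler": func,  # kept server-side, never sent to the client
        }
        return func
    return decorator


@tool({
    "type": "object",
    "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
    "required": ["a", "b"],
})
def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


def _error(request: dict, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request["id"], "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Answer MCP-style tools/list and tools/call JSON-RPC 2.0 requests."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        # Advertise capabilities; the model decides which tool, if any, to use.
        tools = [{k: v for k, v in t.items() if k != "handler"} for t in TOOLS.values()]
        result: dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name", ""))
        if entry is None:
            return _error(request, -32602, f"Unknown tool: {params.get('name')}")
        value = entry["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(value)}], "isError": False}
    else:
        return _error(request, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
reply = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(listing["result"]["tools"][0]["name"], reply["result"]["content"][0]["text"])
```

Adding another capability means declaring one more decorated function; no client-side control flow has to change.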

The next stage in this AI agent evolution is multi-agent AI, which uses different AI agents for distinct tasks as building blocks of the system. As noted by Harrison Chase, this requires AI agent orchestration. Multi-agent AI systems involve AI agent discovery, processing, interaction, and coordination.

This is ripe for standardization, because AI agent discovery and coordination benefit from a standard connector or protocol. It’s not yet clear if Google’s A2A will be that standard solution, but the trends are positive. We may soon see MCP and A2A combining to form the standard protocols for autonomous multi-agent systems.