AI Engineer World's Fair '24: Highlights

Top takeaways from AIEWF: Faster, Better, Cheaper AI models; data, evals and iteration is path to AI app success; LlamaFile can run LLMs fast on local CPUs.

What I learned at the World’s Fair

I went to the AI Engineer World’s Fair to get an in-person experience for what is going on in the AI application development space. I gained a ton of learning and met some great people. I am sharing some of what I learned in this and followup articles.

First, the AI Engineer World's Fair is a dev conference, for AI builders, formalized as AI engineers. However, there is something for many who fall outside this. AI users can get a peek at the new AI model features and capabilities; software engineers of all stripes can learn about AI coding assistants; and we all are going to need to know about AI trends, since AI changes everything.

This debrief is too much for one article, so this will focus on some of the highlights from some of the best keynotes and talks, with a focus on key AI principles and trends.

Follow-up articles will focus on developments, releases and products for parts of the AI ecosystem and AI stack:

AI infrastructure products and releases

AI coding assistants and AI agents

RAG (retrieval-augmented generation) and managing data

AI models and LLM training

GPUs and LLM Inference

AIEWF is a Dev Conference for AI Builders

The AI Engineer World’s Fair is a developer conference. This should be pointed out to distinguish it from an academic conference - there were only a few academic papers referenced and not much math on slides - and a vendor conference.

While there were some vendor pitches in Expo sessions, it also was not a ‘vendor’ conference, heavy on pitches for vendor-specific frameworks. The vendor talks were mostly helpful and not dominating.

As a developer conference, it lived up to it’s billing as a conference for AI Engineers. It helped developers building AI applications to understand the state of AI, the pace of AI progress, what others are building, and what solutions and tools are out there to build AI-based products.

Andrej Barth of AWS provided good high-level advice to keep in mind, similar to my own advice in “How To Thrive in the AI Era”:

Learn AI.

Increase your productivity using AI. For developers, this means adopting AI

coding assistants to explain and generate code. He used this advice to pitch Amazon Q developer, but there are many options now. For others, the advice holds: Use AI to speed tasks, answer questions and advise, help you write, etc.

Start prototyping and building with AI. There are many frameworks, tools and cloud-based inference services to develop and run AI applications. AWS offers Bedrock and the Converse API to access and serve AI models.

Integrate AI into your applications.

Stay updated and engage with the community.

AI Trends: Simon Willison’s Keynote

“Faster, Better, Cheaper … The GPT-4 barrier is no longer a problem.”

Simon Willison’s keynote was a good high-level State of the Union on AI. He mentioned the progress recently in getting multiple good GPT-4 level competitors - Claude 3.5 Sonnet, Gemini 1.5 Pro - as well as very capable cheaper LLMs, such as Claude 3 Haiku, Gemini 1.5 Flash, etc.

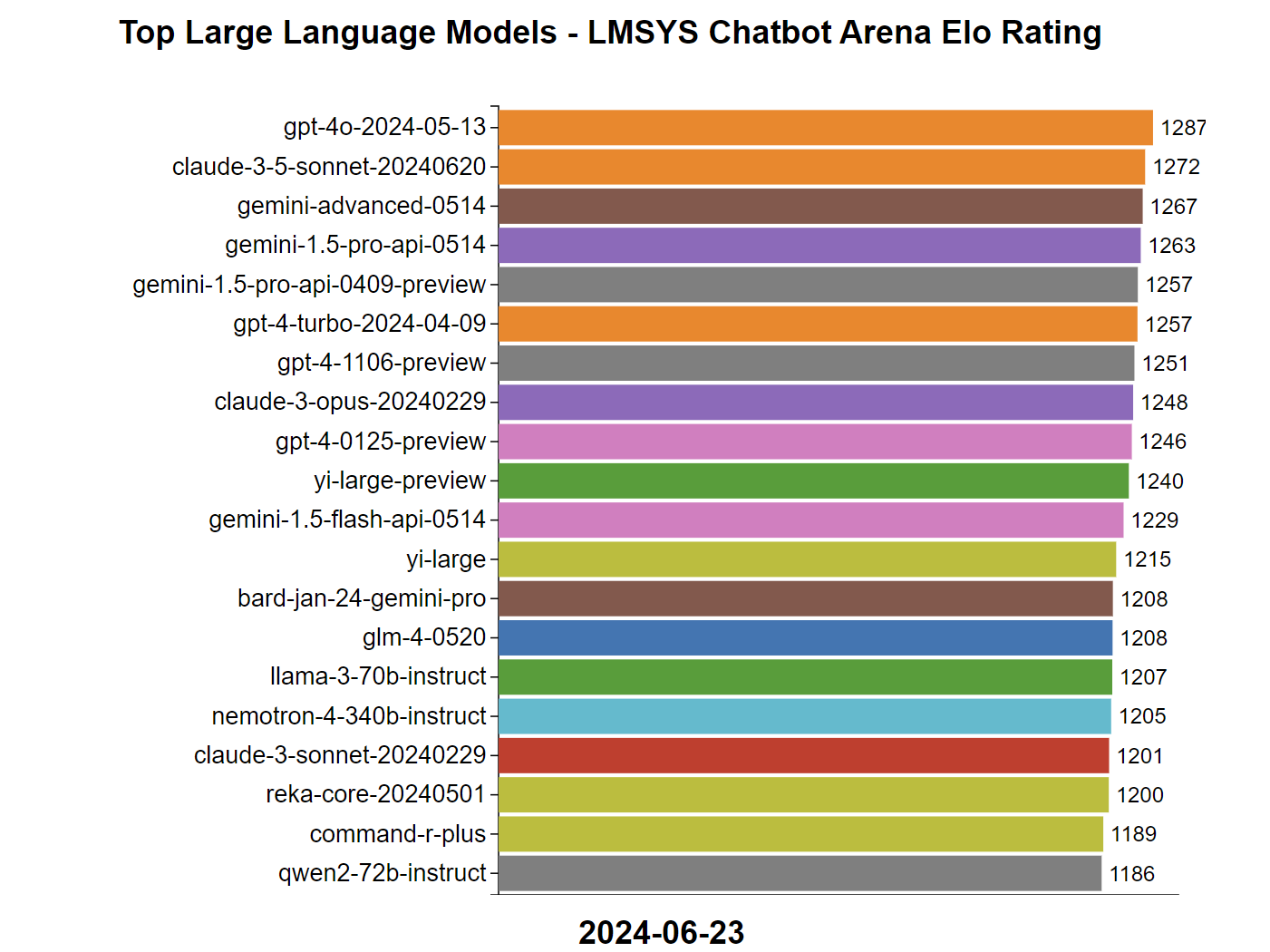

Our score for vibes is lmsys Chatbot arena, and Simon shared a neat animated tool showing the past year’s progress in Chatbot Arena scores that he created.

The top AI models by Lmsys Arena Elo ratings are GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro. Best news is you can use these models without paid subscriptions. Once frontier but now a laggard, GPT-3.5 is ranked 65. Stop using it.

“LLMs reward power users.” You need to spend time with LLMs to gain the most out of them.

“The AI Trust Issue” Consumers and copyright owners alike are rebelling at AI models getting trained on their data. But Simon shared a statement from Anthropic; it turns out the best AI model out there didn’t need customer data.

Other points Simon made:

“We haven’t solved prompt injection”

“LLMs are gullible … it’s a strength and a weakness”

“We need to establish patterns for responsible AI use.”

He also wants to popularize the term “AI Slop” - AI-generated content that’s not verified and checked - make it anathema, like spam. “It’s really about taking accountability, and LLMs can never do that.” AI content can indeed degrade our experience when consuming content, be it blogs, YouTube videos, social media and more.

Best AI Practices: “What We Learned from a Year of Building with LLMs”

This keynote was the title of a collaborative blog post that started as a group chat, then became a white paper that went viral. The white paper is in three parts on OReilly website, here:

The keynote was presented by the six co-authors in three parts, and like the white paper, broken into strategic, operational, and tactical advice. It’s a good roadmap of best practices and pitfalls, with many nuggets of wisdom for AI builders. Highlights:

Strategic: Building AI apps that win

The model is not the moat. A model with high MMLU is not a product.

Build in your zone of genius. Leverage product expertise and find your niche. Don’t build what AI models are providing already.

Iterate to something great. Iteration speed is a competitive advantage, apply continuous improvement principles.

Invent the future by living it. Build things that generalize to smarter, faster models.

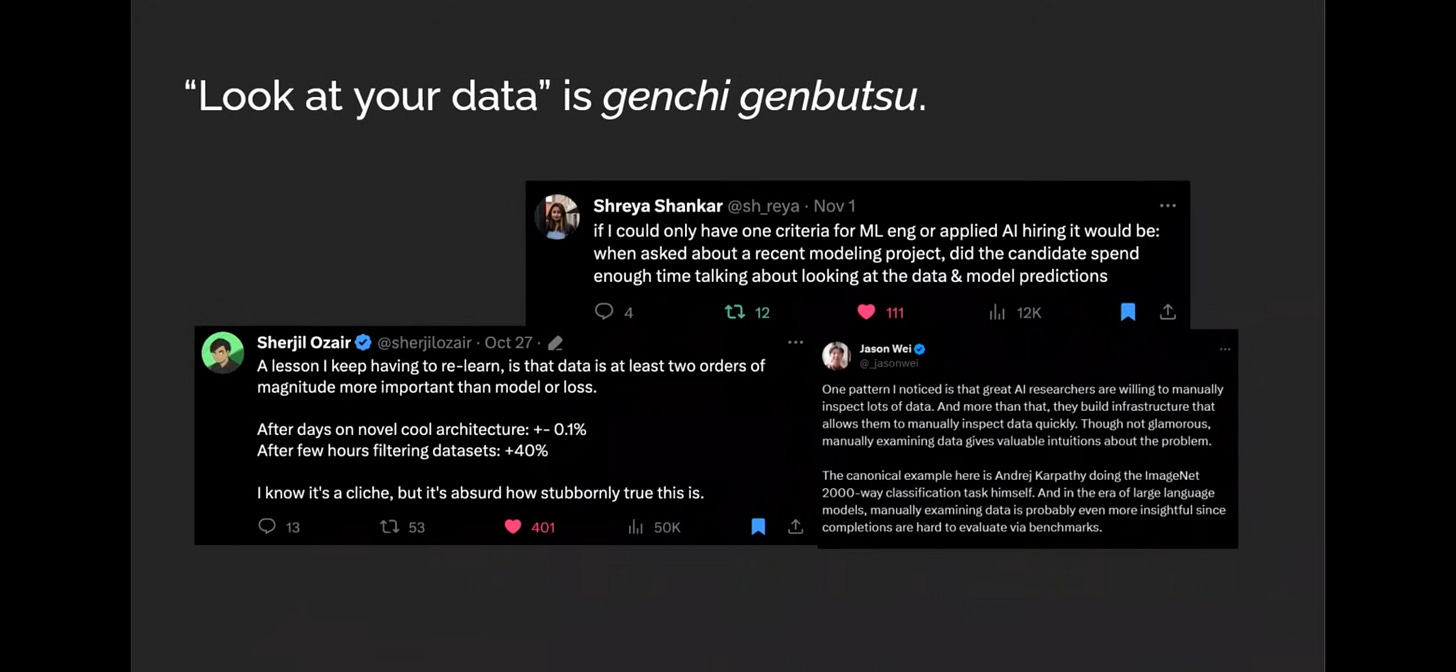

Look at your data. Evals are a differentiator. Without evals, we can’t make progress.

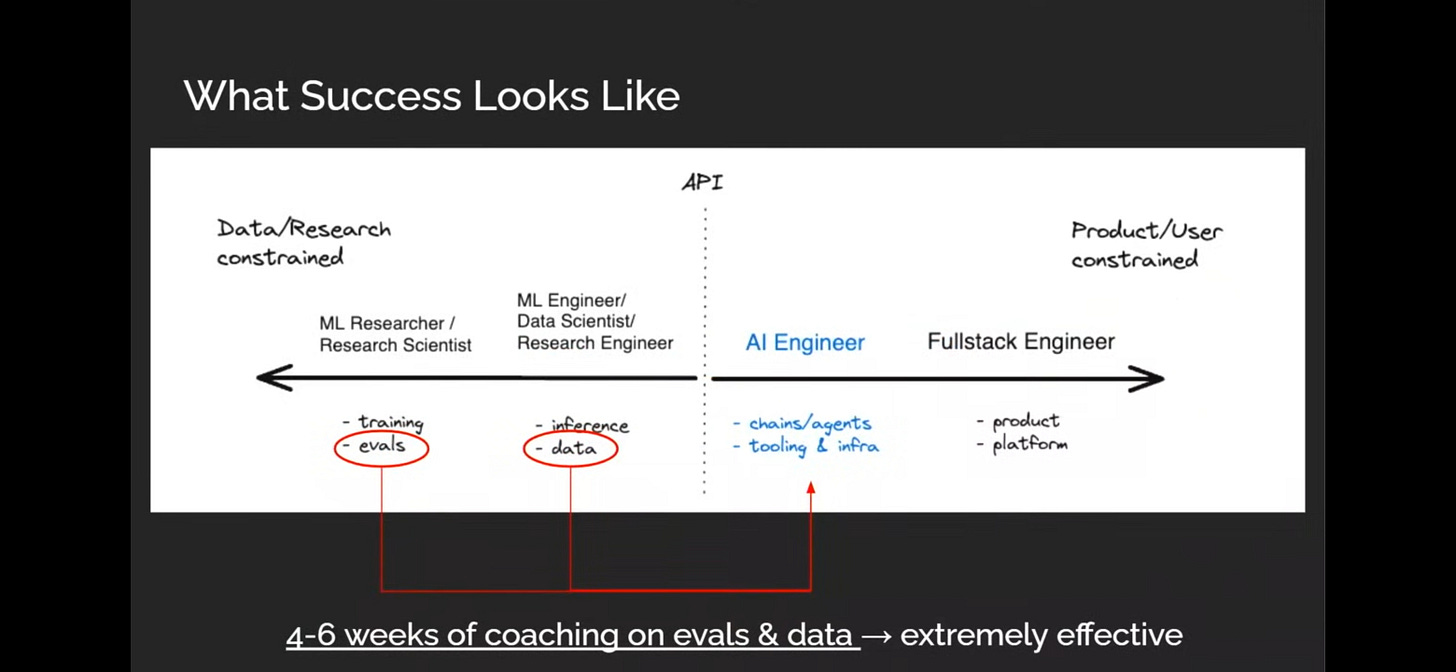

Operational: Building the AI team

Talent is the most important lever, but AI Engineer is “over-scoped and under-specified.”

Don’t hire ML engineers too early. Instead, take 4-6 weeks to train up AI engineers on evals and data.

Learn data and evals. Evals plus data literacy is the highest leverage skill in AI. make it the core skill-set of AI engineer.

AI engineer is aspirational, keep learning.

Tactical - How to implement data, evals, guardrails, and iteration

When to look at data: Regularly, even daily.

LLM responses deserve human eyes.

Consider model-based evals, with LLM-as-a-judge. There are pros and cons, depending on situation. Classifier or fine-tuned models are more efficient than LLM, but using LLMs is more flexible and as easy as writing a prompt.

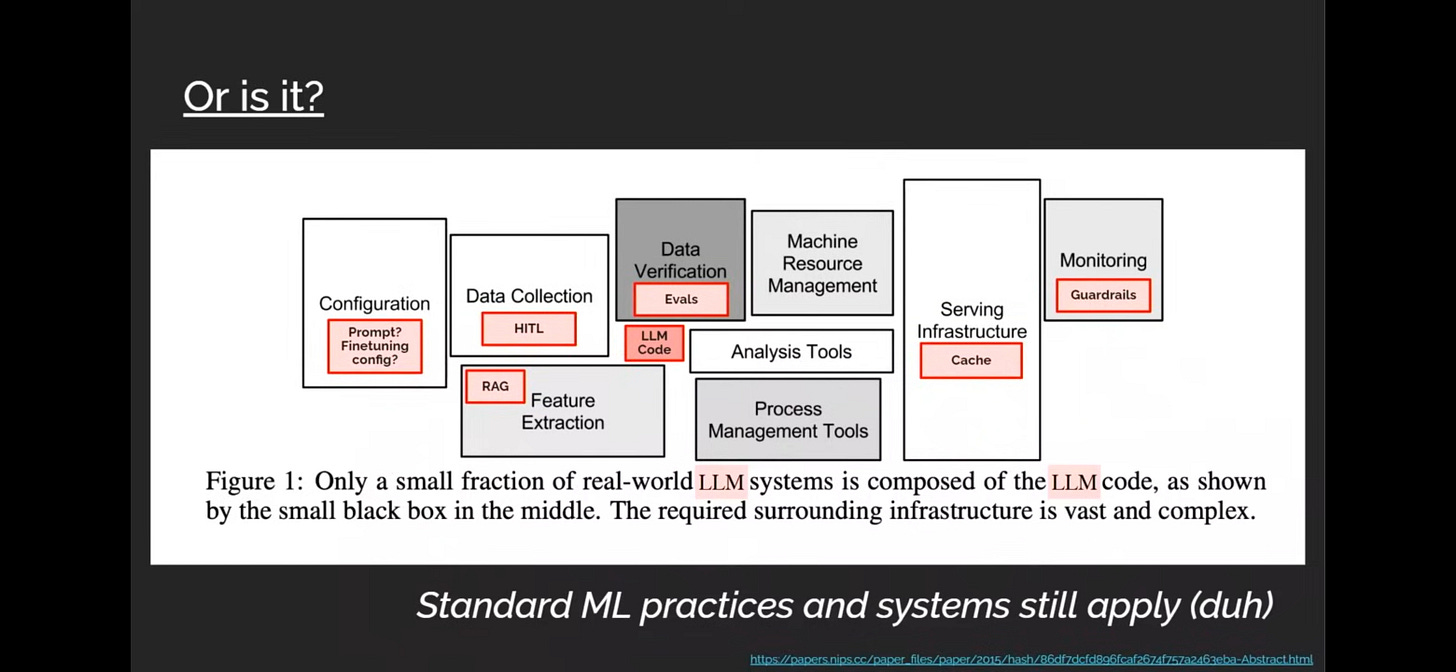

Their final takeaway is that the AI model is a small part of the overall AI application. This concept and the chart they shared is familiar to those who have worked in data science and machine learning (ML). Standard ML practices and concerns apply: Data, process management, infrastructure, monitoring, configurations and features are all important.

Running Models Locally: Llamafile

“We have an entire planet of CPUs out there…” Stephen Hood, Mozilla

Mozilla Llamafile: Llamafile was developed to “democratize AI” access by enabling AI users to distribute and run LLMs with a single file. They do that by combining llama.cpp with Cosmopolitan Libc into a framework that turns LLM weights into a single-file executable (called a "llamafile") that runs locally on most computers.

Running AI models on a CPU is much slower than GPU, but Llamafile team was able to use optimization techniques to accelerate AI model runs on a CPU by 30 to 5x or more. They got 4x improvement on Intel i9, and 8x speedup on AMD Ryzen Threadripper CPUs, achieving 2400 tokens/sec on AI models like Mistral 7B.

This gives Llamafile the ability to run local AI models with decent performance across many computers, even a Raspberry Pi.

Mozilla also has builders accelerator, for those who want to get support for contributing to open source.

Conclusions

The AI Engineer’s World’s Fair showed that the generative AI space is maturing. More products and vendors versus open problems, more “best practices” versus simple winning hacks, and more complaints about reliability and production-readiness versus hype over prototypes.

That said, it is clearly still early innings for the AI revolution. Much more to come.

What I shared above just scratched the surface of what was covered at AIEWF, and I’ll post more in follow-ups.

AI coding currently does not have any particularly good products. GitHub Copilot is particularly difficult to use. I have been using Claude for programming for over a year. I estimate that in the future, Claude will lead GPT by a significant margin in the field of programming.