AI Keeps Eating the Software World

CodeQwen 1.5 7B, Codestral 22B, & DeepSeek Coder 33B are great coding models to run locally. OpenCodeInterpreter shows the power of iteration. Top AI models for coding: GPT-4o, Claude 3 Opus, Gemini 1.5 Pro.

Last year, we wrote “AI Is Eating the Software World,” where we reviewed the leading AI code assistants, namely Copilot and ChatGPT/GPT-4 itself combined with Code Interpreter, which OpenAI now calls data analysis.

At the time, GPT-4 was the only game in town for decent code generation with AI.

Just as GPT-4 remained the leading AI model overall as it evolved into GPT-4 Turbo and GPT-4o, improving along the way, it has remained one of the most capable AI coding models. But it’s no longer alone. Competitors have released many high-quality alternatives, both coding-specific AI models and frontier AI models that excel at coding tasks.

Two frontier AI models besides GPT-4o stand out for coding: Claude 3 Opus and Gemini 1.5 Pro.

Claude Opus, the flagship model in Anthropic’s Claude 3 model family, scores an impressive 84.9% on the HumanEval benchmark, beating OpenAI’s original GPT-4 score of 67%. It’s a strong model for both nuanced content creation and code generation.

Google’s Gemini 1.5 Pro, as of May, has improved on coding versus earlier Gemini versions, scoring 84.1% on HumanEval, well above Gemini 1.0 Ultra’s score of 74.4%. With a context window of over 1 million tokens, it leads in long-context understanding, giving it an edge in problem-solving for large code bases.

Code Llama and the Rise of Open AI Coding Models

The options and alternatives in coding-specific AI models continue to get better as more effort is put into code-specific pre-training. Coding-specific AI models are pre-trained on text like other LLMs, but most of the text in their pre-training datasets is source code. The more source code an LLM’s training dataset contains, the more coding capability it tends to have.

The introduction of Code Llama in late August 2023 changed the game in open AI models for coding. Prior to the release of Code Llama, there were some interesting coding AI models of note, but they were far from competitive with GPT-4.

The Code Llama 34B models greatly outperformed prior open AI code models: Code Llama 34B achieved 48% on HumanEval, and the Python-specialized Code Llama - Python 34B achieved 53%.

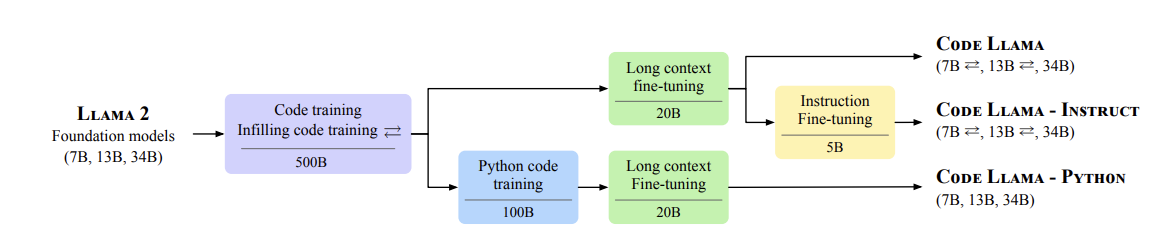

How did Code Llama do it? Meta shared the technical details in the Code Llama paper. They took the Llama 2 model as a baseline and trained it on an additional 500B tokens of code-heavy data, using an infilling objective for the 7B and 13B models. To make Code Llama (7B, 13B, and 34B), they then trained on 20B more tokens for long-context fine-tuning with a 16K context window, and used RoPE scaling to extend the usable context to 100,000 tokens. Plus:

They added another 100B tokens of training for the Python model version.

They added 5B tokens of instruction fine-tuning for the instruct model.
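The RoPE scaling step is easier to see with numbers. Rotary position embeddings rotate each pair of dimensions in a query or key vector by an angle proportional to the token position, with a frequency set by a base constant. The Code Llama paper describes raising that base from Llama 2’s 10,000 to 1,000,000; a larger base slows the rotations, so positions far beyond the training length still map to distinguishable angles. The sketch below assumes a head dimension of 128 for illustration, and the function names are our own.

```python
import math

def rope_inverse_frequencies(head_dim: int, base: float) -> list[float]:
    """Per-pair rotation frequencies for rotary position embeddings (RoPE).

    Dimension pair i rotates by position * base ** (-2i / head_dim) radians.
    """
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

def longest_wavelength(head_dim: int, base: float) -> float:
    """Number of tokens before the slowest-rotating pair completes a full turn."""
    slowest = rope_inverse_frequencies(head_dim, base)[-1]
    return 2 * math.pi / slowest

# Compare Llama 2's base with the larger base used for long-context Code Llama.
for base in (10_000, 1_000_000):
    span = longest_wavelength(128, base)
    print(f"base={base:>9,}: slowest pair wraps after ~{span:,.0f} tokens")
```

Raising the base stretches the slowest wavelength by roughly two orders of magnitude, which is why a model fine-tuned at 16K tokens can still extrapolate to much longer inputs.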

While less capable than GPT-4, the Code Llama models were small and best in class among open AI code models, which made them natural local code assistants; Code Llama 7B and 13B could also perform code completion and infilling.
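Infilling means the model fills in a gap given the code on both sides, which is what an editor needs when you type in the middle of a file. A common way to train and prompt for this is the prefix-suffix-middle (PSM) layout: the prompt holds the prefix and the suffix, each marked by a sentinel, and the model generates the middle. The sketch below only assembles such a prompt. The sentinel strings `<PRE>`, `<SUF>`, and `<MID>` follow Code Llama’s naming, but real models encode them as special tokens with tokenizer-specific spacing, so treat `build_psm_prompt` as a hypothetical helper rather than an exact prompt format.

```python
def build_psm_prompt(prefix: str, suffix: str,
                     pre: str = "<PRE>", suf: str = "<SUF>", mid: str = "<MID>") -> str:
    """Assemble a prefix-suffix-middle (PSM) infilling prompt.

    The model is expected to continue after the `mid` sentinel with the missing
    code and then emit an end-of-infill token. Sentinels here are plain strings
    for illustration; real tokenizers map them to dedicated special tokens.
    """
    return f"{pre}{prefix}{suf}{suffix}{mid}"

# The cursor sits inside the function body; the model should produce the return line.
prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(1, 2))\n"
print(build_psm_prompt(prefix, suffix))
```

A well-trained infilling model would be expected to generate something like `return a + b` for this prompt.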

Code Llama’s impact was amplified when fine-tunes further improved on its scores and capabilities, producing very capable specialized models. One example was Phind-CodeLlama-34B-v2, which achieved a 73.8% HumanEval score.

DeepSeek Coder, CodeQwen 1.5, and Code Llama 70B

In the wake of Code Llama last August, there have been several new open AI coding model releases, each leapfrogging prior releases in capability. The Hugging Face BigCode Models Leaderboard tells the story, with a mix of base (or instruct) coding models and fine-tunes of AI coding models.

DeepSeek Coder, from the Chinese company DeepSeek, is a series of open AI coding models sized at 1.3B, 5.7B, 6.7B, and 33B parameters. Each DeepSeek Coder model was trained on 2T tokens, consisting of 87% code and 13% natural language in both English and Chinese. Their top model is deepseek-coder-33b-instruct, which has a 16K context window and achieves 81% on HumanEval.

CodeQwen 1.5 is a derivative of the Qwen 1.5 model suite developed by Alibaba. CodeQwen 1.5 7B was enhanced by training it on 3 trillion tokens of code and supports a context length of 64K tokens. Its chat version, CodeQwen 1.5 7B Chat, is a high-quality coding model that scores 83.5% on HumanEval, currently state of the art for a 7B instruct AI coding model.

Code Llama 70B Instruct was released in January 2024, five months after the original Code Llama release. It scores 72% on HumanEval. Meta’s April release of Llama 3 surpassed that: Llama 3 70B Instruct scored 78.6% on HumanEval, while Llama 3 8B scored 72.4%.

WizardCoder and the Fine-Tunes

Taking a very good base model and fine-tuning it for specific coding tasks yields an even better AI coding model.

WizardCoder pioneered fine-tuning for coding models by adapting the Evol-Instruct method specifically for coding tasks: it tailors the evolution prompts to the domain of code-related instructions and uses the evolved data to fine-tune base Code LLMs (StarCoder, Code Llama, and DeepSeek Coder), producing fine-tuned coding models.
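Evol-Instruct grows a dataset by repeatedly asking a strong LLM to rewrite each instruction into a harder variant, then collecting the evolved instructions and their answers for fine-tuning. A minimal sketch of one evolution step, assuming we only need to build the meta-prompt sent to that LLM: the heuristics below are our paraphrase of the kinds of code-specific changes WizardCoder describes, not the paper’s exact prompts, and `evolve_instruction` is a hypothetical helper.

```python
import random

# Paraphrased, code-specific evolution heuristics in the spirit of WizardCoder;
# not the exact wording used in the paper.
EVOLUTION_HEURISTICS = [
    "Add new constraints and requirements to the original problem.",
    "Replace a commonly used requirement with a less common, more specific one.",
    "If the problem can be solved in a few logical steps, add more reasoning steps.",
    "Provide a piece of erroneous code as a reference to increase misdirection.",
    "Propose higher time or space complexity requirements.",
]

def evolve_instruction(instruction: str, rng: random.Random) -> str:
    """Build a meta-prompt asking an LLM to rewrite `instruction` into a harder one."""
    heuristic = rng.choice(EVOLUTION_HEURISTICS)
    return (
        "Please increase the difficulty of the given programming question a bit.\n"
        "You can increase the difficulty using, but not limited to, this method:\n"
        f"{heuristic}\n\n"
        f"Question:\n{instruction}"
    )

rng = random.Random(0)  # fixed seed so the chosen heuristic is reproducible
seed = "Write a function that returns the sum of a list of integers."
print(evolve_instruction(seed, rng))
```

Running several rounds, feeding each evolved question back in as the next seed, yields progressively harder instructions; the LLM’s answers to them become the fine-tuning targets.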

Others have followed their lead in making solid fine-tunes of the best models; CodeFuse is one example.

At the top of the BigCode Models Leaderboard is Nxcode-CQ-7B-orpo, a fine-tune of CodeQwen1.5-7B. It was fine-tuned with ORPO (Odds Ratio Preference Optimization, introduced in the paper “ORPO: Monolithic Preference Optimization without Reference Model”) on 100k samples of high-quality ranking data. Its benchmark results are impressive: 86.6% on HumanEval, 83.5% on HumanEval+, 82.3% on MBPP, and 70.4% on MBPP+.
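ORPO is “monolithic” because it folds preference learning into ordinary supervised fine-tuning in a single loss, and it needs no frozen reference model. As we read the ORPO paper, the loss for one pair is the negative log-likelihood of the preferred (chosen) response plus λ times a penalty that pushes the odds of the chosen response above the odds of the rejected one. The sketch below computes that loss from length-normalized log-likelihoods for a single pair; λ = 0.1 and the function names are illustrative assumptions, and a real trainer would compute the log-likelihoods from model outputs and average over a batch.

```python
import math

def log_odds(avg_logp: float) -> float:
    """log(p / (1 - p)) for p = exp(avg_logp); requires avg_logp < 0."""
    return avg_logp - math.log1p(-math.exp(avg_logp))

def orpo_loss(avg_logp_chosen: float, avg_logp_rejected: float, lam: float = 0.1) -> float:
    """ORPO objective for one preference pair.

    avg_logp_* are length-normalized log-likelihoods of the chosen and rejected
    responses under the model being trained; no reference model is involved.
    """
    sft_loss = -avg_logp_chosen  # plain negative log-likelihood of the chosen answer
    log_odds_ratio = log_odds(avg_logp_chosen) - log_odds(avg_logp_rejected)
    or_loss = math.log1p(math.exp(-log_odds_ratio))  # equals -log(sigmoid(log_odds_ratio))
    return sft_loss + lam * or_loss

# The loss is lower when the model already favors the chosen response.
print(orpo_loss(-0.5, -2.0), orpo_loss(-2.0, -0.5))
```

When the chosen and rejected responses are equally likely, the odds-ratio term equals log 2; it shrinks toward zero as the model increasingly prefers the chosen response.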

Codestral

The latest entrant for AI coding models is Mistral AI’s Codestral-22B, a 22B parameter open-weight AI model for code generation with a 32K context window. Codestral was trained on 80+ programming languages, and achieves 81% on HumanEval for Python, close to state-of-the-art for an open coding LLM.

Caveat: Codestral is not fully open source; it is licensed under the new Mistral AI Non-Production License, which means you can use it for free for research and testing purposes only.

Iteration Is All You Need

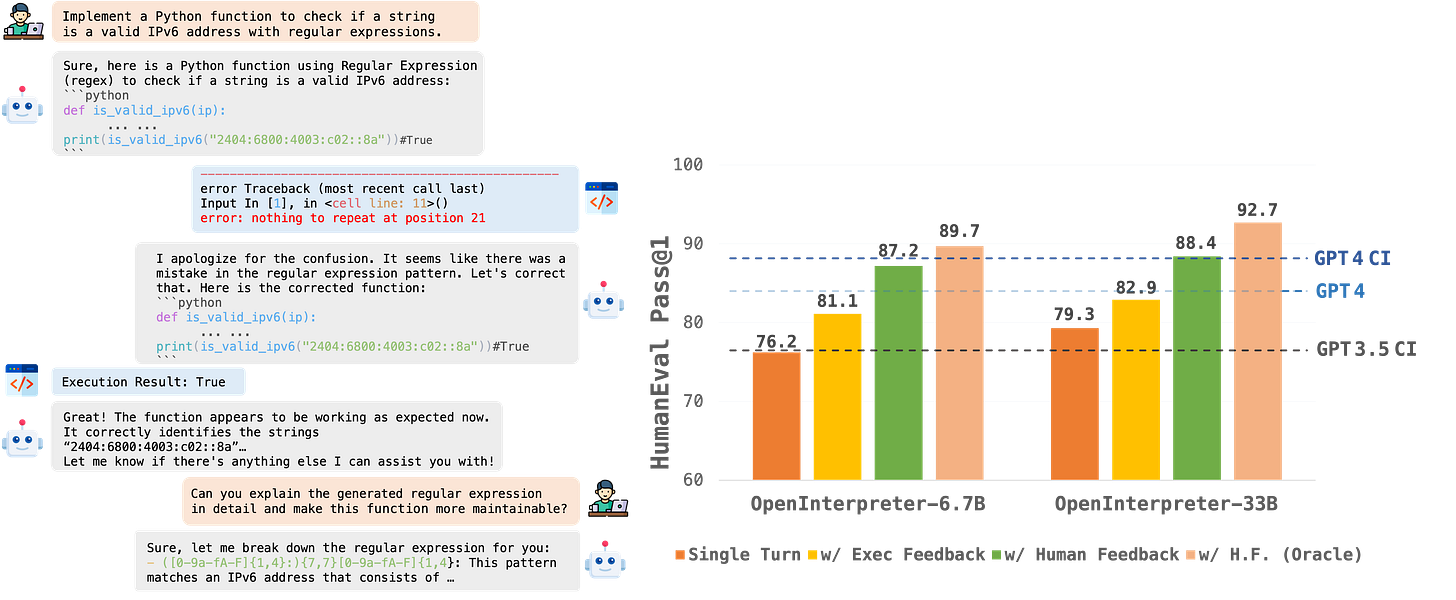

OpenCodeInterpreter-DS-33B is a fine-tune of DeepSeek Coder 33B that adds a special wrinkle to getting better at solving code problems. It improves on code generation by integrating execution and iterative refinement, as the authors show in the paper “OpenCodeInterpreter: Integrating Code Generation with Execution and Refinement.”

The authors showed that going beyond a single turn, by using synthesized feedback (from GPT-4) and iterating on the generated code, improves responses significantly. This approach scored 92.7% on HumanEval when the model could review its code and fix errors iteratively.
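The core loop is simple to sketch: generate code, execute it against tests, and feed any error back to the model for another attempt. The version below assumes a hypothetical `generate(task, feedback)` callable standing in for a model, and uses only the raised exception as feedback; OpenCodeInterpreter additionally trains on multi-turn execution traces and uses GPT-4-synthesized feedback, which this sketch does not reproduce. In practice, generated code should run in a sandbox, since `exec` runs it with full privileges.

```python
from typing import Callable, Optional

def run_tests(code: str, tests: str) -> Optional[str]:
    """Execute candidate code plus assertions; return an error message, or None if all pass."""
    namespace: dict = {}
    try:
        exec(code, namespace)   # define the candidate solution
        exec(tests, namespace)  # run assertions against it
    except Exception as exc:    # any failure becomes feedback for the next turn
        return f"{type(exc).__name__}: {exc}"
    return None

def refine(task: str, tests: str, generate: Callable[[str, Optional[str]], str],
           max_turns: int = 3) -> tuple[str, bool, int]:
    """Generate, execute, and retry with feedback; return (code, passed, turns_used)."""
    feedback: Optional[str] = None
    code = ""
    for turn in range(1, max_turns + 1):
        code = generate(task, feedback)
        feedback = run_tests(code, tests)
        if feedback is None:
            return code, True, turn
    return code, False, max_turns

# Hypothetical stand-in for a model call: the first attempt is buggy, the second is fixed.
_attempts = iter([
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
])

def fake_generate(task: str, feedback: Optional[str]) -> str:
    return next(_attempts)

code, passed, turns = refine("Write add(a, b).", "assert add(2, 3) == 5", fake_generate)
print(passed, turns)
```

A single-turn evaluation would have scored this task as a failure; the loop recovers on the second turn.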

Benchmarks - HumanEval and Beyond

HumanEval has become a standard benchmark for judging AI coding model performance, but, as with all benchmarks, HumanEval is flawed. Data contamination (benchmark problems leaking into training data) may inflate some AI models’ scores, and some AI models overfit to do well on HumanEval but underperform on real-world tasks.
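For context on what the percentages mean: HumanEval checks generated Python functions against unit tests, and scores are typically reported as pass@1, the probability that a single sample passes. When n samples are drawn per problem and c of them pass, the unbiased estimator introduced alongside HumanEval is pass@k = 1 - C(n-c, k) / C(n, k), averaged over problems. A minimal sketch, with made-up sample counts for illustration:

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them passed the tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)

# Hypothetical results for three problems, 10 samples each: (n, c).
results = [(10, 10), (10, 3), (10, 0)]
score = sum(pass_at_k(n, c, 1) for n, c in results) / len(results)
print(f"pass@1 = {score:.1%}")
```

For k = 1 the estimator reduces to c / n per problem, so the benchmark score is simply the average fraction of passing samples.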

One effort to close this gap is EvalPlus, which built HumanEval+ and MBPP+ from the original HumanEval and MBPP (Mostly Basic Python Problems) benchmarks:

HumanEval+: 80x more tests than the original HumanEval.

MBPP+: 35x more tests than the original MBPP.
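Why do more tests matter? A solution can pass a handful of hand-written tests while still being wrong. The sketch below shows a plausible-looking but buggy `is_prime` that passes a small base suite, then fails when checked against a reference implementation on many more inputs. This is only an illustration of the idea behind HumanEval+ and MBPP+; EvalPlus generates its extra test inputs automatically rather than with a simple sweep like this one.

```python
def is_prime(n: int) -> bool:
    """Buggy candidate: the loop skips the last candidate divisor, so 4, 9, 15... slip through."""
    if n < 2:
        return False
    for d in range(2, int(n ** 0.5)):  # bug: should be int(n ** 0.5) + 1
        if n % d == 0:
            return False
    return True

def reference_is_prime(n: int) -> bool:
    """Ground-truth implementation used to judge extra inputs."""
    return n >= 2 and all(n % d for d in range(2, int(n ** 0.5) + 1))

# A small base suite, like a sparse original benchmark might have.
base_tests = [(1, False), (2, True), (3, True), (7, True), (10, False)]
base_pass = all(is_prime(x) == want for x, want in base_tests)

# An extended suite: many more inputs checked against the reference.
extended_failures = [x for x in range(200) if is_prime(x) != reference_is_prime(x)]
print(base_pass, extended_failures[:5])
```

The candidate passes every base test, yet the extended sweep exposes it; scores on HumanEval+ and MBPP+ drop for exactly this kind of solution.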

A more serious flaw with HumanEval is that programming challenges do not fully capture software development, where requirements, design, testing, and more play a role. As the best AI models reach 90% and beyond on HumanEval, they saturate the benchmark without telling us whether these models can solve real problems. We need benchmarks that capture harder, more complex real-world software engineering tasks.

This is where SWE-bench comes in: it’s a benchmark built from real software issues collected from GitHub. That makes it suitable for evaluating AI coding agents and AI coding applications that perform more complete software engineering tasks beyond ‘fill in the blank’ code completion. We expect to see more SWE-bench scores reported when evaluating future AI coding assistants.
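What counts as solving a SWE-bench task is stricter than passing a single function test. Each task pairs a GitHub issue with tests from the real fix: tests that failed before the fix and should now pass (FAIL_TO_PASS), and tests that already passed and must not break (PASS_TO_PASS). A simplified sketch of that resolution criterion, assuming the test outcomes have already been collected; the real harness applies the model’s patch to the repository in an isolated environment and parses the test logs, and the test names below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TestRun:
    fail_to_pass: dict[str, bool]  # tests the issue fix should make pass
    pass_to_pass: dict[str, bool]  # tests that passed before and must keep passing

def is_resolved(run: TestRun) -> bool:
    """Resolved only if every targeted test now passes and nothing regressed."""
    return all(run.fail_to_pass.values()) and all(run.pass_to_pass.values())

fixed = TestRun(fail_to_pass={"test_issue_repro": True},
                pass_to_pass={"test_existing_behavior": True})
regressed = TestRun(fail_to_pass={"test_issue_repro": True},
                    pass_to_pass={"test_existing_behavior": False})
print(is_resolved(fixed), is_resolved(regressed))
```

The second case fixes the issue but breaks existing behavior, so it does not count; this mirrors how a human reviewer would reject such a patch.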

Conclusion

A year ago, there was only one choice for a high-quality AI coding model: GPT-4. Now, there are a dozen AI coding models that outperform the original GPT-4 on coding benchmarks, including GPT-4o itself and competing frontier AI models like Claude 3 Opus and Gemini 1.5 Pro. Lower-tier AI models like Claude 3 Haiku and Gemini 1.5 Flash also do quite well.

Beyond the large proprietary AI models that perform well, smaller open coding-specific LLMs like CodeQwen 1.5 7B, Codestral 22B, and DeepSeek Coder 33B achieve high-quality performance on coding tasks, and thanks to their smaller size and open weights, they can be run locally. They can be integrated as a copilot in VS Code or other software development environments.

Your mileage may vary depending on your machine configuration. A 4-bit quantized DeepSeek Coder 33B takes up about 20GB, so it can fit on a GPU with 24GB of memory; CodeQwen 1.5 7B can fit on an 8GB GPU or run on a MacBook with an M2 or M3 chip.
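A quick back-of-the-envelope check helps when deciding what fits on your hardware: the raw weights need roughly parameters × bits per weight / 8 bytes. The sketch below uses rounded parameter counts as assumptions. Actual memory use runs higher than this estimate, because quantized formats store scaling factors, some layers are often kept at higher precision, and the KV cache grows with context length; that overhead is how a 16.5GB weight estimate for a 33B model becomes the roughly 20GB figure above.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the raw weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8

# Rounded parameter counts; real checkpoints differ slightly.
models = [("DeepSeek Coder 33B", 33), ("Codestral 22B", 22), ("CodeQwen 1.5 7B", 7)]
for name, params in models:
    print(f"{name}: ~{weight_memory_gb(params, 4):.1f} GB of 4-bit weights, before overhead")
```

The same function shows why quantization matters: at 16 bits, a 7B model alone needs about 14GB for its weights, too much for an 8GB GPU.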

The next stage is harnessing these coding models into AI agent frameworks where the models iterate to solve harder tasks. That’s a topic for another day.