AI Model Innovations - Better, Faster, Cheaper

QwQ-32B brings AI reasoning to local use. Mercury diffusion LLM makes inference 10x faster. Chain-of-Draft speeds reasoning with terse thoughts.

Introduction

AI models continue to improve rapidly. Two recent model releases, the QwQ-32B reasoning model and the Mercury diffusion LLM, and a prompting innovation called Chain of Draft are pushing the envelope to make AI model inference and reasoning faster, better, and cheaper:

QwQ-32B: Embracing the Power of Reinforcement Learning

Mercury: A commercial-scale diffusion LLM

Chain of Draft: Thinking Faster by Writing Less

QwQ-32B: Embracing the Power of Reinforcement Learning

Just last week, Qwen released QwQ-Max-Preview, built on Qwen-2.5-Max, and promised open-source releases of smaller AI reasoning models. They quickly kept their promise, releasing QwQ-32B this week and announcing it in a blog post titled “QwQ-32B: Embracing the Power of Reinforcement Learning.”

QwQ-32B starts from the (quite excellent) Qwen-2.5-32B base model, which is then fine-tuned with RL for reasoning. The Qwen team explained the training process used to enhance reasoning, which follows the same outcome-reward RL recipe used to make DeepSeek R1:

We began with a cold-start checkpoint and implemented a reinforcement learning (RL) scaling approach driven by outcome-based rewards. In the initial stage, we scale RL specifically for math and coding tasks. Rather than relying on traditional reward models, we utilized an accuracy verifier for math problems to ensure the correctness of final solutions and a code execution server to assess whether the generated codes successfully pass predefined test cases. … After the first stage, we add another stage of RL for general capabilities.
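To make the idea of outcome-based rewards concrete, here is a minimal sketch of the two reward signals the quote describes: an answer check for math and a test-case check for code. This is an illustration only, not the Qwen team's implementation. The function names, the string normalization, and the use of in-process `exec` are all assumptions made for the example; a real system would run generated code on an isolated execution server.

```python
def math_reward(model_answer: str, reference_answer: str) -> float:
    """Outcome reward for math: 1.0 if the final answer matches, else 0.0.

    The normalization here (strip whitespace and a trailing period) is a
    simplifying assumption; a real verifier would compare answers more robustly.
    """
    def normalize(text: str) -> str:
        return text.strip().rstrip(".").replace(" ", "")

    return 1.0 if normalize(model_answer) == normalize(reference_answer) else 0.0


def code_reward(generated_code: str, test_code: str) -> float:
    """Outcome reward for code: 1.0 if the code passes its tests, else 0.0.

    WARNING: exec() runs untrusted code in this process. It is used here only
    to keep the sketch self-contained.
    """
    namespace: dict = {}
    try:
        exec(generated_code, namespace)  # define the candidate solution
        exec(test_code, namespace)       # run the predefined test cases
    except Exception:
        return 0.0  # any error or failed assertion means no reward
    return 1.0


if __name__ == "__main__":
    print(math_reward(" 42. ", "42"))
    print(code_reward("def add(a, b):\n    return a + b", "assert add(2, 3) == 5"))
    print(code_reward("def add(a, b):\n    return a - b", "assert add(2, 3) == 5"))
```

The key property is that the reward depends only on the outcome (right answer, passing tests), not on a learned reward model's opinion of the reasoning.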

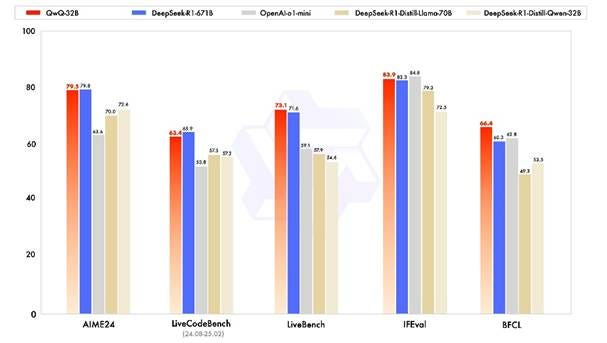

How good is it? The 32B model holds its own with DeepSeek R1 and outperforms o1-mini on math and coding benchmarks. While it’s not at o3-mini performance, so not SOTA, it is remarkable that QwQ-32B matches much larger AI reasoning models.

QwQ-32B is available as an open-weights model on Hugging Face. It’s the best AI reasoning model you can run locally, so it’s worth downloading if you have enough GPU memory to accommodate it (about 24GB, which in practice means running a quantized version). You can also try it out on Qwen Chat.
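A quick back-of-the-envelope calculation shows where the 24GB figure comes from. The sketch below estimates the memory needed for the weights alone at different precisions, assuming a nominal 32 billion parameters; the helper name is made up for this example, and the estimate ignores the KV cache and activations, which add overhead on top.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NOMINAL_PARAMS = 32e9  # assumption: treat "32B" as exactly 32 billion parameters

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gb(NOMINAL_PARAMS, bits):.0f} GB")
```

At 16-bit precision the weights alone come to roughly 64 GB, so fitting the model on a single 24GB GPU implies something close to 4-bit quantization (roughly 16 GB of weights), leaving the remainder for context.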

Mercury: A commercial-scale diffusion LLM

A dLLM (diffusion LLM) is a drop-in replacement for a typical autoregressive LLM, supporting all its use cases, including RAG, tool use, and agentic workflows.

Inception Labs recently announced Mercury, a diffusion-based LLM. The model departs from traditional autoregressive LLMs and promises a significant leap in speed and computational efficiency.

Mercury's distinct advantage is parallel generation via diffusion. Unlike autoregressive models, which predict one token at a time, diffusion models generate text through a parallelized “coarse-to-fine” denoising process.
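The difference in step count can be illustrated with a toy sketch. This is emphatically not Mercury's algorithm, whose details Inception Labs has not published here: there is no neural network, and both functions simply copy tokens from a known target. The function names and the random unmasking order are assumptions chosen for illustration. The only point is the control flow: one sequential step per token versus a fixed number of refinement steps that each fill in many positions at once.

```python
import random

MASK = "_"  # placeholder for a position that has not been generated yet


def autoregressive_decode(target: list[str]) -> tuple[list[str], int]:
    """Emit tokens left to right, one sequential step per token."""
    output: list[str] = []
    steps = 0
    for token in target:  # each iteration stands in for one model call
        output.append(token)
        steps += 1
    return output, steps


def parallel_unmask_decode(
    target: list[str], num_steps: int, seed: int = 0
) -> tuple[list[str], int]:
    """Start fully masked and fill in a batch of positions on every step."""
    rng = random.Random(seed)
    output = [MASK] * len(target)
    masked = list(range(len(target)))
    rng.shuffle(masked)  # toy assumption: positions are revealed in random order
    steps = 0
    for step in range(num_steps):
        if not masked:
            break
        remaining_steps = num_steps - step
        batch = -(-len(masked) // remaining_steps)  # ceiling division
        for pos in masked[:batch]:  # all of these are filled in the same step
            output[pos] = target[pos]
        masked = masked[batch:]
        steps += 1
    return output, steps


if __name__ == "__main__":
    tokens = "the quick brown fox jumps over the lazy dog".split()
    _, ar_steps = autoregressive_decode(tokens)
    _, par_steps = parallel_unmask_decode(tokens, num_steps=3)
    print(f"autoregressive steps: {ar_steps}, parallel steps: {par_steps}")
```

In a real diffusion LLM, each step would be a model forward pass that predicts all positions and may revise earlier guesses, so the speed-up depends on how few denoising steps are needed for good quality.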

Diffusion models are not new to generative AI, having gained prominence in image generation for their ability to produce high-fidelity outputs. However, the sequential nature of language has thus far favored autoregressive one-token-at-a-time approaches.

Inception Labs, founded by three professors, pioneered diffusion’s application to language, commercializing academic ideas on this topic. One co-founder co-wrote the 2023 paper “Discrete Diffusion Modeling by Estimating the Ratios of the Data Distribution,” which improves diffusion for natural language modeling.

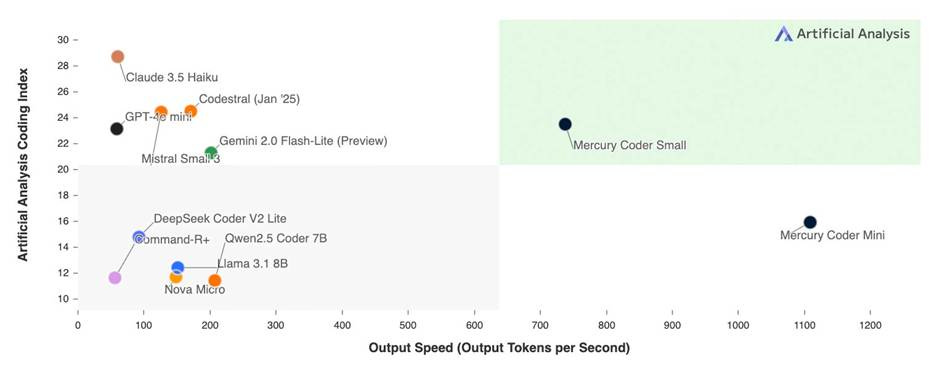

The shift to a diffusion-based architecture translates to substantial speed gains: Inception Labs reports inference up to 10 times faster than current state-of-the-art LLMs. They report little about benchmarks or model size, which makes the model's overall efficiency hard to judge, but the speed-up claim is easy to check for yourself: you can try the LLM on their hosted chatbot.

Mercury Coder is a special-purpose LLM for coding assistance. If such AI model acceleration doesn’t require tradeoffs in output quality, it presents a huge benefit, enabling lightning-fast code generation for developers and other AI workloads. However, we need an open-source AI model or clearer comparison benchmarks to verify actual efficiency gains.

Chain of Draft: Thinking Faster by Writing Less

The paper “Chain of Draft: Thinking Faster by Writing Less” presents a novel prompting strategy, Chain of Draft (CoD), designed to make LLMs more efficient at complex reasoning tasks. Developed by AI researchers from Zoom, CoD is inspired by the human habit of drafting concise intermediate thoughts, in contrast with the more verbose Chain-of-Thought (CoT) prompting method.

In CoD, the model is asked to think step by step but limit each reasoning step to five words at most. Since this is a prompting technique, it doesn’t require LLM retraining or fine-tuning. Testing this on Claude 3.5 Sonnet, the authors found that it maintained reasoning accuracy versus chain-of-thought while reducing token usage:
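Because CoD is only a prompt change, it is easy to sketch. The example below builds a CoT-style and a CoD-style prompt and includes a small checker for the five-word limit. The instruction wording is my own paraphrase of the description above, not the paper's exact prompt, and the role/content message format and all function names are assumptions for illustration; adapt them to whatever chat API you use.

```python
# Paraphrased instructions (assumed wording, not quoted from the paper).
COT_INSTRUCTION = "Think step by step to answer the question."
COD_INSTRUCTION = (
    "Think step by step, but keep only a minimal draft for each step, "
    "with five words at most per step."
)


def build_messages(question: str, instruction: str) -> list[dict[str, str]]:
    """Build a chat-style message list in a common role/content layout."""
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": question},
    ]


def steps_within_limit(draft: str, max_words: int = 5) -> bool:
    """Check that every non-empty line of a draft has at most max_words words."""
    steps = [line for line in draft.splitlines() if line.strip()]
    return all(len(step.split()) <= max_words for step in steps)


if __name__ == "__main__":
    question = "Jason had 20 lollipops. He gave some away and has 12 left. How many did he give?"
    for message in build_messages(question, COD_INSTRUCTION):
        print(f"{message['role']}: {message['content']}")
    print(steps_within_limit("20 - x = 12\nx = 8"))
```

Swapping `COD_INSTRUCTION` for `COT_INSTRUCTION` on the same question is a simple way to compare token counts on your own model.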

By reducing verbosity and focusing on critical insights, CoD matches or surpasses CoT in accuracy while using as little as only 7.6% of the tokens, significantly reducing cost and latency across various reasoning tasks.
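To see what the best-case 7.6% figure could mean for cost, here is a small arithmetic sketch. The query volume, the tokens per query, and the price per million output tokens are all hypothetical numbers chosen for illustration; only the 7.6% ratio comes from the paper's quoted claim, and it is the best case ("as little as"), not a typical result.

```python
BEST_CASE_RATIO = 0.076  # CoD tokens as a fraction of CoT tokens (paper's best case)


def reasoning_cost(tokens: float, price_per_million_tokens: float) -> float:
    """Cost in dollars of generating the given number of tokens."""
    return tokens * price_per_million_tokens / 1e6


# Hypothetical workload: 1 million queries, 200 reasoning tokens each under CoT.
cot_tokens = 1_000_000 * 200
cod_tokens = cot_tokens * BEST_CASE_RATIO

PRICE = 10.0  # hypothetical price in dollars per million output tokens

print(f"CoT cost: ${reasoning_cost(cot_tokens, PRICE):,.0f}")
print(f"CoD cost: ${reasoning_cost(cod_tokens, PRICE):,.0f}")
```

Since output tokens are generated sequentially, fewer tokens also means lower latency, not just a smaller bill.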

CoD encourages LLMs to generate minimalistic, yet informative, intermediate reasoning outputs, focusing on essential insights rather than detailed elaborations. I confirmed this simple prompting trick works on a local AI model, Phi-4.

AI reasoning models don’t need to be prompted for their chain-of-thought, but this result indicates that terse reasoning traces can be as effective as longer chains, which suggests reasoning models could be trained for reasoning efficiency as well as accuracy.

Conclusion

These three innovations are quite distinct but share some common trends. AI models are rapidly getting better, faster, and cheaper. Their efficiency still has a lot of headroom; we can get more intelligence out of smaller models and fewer generated tokens. Finally, innovation continues to come from many places, not just the major AI labs.