AI Research Review 25.01.31 - New Models

VideoLLaMA 3, Janus-Pro, Baichuan-Omni-1.5, Qwen2.5 VL, Qwen2.5-1M, Qwen2.5-MAX, Bespoke-Stratos

Introduction

AI activity has picked up in the new year, and this week brought many model releases. We cover several that came with technical reports or other technical details, including several multimodal AI models and a reasoning distillation fine-tune:

VideoLLaMA 3: Frontier Multimodal Foundation Models for Image and Video Understanding

Janus-Pro: Unified Multimodal Understanding and Generation with Data and Model Scaling

Baichuan-Omni-1.5: Technical Report

Qwen2.5 VL, Qwen2.5-1M and Qwen2.5-MAX

Bespoke-Stratos: The Unreasonable Effectiveness of Reasoning Distillation

VideoLLaMA 3: Frontier Multimodal Foundation Models for Image and Video Understanding

VideoLLaMA 3 is a new multimodal foundation model from Alibaba researchers that aims to improve both image and video understanding through a vision-centric approach. Presented in VideoLLaMA 3: Frontier Multimodal Foundation Models for Image and Video Understanding, its "vision-centric" design has two parts: a training paradigm centered on high-quality image-text data, and a framework that processes visual inputs efficiently.

VideoLLaMA 3 has four training stages:

1) Vision Encoder Adaptation, which enables the vision encoder to accept images of variable resolutions as input.

2) Vision-Language Alignment, which jointly tunes the vision encoder, projector, and LLM with large-scale image-text data covering multiple types (including scene images, documents, charts) as well as text-only data.

3) Multi-task Fine-tuning, which incorporates image-text SFT data for downstream tasks and video-text data to establish a foundation for video understanding.

4) Video-centric Fine-tuning, which further improves the model's capability in video understanding.

The framework employs Any-resolution Vision Tokenization (AVT) to process images of any size, replacing fixed positional embeddings with rotary position embeddings (RoPE). For videos, it uses a Differential Frame Pruner, which compresses video tokens by dropping content that is nearly identical between consecutive frames. This makes video representations more precise and compact, and cheaper to process.
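
To make the pruning idea concrete, here is a minimal sketch, not the authors' implementation. It assumes each frame is already split into patches, measures the mean absolute difference between a patch and the same patch in the previous frame, and keeps the patch only if that difference exceeds a threshold. The function name, the distance measure, and the threshold value are all illustrative assumptions.

```python
def prune_patches(frames, threshold):
    """Return a keep-mask with one boolean per patch per frame.

    frames: list of frames; each frame is a list of patches; each patch is a
    list of floats (flattened pixel values). Hypothetical layout for this sketch.
    """
    # Every patch of the first frame is kept: there is nothing to compare against.
    keep = [[True] * len(frames[0])]
    for prev, cur in zip(frames, frames[1:]):
        mask = []
        for p_prev, p_cur in zip(prev, cur):
            # Mean absolute difference to the same patch in the previous frame.
            dist = sum(abs(a - b) for a, b in zip(p_prev, p_cur)) / len(p_cur)
            mask.append(dist > threshold)
        keep.append(mask)
    return keep


# Three tiny frames with two patches each; only one patch changes in frame 3.
frames = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
mask = prune_patches(frames, threshold=0.1)
kept = sum(flag for frame_mask in mask for flag in frame_mask)
print(kept, "of", sum(len(m) for m in mask), "patches kept")
```

In this toy input, the static second frame contributes no tokens at all, which is where the compression for slow-moving video comes from.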

To obtain high-quality training data, the authors built the VL3-Syn7M dataset by filtering images sourced from COYO-700M and re-captioning them with both short and detailed captions.

VideoLLaMA 3 achieves state-of-the-art performance on image understanding, chart comprehension, mathematical reasoning, video understanding, temporal reasoning, and grounding, as demonstrated on various benchmarks including VideoMME, PerceptionTest, MLVU, DocVQA, and MathVista.

Janus-Pro: Unified Multimodal Understanding and Generation with Data and Model Scaling

DeepSeek developed and released the Janus series as unified multimodal AI models that can both understand images and generate them. The latest iteration, Janus-Pro, is an enhanced version of the original Janus and is described in “Janus-Pro: Unified Multimodal Understanding and Generation with Data and Model Scaling.”

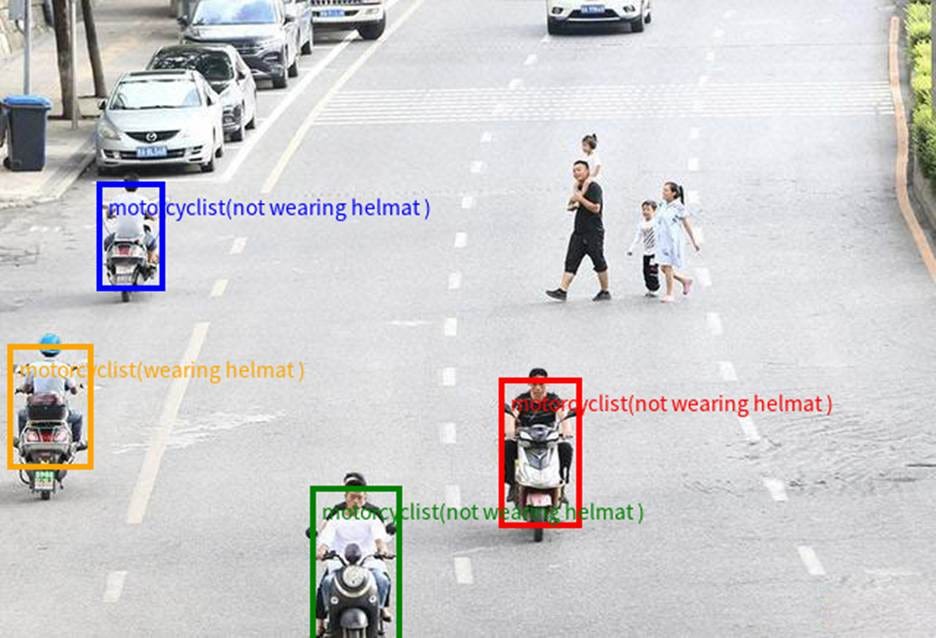

The core innovation of Janus, retained in Janus-Pro, is the decoupling of visual encoding pathways for understanding and generation, addressing the performance trade-offs often seen in models that use shared encoders. Janus-Pro improves on the original by incorporating an optimized three-stage training strategy, scaling to a 7B model size, and expanding training data. Data was expanded by adding ~90 million samples to multimodal understanding data and ~72 million synthetic aesthetic samples to visual generation data.

The reported evaluations favor Janus-Pro. On multimodal understanding, Janus-Pro-7B achieved a SOTA score of 79.2 on the MMBench benchmark. On text-to-image instruction following, it scored 0.80 on GenEval and 84.19 on DPG-Bench, exceeding previous models including Janus, DALL-E 3, and Stable Diffusion 3 Medium. These results support Janus-Pro’s enhanced capabilities in both multimodal understanding and complex text-to-image generation.

Janus-Pro code and model weights are publicly available via GitHub and Hugging Face.

Baichuan-Omni-1.5

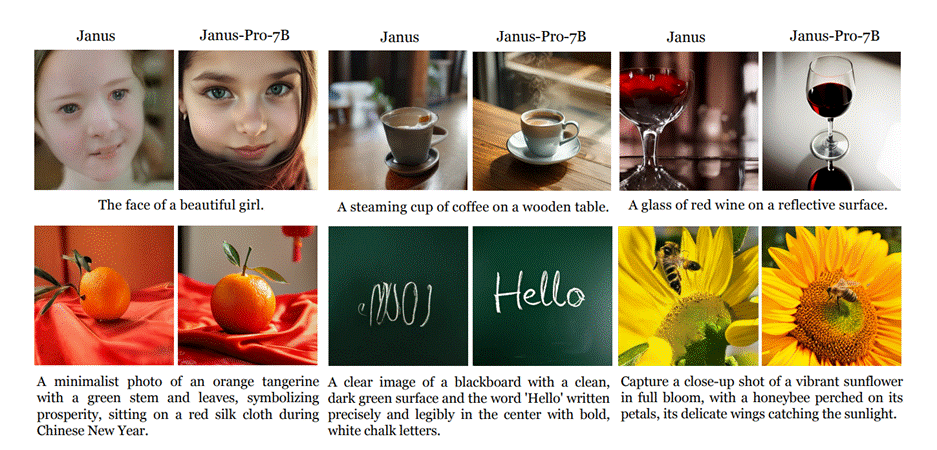

The Baichuan-Omni-1.5 model is a new multimodal AI model designed for comprehensive understanding and generation across text, audio, image, and video modalities. Presented in the Baichuan-Omni-1.5 Technical Report, Baichuan-Omni-1.5 achieves strong performance across all modalities, thanks to an integrated architecture and multi-stage training pipeline.

The model's architecture incorporates a visual branch based on NaViT (used in Qwen2-VL), an audio branch, and a pre-trained 7B LLM backbone. The audio branch uses a novel Baichuan-Audio-Tokenizer based on Residual Vector Quantization (RVQ) to convert both semantic and acoustic information into tokens; it is paired with an audio decoder, refined with a flow-matching model, for high-fidelity audio output.
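
For readers unfamiliar with RVQ, the sketch below shows the general technique only, not the Baichuan-Audio-Tokenizer itself. Each stage picks the nearest entry in its own codebook and passes the leftover residual to the next stage, so a vector becomes one token index per stage. The codebooks here are hand-written toy values (real ones are learned), and the function names are illustrative.

```python
def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vec)),
    )


def rvq_encode(vec, codebooks):
    """Quantize vec into one index per codebook, stage by stage."""
    residual = list(vec)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen entry; the next stage quantizes what is left.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes, codebooks):
    """Reconstruct an approximation by summing the chosen entries."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Toy codebooks: a coarse first stage and a fine second stage.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]],
    [[0.0, 0.0], [0.25, -0.25], [-0.25, 0.25]],
]
codes = rvq_encode([1.25, 0.75], codebooks)
reconstruction = rvq_decode(codes, codebooks)
print(codes, reconstruction)
```

The appeal of the layered design is that early stages carry coarse information and later stages add detail, which is one reason RVQ is a common choice for audio tokenizers.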

The model is trained with three stages of pre-training followed by fine-tuning. Pre-training starts with image-text data, then moves to image-audio-text data, and finally to omni-modal data, using approximately 500B tokens in total. This approach gradually integrates different modalities into a unified language space while mitigating modality conflicts. The model is then fine-tuned on a diverse set of supervised data, totaling around 17 million pairs.

Based on the reported benchmark results, Baichuan-Omni-1.5 leads contemporary models (including GPT-4o-mini and MiniCPM-o 2.6) in comprehensive omni-modal capabilities, and it is competitive with or superior to other open multimodal LLMs in visual understanding, video question answering, and audio tasks.

The authors also show how Baichuan-Omni-1.5 excels on multi-modal medical domain tasks such as medical image analysis, achieving SOTA scores on GMAI-MMBench and the new OpenMM-Medical benchmark, even surpassing models like Qwen2-VL-72B. This work shows that multi-modal AI models can support both visual and audio modalities without compromising either, even based on a modest 7B LLM backbone.

Qwen2.5-VL

This week the Alibaba Qwen team released Qwen2.5-VL, its new flagship vision-language model and a significant step up from the previous Qwen2-VL. Both base and instruct models are released as open-weights models in three sizes (3B, 7B, and 72B) on Hugging Face. The models are also available on Qwen Chat.

While they did not publish a technical paper on it, the Qwen2.5-VL blog post explains its capabilities, benchmarks and architecture. Key features include:

Visual understanding: Qwen2.5-VL excels in recognizing common objects and also analyzing texts, charts, icons, graphics, and layouts within images.

Agentic: Qwen2.5-VL can reason and dynamically direct tools via computer use.

Video understanding: Qwen2.5-VL can comprehend videos of over an hour, pinpointing events within a long video.

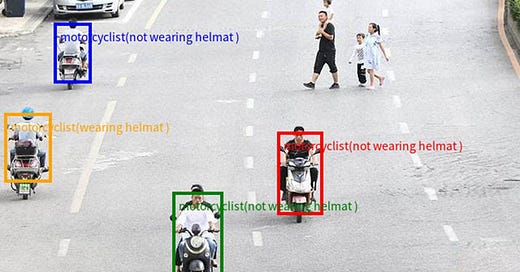

Visual localization: Qwen2.5-VL accurately localizes objects in an image by generating bounding boxes, and it outputs their coordinates and attributes as JSON.

Generating structured outputs: Qwen2.5-VL produces structured outputs from images of invoices, forms, and tables, which benefits use cases in finance, commerce, and similar domains.
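
JSON output makes localization results easy to consume downstream. The sketch below parses such a response into typed records. It assumes the model returns a JSON list whose items carry a `bbox_2d` field with `[x1, y1, x2, y2]` pixel coordinates and a `label` field; treat those field names, and the `Detection` and `parse_detections` helpers, as assumptions to check against the actual model output.

```python
import json
from dataclasses import dataclass

FENCE = "`" * 3  # Markdown code-fence marker that models often wrap JSON in


@dataclass
class Detection:
    label: str
    box: tuple  # (x1, y1, x2, y2) in pixels


def parse_detections(model_output: str) -> list:
    """Parse a JSON list of detections, tolerating a surrounding code fence."""
    text = model_output.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (for example a "json" tag) and the closing fence.
        text = text.split("\n", 1)[1].rsplit(FENCE, 1)[0]
    detections = []
    for item in json.loads(text):
        x1, y1, x2, y2 = item["bbox_2d"]  # assumed field name
        detections.append(Detection(item.get("label", ""), (x1, y1, x2, y2)))
    return detections


sample = '[{"bbox_2d": [10, 20, 110, 220], "label": "invoice"}]'
for det in parse_detections(sample):
    width = det.box[2] - det.box[0]
    height = det.box[3] - det.box[1]
    print(det.label, width, height)
```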

The flagship Qwen2.5-VL 72B competes with Gemini 2.0 Flash and GPT-4o on multi-modal tasks, while the smaller Qwen2.5-VL 7B provides capabilities on par with GPT-4o mini in a model that can be run locally.

Qwen2.5-1M Technical Report

The Qwen team also introduced Qwen2.5-1M, a series of models that extend the context length to 1 million tokens. The series includes open models in 7B and 14B sizes as well as the API-accessed model Qwen2.5-Turbo. Details are in the Qwen2.5-1M Technical Report.

Compared to the previous 128K version, the Qwen2.5-1M series has significantly enhanced long-context capabilities through long-context pre-training and post-training. Key techniques such as long data synthesis, progressive pre-training, and multi-stage supervised fine-tuning are employed to enhance long-context performance while reducing training costs.

Using the team's open-sourced inference framework, the Qwen2.5-1M models achieve a 3x to 7x prefill speedup in scenarios with 1 million tokens of context. This framework provides an efficient solution for developing applications that require long-context processing using open-source models.

Evaluation on long-context tasks shows that Qwen2.5-1M models significantly outperform previous versions without compromising short-context performance. Notably, the Qwen2.5-14B-Instruct-1M model surpasses GPT-4o-mini on long-context benchmarks and supports eight times longer contexts.

Qwen2.5-MAX

“Concurrently, we are developing Qwen2.5-Max, a large-scale MoE model that has been pretrained on over 20 trillion tokens and further post-trained with curated Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF) methodologies.” Qwen Team

In addition to releasing Qwen2.5-VL and Qwen2.5-1M, the Qwen team released their most capable model yet, Qwen2.5-MAX, a mixture-of-experts (MoE) LLM pretrained on over 20 trillion tokens. This is not an open model and is instead only available via its API through Alibaba Cloud or on Qwen Chat.

The performance of Qwen2.5-MAX is on par with SOTA frontier AI models, including the latest Claude 3.5 Sonnet, DeepSeek-V3, and GPT-4o.

The release announcement shares no architecture details beyond the quote above, but its framing suggests that Qwen2.5-MAX is similar to DeepSeek-V3’s MoE design and comparable in size. If so, it is another sign that fine-grained MoE architectures will be adopted to scale AI models efficiently.

By releasing a broad set of high-quality AI models, including Qwen2.5-MAX, Qwen2.5-VL, and Qwen2.5-1M in this week alone, the Qwen team has established itself as the leading Chinese AI lab.

Bespoke-Stratos: The Unreasonable Effectiveness of Reasoning Distillation

Bespoke-Stratos-32B is a fine-tuned version of Qwen/Qwen2.5-32B-Instruct trained on the Bespoke-Stratos-17k dataset. The dataset is built by distilling DeepSeek-R1 with a modified version of the data pipeline from Berkeley NovaSky’s Sky-T1, replacing the Qwen QwQ reasoning traces used in the Sky-T1 data with DeepSeek-R1 reasoning traces.

The blog post “Bespoke-Stratos: The unreasonable effectiveness of reasoning distillation” gives further details on the fine-tuning process:

The Bespoke Labs Bespoke-Stratos-17k dataset of 17,000 examples is curated from DeepSeek-R1 outputs.

They used GPT-4o-mini to filter out incorrect math solutions from DeepSeek-R1.

They used 8 H100s to train the model for 27 hours.

They reported improved reasoning in the 7B distilled model (Bespoke-Stratos-7B) over Qwen2.5-7B-Instruct, finding distillation effective at the 7B scale.
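
The filtering step above can be sketched as a simple keep-or-drop pass over generated traces. This is an illustration under stated assumptions, not the Bespoke Labs pipeline: the field names (`question`, `reference_answer`, `trace`) are hypothetical, and `exact_final_answer` is a toy stand-in for the GPT-4o-mini judge, which in practice would be an API call.

```python
from typing import Callable, Iterable


def filter_traces(
    samples: Iterable[dict],
    is_correct: Callable[[str, str, str], bool],
) -> list:
    """Keep only samples whose generated solution the judge accepts."""
    return [
        s for s in samples
        if is_correct(s["question"], s["reference_answer"], s["trace"])
    ]


def exact_final_answer(question: str, reference: str, trace: str) -> bool:
    """Toy judge: accept when the last line of the trace equals the reference."""
    lines = trace.strip().splitlines()
    return bool(lines) and lines[-1].strip() == reference.strip()


samples = [
    {"question": "2 + 2?", "reference_answer": "4", "trace": "Add the numbers.\n4"},
    {"question": "3 * 3?", "reference_answer": "9", "trace": "Multiply.\n6"},
]
kept = filter_traces(samples, exact_final_answer)
print(len(kept), "of", len(samples), "traces kept")
```

Passing the judge in as a function keeps the filtering logic independent of how correctness is decided, so an LLM-based judge can replace the toy one without other changes.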

Bespoke-Stratos-32B comes close to the performance of DeepSeek-R1-Distill-Qwen-32B. The “unreasonable effectiveness” is that it gets there by fine-tuning on only 17,000 reasoning examples, versus the 800,000 used in DeepSeek’s own distillation.
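
A quick back-of-the-envelope calculation puts those figures in perspective. It uses only the numbers quoted in this section (17,000 versus 800,000 examples, and 8 H100s for 27 hours); the variable names are just for illustration.

```python
stratos_examples = 17_000
deepseek_examples = 800_000
gpus, hours = 8, 27

ratio = deepseek_examples / stratos_examples   # how many times fewer examples
share = stratos_examples / deepseek_examples   # fraction of DeepSeek's data size
gpu_hours = gpus * hours                       # total H100 time for the run

print(f"about {ratio:.0f}x fewer examples ({share:.1%} of 800k)")
print(f"{gpu_hours} H100 GPU-hours")
```

So the fine-tune uses roughly one forty-seventh of the data, on a compute budget of 216 GPU-hours.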

This result shows that AI reasoning can be distilled into smaller AI models effectively at low cost, so long as there is good reasoning trace data. It further suggests that we will be able to have effective small AI reasoning models, based on distillation from larger AI reasoning models.