AI Research Review 25.02.27

Deep Research System Card, SuperGPQA, MLGym for AI Research Agents, LLM-Microscope, Mol-LLaMA Molecular LLMs, SpargeAttn speeds up inference.

Introduction

This week’s AI Research Review covers a variety of topics: the performance and risks of OpenAI’s Deep Research, harder benchmarks for knowledge (SuperGPQA) and for AI research agents (MLGym-Bench), the role of punctuation in the context memory of LLMs, the Mol-LLaMA molecule-understanding LLM, and faster inference with SpargeAttn:

Deep Research System Card

SuperGPQA: Scaling LLM Evaluation across 285 Graduate Disciplines

MLGym: A New Framework and Benchmark for Advancing AI Research Agents

LLM-Microscope: Uncovering the Hidden Role of Punctuation in Context Memory of Transformers

Mol-LLaMA: Towards General Understanding of Molecules in Large Molecular Language Model

SpargeAttn: Accurate Sparse Attention Accelerating Any Model Inference

Deep Research System Card

"Our models are on the cusp of being able to meaningfully help novices create known biological threats." – OpenAI, Deep Research System Card

OpenAI opened up Deep Research to ChatGPT Plus users this week and also published the Deep Research system card, a detailed report on the capabilities and AI safety profile of Deep Research.

OpenAI developed safety mitigations for potential vulnerabilities and conducted extensive safety testing and red teaming. Evaluations show that Deep Research “is significantly more accurate and hallucinates less” than other AI models, and that it has strong capabilities on software tasks, achieving state-of-the-art scores on SWE-Lancer.

Despite these mitigations, OpenAI found that Deep Research could still aid potentially harmful endeavors, and it rated the model’s risks accordingly:

After reviewing the results from the Preparedness evaluations, the Safety Advisory Group classified the deep research model as overall medium risk, including medium risk for cybersecurity, persuasion, CBRN, model autonomy. This is the first time a model is rated medium risk in cybersecurity.

OpenAI’s Deep Research is the best-performing agent for multi-step research tasks, so it is not surprising that it offers great benefits while also posing risks of misuse. OpenAI deserves credit for its red teaming, mitigations, and transparency about AI safety concerns.

SuperGPQA: Scaling LLM Evaluation across 285 Graduate Disciplines

The paper SuperGPQA: Scaling LLM Evaluation across 285 Graduate Disciplines introduces SuperGPQA, an extensive benchmark that tests LLMs on 285 graduate-level disciplines, far beyond the common subjects of existing benchmarks. It uses a human-and-LLM iterative filtering process to refine questions.
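To make LLM-assisted filtering concrete, here is a minimal Python sketch of one filtering round. It assumes a simple rule chosen for illustration, not the paper’s exact criteria: a question answered correctly by more than `max_solvers` models is flagged as too easy and sent to a human expert, who can return a revised question or drop it. The `Question` class, `flag_trivial`, and `refine_round` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Question:
    qid: str
    discipline: str
    # Maps a model name to whether that model answered the question correctly.
    model_correct: dict = field(default_factory=dict)


def flag_trivial(questions, max_solvers):
    """Return ids of questions solved by more than `max_solvers` models (hypothetical rule)."""
    return [q.qid for q in questions if sum(q.model_correct.values()) > max_solvers]


def refine_round(questions, max_solvers, expert_review: Callable[[Question], Optional[Question]]):
    """One human-and-LLM filtering round.

    Hard questions are kept as-is; flagged ones go to an expert, who returns a
    revised question, or None to drop it. Revised questions would be
    re-answered by the models in the next round.
    """
    trivial = set(flag_trivial(questions, max_solvers))
    kept = []
    for q in questions:
        if q.qid not in trivial:
            kept.append(q)
        else:
            revised = expert_review(q)
            if revised is not None:
                kept.append(revised)
    return kept


questions = [
    Question("q1", "Physics", {"model_a": True, "model_b": True, "model_c": True}),
    Question("q2", "Law", {"model_a": False, "model_b": True, "model_c": False}),
]
print(flag_trivial(questions, max_solvers=2))  # ['q1']
```

Repeating such rounds until few questions are flagged is what makes the process iterative; the threshold controls how hard the surviving questions are.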

The benchmark reveals that even state-of-the-art LLMs have “significant room for improvement” in many specialized domains, exposing gaps that the original GPQA may miss. For example, o3-mini scores 55% on SuperGPQA compared with 78% on GPQA. SuperGPQA continues the trend of introducing harder benchmarks as earlier ones become saturated.

MLGym: A New Framework and Benchmark for Advancing AI Research Agents

The paper “MLGym: A New Framework and Benchmark for Advancing AI Research Agents” introduces MLGym and MLGym-Bench, a novel framework and benchmark designed to evaluate and advance LLM agents in tackling realistic AI research tasks.

MLGym represents the first Gym environment tailored for machine learning tasks, thereby facilitating the application of reinforcement learning (RL) algorithms to train AI agents. The MLGym framework makes it easy to add new tasks, integrate and evaluate models or agents, and develop new learning algorithms for training agents.
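The Gym pattern is what makes RL applicable: an environment exposes `reset()` and `step(action)` and returns observations and rewards. The sketch below is a hypothetical toy, not MLGym’s actual API or task format. Its “research task” is tuning the learning rate of gradient descent on a one-parameter model, and the reward is the improvement over the best loss found so far.

```python
class ToyMLTaskEnv:
    """Hypothetical Gym-style environment for a tiny 'ML research' task (not MLGym's API).

    Action: a learning rate. Each step trains a one-parameter model w to
    minimize (w - 3)^2 with gradient descent and measures the final loss.
    Reward: improvement over the best loss found so far, so repeating a
    known result earns nothing.
    """

    def __init__(self, steps_per_run=20, budget=5):
        self.steps_per_run = steps_per_run
        self.budget = budget  # number of experiments the agent may run

    def _train(self, lr):
        w = 0.0
        for _ in range(self.steps_per_run):
            w -= lr * 2.0 * (w - 3.0)  # gradient of (w - 3)^2
        return (w - 3.0) ** 2

    def reset(self):
        self.runs_left = self.budget
        self.best_loss = self._train(lr=0.01)  # the task's baseline
        return {"best_loss": self.best_loss, "runs_left": self.runs_left}

    def step(self, lr):
        loss = self._train(lr)
        reward = max(0.0, self.best_loss - loss)
        self.best_loss = min(self.best_loss, loss)
        self.runs_left -= 1
        obs = {"best_loss": self.best_loss, "runs_left": self.runs_left, "last_loss": loss}
        return obs, reward, self.runs_left == 0, {}


# A trivial "agent" that tries a fixed list of learning rates.
env = ToyMLTaskEnv()
obs = env.reset()
for lr in [0.001, 0.1, 0.5, 1.2, 0.05]:
    obs, reward, done, _ = env.step(lr)
    if done:
        break
print(obs["best_loss"])  # 0.0
```

An RL-trained agent would replace the fixed list with a policy that chooses the next experiment from the observations, which is the kind of learning algorithm a Gym interface lets researchers plug in.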

MLGym-Bench complements the framework with a benchmark for evaluating agents:

MLGym-bench consists of 13 diverse and open-ended AI research tasks from diverse domains such as computer vision, natural language processing, reinforcement learning, and game theory. Solving these tasks requires real-world AI research skills such as generating new ideas and hypotheses, creating and processing data, implementing ML methods, training models, running experiments, analyzing the results, and iterating through this process to improve on a given task.

The authors used MLGym-Bench to evaluate several state-of-the-art LLMs, including Claude-3.5-Sonnet, Llama-3.1 405B, GPT-4o, o1-preview, and Gemini-1.5 Pro, though none of the models released in 2025. Experiments show that current frontier LLMs can perform complex research tasks but still fail to produce novel research ideas, highlighting the gap that MLGym is designed to explore.

The framework and benchmark were open sourced by Meta to promote future research in enhancing the AI research capabilities of LLM agents.

LLM-Microscope: Uncovering the Hidden Role of Punctuation in Context Memory of Transformers

Even “minor” tokens like determiners and punctuation carry surprisingly high contextual value for Transformer models and play a significant role in LLM understanding. The paper “LLM-Microscope: Uncovering the Hidden Role of Punctuation in Context Memory of Transformers” explores this and introduces LLM-Microscope, an open-source toolkit designed to analyze how LLMs encode and store contextual information.

The authors demonstrate that removing such filler tokens (stop words, commas, etc.) consistently degrades LLM performance on MMLU and BABILong-4k. They also connect contextualization to how linearly each layer transforms its input:

Our analysis also shows a strong correlation between contextualization and linearity, where linearity measures how closely the transformation from one layer's embeddings to the next can be approximated by a single linear mapping. These findings underscore the hidden importance of filler tokens in maintaining context.
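The linearity measure in this quote can be sketched in a few lines of NumPy. This is one plausible formulation, assumed for illustration: center both layers’ embeddings, scale each to unit Frobenius norm, fit the best linear map by least squares, and report one minus the residual. LLM-Microscope’s exact normalization may differ, and `linearity_score` is a hypothetical name.

```python
import numpy as np


def linearity_score(x, y):
    """How well a single linear map takes layer-l embeddings x to layer-(l+1) embeddings y.

    x, y: arrays of shape (num_tokens, hidden_dim) for the same tokens.
    Returns a value in [0, 1]; 1 means y is exactly a linear function of x.
    """
    # Center and scale to unit Frobenius norm so scores are comparable across layers.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    x = x / np.linalg.norm(x)
    y = y / np.linalg.norm(y)
    # Least-squares map A minimizing ||x @ A - y||_F^2.
    a, *_ = np.linalg.lstsq(x, y, rcond=None)
    residual = np.linalg.norm(x @ a - y) ** 2
    # ||y||_F = 1 and A = 0 is a feasible solution, so the residual is at most 1.
    return 1.0 - residual


rng = np.random.default_rng(0)
x = rng.normal(size=(200, 16))
w = rng.normal(size=(16, 16))
print(linearity_score(x, x @ w))               # close to 1.0
print(linearity_score(x, np.tanh(3 * x @ w)))  # noticeably lower
```

In practice `x` and `y` would be hidden states of consecutive layers for the same tokens, for example from a Hugging Face Transformers model called with `output_hidden_states=True`.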

The LLM-Microscope toolkit assesses token-level nonlinearity, evaluates contextual memory, visualizes intermediate layer contributions, and measures the intrinsic dimensionality of representations. Experiments using LLM-Microscope show that determiners and punctuation are highly contextualized, while nouns are less so. Multilingual analysis suggests that models may perform implicit translation into English in intermediate layers.
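Intrinsic dimensionality has several estimators. As an illustration only, the sketch below uses a simple PCA-based one: the number of principal components needed to explain a given fraction of the variance. We have not confirmed which estimator LLM-Microscope uses, and `pca_intrinsic_dim` and its `var_threshold` parameter are hypothetical.

```python
import numpy as np


def pca_intrinsic_dim(h, var_threshold=0.9):
    """Smallest number of principal components explaining `var_threshold` of the variance.

    h: (num_tokens, hidden_dim) hidden states from one layer.
    """
    h = h - h.mean(axis=0, keepdims=True)
    # Squared singular values of the centered data are proportional to per-component variance.
    var = np.linalg.svd(h, compute_uv=False) ** 2
    cumulative = np.cumsum(var) / var.sum()
    return int(np.searchsorted(cumulative, var_threshold)) + 1


rng = np.random.default_rng(0)
basis = np.linalg.qr(rng.normal(size=(64, 3)))[0].T  # 3 orthonormal directions in 64-D
low_dim = rng.normal(size=(500, 3)) @ basis           # points on a 3-D subspace
print(pca_intrinsic_dim(low_dim))                     # 3
print(pca_intrinsic_dim(rng.normal(size=(500, 64))))  # much larger, near the full 64
```

Applied layer by layer, such an estimate shows whether a model compresses or expands its representations with depth.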

Mol-LLaMA: Towards General Understanding of Molecules in Large Molecular Language Model

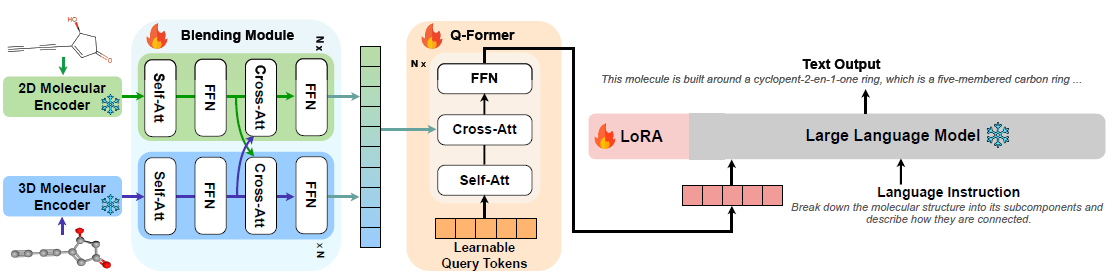

The paper “Mol-LLaMA: Towards General Understanding of Molecules in Large Molecular Language Model” introduces Mol-LLaMA, a novel large molecular language model designed to serve as a general-purpose “molecular assistant”.

Mol-LLaMA is specialized through multi-modal instruction fine-tuning on a specially curated dataset that covers fundamental molecular characteristics, so the model learns molecular features such as chemical structures and properties. The base LLM it is trained on is Llama 3.1 8B.

To achieve this, the authors designed three key data types covering essential knowledge of molecular structures: detailed structural descriptions (77k examples), structure-to-feature relationship explanations (147k), and comprehensive conversations (60k).

The model incorporates a dedicated module that integrates information from both 2D and 3D molecular encoders, leveraging the complementary strengths of the two molecular representations to improve its understanding of molecular features. Much as multi-modal LLMs use vision encoders to turn images into tokens for LLM reasoning, Mol-LLaMA combines molecular encoders with fine-tuning on molecular tasks, yielding an AI model with general molecular understanding.
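The encoder-fusion idea can be sketched with a generic cross-attention block followed by a linear projector, similar in spirit to vision-language adapters. This is an assumption-laden illustration, not Mol-LLaMA’s actual module: the weight matrices are random stand-ins for learned parameters, and `fuse_and_project` and all other names are hypothetical.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def fuse_and_project(h2d, h3d, w_q, w_k, w_v, w_out):
    """Hypothetical 2D/3D fusion followed by a projector into LLM embedding space.

    h2d: (n_2d, d) node features from a 2D molecular-graph encoder.
    h3d: (n_3d, d) node features from a 3D conformer encoder (n_3d may differ from n_2d).
    w_q, w_k, w_v: (d, d) attention weights; w_out: (d, d_llm) projector.
    Returns (n_2d, d_llm) "molecule tokens" that could be placed before the text tokens.
    """
    d = h2d.shape[1]
    # Cross-attention: each 2D node queries the 3D nodes for geometric context.
    attn = softmax((h2d @ w_q) @ (h3d @ w_k).T / np.sqrt(d), axis=-1)
    fused = h2d + attn @ (h3d @ w_v)  # residual keeps the original 2D information
    return fused @ w_out


rng = np.random.default_rng(0)
d, d_llm = 32, 128
h2d, h3d = rng.normal(size=(12, d)), rng.normal(size=(20, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
w_out = rng.normal(size=(d, d_llm)) / np.sqrt(d)
mol_tokens = fuse_and_project(h2d, h3d, w_q, w_k, w_v, w_out)
print(mol_tokens.shape)  # (12, 128)
```

The projected molecule tokens play the same role as image tokens in a vision-language model: the LLM reads them alongside the text embeddings, and instruction fine-tuning teaches it to reason about them.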

Experiments show that Mol-LLaMA can comprehend general molecular features and generate detailed, relevant responses to user queries, outperforming existing LLMs and molecular AI models. Mol-LLaMA has potential applications in chemistry, drug discovery, and related fields.

SpargeAttn: Accurate Sparse Attention Accelerating Any Model Inference

The paper “SpargeAttn: Accurate Sparse Attention Accelerating Any Model Inference” introduces SpargeAttn, a universal sparse attention mechanism that can plug into large AI models to speed up inference by 2 to 7 times without hurting accuracy.

SpargeAttn is a universal sparse and quantized attention method that addresses the computational bottleneck posed by attention’s quadratic time complexity in sequence length. It works through a two-stage online filtering of the attention map:

In the first stage, we rapidly and accurately predict the attention map, enabling the skip of some matrix multiplications in attention. In the second stage, we design an online softmax-aware filter that incurs no extra overhead and further skips some matrix multiplications.

In simple terms, by predicting upfront which blocks of the attention map are sparse (close to zero), SpargeAttn can skip the matrix multiplications for those blocks without compromising quality.
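The two stages can be illustrated with a simplified NumPy sketch of block-sparse attention. It is not the SpargeAttn kernel: it omits quantization, causal masking, and the paper’s additional block-selection checks, and the thresholds `tau` and `lam` and all function names are illustrative choices. Stage 1 mean-pools each query and key block to predict a coarse block-level attention map and keeps the most probable key blocks; stage 2 skips a block’s `P @ V` product during the online softmax when its scores fall far below the running maximum.

```python
import numpy as np


def dense_attention(q, k, v):
    s = q @ k.T / np.sqrt(q.shape[1])
    p = np.exp(s - s.max(axis=1, keepdims=True))
    return (p / p.sum(axis=1, keepdims=True)) @ v


def sparse_attention_sketch(q, k, v, block=16, tau=0.9, lam=8.0):
    """Simplified two-stage block-sparse attention (non-causal, no quantization).

    Stage 1: keep, per query block, the smallest set of key blocks whose predicted
             (mean-pooled) attention mass reaches tau.
    Stage 2: skip a block's P @ V product when every row's block max is more than
             lam below the running max, i.e. all its weights are below e^-lam.
    Returns the output and the fraction of block products actually computed.
    """
    n, d = q.shape
    assert n % block == 0 and k.shape[0] % block == 0
    scale = 1.0 / np.sqrt(d)
    qb, kb = q.reshape(-1, block, d), k.reshape(-1, block, d)
    vb = v.reshape(-1, block, v.shape[1])
    # Stage 1: coarse block-level attention map from mean-pooled queries and keys.
    coarse = qb.mean(axis=1) @ kb.mean(axis=1).T * scale
    coarse = np.exp(coarse - coarse.max(axis=1, keepdims=True))
    coarse /= coarse.sum(axis=1, keepdims=True)
    out, computed = np.empty((n, v.shape[1])), 0
    for i, q_i in enumerate(qb):
        order = np.argsort(-coarse[i])  # most important key blocks first
        cum = np.cumsum(coarse[i][order])
        keep = order[: min(int(np.searchsorted(cum, tau)) + 1, len(order))]
        m = np.full(block, -np.inf)  # running row max
        denom = np.zeros(block)      # running softmax denominator
        acc = np.zeros((block, v.shape[1]))
        for j in keep:
            s = q_i @ kb[j].T * scale
            m_new = np.maximum(m, s.max(axis=1))
            # Stage 2: negligible for every row, so skip the matmul with V.
            if np.all(s.max(axis=1) - m_new < -lam):
                continue
            p = np.exp(s - m_new[:, None])
            alpha = np.exp(m - m_new)  # rescales earlier partial sums
            denom = alpha * denom + p.sum(axis=1)
            acc = alpha[:, None] * acc + p @ vb[j]
            m = m_new
            computed += 1
        out[i * block:(i + 1) * block] = acc / denom[:, None]
    return out, computed / (qb.shape[0] * kb.shape[0])


rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(128, 32)) for _ in range(3))
exact, frac = sparse_attention_sketch(q, k, v, tau=1.0, lam=np.inf)
print(np.allclose(exact, dense_attention(q, k, v)), frac)  # True 1.0
approx, frac = sparse_attention_sketch(q, k, v, tau=0.6, lam=8.0)
print(frac)  # fraction of block products computed, below 1.0
```

With `tau=1.0` and `lam=np.inf` nothing is skipped and the result matches dense attention; lowering `tau` trades accuracy for fewer block products. Random inputs like these have no real sparsity, so the low-`tau` output is only a rough approximation; the savings come from the structured attention maps of real models. Processing key blocks in order of predicted importance helps stage 2, because the running maximum grows early and later low-scoring blocks are easier to skip.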

Evaluated on language, image, and video generation tasks using models such as Llama 3.1 and Stable Diffusion, SpargeAttn delivers significant acceleration while preserving model quality; for example, it achieves a 1.8x speedup on the Mochi video model. The method’s universal applicability and training-free nature mark a substantial advance in accelerating inference in large AI models, making AI inference faster and cheaper.