AI Research Review 25.05.08 – Models for Physical AI

The Cosmos World Foundation Model Platform for physical AI, along with Cosmos-Predict1, Cosmos-Reason1, Cosmos-Transfer1, and GR00T N1.

Introduction

Physical AI is the convergence of sophisticated AI algorithms with physical hardware systems, and it encompasses robotics, self-driving cars and other systems that use intelligence while interacting in the real world.

While LLMs and other foundation AI models can be trained on vast amounts of internet text, training robots and other physical AI systems is harder because suitable data is scarce. Training robots only on real-world observations and actions is slow, unsafe, and hard to scale, so simulation is needed. Much of the work reviewed here involves developing datasets and models that support simulation-based training for physical AI, and Nvidia has been at the forefront of these developments.

This research review was originally drafted in late March, in the wake of Nvidia’s GTC, where Nvidia announced new AI models and datasets to support physical AI. A recent presentation on physical AI by Dr. Jim Fan, Nvidia’s Director of AI, reminded me of the importance of this work and prompted me to publish this review.

Without further ado, these are the key AI models Nvidia released at its latest GTC to support physical AI development:

Cosmos World Foundation Model Platform and Cosmos-Predict

Cosmos Reason

Cosmos Transfer

GR00T N1

Cosmos World Foundation Model Platform for Physical AI

Cosmos, a world foundation model platform for physical AI, is premised on this observation:

Physical AI needs to be trained digitally first. It needs a digital twin of itself, the policy model, and a digital twin of the world, the world model.

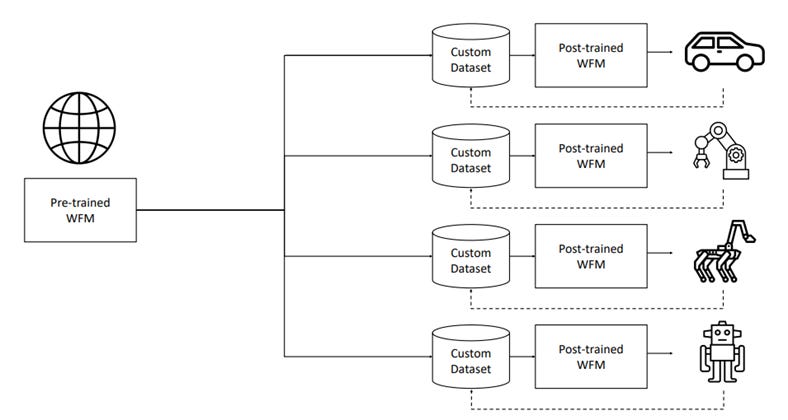

A world foundation model (WFM), the ‘digital twin of the world’, predicts the future state of the (simulated) world based on past observations, the current state, and current perturbations such as actions. In Cosmos World Foundation Model Platform for Physical AI, Nvidia presented the Cosmos world foundation models and released them openly to help developers build customized world models for specific physical AI training simulations.
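To make the "predict the future from the past plus a perturbation" idea concrete, here is a minimal sketch of the world-model interface and an autoregressive rollout. The names `toy_world_model` and `rollout` are hypothetical, and the stand-in dynamics (next state = current state + action) replace what in Cosmos is a large learned video model operating on frames or latent tokens.

```python
from typing import Callable, List, Sequence

# Observations are plain float vectors standing in for video frames or latents.
Observation = List[float]
# Hypothetical interface: (history of observations, action) -> next observation.
WorldModel = Callable[[Sequence[Observation], List[float]], Observation]


def toy_world_model(history: Sequence[Observation], action: List[float]) -> Observation:
    """Stand-in dynamics: next state = current state + action (a real WFM is learned)."""
    current = history[-1]
    return [s + a for s, a in zip(current, action)]


def rollout(model: WorldModel, initial: Observation,
            actions: Sequence[List[float]]) -> List[Observation]:
    """Predict future states one step at a time, feeding each prediction back as history."""
    history: List[Observation] = [initial]
    for action in actions:
        history.append(model(history, action))
    return history


trajectory = rollout(toy_world_model, [0.0, 0.0], [[1.0, 0.0], [0.0, 2.0]])
print(trajectory)
```

Keeping the full history in the interface (rather than only the last state) mirrors the fact that a video world model conditions on several past frames, even though this toy only uses the latest one.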

The Cosmos WFM Platform is built around video and consists of several major components: a video curator, video tokenizers, pre-trained general-purpose WFMs suitable for fine-tuning, post-training examples for WFMs, and a guardrail system that blocks harmful outputs.
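A video tokenizer shrinks raw video into a much smaller latent grid before the WFM ever sees it. The arithmetic below is a sketch under stated assumptions: a causal tokenizer that encodes the first frame on its own and then compresses every 8 frames into one latent frame, with 8x8 spatial compression. These factors are illustrative; as we understand it, Cosmos tokenizers come in several compression settings, and `latent_grid_shape` is a hypothetical helper.

```python
def latent_grid_shape(frames: int, height: int, width: int,
                      temporal: int = 8, spatial: int = 8) -> tuple:
    """Latent (T', H', W') for an assumed causal video tokenizer.

    The first frame is encoded on its own; every following group of
    `temporal` frames collapses into one latent frame. Each spatial
    `spatial` x `spatial` patch collapses into one latent position.
    """
    if (frames - 1) % temporal != 0:
        raise ValueError("expected frames = 1 + k * temporal")
    if height % spatial or width % spatial:
        raise ValueError("height and width must be divisible by the spatial factor")
    return 1 + (frames - 1) // temporal, height // spatial, width // spatial


t, h, w = latent_grid_shape(121, 704, 1280)
print((t, h, w), "latent positions:", t * h * w)
```

Under these assumptions, a 121-frame clip at 704x1280 becomes a 16x88x160 latent grid, roughly 484 times fewer positions than raw pixels per channel, which is what makes training multi-billion-parameter video models on millions of hours of footage tractable.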

They curated and tokenized 20 million hours of video, along with image data, to pre-train the Cosmos WFM prediction models, training both diffusion and autoregressive models ranging from 4B to 14B parameters to predict future video from video and text prompts.

These pre-trained Cosmos-Predict1 Video2World models can be fine-tuned on additional domain-specific video to support simulation and digital twin creation within specific domains, such as industrial robotics.

The Cosmos WFM platform aims to provide physical AI developers with tools for policy evaluation, policy initialization, policy training, planning, and synthetic data generation. Cosmos WFMs and video tokenizers are released under the Nvidia Open Model License and available on Hugging Face.
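Policy evaluation is one of the listed uses: instead of running a candidate policy on a real robot, you roll it out inside the world model and score the imagined trajectory. The sketch below assumes a hypothetical one-dimensional world model (`world_model_step`), a goal position, and a distance-to-goal reward; none of these come from Cosmos, they only illustrate the evaluation loop.

```python
from typing import Callable

Policy = Callable[[float], float]
GOAL = 1.0  # hypothetical target position


def world_model_step(position: float, action: float) -> float:
    """Hypothetical stand-in for a learned world model's one-step prediction."""
    return position + action


def evaluate_policy(policy: Policy, start: float = 0.0, horizon: int = 10) -> float:
    """Roll the policy out inside the world model and sum a distance-to-goal reward."""
    position, total_reward = start, 0.0
    for _ in range(horizon):
        position = world_model_step(position, policy(position))
        total_reward += -abs(GOAL - position)
    return total_reward


def proportional(position: float) -> float:
    """Move halfway toward the goal each step."""
    return 0.5 * (GOAL - position)


def idle(position: float) -> float:
    """Never move."""
    return 0.0


print("proportional:", evaluate_policy(proportional))
print("idle:", evaluate_policy(idle))
```

The same loop ranks policies without touching hardware; its value in practice depends entirely on how faithfully the learned world model predicts the consequences of actions.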

Cosmos-Reason1: From Physical Common Sense to Embodied Reasoning

Cosmos-Reason1 models … understand the physical world and generate appropriate embodied decisions (e.g., next step action) in natural language through long chain-of-thought reasoning processes. - Nvidia

The world model building didn’t stop with Cosmos-Predict1. Nvidia also introduced Cosmos-Reason1 at GTC and shared technical details in the paper Cosmos-Reason1: From Physical Common Sense to Embodied Reasoning. Cosmos-Reason1 is a suite of customizable world foundation models (WFMs) with spatiotemporal awareness, designed to understand and reason about the physical world and to perform complex actions in it.

As the authors put it in the paper:

We begin by defining key capabilities for Physical AI reasoning, with a focus on physical common sense and embodied reasoning. To represent physical common sense, we use a hierarchical ontology that captures fundamental knowledge about space, time, and physics. For embodied reasoning, we rely on a two-dimensional ontology that generalizes across different physical embodiments.
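The two ontologies are different data structures: physical common sense is a tree (category, then subcategory), while embodied reasoning is a grid (capability crossed with embodiment). The sketch below shows that shape only. The top-level categories Space, Time, and Fundamental Physics come from the quote; every leaf, capability, and embodiment label is an illustrative placeholder, not the paper's exact taxonomy.

```python
from itertools import product

# Physical common sense as a hierarchy. Top-level keys follow the paper;
# the leaf names are illustrative placeholders.
COMMON_SENSE_ONTOLOGY = {
    "Space": ["spatial relationships", "affordances"],
    "Time": ["action ordering", "causality"],
    "Fundamental Physics": ["object permanence", "mechanics"],
}

# Embodied reasoning as two independent axes (both label lists are illustrative).
CAPABILITIES = ["interpret sensory input", "predict action effects",
                "respect physical constraints"]
EMBODIMENTS = ["human", "robot arm", "humanoid robot", "autonomous vehicle"]


def leaf_paths(ontology: dict) -> list:
    """Flatten the hierarchy into 'Category/subcategory' tags for benchmark questions."""
    return [f"{category}/{leaf}" for category, leaves in ontology.items() for leaf in leaves]


def embodied_cells() -> list:
    """Enumerate every (capability, embodiment) cell of the two-dimensional ontology."""
    return list(product(CAPABILITIES, EMBODIMENTS))


print(leaf_paths(COMMON_SENSE_ONTOLOGY))
print(len(embodied_cells()), "embodied-reasoning cells")
```

The grid form is what lets one capability definition "generalize across different physical embodiments": adding a new embodiment adds a column of cells without redefining any capability.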

Its LLM backbone uses a hybrid Mamba-MLP-Transformer architecture. On this foundation, the authors trained two multimodal LLMs, Cosmos-Reason1-8B and Cosmos-Reason1-56B, in four stages: vision pre-training, general supervised fine-tuning (SFT), Physical AI SFT, and Physical AI reinforcement learning (RL) as the final post-training stage. The models are trained to analyze video and predict the outcomes of interactions through chain-of-thought reasoning.
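RL post-training on reasoning tasks typically relies on rewards that can be checked by rule rather than by a learned judge. As a minimal sketch of that idea for multiple-choice physical-reasoning questions, the function below gives one point for a well-formed response and one for the correct choice. The `<think>`/`<answer>` tag format and the equal weighting are assumptions for illustration, not confirmed details of Cosmos-Reason1's training.

```python
import re

# Assumed response format: reasoning in <think> tags, then a single-letter choice in <answer> tags.
RESPONSE_PATTERN = re.compile(
    r"^<think>.*?</think>\s*<answer>\s*([A-Za-z])\s*</answer>\s*$", re.DOTALL
)


def rule_based_reward(response: str, correct_choice: str) -> float:
    """Format reward (well-formed response) plus accuracy reward (matching choice letter)."""
    match = RESPONSE_PATTERN.match(response.strip())
    if match is None:
        return 0.0  # malformed output earns no reward at all
    format_reward = 1.0
    accuracy_reward = 1.0 if match.group(1).upper() == correct_choice.upper() else 0.0
    return format_reward + accuracy_reward


print(rule_based_reward("<think>The cup tips past its edge, so it falls.</think><answer>B</answer>", "B"))
```

Because multiple-choice answers are verifiable, this kind of reward cannot be gamed by fluent but wrong reasoning, which is one reason MCQ-style data pairs well with RL.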

The models were evaluated on benchmarks built around these ontologies, covering physical common sense (space, time, and fundamental physics) and embodied reasoning:

To evaluate our models, we build comprehensive benchmarks for physical common sense and embodied reasoning according to our ontologies. Evaluation results show that Physical AI SFT and reinforcement learning bring significant improvements.
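Building benchmarks "according to our ontologies" means each question carries a category tag, so results can be reported per category rather than as one number. A minimal sketch of that bookkeeping follows; the `(category, predicted, correct)` record format is hypothetical and the sample data is made up purely to exercise the function, not taken from any reported results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, str]  # hypothetical: (category, predicted choice, correct choice)


def accuracy_by_category(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of correctly answered questions within each ontology category."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for category, predicted, answer in records:
        total[category] += 1
        correct[category] += int(predicted == answer)
    return {category: correct[category] / total[category] for category in total}


sample = [("Space", "A", "A"), ("Space", "B", "A"), ("Time", "C", "C")]
print(accuracy_by_category(sample))
```

Per-category scores are what make claims such as "Physical AI SFT and RL bring significant improvements" inspectable: they show which kinds of physical reasoning improved.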

Applications of Cosmos-Reason1 span various stages of physical AI development. It can enhance existing world foundation models by adding a strong reasoning component, and developers can use it to make physical AI data annotation and curation more efficient and accurate. Cosmos-Reason1 can also be post-trained to serve as a high-level planner for physical AI, determining how to accomplish complex tasks.

Cosmos Transfer

Presented in Cosmos-Transfer1: Conditional World Generation with Adaptive Multimodal Control, Cosmos-Transfer1 represents a key advance in controllable world generation for physical AI. Its core functionality involves ingesting structured video inputs, such as segmentation maps, depth maps, lidar scans, pose estimation maps, and trajectory maps, to produce controllable photorealistic video world-model outputs.

Cosmos-Transfer1 is built by post-training the Cosmos-Predict1 diffusion world model, and it uses a diffusion transformer (DiT) architecture enhanced with a novel ControlNet design.
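The central trick of the classic ControlNet recipe is that the control branch feeds the base model through a zero-initialized projection, so at the start of post-training the controlled model behaves exactly like the pre-trained one and control influence is learned gradually. The sketch below shows only that generic mechanism with a hypothetical `ZeroInitControlAdapter`; Cosmos-Transfer1's own ControlNet design differs in details not reproduced here.

```python
from typing import List

Vector = List[float]


class ZeroInitControlAdapter:
    """Adds projected control-branch features to a base model's hidden state.

    The projection starts at zero, so before any training the output equals
    the base (pre-trained) model's hidden state exactly.
    """

    def __init__(self, dim: int):
        self.weight = [[0.0] * dim for _ in range(dim)]  # zero-initialized projection

    def project(self, control_features: Vector) -> Vector:
        """Matrix-vector product: weight @ control_features."""
        return [sum(w * c for w, c in zip(row, control_features)) for row in self.weight]

    def inject(self, base_hidden: Vector, control_features: Vector) -> Vector:
        """Residual injection of the projected control signal."""
        return [h + p for h, p in zip(base_hidden, self.project(control_features))]


adapter = ZeroInitControlAdapter(3)
print(adapter.inject([1.0, 2.0, 3.0], [5.0, -1.0, 0.5]))  # unchanged base state
```

Starting from zero protects the pre-trained world model from being disrupted by randomly initialized control weights early in post-training.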

The paper also introduces a customizable spatial conditioning scheme that weights different conditional inputs differently at different spatial locations, making world generation highly controllable. This supports applications such as data enrichment for robotics and autonomous vehicle development.
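The spatial conditioning idea can be sketched as a per-location weighted sum of control signals: at each position, each modality (depth, segmentation, and so on) contributes according to a user-chosen weight map. In this minimal sketch each modality's control output is a single number per grid cell, `fuse_controls` is a hypothetical helper, and rescaling weights whose sum exceeds 1 is an assumed normalization rather than a confirmed detail of Cosmos-Transfer1.

```python
from typing import Dict, List

Grid = List[List[float]]


def fuse_controls(features: Dict[str, Grid], weights: Dict[str, Grid]) -> Grid:
    """Combine per-modality control signals using per-location weights.

    features[m][i][j]: control-branch output for modality m at cell (i, j)
    weights[m][i][j]:  importance of modality m at that cell
    Where weights at a cell sum to more than 1, they are rescaled to sum to 1 (assumed).
    """
    modalities = list(features)
    rows = len(features[modalities[0]])
    cols = len(features[modalities[0]][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            total = sum(weights[m][i][j] for m in modalities)
            scale = 1.0 / total if total > 1.0 else 1.0
            fused[i][j] = sum(scale * weights[m][i][j] * features[m][i][j] for m in modalities)
    return fused


# Left cell: trust depth only. Right cell: blend depth and segmentation equally.
features = {"depth": [[2.0, 2.0]], "segmentation": [[4.0, 4.0]]}
weights = {"depth": [[1.0, 0.5]], "segmentation": [[0.0, 0.5]]}
print(fuse_controls(features, weights))
```

This is what lets a user, for example, enforce geometry from depth in the foreground while leaving the background appearance freer to vary.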

Cosmos-Transfer1 can transform structured video inputs into photorealistic outputs, and it can be configured either to preserve the original structure and appearance of the input or to allow variations in appearance while maintaining the underlying structure of the generated video sequences. Its effectiveness was verified through extensive empirical evaluations on a variety of physical AI tasks, measuring both generation quality and controllability.

GR00T N1: An Open Foundation Model for Generalist Humanoid Robots

Isaac GR00T is Nvidia’s development platform for generalist robot foundation models. GR00T N1, which Nvidia describes as the first open foundation model for generalist humanoid robots, is designed to enable robots to reason, handle real-world variability, and quickly learn new tasks. It was highlighted at Nvidia’s GTC and presented in the paper GR00T N1: An Open Foundation Model for Generalist Humanoid Robots.

GR00T N1 is a Vision-Language-Action (VLA) model with a dual-system architecture, inspired by human cognitive processing, that integrates vision, language, and motor control. System 2, a vision-language model (VLM), interprets the environment through visual input and language instructions so it can plan appropriate actions. System 1, a diffusion transformer, then generates motor actions in real time.
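A diffusion-style action head turns random noise into an action by integrating a learned velocity field over a few steps. Assuming a flow-matching formulation (which, as we understand it, is what the GR00T N1 paper uses for System 1), the sketch below runs a 4-step Euler sampler. The trained DiT, which would condition on System 2's embeddings and the robot state, is replaced by a hypothetical "oracle" velocity field that knows the target action; the step count and all names are assumptions.

```python
import random
from typing import Callable, List

Action = List[float]
VelocityField = Callable[[Action, float], Action]  # (noisy action, tau) -> velocity


def sample_action(velocity: VelocityField, dim: int, steps: int = 4, seed: int = 0) -> Action:
    """Euler-integrate from Gaussian noise (tau = 0) to an action (tau = 1)."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / steps
    for k in range(steps):
        tau = k * dt  # stays below 1, so the oracle below never divides by zero
        v = velocity(x, tau)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def make_oracle_velocity(target: Action) -> VelocityField:
    """Stand-in for the trained DiT: exact velocity along the straight noise-to-target path.

    With x_tau = tau * target + (1 - tau) * noise, the velocity is (target - x_tau) / (1 - tau).
    """
    def velocity(x: Action, tau: float) -> Action:
        return [(t - xi) / (1.0 - tau) for t, xi in zip(target, x)]
    return velocity


target = [0.2, -0.5, 1.0]  # hypothetical joint command
print(sample_action(make_oracle_velocity(target), dim=3))
```

Because straight-line flows can be integrated accurately in very few steps, this style of head can produce actions fast enough for real-time control, which is the role System 1 plays.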

The two modules are tightly coupled and trained jointly end-to-end on diverse data:

We train GR00T N1 with a heterogeneous mixture of real-robot trajectories, human videos, and synthetically generated datasets.
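Training on a "heterogeneous mixture" usually means drawing each example from one of several sources according to mixture weights. The sketch below shows that sampling step only; the source names and the 0.5/0.3/0.2 weights are hypothetical, not GR00T N1's actual recipe. It also ignores how examples without robot action labels (such as human videos) are turned into training targets.

```python
import random
from typing import Dict, List


def sample_sources(mixture: Dict[str, float], batch_size: int, seed: int = 0) -> List[str]:
    """Choose the data source for each example in a batch according to mixture weights."""
    rng = random.Random(seed)
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=batch_size)


# Hypothetical mixture weights, for illustration only.
MIXTURE = {"real_robot": 0.5, "human_video": 0.3, "synthetic": 0.2}
batch = sample_sources(MIXTURE, batch_size=1000)
print({name: batch.count(name) for name in MIXTURE})
```

Tuning these weights is how one trades scarce, high-fidelity real-robot data against abundant human and synthetic data.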

The model was evaluated on standard simulation benchmarks and deployed on the Fourier GR-1 humanoid robot for language-conditioned bimanual manipulation tasks, demonstrating robust performance and data efficiency. Incorporating synthetic data generated by the Isaac GR00T Blueprint resulted in a 40% improvement in performance compared to using only real-world data.

At GTC, Nvidia demonstrated a humanoid robot from 1X autonomously performing domestic tidying tasks using a policy post-trained from GR00T N1.

GR00T N1 outperforms state-of-the-art imitation learning baselines and shows potential for generalization, adaptability, and fine-tuned control in manipulation tasks. The training data and task evaluation scenarios for GR00T N1 are publicly available on platforms like Hugging Face and GitHub.

Summary - Nvidia’s AI Models for Physical AI

Nvidia’s Cosmos suite of models, GR00T N1, and the supporting datasets are significant contributions to accelerating physical AI development. To foster research and development, Nvidia has open-sourced the Cosmos models and physical AI datasets and made them available on GitHub and Hugging Face.

Hugging Face: Cosmos-Predict1, Cosmos-Transfer1.

GitHub: Cosmos-Predict1, Cosmos-Reason1, Cosmos-Transfer1, GR00T N1.

Physical AI is a harder nut to crack than LLMs and video AI models, but it builds on advances elsewhere in AI, so its progress is accelerating. By leveraging simulation and AI models, physical AI and robotics will follow in the footsteps of digital AI and, in time, will become our most important AI application, bringing AI into the physical world.
