AI Research Review 25.06.05

Darwin Gödel Machine: Self-Improving Agents, Reflect, Retry, Reward: Reflection as a reward signal, Managing entropy in RL, 80/20 rule: High-entropy token for effective RL, Skywork Open Reasoner 1.

Introduction

This AI research review highlights the progress being made on AI reasoning through evolution, entropy management, and self-improvement. We have two AI research papers on self-improvement, one on self-improving AI agents through evolution, the other on rewarding self-reflection in RL:

Darwin Gödel Machine: Open-Ended Evolution of Self-Improving Agents

Reflect, Retry, Reward: Self-Improving LLMs via Reinforcement Learning

Entropy has become important in analyzing reinforcement learning (RL) for AI model reasoning, because entropy reflects the exploration-exploitation balance that RL algorithms depend on. We have two AI research papers on entropy in RL and one technical report on the Skywork Open Reasoner 1 models, which studies entropy management in RL training:

The Entropy Mechanism of RL for Reasoning Language Models

Beyond the 80/20 Rule: High-Entropy Minority Tokens Drive Effective RL for LLM Reasoning

Skywork Open Reasoner 1 Technical Report

Darwin Godel Machine: Open-Ended Evolution of Self-Improving Agents

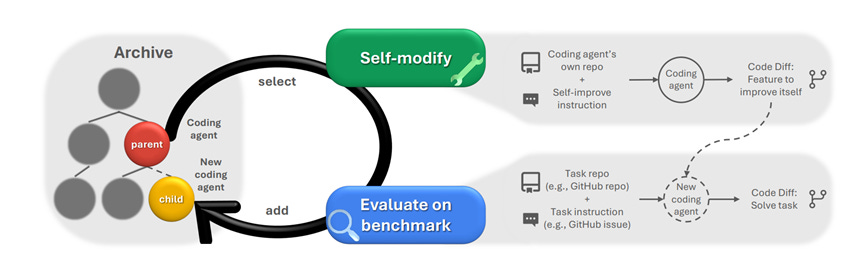

Researchers from Sakana AI have introduced the Darwin Gödel Machine (DGM), an AI agent that can modify its own code to autonomously self-improve, an intriguing idea that, if implemented well, could accelerate AI progress. The paper Darwin Godel Machine: Open-Ended Evolution of Self-Improving Agents proposes an empirically validated, self-modifying system that iteratively refines its own code and self-modification abilities.

They explain how DGM relates to the Gödel machine that inspired it:

The Gödel machine [is] a self-improving AI that repeatedly modifies itself in a provably beneficial manner. Unfortunately, proving that most changes are net beneficial is impossible in practice. We introduce the Darwin Gödel Machine (DGM), a self-improving system that iteratively modifies its own code (thereby also improving its ability to modify its own codebase) and empirically validates each change using coding benchmarks.

The DGM operates with an evolving archive of AI coding agents, powered by frozen AI foundation models. Agents self-modify by analyzing past performance logs to propose and implement AI agent code changes, such as enhancing tools (e.g., granular file editing) or refining workflows (e.g., multiple patch generations). New AI agents are coded from changes and then empirically evaluated on coding benchmarks (SWE-bench, Polyglot). Valid agents are added to the archive, retaining diverse AI agent solutions for open-ended exploration of future improvements.
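The archive loop described above can be sketched in a few lines. This is a toy illustration, not the authors' implementation: an "agent" is just a number, and `evaluate`, `self_modify`, `is_valid`, and the score-weighted parent sampling are all hypothetical stand-ins for benchmark runs, LLM-driven code edits, and the paper's selection rule.

```python
import random

def evaluate(agent):
    # Stand-in for a coding benchmark run: the toy "agent" is a number in [0, 1]
    # and its score is that number (purely illustrative).
    return agent

def self_modify(parent, rng):
    # Stand-in for an agent rewriting its own code: a small random change.
    child = parent + rng.uniform(-0.1, 0.15)
    return min(max(child, 0.0), 1.0)

def is_valid(agent):
    # Stand-in for "the new agent still runs and can still edit code".
    return agent > 0.0

def run_archive_search(initial_agent, iterations, seed=0):
    rng = random.Random(seed)
    archive = [(initial_agent, evaluate(initial_agent))]
    for _ in range(iterations):
        # Sample a parent from the whole archive, weighted toward higher scores,
        # so weaker agents can still act as stepping stones (assumed rule).
        weights = [score + 0.05 for _, score in archive]
        parent, _ = rng.choices(archive, weights=weights, k=1)[0]
        child = self_modify(parent, rng)
        if is_valid(child):
            # Valid agents are kept even if they score worse than their parent.
            archive.append((child, evaluate(child)))
    return archive

archive = run_archive_search(initial_agent=0.2, iterations=50)
best_agent, best_score = max(archive, key=lambda entry: entry[1])
```

The point of the sketch is the shape of the loop: nothing is discarded for being worse than the current best, which is what keeps the search open-ended.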

The DGM process produced AI agents that showed significantly improved capabilities on evaluations, boosting SWE-bench performance from 20.0% to 50.0% and Polyglot from 14.2% to 30.7%. It substantially outperforms baselines lacking self-improvement or open-ended exploration.

The DGM's integration of evolutionary principles with empirical validation offers a compelling demonstration of autonomous self-improving AI. However, the evolutionary algorithm used incurs high computational costs. Future work could utilize more efficient optimization methods and also address potential "objective hacking" risks, paving the way for practical self-improving AI agents.

Reflect, Retry, Reward: Self-Improving LLMs via Reinforcement Learning

Our novel paradigm is thus an exciting pathway to more useful and reliable language models that can self-improve on challenging tasks with limited external feedback. - Authors of “Reflect, Retry, Reward: Self-Improving LLMs via Reinforcement Learning”

As with the above DGM work, the paper Reflect, Retry, Reward: Self-Improving LLMs via Reinforcement Learning addresses the challenge of getting AI to self-improve. The authors observe that an LLM’s own reflection and judgment can be used as an evaluation signal. This paper introduces a novel reinforcement learning framework that trains LLMs to generate more effective self-reflections; rewarding good reflections bootstraps better overall performance.

The core problem addressed is improving LLM performance on complex, verifiable tasks where generating synthetic training data is infeasible and only binary success/failure feedback is available. The paper's key contribution is a task-agnostic methodology that teaches models to optimize their self-correction process rather than specific task performance, leading to more robust and reliable LLMs.

The “Reflect, Retry, Reward” methodology operates in two stages. Upon an initial task failure, the LLM generates a self-reflective commentary analyzing its previous attempt. Subsequently, the model attempts the task again, incorporating its self-reflection into the context. If this second attempt succeeds, only the tokens generated during the self-reflection phase are rewarded. Training uses Group Relative Policy Optimization (GRPO), which can handle sparse, outcome-based rewards.
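A minimal sketch of the reward assignment follows, assuming toy callables in place of a real model and verifier. The names `run_episode`, `per_token_reward`, and the toy task are hypothetical, and the GRPO step that turns rewards into group-relative advantages is omitted.

```python
def run_episode(task, attempt, reflect, verify):
    """Return (segments, reward); segments are (role, tokens) pairs."""
    first = attempt(task, None)
    if verify(task, first):
        # Success on the first try: there is no reflection to reward.
        return [("first_attempt", first)], 0.0
    reflection = reflect(task, first)
    second = attempt(task, reflection)
    reward = 1.0 if verify(task, second) else 0.0
    segments = [
        ("first_attempt", first),
        ("reflection", reflection),
        ("second_attempt", second),
    ]
    return segments, reward

def per_token_reward(segments, reward):
    # Only reflection tokens carry the reward; all other tokens get zero.
    return [reward if role == "reflection" else 0.0
            for role, tokens in segments for _ in tokens]

# Toy stand-ins (assumptions): the task is the expected answer string.
def toy_attempt(task, reflection):
    return ["4"] if reflection else ["5"]

def toy_reflect(task, failed_attempt):
    return ["check", "the", "sum"]

def toy_verify(task, answer_tokens):
    return answer_tokens[-1] == task

segments, reward = run_episode("4", toy_attempt, toy_reflect, toy_verify)
rewards = per_token_reward(segments, reward)
```

In this toy run the first attempt fails, the retry succeeds, and only the three reflection tokens receive a nonzero reward.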

Experimental results show substantial performance gains, “as high as 34.7% improvement at math equation writing and 18.1% improvement at function calling.” Furthermore, the training showed minimal catastrophic forgetting on diverse benchmarks, suggesting a general improvement in reasoning.

Much as process-based reward models do, the method rewards the model for producing a better self-reflection process rather than directly rewarding final success. This indirect reward fosters generalized reasoning skills and is practical for tasks with automatic verifiers.

The Entropy Mechanism of RL for Reasoning Language Models

Entropy is a measure of uncertainty; in this context, it describes how spread out the probability distribution of an RL policy or an LLM's output is. The paper The Entropy Mechanism of Reinforcement Learning for Reasoning Language Models addresses a critical issue: the collapse of policy entropy in reinforcement learning (RL) for reasoning LLMs.

The authors observe "entropy collapse", with entropy drops occurring early in RL training; this lack of diversity in outputs leads to limited model change and RL performance saturation. Their core contribution is an empirical law that relates entropy and RL performance:

In practice, we establish a transformation equation R=-a*e^H+b between entropy H and downstream performance R. This empirical law strongly indicates that the policy performance is traded from policy entropy, thus bottlenecked by its exhaustion, and the ceiling is fully predictable H=0, R=-a+b. Our finding necessitates entropy management for continuous exploration toward scaling compute for RL.
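To make the quoted law concrete, here is a small sketch that evaluates R = -a·e^H + b. The coefficients `a` and `b` below are hypothetical placeholders, not values fitted in the paper.

```python
import math

def predicted_performance(entropy, a, b):
    # Empirical law quoted above: R = -a * e^H + b
    return -a * math.exp(entropy) + b

a, b = 0.2, 0.7  # hypothetical coefficients, for illustration only

# The performance ceiling is reached when entropy is exhausted (H = 0): R = b - a.
ceiling = predicted_performance(0.0, a, b)

# Under this law (with a > 0), lower entropy always predicts higher performance.
curve = [(h, predicted_performance(h, a, b)) for h in (1.0, 0.5, 0.25, 0.0)]
```

With a positive `a`, performance can only rise as entropy falls, and it stops rising once entropy reaches zero, which is why the authors describe entropy as a resource that gets spent.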

Their mathematical derivation shows that the change in entropy is approximately the negative covariance between an action's log-probability and the change in its logit. For policy-gradient-like algorithms, this logit change is proportional to the action's advantage. Higher covariance therefore leads to faster loss of policy entropy, which limits RL scaling.

In plain language, further increasing the probability of outputs that are already high-probability and high-advantage does little to improve performance, because those outputs are already learned. When entropy is low, RL fails to explore the solution space and therefore fails to improve.
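The covariance relation can be checked numerically for a single softmax distribution. The sketch below is an illustration under stated assumptions: the logits and the small logit update `delta` are made up, and the check only covers the first-order approximation for one state, not the paper's full derivation.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def covariance_under(probs, xs, ys):
    # Covariance of xs and ys when actions are drawn from probs.
    mean_x = sum(p * x for p, x in zip(probs, xs))
    mean_y = sum(p * y for p, y in zip(probs, ys))
    return sum(p * (x - mean_x) * (y - mean_y) for p, x, y in zip(probs, xs, ys))

logits = [2.0, 1.0, 0.0, -1.0]       # hypothetical policy logits
delta = [0.001, 0.0, -0.0005, 0.0]   # hypothetical small logit update

probs = softmax(logits)
log_probs = [math.log(p) for p in probs]

new_probs = softmax([z + d for z, d in zip(logits, delta)])
actual_change = entropy(new_probs) - entropy(probs)
predicted_change = -covariance_under(probs, log_probs, delta)
```

Here the update raises the logit of the most likely action, so the covariance is positive and both `actual_change` and `predicted_change` come out negative and nearly equal.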

Since the policy “trades” entropy for performance, managing (limiting) entropy loss during training is key to scaling RL. The authors observe that only a small portion of tokens exhibit extremely high covariance, and so they control entropy loss in RL training by restricting the update of high-covariance tokens:

Specifically, we propose two simple yet effective techniques, namely Clip-Cov and KL-Cov, which clip and apply KL penalty to tokens with high covariances, respectively. …
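A simplified sketch of the token-selection idea follows. It scores each token by the product of its centered log-probability and centered advantage, then flags the highest-scoring fraction. The top-fraction rule, the function names, and the sample values are assumptions for illustration; the paper's exact selection rules and hyperparameters for Clip-Cov and KL-Cov are not reproduced here.

```python
def token_covariances(log_probs, advantages):
    # Per-token contribution to the covariance between log-probability and advantage.
    n = len(log_probs)
    mean_lp = sum(log_probs) / n
    mean_adv = sum(advantages) / n
    return [(lp - mean_lp) * (adv - mean_adv)
            for lp, adv in zip(log_probs, advantages)]

def high_covariance_mask(log_probs, advantages, fraction):
    # Flag the top `fraction` of tokens by covariance contribution (assumed rule).
    covs = token_covariances(log_probs, advantages)
    k = max(1, int(len(covs) * fraction))
    ranked = sorted(range(len(covs)), key=lambda i: covs[i], reverse=True)
    top = set(ranked[:k])
    return [i in top for i in range(len(covs))]

# Hypothetical per-token values for one sampled response.
log_probs = [-0.1, -2.0, -0.2, -1.5, -0.05]
advantages = [1.0, -0.5, 0.8, -1.0, 1.2]

mask = high_covariance_mask(log_probs, advantages, fraction=0.2)
```

Flagged tokens would then either be excluded from the gradient update (the clipping flavor) or given an extra KL penalty (the KL flavor), while all other tokens are trained as usual.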

Experiments on Qwen2.5 models (7B, 32B) in mathematical reasoning show significant improvements. Clip-Cov and KL-Cov yielded average gains of 2.0% for 7B and 6.4% for 32B over GRPO.

This result demonstrates that by controlling entropy and avoiding policy entropy collapse, the RL training can sustain further exploration and achieve better reasoning performance in the trained model.

High-Entropy Tokens Drive Effective RL for LLM Reasoning

These findings indicate that the efficacy of RLVR primarily arises from optimizing the high-entropy tokens that decide reasoning directions. - From “Beyond the 80/20 Rule: High-Entropy Minority Tokens Drive Effective Reinforcement Learning for LLM Reasoning”

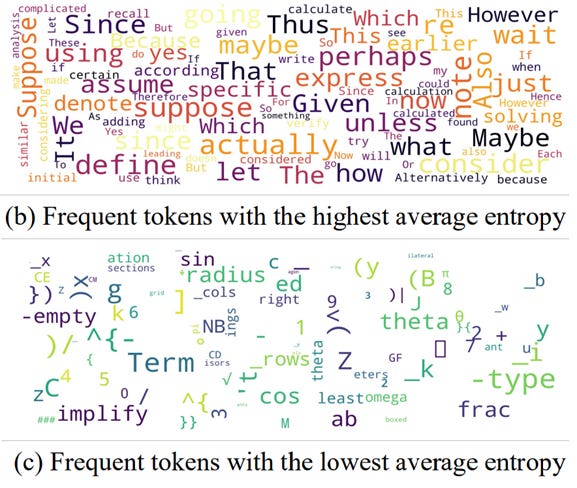

The paper Beyond the 80/20 Rule: High-Entropy Minority Tokens Drive Effective Reinforcement Learning for LLM Reasoning introduces a novel token entropy perspective: Reinforcement Learning with Verifiable Rewards (RLVR) for LLMs primarily benefits from optimizing a small subset of "high-entropy" tokens. These tokens act as crucial "forks" in Chain-of-Thought (CoT) reasoning, where targeted gradient updates significantly enhance LLM performance.

The authors analyze token entropy in LLM chains of thought, finding that most tokens are low-entropy, but a critical minority are high-entropy “forks” for reasoning decisions. RL with verifiable rewards primarily adjusts these high-entropy tokens. The authors take advantage of this fact to restrict policy gradient updates (via DAPO as a baseline) to only the top 20% highest-entropy tokens per batch, effectively ignoring the low-entropy majority.
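The masking step can be sketched as follows, assuming we already have the model's next-token distribution at each position. The function names and the toy distributions are hypothetical; in real training the mask would be applied to per-token policy-gradient losses across a batch.

```python
import math

def token_entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def top_entropy_mask(distributions, keep_fraction=0.2):
    # Keep only the highest-entropy positions; ties at the threshold are kept too.
    entropies = [token_entropy(d) for d in distributions]
    k = max(1, math.ceil(len(entropies) * keep_fraction))
    threshold = sorted(entropies, reverse=True)[k - 1]
    return [h >= threshold for h in entropies]

def masked_mean(losses, mask):
    # Average the loss over kept tokens only; masked-out tokens contribute nothing.
    kept = [loss for loss, keep in zip(losses, mask) if keep]
    return sum(kept) / len(kept)

# Five toy positions: only the second one is a near-uniform "fork".
distributions = [
    [0.97, 0.01, 0.01, 0.01],
    [0.25, 0.25, 0.25, 0.25],
    [0.90, 0.05, 0.03, 0.02],
    [0.99, 0.005, 0.003, 0.002],
    [0.85, 0.05, 0.05, 0.05],
]
mask = top_entropy_mask(distributions, keep_fraction=0.2)
loss = masked_mean([0.3, 1.2, 0.4, 0.1, 0.5], mask)
```

With these toy values only the fork position survives the mask, so the averaged loss is driven entirely by that token.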

This focused optimization yielded substantial performance improvements for larger models. On Qwen3-32B, limiting gradients to the 20% highest-entropy tokens raised AIME’25 by 11.0 points (from 45 to 56) and AIME’24 by 7.7 points, achieving new state-of-the-art results for models under 600B parameters. The gains grow with model size, and the results also show improved generalization and response accuracy on out-of-distribution datasets.

This insightful work shows a minority of critical tokens drive nearly all performance gains in RL for reasoning; the effectiveness of high-entropy tokens may lie in their ability to enhance exploration. This insight yields more efficient and more scalable RL training methods.

Skywork Open Reasoner 1

Skywork Open Reasoner 1 (OR1) is a suite of open AI reasoning models, 7B and 32B, that are built from DeepSeek-R1 distilled models and achieve remarkably strong AI reasoning performance. The Skywork-OR1-32B model scores 79.7 on AIME24, 69.0 on AIME25, and 63.9 on LiveCodeBench, delivering DeepSeek-R1 performance in a 32B model and surpassing Qwen3-32B. The Skywork-OR1-7B and Skywork-OR1-Math-7B models are also competitive on reasoning for their size, the latter scoring 69.8 on AIME24.

The Skywork Open Reasoner 1 Technical Report shares technical details of how this was achieved. Skywork-OR1 is an effective and scalable RL implementation specifically designed to enhance the reasoning capabilities of LLMs generating long Chains-of-Thought (CoT). The researchers’ core contribution is MAGIC, a refined RL recipe that leverages a modified Group Relative Policy Optimization (GRPO) algorithm.

The "MAGIC" recipe integrates several key components: rigorous data collection with offline and online filtering, multi-stage training that progressively increases context length for efficiency, and high-temperature sampling to boost exploration. Further, the policy loss adopts a token-level approach with adaptive entropy control, which dynamically adjusts the entropy loss coefficient to prevent premature entropy collapse and maintain exploration.
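One way to picture adaptive entropy control is a simple feedback rule: raise the entropy-bonus coefficient when measured entropy falls below a target, and lower it otherwise. The sketch below is an assumption-laden illustration; the update rule, `target_entropy`, `step`, and `max_coef` are hypothetical choices, not the values or exact rule from the Skywork report.

```python
def update_entropy_coefficient(coef, entropy, target_entropy,
                               step=0.005, max_coef=0.1):
    # Push the coefficient up when the policy is too deterministic,
    # and relax it when entropy is at or above the target (assumed rule).
    if entropy < target_entropy:
        coef += step
    else:
        coef -= step
    # Keep the coefficient within [0, max_coef].
    return min(max(coef, 0.0), max_coef)

# Hypothetical entropy readings over five training steps.
observed_entropies = [0.5, 0.3, 0.15, 0.1, 0.25]
coef = 0.0
history = []
for h in observed_entropies:
    coef = update_entropy_coefficient(coef, h, target_entropy=0.2)
    history.append(coef)
```

In this toy trace the coefficient stays at zero while entropy is healthy, rises when entropy dips below the target, and eases off once entropy recovers.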

The authors conducted an empirical investigation into factors influencing policy entropy dynamics through ablation experiments. They determined that increased off-policy updates (via more SGD steps or data reuse) accelerate entropy collapse, and mitigating policy entropy collapse is critical to improved test performance. On-policy training significantly mitigates this issue.

These results confirm the importance of entropy control in RL training, in line with the entropy-focused papers presented above. The broader takeaway is that there is still room to make RL more efficient (as the 80/20 paper shows) and to scale RL by managing entropy loss.

Skywork has open-sourced their Skywork-OR1 models and the training dataset resources to further support research on RL-based AI reasoning optimization.

We also release a Notion Blog to share detailed training recipes and extensive experimental results, analysis, and insights, dedicated to helping the community to better research, understand, and push the frontier of open reasoning models. - Skywork OR1 team