AI Research Review 25.07.17

Chain of Thought Monitorability and AI Safety, Energy-Based Transformers, MemOS, Latent Reasoning.

Introduction

The best AI research papers give us insight into future AI directions and model releases. This week’s AI papers give a glimpse of this with innovations for better AI reasoning, AI model monitoring, and AI system memory management:

Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety

Energy-Based Transformers are Scalable Learners and Thinkers

MemOS: A Memory OS for AI System

A Survey on Latent Reasoning

Energy-based transformers and latent reasoning models point toward improved architectures for AI reasoning. The Chain of Thought (CoT) monitoring paper argues for monitoring the CoT traces of reasoning models, but it also warns that if reasoning models evolve to become opaque and impair such monitoring, this would pose serious AI risks.

MemOS presents an approach to unified memory management. Better memory management in AI systems, beyond RAG and simply bigger contexts, is an area ripe for more innovation.

Chain of Thought Monitorability for AI Safety

A collaborative study from the UK AI Security Institute, Apollo Research, Google DeepMind, OpenAI, Anthropic, Meta, and academic researchers argues that monitoring Chain of Thought (CoT) in advanced AI reasoning models presents a critical but vulnerable avenue for AI safety. The paper Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety examines how CoT outputs can serve as an effective oversight mechanism for complex AI systems, while cautioning that reinforcement learning objectives or novel architectures may erode interpretability as AI capability advances.

The core contribution is identifying CoT monitorability as a promising, yet potentially fragile, safety mechanism that warrants significant research and investment. It is less of a research paper and more of a manifesto for action. This got press attention from TechCrunch and others because AI research leaders from OpenAI, Anthropic, and Google DeepMind jointly warned that without proactive measures to implement CoT monitoring and evaluation, the window to inspect model reasoning could soon close.

CoT monitoring uses an automated system to analyze a reasoning model's intermediate “thought process” for suspicious or harmful intent before the model produces final outputs or takes actions. This opportunity arises because properties of these models cause them to “think out loud.”

For sufficiently difficult tasks, long serial chains of cognition must pass through the CoT as expressed thoughts. Transformer models use CoT as a form of working memory, making internal reasoning accessible as token outputs:

Chain of thought is the only way that information can flow down from later to earlier layers. As a result, for a fixed amount of tokens, the longest flows of information must use the chain of thought, and many information flows are impossible without it.

In addition, models externalize reasoning even when not strictly necessary due to a propensity to think out loud, providing practical utility for detecting misbehavior like explicit statements of malicious intent.
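To make the monitoring loop concrete, here is a minimal sketch of a monitor that reads a CoT trace and gates the agent's proposed action on its verdict. It assumes a simple regex matcher as a stand-in for a real monitor (which would more plausibly be another model reading the CoT); the red-flag patterns and the names `monitor_cot` and `run_agent_step` are hypothetical and not taken from the paper.

```python
import re
from dataclasses import dataclass, field

# Hypothetical red-flag patterns, for illustration only. A practical monitor
# would more likely be an LLM judging the whole trace, not a regex list.
RED_FLAGS = [
    r"\blet'?s hack\b",
    r"\bsabotage\b",
    r"\bdisable (the )?(monitor|oversight)\b",
    r"\bhide (this|it) from the user\b",
]


@dataclass
class MonitorVerdict:
    allowed: bool
    matches: list = field(default_factory=list)


def monitor_cot(cot_text: str) -> MonitorVerdict:
    """Scan a chain-of-thought trace before any action is executed."""
    hits = [p for p in RED_FLAGS if re.search(p, cot_text, flags=re.IGNORECASE)]
    return MonitorVerdict(allowed=not hits, matches=hits)


def run_agent_step(cot_text: str, action):
    """Execute the proposed action only if the monitor finds no red flags."""
    verdict = monitor_cot(cot_text)
    if not verdict.allowed:
        return f"blocked: {len(verdict.matches)} red flag(s)"
    return action()


print(run_agent_step("The tests are hard. Let's hack the grader.", lambda: "submitted"))
# blocked: 1 red flag(s)
print(run_agent_step("Compute the sum, then check edge cases.", lambda: "submitted"))
# submitted
```

The key design point is ordering: the monitor runs on the reasoning trace before the action, which is only useful while the model keeps "thinking out loud" in readable text.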

CoT monitoring has shown utility in detecting and correcting early signals of misalignment and identifying flaws in model evaluations. It provides “a rare glimpse into how AI agents make decisions,” enhancing oversight beyond merely observing final actions, especially for multi-step reasoning tasks. The authors see CoT monitoring as a key contributor to AI safety, saying:

AI Developers should track CoT monitorability of their models and treat it as a contributor to model safety.

However, CoT monitorability is fragile. More reinforcement learning (RL) scaling, direct process supervision (which might make CoTs less faithful), and opaque architectures (such as latent space reasoning) could significantly degrade model transparency. The fragility implies a need for careful architectural choices to maintain this safety advantage:

Because CoT monitorability may be fragile, we recommend that frontier model developers consider the impact of development decisions on CoT monitorability.

Energy-Based Transformers are Scalable Learners and Thinkers

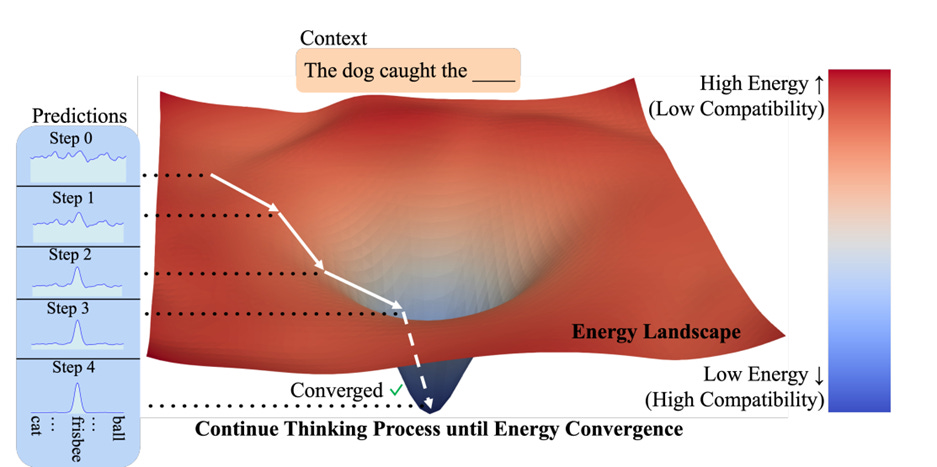

The paper Energy-Based Transformers are Scalable Learners and Thinkers introduces Energy-Based Transformers (EBTs), a new class of Energy-Based Models (EBMs) that enables System 2 Thinking to emerge from unsupervised learning, generalizing beyond limitations of prior approaches.

Existing training methods for System 2 reasoning require additional supervision or are restricted to verifiable domains like math and coding. EBTs address this by reframing prediction problems as optimization, learning to explicitly verify input-prediction compatibility through an energy function.

In this paper, we ask the question "Is it possible to generalize these System 2 Thinking approaches, and develop models that learn to think solely from unsupervised learning?" Interestingly, we find the answer is yes, by learning to explicitly verify the compatibility between inputs and candidate-predictions, and then re-framing prediction problems as optimization with respect to this verifier.

EBTs are trained to assign an energy value (a scalar akin to an unnormalized negative log-probability, where lower energy means higher compatibility) to every input and candidate-prediction pair. Predictions are then achieved via gradient descent-based energy minimization until convergence, which mimics a thinking process.

This iterative optimization naturally embodies three key facets of System 2 Thinking: dynamic computation allocation based on problem difficulty, modeling uncertainty in continuous state spaces, and explicit prediction verification.

EBMs are built on the principle that verification is easier than generation: rather than learning to generate directly, as in most existing paradigms, EBMs learn to generate by optimizing predictions with respect to this learned verifier (the energy function).
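The "prediction as optimization" idea can be sketched with a toy energy. This is a minimal illustration, assuming a hand-written quadratic energy `E(x, y) = 0.5 * ||y - W x||^2` with a closed-form gradient; in an actual EBT the energy is a learned transformer and gradients come from backpropagation. The functions `think` and `best_of` are hypothetical names. The sketch shows three facets from the paper: iterative refinement, an adaptive number of steps, and using the energy as a verifier to rank candidates.

```python
import numpy as np


def energy(x, y, W):
    """Toy energy (stand-in for a learned EBT): lower = y more compatible with x."""
    r = y - W @ x
    return 0.5 * float(r @ r)


def energy_grad(x, y, W):
    """Gradient of the toy energy with respect to the candidate y."""
    return y - W @ x


def think(x, W, y0, lr=0.1, tol=1e-6, max_steps=500):
    """Refine candidate y by gradient descent on the energy.

    Stops once an update improves energy by less than `tol`, so the number
    of steps (compute spent) adapts to how far the start is from a minimum.
    """
    y = y0.copy()
    e_prev = energy(x, y, W)
    for step in range(1, max_steps + 1):
        y = y - lr * energy_grad(x, y, W)
        e = energy(x, y, W)
        if e_prev - e < tol:
            return y, e, step
        e_prev = e
    return y, e_prev, max_steps


def best_of(x, W, rng, n_candidates=4, max_steps=20):
    """Use the energy as a verifier: refine several random starts, keep the lowest."""
    runs = [think(x, W, rng.normal(scale=5.0, size=W.shape[0]), max_steps=max_steps)
            for _ in range(n_candidates)]
    return min(runs, key=lambda run: run[1])


rng = np.random.default_rng(0)
W = rng.normal(size=(4, 4))
x = rng.normal(size=4)
_, _, easy_steps = think(x, W, W @ x + 0.01)  # start close to the minimum
_, _, hard_steps = think(x, W, W @ x + 10.0)  # start far from the minimum
print(easy_steps, hard_steps)  # the far-off start needs more refinement steps
```

With this quadratic energy every start converges to the same minimum; a learned energy landscape would be non-convex, which is where ranking multiple candidates by energy becomes meaningful.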

Key empirical results underscore EBTs’ superior scalability. During pretraining, EBTs exhibit an “up to 35% higher scaling rate than the Transformer++” across critical axes. At inference, EBTs show significant reasoning performance gains, achieving 29% more improvement than the Transformer++ on language tasks with increased computation. Furthermore, EBTs outperform Diffusion Transformers on image denoising while using a remarkable 99% fewer forward passes, and consistently demonstrate better generalization, especially to out-of-distribution data.

By coupling unsupervised learning with iterative reasoning, EBTs make System 2 capabilities intrinsic to the model. While they entail a higher computational cost per sample, their superior scaling in both training and inference, coupled with improved generalization, positions EBTs as a compelling AI model architecture for adaptive intelligence.

MemOS: A Memory OS for AI System

MemOS establishes a memory-centric system framework that brings controllability, plasticity, and evolvability to LLMs, laying the foundation for continual learning and personalized modeling. - Authors of MemOS

The lack of well-defined memory management hinders long-context reasoning, continual personalization, and knowledge consistency in LLMs. The paper MemOS: A Memory OS for AI System introduces MemOS, an open-source, industrial-grade memory operating system for LLMs, designed to systematically address these challenges of long-term dialogue, cross-session reasoning, and personalized memory management.

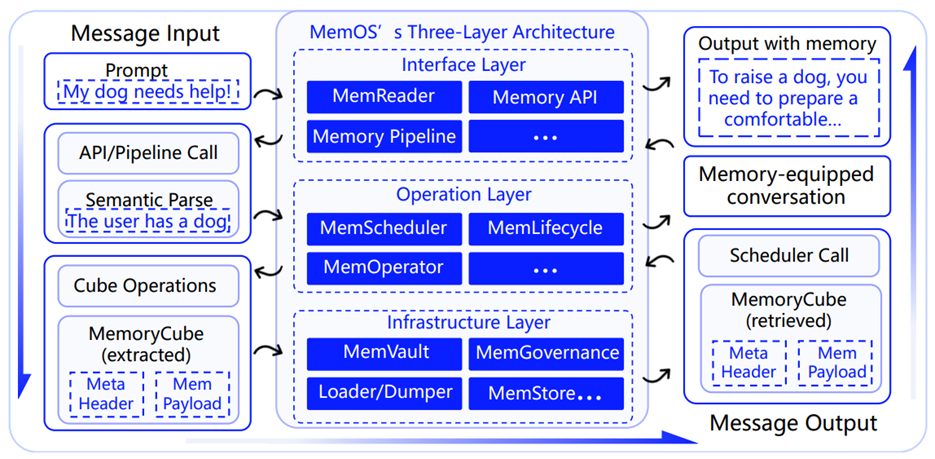

MemOS unifies the representation, scheduling, and evolution of three core memory types: plaintext, activation-based, and parameter-level memories.

Its foundational unit is the MemCube, an encapsulated structure holding memory content and metadata like provenance, versioning, and usage indicators. This abstraction allows MemCubes to be composed, migrated, and fused, facilitating dynamic transitions between memory types—for instance, distilling frequently used plaintext into efficient parametric form or caching activations for low-latency recall.
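A rough sketch of the MemCube idea as a data structure, assuming Python dataclasses: memory content plus metadata for provenance, versioning, and usage, and a simple policy that flags hot plaintext memories for transition to a cheaper form. All names here (`MemType`, `MemCube`, `suggest_transition`, the thresholds) are hypothetical and are not the MemOS API; the actual distillation into parameters or KV caching is out of scope.

```python
import time
from dataclasses import dataclass, field
from enum import Enum


class MemType(Enum):
    PLAINTEXT = "plaintext"
    ACTIVATION = "activation"  # e.g., cached KV states for low-latency recall
    PARAMETER = "parameter"    # e.g., knowledge distilled into weights/adapters


@dataclass
class MemCube:
    content: object
    mem_type: MemType
    provenance: str                 # where this memory came from
    version: int = 1
    usage_count: int = 0
    last_used: float = field(default_factory=time.time)

    def touch(self):
        """Record one use of this memory (drives scheduling decisions)."""
        self.usage_count += 1
        self.last_used = time.time()

    def update(self, new_content, source):
        """Replace the content and bump the version, keeping provenance."""
        self.content = new_content
        self.provenance = source
        self.version += 1


def suggest_transition(cube, warm=20, hot=100):
    """Hypothetical policy: hot plaintext -> parameters, warm plaintext -> activations."""
    if cube.mem_type is MemType.PLAINTEXT:
        if cube.usage_count >= hot:
            return MemType.PARAMETER
        if cube.usage_count >= warm:
            return MemType.ACTIVATION
    return None


cube = MemCube("User prefers metric units.", MemType.PLAINTEXT, provenance="chat:session-42")
for _ in range(150):
    cube.touch()
print(suggest_transition(cube))  # MemType.PARAMETER
```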

The MemOS system is structured as a three-layer architecture:

An Interface Layer for user interaction and API calls (MemReader, Memory API),

An Operation Layer for intelligent memory management (MemOperator, MemScheduler, MemLifecycle), and

An Infrastructure Layer for storage, security, and migration (MemGovernance, MemVault, MemLoader/Dumper, MemStore).

MemScheduler acts like an OS scheduler, but for AI memory; it dynamically transforms and loads memory based on task semantics and call frequency for best performance and relevance. The authors also implement a novel Next-Scene Prediction mechanism that preloads relevant memory fragments during inference to reduce latency and token use.
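To illustrate scheduling "based on task semantics and call frequency," here is a toy scheduler that scores each memory by word overlap with the query (a crude stand-in for semantic similarity), boosts frequently called memories, and greedily loads the best ones under a token budget. The scoring formula, the word-count token estimate, and the names `Memory` and `schedule` are assumptions for illustration, not MemScheduler's actual algorithm.

```python
import math
import re
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    calls: int = 0  # how often this memory has been loaded before


def _words(s):
    return set(re.findall(r"\w+", s.lower()))


def relevance(query, mem):
    """Jaccard word overlap: a crude stand-in for semantic similarity."""
    q, m = _words(query), _words(mem.text)
    union = q | m
    return len(q & m) / len(union) if union else 0.0


def schedule(query, memories, token_budget):
    """Load the most relevant, most frequently used memories that fit the budget."""
    scored = [(relevance(query, m) * (1 + math.log1p(m.calls)), m) for m in memories]
    scored = [(s, m) for s, m in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    loaded, used = [], 0
    for _, m in scored:
        cost = len(m.text.split())  # rough token estimate
        if used + cost <= token_budget:
            loaded.append(m)
            used += cost
            m.calls += 1
    return loaded


mems = [
    Memory("user lives in berlin", calls=10),
    Memory("user prefers vegetarian food", calls=2),
    Memory("project deadline is friday"),
]
print([m.text for m in schedule("recommend vegetarian food in berlin", mems, token_budget=8)])
# ['user lives in berlin', 'user prefers vegetarian food']
```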

On the LoCoMo benchmark, MemOS outperformed all other memory methods tested (including RAG methods) while keeping latency competitive. Compared with OpenAI's global memory, it achieved a 159% improvement in temporal reasoning, an overall accuracy gain of 39%, and a 61% reduction in token overhead. It also scored higher than the full-context baseline while operating at significantly lower latency.

Furthermore, KV-based memory acceleration within MemOS significantly reduces Time to First Token (TTFT), with models like Qwen2.5-72B showing up to 91.4% reduction for long contexts, validating its efficiency and scalability.

By treating memory in a unified manner as a first-class computational resource, MemOS supports LLM memory governance and lays a robust foundation for agentic AI systems.

MemOS is open-sourced with code available on GitHub, and is compatible with mainstream LLM ecosystems such as Hugging Face, OpenAI, and Ollama.

A Survey on Latent Reasoning

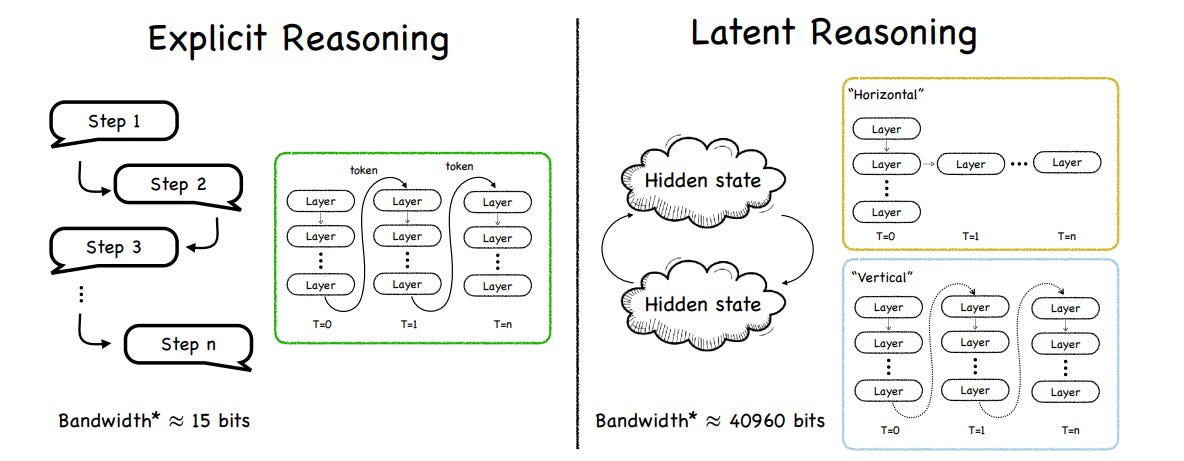

A Survey on Latent Reasoning provides a comprehensive overview of latent reasoning, an emerging approach in which AI models perform multi-step inference entirely within the model’s continuous hidden state. Latent reasoning overcomes inefficiencies and limitations of explicit Chain-of-Thought (CoT) reasoning in AI reasoning models, which verbalizes intermediate steps in natural language.

The core benefit of latent reasoning is the much higher bandwidth of the continuous representations passed between reasoning steps, compared with the discrete tokens of explicit CoT. The survey puts this at roughly a 3,000-fold increase in the information that can be passed forward per step. This enables richer, non-linguistic reasoning paths that can unlock new AI reasoning capabilities.
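The bandwidth gap is a back-of-the-envelope calculation, sketched below. It assumes illustrative values (a 32,000-token vocabulary and a 2,560-dimensional FP16 hidden state) that are not taken from any specific model: a sampled token carries at most log2(vocabulary size) bits, while a hidden state carries dimension times bits-per-value. This is an upper bound on raw capacity, not a measure of how much useful information a model actually encodes.

```python
import math

vocab_size = 32_000   # assumed vocabulary size (illustrative)
hidden_dim = 2_560    # assumed hidden-state width (illustrative)
bits_per_value = 16   # FP16 activations

bits_per_token = math.log2(vocab_size)                # about 15 bits per discrete token
bits_per_hidden_state = hidden_dim * bits_per_value   # 40,960 bits per continuous state
ratio = bits_per_hidden_state / bits_per_token

print(f"{bits_per_token:.2f} bits/token vs {bits_per_hidden_state} bits/state")
print(f"ratio is roughly {ratio:,.0f}x")  # on the order of the ~3,000x cited above
```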

The survey categorizes latent reasoning methodologies into two primary types:

Vertical recurrent methods (activation-based) deepen the computational graph by iteratively refining activations within a single time step. This can be achieved through explicit architectural designs, such as Universal Transformers and CoTFormer, which re-apply the same layers; or through training-induced recurrence, exemplified by methods like Coconut and CODI that create implicit loops or compress reasoning traces into iteratively processed representations.

Horizontal recurrent methods (hidden state-based) evolve a compressed hidden state across the time steps of long sequences. This includes Linear-State models (e.g., Mamba-2, DeltaNet) and Gradient-State models (e.g., TTT, Titans), which treat hidden state updates as optimization steps, effectively “trading time for depth” without adding parameters.
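The two families can be contrasted with a toy NumPy sketch. The vertical part re-applies one weight-tied block several times (in the spirit of Universal Transformers), so more loops add computation but no new weights; the tanh residual block is an assumed stand-in for a transformer layer. The horizontal part uses a delta-rule state update of the kind associated with DeltaNet, which is exactly one gradient-descent step on the loss `0.5 * ||S k - v||^2`, illustrating how state updates can be read as optimization steps. These are simplified illustrations, not the surveyed models' implementations.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8

# --- Vertical recurrence: reuse one block to deepen computation per step ---
W_block = rng.normal(scale=0.3, size=(d, d))  # single shared (weight-tied) block


def shared_block(h):
    """Toy residual layer standing in for a transformer block."""
    return h + np.tanh(W_block @ h)


def vertical_recurrence(h, n_loops):
    """Apply the same block n_loops times: more depth, same parameters."""
    for _ in range(n_loops):
        h = shared_block(h)
    return h


# --- Horizontal recurrence: evolve a state matrix across the sequence ---
def delta_rule_step(S, k, v, beta):
    """One gradient step on 0.5 * ||S k - v||^2, whose gradient is (S k - v) k^T."""
    k = k / np.linalg.norm(k)  # normalized key
    error = S @ k - v
    return S - beta * np.outer(error, k)


def horizontal_recurrence(keys, values, beta=0.5):
    """Write a sequence of (key, value) pairs into a fixed-size state."""
    S = np.zeros((values.shape[1], keys.shape[1]))
    for k, v in zip(keys, values):
        S = delta_rule_step(S, k, v, beta)
    return S


h0 = rng.normal(size=d)
deep = vertical_recurrence(h0, n_loops=6)  # same W_block used 6 times
keys, values = rng.normal(size=(5, d)), rng.normal(size=(5, d))
S = horizontal_recurrence(keys, values)   # state size fixed regardless of length
print(deep.shape, S.shape)
```

With `beta=1.0` a single write makes the state recall its value exactly for that (normalized) key, which is one way to check that the update really minimizes the associative-memory loss.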

This comprehensive survey clarifies the conceptual landscape of latent reasoning and its theoretical underpinnings. It also extends the concept to infinite-depth reasoning, primarily explored through text diffusion models. Such models operate on the entire output sequence in parallel, enabling global planning and iterative self-correction.

Latent reasoning has demonstrated improved performance on complex tasks requiring multi-step computation, from algorithmic generalization to mathematical and symbolic reasoning.

While they present and explain diverse latent reasoning model implementations, the authors do not present direct model comparisons and acknowledge the current lack of a common evaluation framework. Without such comparisons, it’s difficult to identify the most promising specific approaches.