AI Research Roundup 24.03.29

ViTAR vision transformer, visual whole-body control (VBC) for robots, Extreme Quantization with HQQ+, Pruning LLM layers, long-form factuality in LLMs, InternLM2 tech report.

Introduction

We are again highlighting AI Research papers and results from the past week. Please comment to let me know what you like and what you’d like improved.

AI research highlights for this week:

VBC: Visual Whole-Body Control for Legged Loco-Manipulation

ViTAR, a Vision Transformer for Multiple Image Resolutions

Extreme Quantization - Towards 1-bit Machine Learning Models

The Unreasonable Ineffectiveness of the Deeper Layers

Long-Form Factuality in LLMs - Using Agents to Check Facts

InternLM2 open-source LLM - Technical Report

VBC: Visual Whole-Body Control for Legged Loco-Manipulation

Robotics researchers led by Xiaolong Wang of UC San Diego have created a four-legged, one-armed robot to address a challenge they call legged loco-manipulation. In the paper VBC: Visual Whole-Body Control for Legged Loco-Manipulation and in demo videos, they show how the robot coordinates its legs and arm to manipulate objects more effectively and “extend its workspace” for tasks like picking up objects:

We propose a framework that can conduct the whole-body control autonomously with visual observations. Our approach, namely Visual Whole-Body Control (VBC), is composed of a low-level policy using all degrees of freedom to track the end-effector manipulator position and a high-level policy proposing the end-effector position based on visual inputs. We train both levels of policies in simulation and perform Sim2Real transfer for real robot deployment.
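To make the two-level structure concrete, here is a toy Python sketch. It is not VBC's implementation: the real policies are neural networks trained in simulation, the high-level policy works from visual input, and the low-level policy drives all the joints of the legs and arm. The function names, the 5 cm offset above the object, the idealized dynamics, and the proportional controller standing in for the learned low-level policy are all hypothetical.

```python
import math


def high_level_policy(object_position):
    """Propose an end-effector target position.

    VBC's high-level policy reads visual observations; this stand-in assumes
    the object's 3D position is already known and aims 5 cm above it.
    """
    x, y, z = object_position
    return (x, y, z + 0.05)


def low_level_policy(ee_position, target, gain=0.5):
    """Return a velocity command that moves the end effector toward the target.

    A proportional controller stands in for VBC's learned whole-body policy,
    which would coordinate legs and arm to track the commanded position.
    """
    return tuple(gain * (t - p) for p, t in zip(ee_position, target))


def run_episode(object_position, ee_start, steps=50, dt=1.0):
    """Plan once with the high level, then track the target with the low level."""
    target = high_level_policy(object_position)
    ee = ee_start
    for _ in range(steps):
        velocity = low_level_policy(ee, target)
        # Idealized dynamics: the end effector moves exactly as commanded.
        ee = tuple(p + dt * v for p, v in zip(ee, velocity))
    return ee, target


final, target = run_episode(object_position=(1.0, 0.5, 0.0), ee_start=(0.0, 0.0, 0.6))
print(f"remaining error: {math.dist(final, target):.2e} m")
```

The split mirrors the division of labor in the quote: the high level decides where the gripper should go, and the low level only has to figure out how to get there. A real system would re-plan as new camera frames arrive rather than planning once.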

Their experimental results and demo videos (see more on their project page) show performance above the baselines when picking up diverse objects in different configurations and environments, including San Diego beaches.

A four-legged, one-armed robot may be the right form factor for many tasks, such as fruit picking, household cleaning chores, and picking up trash on a beach.

ViTAR, a Vision Transformer for Multiple Resolutions

The paper ViTAR: Vision Transformer with Any Resolution introduces a vision transformer model that addresses a key limitation of current Vision Transformers (ViTs). ViTs serve as image encoders, including for multimodal LLMs, but they struggle to scale to image resolutions other than the one they were trained on.
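A quick calculation shows why resolution is a scaling problem for a standard ViT. It assumes square images and the common 16x16-pixel patch size, which are illustrative choices rather than details taken from the ViTAR paper. The token count grows with the square of the image side, and self-attention compares every token with every other token.

```python
def vit_tokens(side_pixels, patch=16):
    """Patch tokens for a square image split into patch x patch tiles (no [CLS] token)."""
    per_side = side_pixels // patch
    return per_side * per_side


base = vit_tokens(224)   # 14 x 14 grid
big = vit_tokens(4032)   # 252 x 252 grid
ratio = big // base
print(base, big, ratio, ratio ** 2)  # → 196 63504 324 104976
```

Under these assumptions, going from 224x224 to 4032x4032 multiplies the token count by 324 and the number of token pairs in self-attention by about 105,000, and a positional embedding learned on a 14x14 grid has to be stretched over a 252x252 one.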

The authors propose two key innovations to help the ViT scale across resolutions:

Firstly, we propose a novel module for dynamic resolution adjustment, designed with a single Transformer block, specifically to achieve highly efficient incremental token integration. Secondly, we introduce fuzzy positional encoding in the Vision Transformer to provide consistent positional awareness across multiple resolutions, thereby preventing overfitting to any single training resolution.
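Here is a minimal sketch of the fuzzy positional encoding idea, based on our reading of the paper's description: during training, each token's grid coordinates are jittered by uniform noise in [-0.5, 0.5] before a learnable positional-embedding grid is sampled with bilinear interpolation, while inference uses the exact coordinates. The function names, the toy 4x4 grid, and the clamping at grid edges are our own assumptions, and a real model would operate on tensors rather than Python lists.

```python
import random


def bilinear_sample(grid, y, x):
    """Bilinearly interpolate a 2-D grid of embedding vectors at fractional (y, x).

    Coordinates are clamped to the grid, so jittered edge tokens stay in range.
    """
    h, w = len(grid), len(grid[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    out = []
    for k in range(len(grid[0][0])):
        top = grid[y0][x0][k] * (1 - dx) + grid[y0][x1][k] * dx
        bottom = grid[y1][x0][k] * (1 - dx) + grid[y1][x1][k] * dx
        out.append(top * (1 - dy) + bottom * dy)
    return out


def positional_embedding(grid, i, j, training, rng=random):
    """Fuzzy PE: jitter the token's (row, col) by U(-0.5, 0.5) during training;
    use the exact position at inference."""
    if training:
        i += rng.uniform(-0.5, 0.5)
        j += rng.uniform(-0.5, 0.5)
    return bilinear_sample(grid, i, j)


# Toy 4x4 "learnable" grid whose 2-D embedding at (r, c) is just [r, c].
grid = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
print(positional_embedding(grid, 1, 2, training=False))  # → [1.0, 2.0]
print(positional_embedding(grid, 1, 2, training=True, rng=random.Random(0)))
```

Because training never presents exactly the same coordinates twice, the model cannot lock onto one fixed positional layout, which is how the authors aim to avoid overfitting to a single training resolution.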

The resulting model, ViTAR (Vision Transformer with Any Resolution), achieves over 80% accuracy at resolutions ranging from 224x224 up to 4032x4032, while reducing computational costs. It also performs well on other tasks, such as segmentation. ViTAR makes vision transformers more flexible, extending them to more tasks and a wider range of image inputs.

Extreme Quantization - Towards 1-bit Machine Learning Models

Towards 1-bit Machine Learning Models is not a formal paper on arXiv but a write-up of experimental results that “showcase the potential of extreme low-bit quantization in machine learning models.”

LLMs are typically trained in 16-bit floating point or higher precision, but quantization can cut that precision so the LLM runs in less memory, for example with 8-bit floating point (FP8), 8-bit integer (INT8), or 4-bit formats. Extreme low-bit quantization takes this to the limit of 2 bits or even 1 bit, and this work does so using a technique called Half-Quadratic Quantization (HQQ).
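For intuition about what cutting precision means, here is a minimal sketch of plain round-to-nearest affine (min-max) quantization of one group of weights. This is not HQQ: HQQ uses the same kind of scale and zero-point representation, but it optimizes the quantization parameters (notably the zero-point) by minimizing a sparsity-promoting error with a half-quadratic solver, and it needs no calibration data. The weight values and helper names below are made up for illustration.

```python
def quantize_group(weights, bits):
    """Round-to-nearest affine quantization of one group of weights.

    Returns integer codes in [0, 2**bits - 1] plus the scale and zero-point
    needed to dequantize: w ~ scale * (code - zero).
    """
    levels = 2 ** bits - 1
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / levels if w_max > w_min else 1.0
    zero = -w_min / scale  # maps w_min to code 0
    codes = [min(max(round(w / scale + zero), 0), levels) for w in weights]
    return codes, scale, zero


def dequantize_group(codes, scale, zero):
    """Map integer codes back to approximate float weights."""
    return [scale * (c - zero) for c in codes]


group = [0.12, -0.40, 0.33, 0.05, -0.21, 0.48, -0.07, 0.26]  # made-up weights
for bits in (4, 2, 1):
    codes, scale, zero = quantize_group(group, bits)
    restored = dequantize_group(codes, scale, zero)
    max_err = max(abs(w - r) for w, r in zip(group, restored))
    print(f"{bits}-bit codes={codes} max error={max_err:.3f}")
```

Each weight's reconstruction error is at most half a quantization step, and that step doubles with every bit removed, which is why naive 2-bit and 1-bit quantization loses so much quality. For scale: 7 billion weights take about 14 GB at 16 bits, about 1.75 GB at 2 bits, and under 0.9 GB at 1 bit, before the overhead of storing per-group scales and zero-points.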

They find that extreme quantization, down to 2-bit or even 1-bit, followed by training just a small fraction of weights, can preserve much of the original model's capabilities. This approach, called HQQ+, extends HQQ with a low-rank adapter that is trained to boost the quantized model's performance.
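HQQ+ keeps the quantized weights frozen and trains only a small low-rank adapter on top of them. Below is a minimal sketch of that forward pass in the generic LoRA form y = W_deq x + B(Ax). The helper names are hypothetical, and the layer size (4096x4096, Llama2-7B's hidden size) and rank 8 are illustrative assumptions, not HQQ+'s published settings.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def adapted_forward(w_deq, lora_a, lora_b, x):
    """y = W_deq x + B (A x)

    w_deq:  d_out x d_in, dequantized from the frozen low-bit weights
    lora_a: r x d_in and lora_b: d_out x r, the only trained parameters
    """
    base = matvec(w_deq, x)
    update = matvec(lora_b, matvec(lora_a, x))
    return [b + u for b, u in zip(base, update)]


def trainable_fraction(d_out, d_in, rank):
    """Adapter parameters as a fraction of the frozen layer's parameters."""
    return rank * (d_in + d_out) / (d_out * d_in)


# A common LoRA initialization sets B to zero, so training starts from
# exactly the quantized model's output.
w = [[1.0, -1.0], [0.5, 2.0]]
a = [[0.1, 0.2]]        # rank 1
b_zero = [[0.0], [0.0]]
print(adapted_forward(w, a, b_zero, [3.0, 1.0]))   # → [2.0, 3.5]
print(f"{trainable_fraction(4096, 4096, 8):.2%}")  # → 0.39%
```

At rank 8, the adapter for a 4096x4096 layer adds about 0.39% of that layer's parameter count, which is the sense in which only "a fraction of the weights" is trained.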

HQQ+ clearly improved on plain HQQ. Starting from the Llama2-7B-chat FP16 model, which averages 53.56 across 3 benchmarks, they quantized to 2-bit and 1-bit and evaluated the quantized models on the same benchmarks:

The 1-bit HQQ model averaged 28.31, while the 1-bit HQQ+ model improved on it with an average of 37.56.

The 2-bit HQQ model averaged 47.08, while the 2-bit HQQ+ model reached 51.11, close to the original FP16 model.
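The comparison is easier to read as a share of the FP16 baseline. The following is simple arithmetic on the averages quoted above, not an additional result from the write-up:

```python
FP16_AVG = 53.56  # Llama2-7B-chat FP16 average over the 3 benchmarks

scores = {
    "HQQ 1-bit": 28.31,
    "HQQ+ 1-bit": 37.56,
    "HQQ 2-bit": 47.08,
    "HQQ+ 2-bit": 51.11,
}

for name, score in scores.items():
    print(f"{name}: {score / FP16_AVG:.1%} of the FP16 average")
# HQQ 1-bit: 52.9% of the FP16 average
# HQQ+ 1-bit: 70.1% of the FP16 average
# HQQ 2-bit: 87.9% of the FP16 average
# HQQ+ 2-bit: 95.4% of the FP16 average
```

By this measure the adapter recovers about 17 percentage points at 1-bit and about 7.5 at 2-bit.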

Our results show that, when training only a fraction of the weights on top of an HQQ-quantized model, the output quality significantly improves even at 1-bit, outperforming smaller full-precision models.

Other work, such as BitNet b1.58, trains LLMs at low precision from scratch. Quantization, however, is more accessible to the open-source community, because it can shrink existing LLMs.

The Unreasonable Ineffectiveness of the Deeper Layers

This could also be titled “The Unreasonable Effectiveness of Pruning LLM Layers.”

The paper “The Unreasonable Ineffectiveness of the Deeper Layers” asks which parameters in an LLM can be pruned without greatly degrading its performance, using a layer-pruning strategy. The surprising finding:

[There is] minimal degradation of performance on different question-answering benchmarks until after a large fraction (up to half) of the layers are removed.

How they pruned and evaluated the models:

We identify the optimal block of layers to prune by considering similarity across layers; then, to "heal" the damage, we perform a small amount of finetuning … specifically quantization and Low Rank Adapters (QLoRA).
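The layer-selection step can be sketched as follows, based on our reading of the paper: for each block of n consecutive layers, compare the hidden state entering the block with the one leaving it using angular distance, and prune the block where the two are most similar. The paper averages this over many input samples, using the final token's hidden state; this toy uses one hand-made 2-D state per layer, the function names are hypothetical, and the healing (QLoRA fine-tuning) step is omitted.

```python
import math


def angular_distance(u, v):
    """Angle between two vectors, scaled to [0, 1] (0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    cos = max(-1.0, min(1.0, dot / norms))  # clamp rounding error before acos
    return math.acos(cos) / math.pi


def best_block_to_prune(hidden_states, n):
    """Return (start, distance) for the n-layer block whose removal changes
    the representation least.

    hidden_states[l] is the input to layer l, so a model with L layers has
    L + 1 entries (the last one is the final layer's output). Removing layers
    start .. start+n-1 feeds hidden_states[start] straight into layer start+n.
    """
    num_layers = len(hidden_states) - 1
    return min(
        (
            (start, angular_distance(hidden_states[start], hidden_states[start + n]))
            for start in range(num_layers - n + 1)
        ),
        key=lambda pair: pair[1],
    )


# Toy 6-layer model with 2-D states; layers 3 and 4 together return the
# state to where it started, so they are the natural block to drop.
states = [[1.0, 0.0], [0.8, 0.6], [0.6, 0.8], [0.6, 0.8], [0.61, 0.79], [0.6, 0.8], [0.0, 1.0]]
start, dist = best_block_to_prune(states, n=2)
print(f"prune layers {start}..{start + 1}, angular distance {dist:.4f}")
```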

The image below summarizes the process, the similarity metric used to choose which layers to prune, and the resulting benchmark scores.

Llama-2-70B showed minimal degradation with up to half of its layers removed, while for the Mistral and Qwen LLMs, degradation set in once 20% to 30% of the layers were removed.

Layer pruning with healing can complement other methods, such as quantization, improving inference efficiency by reducing the LLM's parameter count with little quality degradation.

The work also suggests models have inefficiencies:

From a scientific perspective, the robustness of these LLMs to the deletion of layers implies either that current pretraining methods are not properly leveraging the parameters in the deeper layers of the network or that the shallow layers play a critical role in storing knowledge.

Long-Form Factuality in LLMs - Using AI Agents to Check Facts

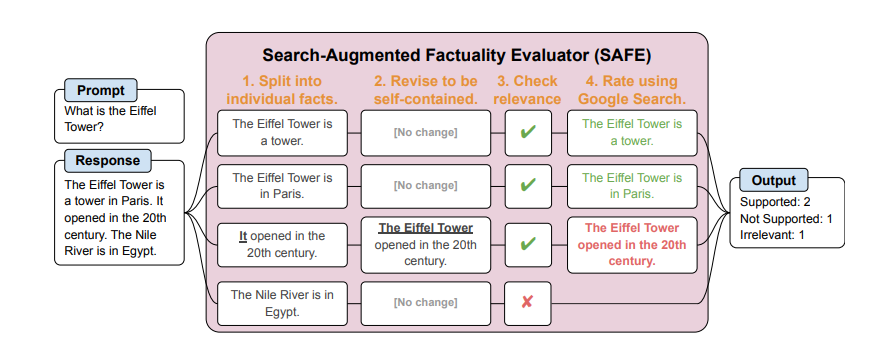

The paper Long-form factuality in large language models from Google DeepMind tackles factual correctness in long-form LLM responses. They propose a prompt set called LongFact; an automated evaluation method called Search-Augmented Factuality Evaluator (SAFE); and a metric for long-form factuality, F1@K, based on the F1 score.
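F1@K combines two counts produced by the fact-checking step. Precision is the fraction of rated facts that are supported; recall compares the number of supported facts with K, a hyperparameter for how many supported facts a user would want, capped at 1. A minimal sketch, as we understand the paper's definition (facts rated irrelevant are left out of both counts; the function name is ours):

```python
def f1_at_k(supported, not_supported, k):
    """F1@K for one response, from counts of supported / not-supported facts.

    precision = supported / (supported + not_supported)
    recall    = min(supported / k, 1)   # K supported facts count as full recall
    """
    if supported == 0:
        return 0.0  # defined as 0 when no fact is supported
    precision = supported / (supported + not_supported)
    recall = min(supported / k, 1.0)
    return 2 * precision * recall / (precision + recall)


print(round(f1_at_k(40, 10, k=64), 3))  # → 0.702
print(round(f1_at_k(40, 10, k=40), 3))  # → 0.889
```

Capping recall at K means a response is not rewarded for piling on facts beyond K, while precision penalizes every unsupported claim.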

SAFE works as an AI agent to check multiple facts in a single long-form response:

SAFE utilizes an LLM to break down a long-form response into a set of individual facts and to evaluate the accuracy of each fact using a multi-step reasoning process comprising sending search queries to Google Search and determining whether a fact is supported by the search results.
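The control flow of such an agent can be sketched as a loop over facts. This is not SAFE's code: in SAFE each step is carried out by prompting an LLM, with Google Search for retrieval, and it also revises each fact to be self-contained. Here every step is a plain function passed in as a parameter, so the names `split_into_facts`, `is_relevant`, `next_query`, `search`, and `judge`, the query budget, and the toy stubs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Ratings:
    supported: int = 0
    not_supported: int = 0
    irrelevant: int = 0


def rate_response(
    response: str,
    split_into_facts: Callable[[str], List[str]],
    is_relevant: Callable[[str], bool],
    next_query: Callable[[str, List[str]], str],
    search: Callable[[str], List[str]],
    judge: Callable[[str, List[str]], bool],
    max_queries: int = 3,  # hypothetical search budget per fact
) -> Ratings:
    """Rate every individual fact in a long-form response against search results."""
    ratings = Ratings()
    for fact in split_into_facts(response):
        if not is_relevant(fact):
            ratings.irrelevant += 1
            continue
        evidence: List[str] = []
        for _ in range(max_queries):
            # Multi-step: each new query may depend on the evidence gathered so far.
            evidence.extend(search(next_query(fact, evidence)))
        if judge(fact, evidence):
            ratings.supported += 1
        else:
            ratings.not_supported += 1
    return ratings


# Toy stubs in place of LLM calls and a real search engine.
corpus = ["Paris is the capital of France."]
result = rate_response(
    "Paris is the capital of France.\nParis is the capital of Spain.\nHope this helps!",
    split_into_facts=str.splitlines,
    is_relevant=lambda fact: not fact.startswith("Hope"),
    next_query=lambda fact, evidence: fact,
    search=lambda query: [doc for doc in corpus if doc == query],
    judge=lambda fact, evidence: fact in evidence,
)
print(result)
```

The supported and not-supported counts returned here are exactly the inputs the F1@K metric needs.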

The results show that their SAFE agents “achieve superhuman rating performance” on annotating facts. On their test of about 16,000 individual facts:

SAFE agrees with crowdsourced human annotators 72% of the time, and on a random subset of 100 disagreement cases, SAFE wins 76% of the time.

Aside from showing that newer, larger LLMs achieve better long-form factuality, they point out that “SAFE is more than 20 times cheaper than human annotators.” So we’ve gone from worrying about AI hallucinating to finding a way to automate fact-checking with AI agents.

InternLM2 open-source LLM - Technical Report

The InternLM2 Technical Report comes from a large team of AI researchers in China, hailing from Shanghai AI Laboratory, SenseTime Group, The Chinese University of Hong Kong, and Fudan University. They introduce InternLM2, an open-source LLM that comes in 7B and 20B variants:

InternLM2 outperforms its predecessors in comprehensive evaluations across 6 dimensions and 30 benchmarks, long-context modeling, and open-ended subjective evaluations through innovative pre-training and optimization techniques.

Their report shows that InternLM2 achieves excellent results across benchmarks, with their 7B model surpassing prior SOTA models such as Mistral 7B.

This Technical Report is notable less for its model results than for its in-depth details on InternLM2's training and architecture. Many developers no longer share LLM training details, even for “open” AI models, whose weights are open while little else is. This report, by contrast, is both open and detailed:

The pre-training process of InternLM2 is meticulously detailed, highlighting the preparation of diverse data types including text, code, and long-context data. InternLM2 efficiently captures long-term dependencies, initially trained on 4k tokens before advancing to 32k tokens in pre-training and fine-tuning stages, exhibiting remarkable performance on the 200k “Needle-in-a-Haystack” test. InternLM2 is further aligned using Supervised Fine-Tuning (SFT) and a novel Conditional Online Reinforcement Learning from Human Feedback (COOL RLHF) strategy that addresses conflicting human preferences and reward hacking.
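For readers unfamiliar with it, a Needle-in-a-Haystack test hides one fact (the needle) at varying depths inside a long filler context and checks whether the model can retrieve it. Below is a minimal sketch of the prompt construction and scoring. The helper names, filler text, and substring-match scoring are hypothetical, the model call is left out, and real tests measure context length in tokens (up to 200k here) rather than in sentences.

```python
def build_haystack(filler, needle, depth):
    """Insert `needle` among filler sentences at a relative depth.

    depth=0.0 puts it at the very start, depth=1.0 at the very end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(filler))
    return " ".join(filler[:position] + [needle] + filler[position:])


def retrieved(model_answer, expected):
    """Score an answer by whether it contains the expected fact (case-insensitive)."""
    return expected.lower() in model_answer.lower()


filler = [f"Filler sentence number {i}." for i in range(1000)]
needle = "The secret passphrase is blue-harbor-42."
for depth in (0.0, 0.25, 0.5, 0.75, 1.0):
    prompt = build_haystack(filler, needle, depth) + "\nWhat is the secret passphrase?"
    # answer = model.generate(prompt)  # hypothetical model call, not shown
    print(depth, round(prompt.index(needle) / len(prompt), 2))
```

A full evaluation repeats this across many context lengths and depths and reports a pass/fail grid, which is the usual way Needle-in-a-Haystack results are visualized.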

Their openness gives AI researchers a better understanding of how the LLM was built, and it will help others seeking to replicate their efforts and build new LLMs:

By releasing InternLM2 models in different training stages and model sizes, we provide the community with insights into the model's evolution.