AI Research Roundup 24.04.19

VASA-1 talking heads, ResearchAgent, GoEX runtime for autonomous agents, Stable Audio 2.0 music generation, Megalodon unlimited context, AlphaLLM improves LLM reasoning with search and critiques.

Introduction

Another busy week on all fronts of AI. Here are our AI research highlights for this past week:

VASA-1: Lifelike Audio-Driven Talking Faces Generated in Real Time

Stable Audio 2.0: Generating Long-form Music with Latent Diffusion

Megalodon: Unlimited Context Length LLMs

ResearchAgent: Automating Research Idea Generation with LLM Agents

GoEX: Towards a Runtime for Autonomous LLM Applications

Improving LLMs via Imagination, Searching, and Criticizing

VASA-1: Lifelike Audio-Driven Talking Faces Generated in Real Time

The headline result this week came from Microsoft: VASA-1, an AI model that can turn a single image and an audio track into an expressive talking-head video. Similar to EMO from Alibaba, this model shows incredible realism.

Their project page shows some incredible, must-see demos. Our cover art is a still from a video clip in which they turned the Mona Lisa into a very expressive rapper. As they themselves claim:

VASA-1, is capable of not only producing lip movements that are exquisitely synchronized with the audio, but also capturing a large spectrum of facial nuances and natural head motions that contribute to the perception of authenticity and liveliness.

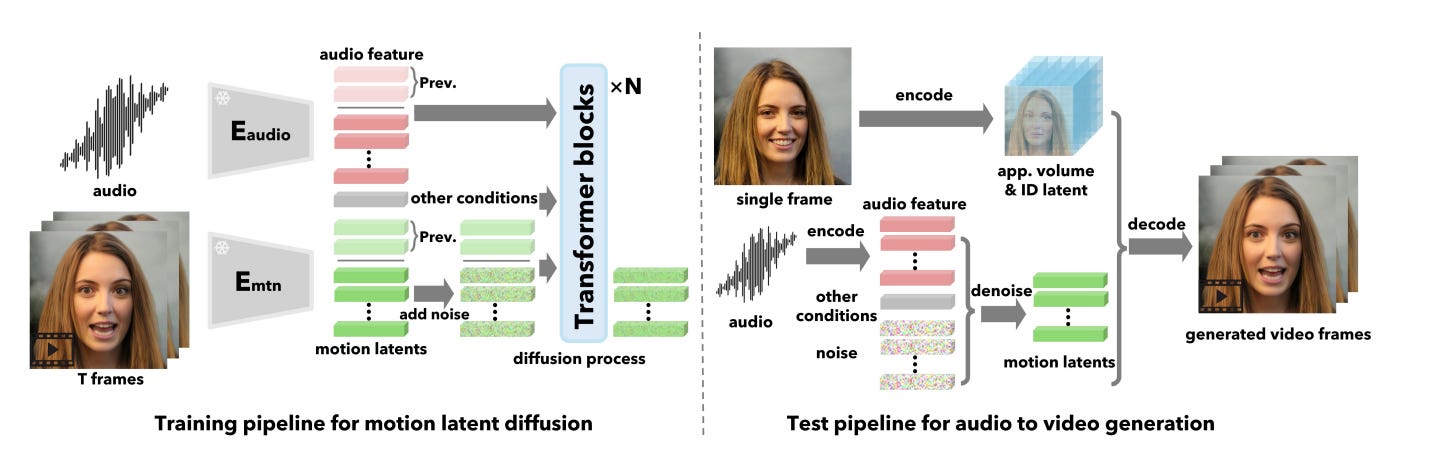

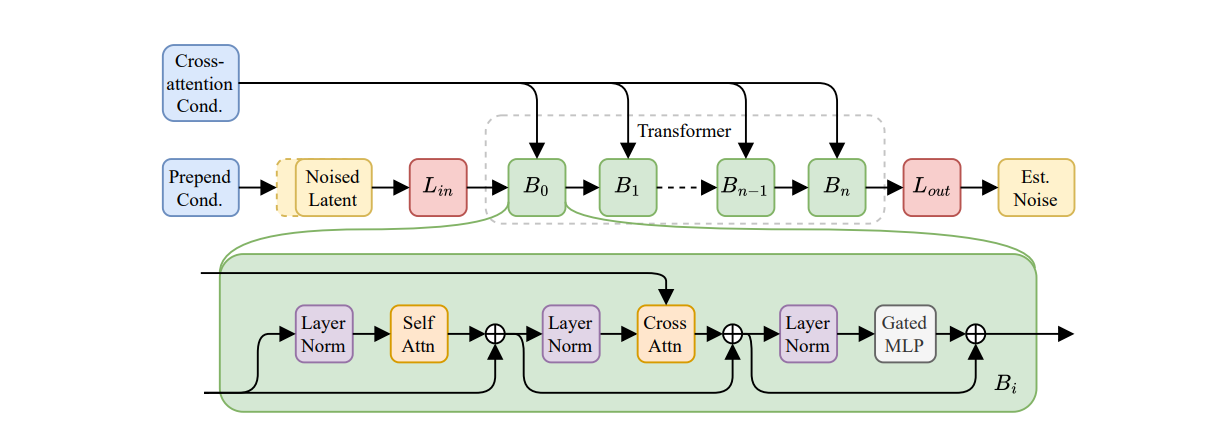

They shared further details on the model, training process, and results in their paper “VASA-1: Lifelike Audio-Driven Talking Faces Generated in Real Time.” The model is based on diffusion transformers, and they trained it on videos from the VoxCeleb2 dataset.

The technical cornerstone is an innovative holistic facial dynamics and head movement generation model that works in an expressive and disentangled face latent space.

VASA-1 is so good that we can’t have it. Microsoft doesn’t want to release it into the wild without figuring out how to prevent its misuse for deepfakes, a legitimate concern given its realism.

“Given such context, we have no plans to release [a VASA-1] online demo, API, product, additional implementation details, or any related offerings until we are certain that the technology will be used responsibly and in accordance with proper regulations.” - VASA-1 project authors

Stable Audio 2.0: Generating Long-form Music with Latent Diffusion

The creators of Stable Audio 2.0 released a paper on their model, “Long-form music generation with latent diffusion.” Stable Audio 2.0 can generate high-fidelity instrumental music up to 4 minutes long.

Suno V3 and Udio are impressive music generation applications, but they are proprietary, so it’s helpful to have a more open AI model like Stable Audio 2.0.

Stable Audio 2.0 uses a latent diffusion model based on diffusion transformers (DiT) that operates on a highly downsampled continuous latent representation (a latent rate of 21.5 Hz). The model can reproduce the large-scale structure needed to generate coherent music over several minutes, yielding high-quality musical compositions.
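To see why a 21.5 Hz latent rate matters, here is some back-of-the-envelope arithmetic. It assumes 44.1 kHz input audio (our assumption for illustration; check the paper for the exact configuration) and counts time steps for a 4-minute track before and after encoding.

```python
SAMPLE_RATE_HZ = 44_100  # assumed raw audio sample rate (CD quality)
LATENT_RATE_HZ = 21.5    # latent rate reported for Stable Audio 2.0


def time_steps(duration_s: float) -> tuple[int, int]:
    """Return (raw audio samples per channel, latent frames) for a clip."""
    audio_samples = round(duration_s * SAMPLE_RATE_HZ)
    latent_frames = round(duration_s * LATENT_RATE_HZ)
    return audio_samples, latent_frames


samples, frames = time_steps(4 * 60)  # a 4-minute track
print(f"{samples:,} audio samples -> {frames:,} latent frames "
      f"(~{samples / frames:,.0f}x shorter sequence)")
# prints: 10,584,000 audio samples -> 5,160 latent frames (~2,051x shorter sequence)
```

Roughly 5,000 latent positions instead of over 10 million raw samples helps explain how a transformer can model structure across an entire multi-minute piece.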

Megalodon: Unlimited Context Length LLMs

Many efforts have been made to address the limits on LLM context window length, including alternative architectures such as state space models (like Mamba). The paper “Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length” tackles this challenge with Megalodon, an architecture for efficient sequence modeling with unlimited context length.

The Megalodon architecture improves on prior work, Mega (exponential moving average with gated attention), which itself modifies the transformer attention mechanism to allow for longer (unlimited) context understanding.
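The moving-average half of Mega is easy to sketch. Below is a single-channel damped EMA, h_t = α·x_t + (1 − α·δ)·h_{t−1}, in plain Python. This is a simplified illustration: Mega itself learns a multi-dimensional version per channel and pairs it with gated attention, while alpha and delta here are hand-picked constants.

```python
def damped_ema(xs: list[float], alpha: float, delta: float) -> list[float]:
    """Damped exponential moving average over a sequence.

    h_t = alpha * x_t + (1 - alpha * delta) * h_{t-1}, starting from h_0 = 0.
    alpha in (0, 1) weights the new input; delta in (0, 1] damps the history.
    """
    h = 0.0
    out = []
    for x in xs:
        h = alpha * x + (1.0 - alpha * delta) * h
        out.append(h)
    return out


# An impulse decays geometrically; each step only needs the previous state h.
print(damped_ema([1.0, 0.0, 0.0, 0.0], alpha=0.5, delta=1.0))
# [0.5, 0.25, 0.125, 0.0625]
```

Because each step carries only a fixed-size state, the cost grows linearly with sequence length, which is part of what makes EMA-based layers attractive for long contexts.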

[Megalodon] further introduces multiple technical components to improve its capability and stability, including complex exponential moving average (CEMA), timestep normalization layer, normalized attention mechanism and pre-norm with two-hop residual configuration.
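As we read the paper, CEMA extends the damped EMA used in Mega into the complex domain: both terms of the recurrence are multiplied by a rotation cos θ + i sin θ, and the output is taken from the real part. Here is a minimal single-channel sketch of that idea. The scalar parameters and the plain real-part readout (in place of a learned output projection) are our simplifications, not Megalodon's exact parameterization.

```python
import cmath


def complex_ema(xs, alpha, delta, theta):
    """Single-channel complex EMA (a simplified take on Megalodon's CEMA).

    h_t = alpha * r * x_t + (1 - alpha * delta) * r * h_{t-1},  r = e^{i*theta}
    Output is Re(h_t). With theta = 0 this reduces to a real damped EMA.
    """
    rot = cmath.exp(1j * theta)  # cos(theta) + i*sin(theta)
    h = 0j
    out = []
    for x in xs:
        h = alpha * rot * x + (1.0 - alpha * delta) * rot * h
        out.append(h.real)
    return out


impulse = [1.0, 0.0, 0.0, 0.0, 0.0]
print(complex_ema(impulse, 0.5, 1.0, theta=0.0))           # monotone decay
print(complex_ema(impulse, 0.5, 1.0, theta=cmath.pi / 2))  # decaying oscillation
```

The rotation lets the impulse response oscillate while it decays, a pattern a real EMA with a decay factor in (0, 1) cannot produce.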

They show that Megalodon trains more efficiently than the transformer-based Llama2 7B, and they demonstrate its ability to model sequences of unlimited context length.

ResearchAgent: Automating Research Idea Generation with LLM Agents

AI has the potential to accelerate scientific research by automating research tasks. The paper “ResearchAgent: Iterative Research Idea Generation over Scientific Literature with Large Language Models” presents ResearchAgent, an LLM-based AI agent that targets a key part of scientific research: generating research ideas.

ResearchAgent is an LLM-based agent for writing research ideas. It starts with a core paper as its focus and automatically generates problems, methods, and experiment designs, iteratively refining them based on the scientific literature. The authors augment the agent with a knowledge store for exploring related concepts, as well as “ReviewingAgents that provide reviews and feedback iteratively.”
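The generate, review, and refine cycle can be sketched as plain control flow. In this sketch the generate, reviewer, and refine callables are hypothetical stand-ins for LLM calls, and the `related` list stands in for the knowledge store; it is not ResearchAgent's actual prompting or code.

```python
from typing import Callable, List, Optional

# Hypothetical interfaces: in a real system each callable would wrap an LLM prompt.
Generate = Callable[[str, List[str]], str]  # (core paper, related concepts) -> idea
Review = Callable[[str], Optional[str]]     # idea -> feedback, or None if satisfied
Refine = Callable[[str, List[str]], str]    # (idea, feedback list) -> revised idea


def research_agent(core_paper: str, related: List[str], generate: Generate,
                   reviewers: List[Review], refine: Refine,
                   max_rounds: int = 3) -> str:
    """Draft an idea from a core paper, then alternate review and refinement."""
    idea = generate(core_paper, related)
    for _ in range(max_rounds):
        feedback = [fb for fb in (review(idea) for review in reviewers) if fb]
        if not feedback:  # every reviewer is satisfied, so stop early
            break
        idea = refine(idea, feedback)
    return idea


# Toy stand-ins so the loop runs end to end.
def toy_generate(paper: str, related: List[str]) -> str:
    return f"Idea based on {paper} using {', '.join(related)}"


def baseline_reviewer(idea: str) -> Optional[str]:
    return None if "baseline" in idea else "Compare against a baseline."


def toy_refine(idea: str, feedback: List[str]) -> str:
    return idea + " with a baseline comparison"


print(research_agent("Paper A", ["concept X"], toy_generate,
                     [baseline_reviewer], toy_refine))
# Idea based on Paper A using concept X with a baseline comparison
```

Capping the loop with `max_rounds` keeps a never-satisfied reviewer from running the agent forever.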

In their experiments, judged by both human and model-based evaluations, ResearchAgent generates novel, clear, and valid research ideas. This result suggests that AI can accelerate progress in science and increase productivity in intellectual tasks.

GoEX: Towards a Runtime for Autonomous LLM Applications

Gorilla has been a groundbreaking development in giving LLMs the ability to use functions and tools, broadening the scope of what LLMs can do for us beyond the chat interface. The developers of Gorilla have a new paper that showcases new capabilities and explores how to make autonomous agents work: “GoEX: Perspectives and Designs Towards a Runtime for Autonomous LLM Applications.”

The question this paper tries to address is: How do we enable an untrusted agent to take sensitive actions (e.g., code generation, API calls, and tool use) on a user’s behalf and then verify that those actions align with the intent of that user’s request?

Moving towards autonomous systems requires validating the correctness of untrusted agent actions. The authors argue that “post-facto validation” (checking an action after it has been taken) is easier than validating it beforehand. Their answer to agent unreliability is to undo an action when it turns out to be wrong, and to confine the damage when a complete undo is not possible.
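Post-facto validation with an undo fallback fits in a few lines. The `Action` type, its `undo` hook, and the numeric risk threshold below are hypothetical illustrations of the idea, not GoEX's API: actions run first and are validated afterwards, reversible ones are undone on rejection, and irreversible ones are allowed only when their potential damage is small.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    """Hypothetical wrapper around one agent action (API call, file edit, ...)."""
    name: str
    run: Callable[[], object]
    undo: Optional[Callable[[], object]] = None  # None means the action is irreversible
    risk: int = 0                                 # hypothetical "blast radius" score


def execute_post_facto(action: Action, approve: Callable[[Action], bool],
                       max_irreversible_risk: int = 1) -> str:
    """Run first, validate afterwards, and undo or confine damage on rejection."""
    if action.undo is None and action.risk > max_irreversible_risk:
        return "blocked"  # damage confinement: never run risky irreversible actions
    action.run()
    if approve(action):   # post-facto validation, e.g. asking the user
        return "committed"
    if action.undo is not None:
        action.undo()
        return "undone"
    return "kept"         # irreversible, but its damage was bounded by the risk check


inbox: list[str] = []
draft = Action("save draft", run=lambda: inbox.append("draft"), undo=lambda: inbox.pop())
email = Action("send email", run=lambda: inbox.append("sent"), risk=5)

print(execute_post_facto(draft, approve=lambda a: False), inbox)  # undone []
print(execute_post_facto(email, approve=lambda a: True), inbox)   # blocked []
```

The key design choice is the ordering: reversible actions are cheap to try and check afterwards, so the pre-execution gate only has to reason about the irreversible ones.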

They implement their approach in the Gorilla Execution Engine (GoEX), an open-source runtime for executing LLM actions. Its controls for taking corrective action based on user feedback make the autonomous agent system more robust, which broadens its capabilities.
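For databases, a transaction is a natural undo mechanism. This sketch (our illustration using Python's built-in `sqlite3` module, not code from GoEX) runs an untrusted, LLM-generated DML statement, lets a validator inspect the result, and then commits or rolls back. The table and the validator are hypothetical.

```python
import sqlite3
from typing import Callable


def run_with_undo(conn: sqlite3.Connection, sql: str,
                  approve: Callable[[sqlite3.Connection], bool]) -> bool:
    """Execute an untrusted DML statement, then commit if approved, else roll back.

    Relies on sqlite3's default (legacy) transaction handling, which opens a
    transaction implicitly before INSERT/UPDATE/DELETE/REPLACE statements.
    """
    conn.execute(sql)
    if approve(conn):  # post-facto check: the validator sees uncommitted changes
        conn.commit()
        return True
    conn.rollback()    # undo: discard everything since the implicit BEGIN
    return False


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE todos (id INTEGER PRIMARY KEY, task TEXT, done INTEGER)")
conn.executemany("INSERT INTO todos (task, done) VALUES (?, ?)",
                 [("write draft", 1), ("review PR", 0), ("ship", 0)])
conn.commit()


def keeps_open_tasks(c: sqlite3.Connection) -> bool:
    """Hypothetical validator: the two unfinished tasks must survive."""
    return c.execute("SELECT COUNT(*) FROM todos WHERE done = 0").fetchone()[0] == 2


# The user asked to clear finished tasks; a buggy agent deletes everything first.
print(run_with_undo(conn, "DELETE FROM todos", keeps_open_tasks))                 # False
print(run_with_undo(conn, "DELETE FROM todos WHERE done = 1", keeps_open_tasks))  # True
```

Irreversible effects such as sending an email have no equivalent of `rollback()`, which is where damage confinement has to take over.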

Improving LLMs via Imagination, Searching, and Criticizing

LLMs struggle with complex reasoning tasks. Various approaches to improving LLM reasoning have been proposed, including advanced prompting techniques, fine-tuning, and self-critique. A fundamental challenge is that complex reasoning and planning are exploratory, not linear. To reason better, LLMs need a way to explore alternatives iteratively.

The paper “Toward Self-Improvement of LLMs via Imagination, Searching, and Criticizing” improves LLMs on complex reasoning with AlphaLLM, an approach inspired by AlphaGo. AlphaLLM uses tree search to improve the LLM’s performance:

AlphaLLM addresses the unique challenges of combining Monte-Carlo Tree Search (MCTS) with LLM for self-improvement, including data scarcity, the vastness search spaces of language tasks, and the subjective nature of feedback in language tasks. AlphaLLM is comprised of prompt synthesis component, an efficient MCTS approach tailored for language tasks, and a trio of critic models for precise feedback.
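For readers new to MCTS, here is a textbook UCT search (selection, expansion, random rollout, backpropagation) on a toy task: choose three "reasoning steps" from {1, 2, 3} whose sum is 7. This is a generic sketch, not AlphaLLM's tailored MCTS; AlphaLLM works over LLM-generated steps and scores them with learned critics, for which the hypothetical `toy_reward` function is only a stand-in.

```python
import math
import random

ACTIONS = (1, 2, 3)  # toy "reasoning steps" available at each point
DEPTH = 3            # a complete solution has exactly three steps
TARGET = 7           # a solution is correct if its steps sum to TARGET


def toy_reward(path: tuple) -> float:
    """Hypothetical critic: 1.0 for a correct complete solution, else 0.0."""
    return 1.0 if len(path) == DEPTH and sum(path) == TARGET else 0.0


class Node:
    def __init__(self, path: tuple):
        self.path = path    # partial solution so far
        self.children = {}  # action -> Node
        self.visits = 0
        self.value_sum = 0.0

    def is_terminal(self) -> bool:
        return len(self.path) == DEPTH

    def untried(self) -> list:
        return [a for a in ACTIONS if a not in self.children]


def uct(parent: Node, child: Node, c: float = 1.4) -> float:
    """UCT score: average value plus an exploration bonus for rarely visited children."""
    return (child.value_sum / child.visits
            + c * math.sqrt(math.log(parent.visits) / child.visits))


def mcts(iterations: int = 2000, seed: int = 0) -> Node:
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node, trail = root, [root]
        # 1. Selection: follow UCT while the node is fully expanded.
        while not node.is_terminal() and not node.untried():
            parent = node
            node = max(parent.children.values(), key=lambda ch: uct(parent, ch))
            trail.append(node)
        # 2. Expansion: add one untried step.
        if not node.is_terminal():
            action = rng.choice(node.untried())
            node.children[action] = Node(node.path + (action,))
            node = node.children[action]
            trail.append(node)
        # 3. Simulation: finish the solution with random steps, then score it.
        path = node.path
        while len(path) < DEPTH:
            path += (rng.choice(ACTIONS),)
        reward = toy_reward(path)
        # 4. Backpropagation: update every node on the selected trail.
        for n in trail:
            n.visits += 1
            n.value_sum += reward
    return root


def best_path(root: Node) -> tuple:
    """Read out a solution by repeatedly taking the most-visited child."""
    node = root
    while node.children:
        node = max(node.children.values(), key=lambda ch: ch.visits)
    return node.path


print(best_path(mcts()))  # a three-step path whose steps sum to 7
```

The exploration bonus is what makes the search exploratory rather than linear: branches that look weak early still get revisited, just less often.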

Their trio of critic models includes “a value function for estimating future rewards, a process reward model for assessing node correctness, and an outcome reward model for evaluating the overall trajectory.” Process reward models are possibly what is behind Q*, and we discussed them in our article “Getting LLMs To Reason With Process Rewards.”
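The difference between a process reward and an outcome reward is easiest to see on a toy example. Below, a hypothetical rule-based checker stands in for a learned process reward model by scoring each arithmetic step, while the outcome reward only checks the final answer. Taking the minimum step score as the trajectory's process score is one common aggregation choice, used here for illustration.

```python
def process_rewards(steps: list[str]) -> list[float]:
    """Toy PRM: score each 'a+b=c' step 1.0 if the arithmetic holds, else 0.0."""
    scores = []
    for step in steps:
        lhs, rhs = step.split("=")
        a, b = lhs.split("+")
        scores.append(1.0 if int(a) + int(b) == int(rhs) else 0.0)
    return scores


def outcome_reward(steps: list[str], expected: int) -> float:
    """Toy ORM: look only at the final result."""
    return 1.0 if int(steps[-1].split("=")[1]) == expected else 0.0


# Two solutions to 2 + 3 + 1; the second reaches 6 through two canceling errors.
for steps in (["2+3=5", "5+1=6"], ["2+3=4", "4+1=6"]):
    prm = process_rewards(steps)
    print(steps, "PRM:", prm, "min:", min(prm), "ORM:", outcome_reward(steps, 6))
```

The outcome reward gives both solutions full marks; only the step-level scores flag the flawed one.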

Their experimental results show that AlphaLLM improves math reasoning, with much higher GSM8K and MATH benchmark scores than the baseline.