AI Research Roundup 24.05.03

Evaluating LLMs with PoLL, Prometheus 2, and GSM1k for Grade School Math. Plus Med-Gemini for medicine, Iterative Reasoning Preference Optimization, and multi-token prediction for faster and better LLMs.

Introduction

Here are our AI research highlights for this week:

LLM Performance on Grade School Arithmetic

Prometheus 2: A Language Model For Evaluating Language Models

PoLL: Evaluating LLM Generations with a Panel of Diverse Models

Iterative Reasoning Preference Optimization - fine-tuning LLMs for reasoning.

Med-Gemini: Capabilities of Gemini Models in Medicine - Med-Gemini shows we are getting to human-level performance on medical tasks.

Better & Faster Large Language Models via Multi-token Prediction - multi-token prediction can be used to achieve faster and more capable LLMs.

TL;DR - Three papers this week focus on LLM evaluation. Benchmarks can be corrupted by data contamination, as the paper on Grade School Math benchmarks shows. We can scale LLM evaluation using LLMs to evaluate outputs, but there are challenges. Multiple LLMs acting together, as done in PoLL, or specialized evaluation LLMs, such as Prometheus 2, give us better results.

LLM Performance on Grade School Arithmetic

There are many challenges in properly benchmarking LLMs. The capabilities of LLMs have outrun older standard benchmarks, so new benchmarks need to be developed. LLMs vacuum up vast amounts of data for training, so it’s difficult to know whether test questions have been kept out of the training data. Benchmarks like MMLU also have known errors and flaws (as shown by AI Explained).

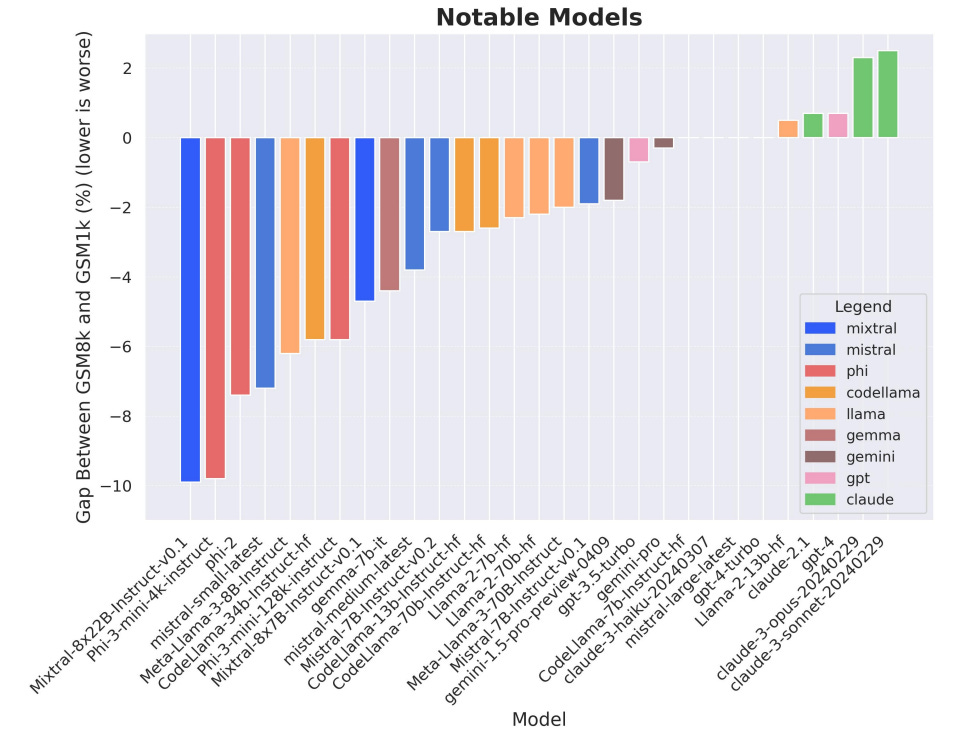

A paper from Scale AI, “A Careful Examination of Large Language Model Performance on Grade School Arithmetic,” examines some of these questions to help better calibrate LLM performance, specifically on GSM8k. The specific concern is that improved GSM8k benchmark results could be due to dataset contamination, “where data closely resembling benchmark questions leaks into the training data,” which compromises the results:

To investigate this claim rigorously, we commission Grade School Math 1000 (GSM1k). GSM1k is designed to mirror the style and complexity of the established GSM8k benchmark, the gold standard for measuring elementary mathematical reasoning. We ensure that the two benchmarks are comparable across important metrics such as human solve rates, number of steps in solution, answer magnitude, and more.

Because GSM1k mirrors GSM8k in everything except the specific test questions, a drop in accuracy from GSM8k to GSM1k points to possible data contamination. They found that some families of LLMs (e.g., Phi and Mistral) had accuracy drops and evidence of overfitting to GSM8k, while others (e.g., Gemini/GPT/Claude) showed minimal signs of overfitting.
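The comparison behind this finding can be sketched as a simple accuracy gap between the public benchmark and the freshly written one. This is only an illustration: the function names and the toy predictions below are made up, and the paper's actual analysis is more careful than a single subtraction.

```python
def accuracy(predictions, answers):
    """Fraction of predictions that exactly match the reference answers."""
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def overfit_gap(public_acc, fresh_acc):
    """Accuracy drop, in percentage points, from the public to the fresh benchmark."""
    return 100 * (public_acc - fresh_acc)


# Toy, made-up results for one hypothetical model (not data from the paper).
gsm8k_acc = accuracy([18, 3, 70, 5], [18, 3, 70, 5])  # 4 of 4 correct
gsm1k_acc = accuracy([12, 9, 41, 7], [12, 8, 41, 6])  # 2 of 4 correct

# A large positive gap hints that the model may have seen GSM8k-like data.
gap = overfit_gap(gsm8k_acc, gsm1k_acc)
print(f"GSM8k: {gsm8k_acc:.0%}, GSM1k: {gsm1k_acc:.0%}, gap: {gap:.1f} points")
```

A gap near zero suggests the model's GSM8k score reflects real ability; a large gap is the warning sign the paper looks for.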

This paper shows LLMs can have contamination or overfitting that skews their results on benchmarks, so one takeaway is not to over-rely on specific benchmarks to assess LLMs.

Prometheus 2: A Language Model For Evaluating Language Models

To scale the evaluation of LLM output, we have turned to other LLMs. High-quality LLMs such as GPT-4 can produce evaluations that correlate highly with human evaluations.

However, the best evaluation LLMs are proprietary models, so the paper “Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models” addresses the gap by developing an open-source LLM for evaluation - “Prometheus 2, a more powerful evaluator LM than its predecessor that closely mirrors human and GPT-4 judgements.”

To build Prometheus 2, they start with Mistral-7B and Mixtral-8x7B as base models, train separate evaluator LMs for direct assessment and for pairwise ranking, and then merge the weights to make the unified Prometheus 2 models.
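To make the merging step concrete, here is a minimal sketch of one common way to merge two models that share an architecture: linear interpolation of their parameters. The interpolation weight `alpha`, the parameter names, and the tiny weight lists are all assumptions for illustration; the summary above only says the weights are merged, and real models hold tensors rather than Python lists.

```python
def merge_linear(weights_a, weights_b, alpha=0.5):
    """Interpolate two models' parameters that share the same names and shapes."""
    if weights_a.keys() != weights_b.keys():
        raise ValueError("models must have identical parameter names")
    merged = {}
    for name, values_a in weights_a.items():
        values_b = weights_b[name]
        # alpha = 1.0 keeps model A unchanged, alpha = 0.0 keeps model B.
        merged[name] = [alpha * a + (1 - alpha) * b
                        for a, b in zip(values_a, values_b)]
    return merged


# Hypothetical toy "models": one trained for direct assessment,
# one trained for pairwise ranking.
direct_assessment = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
pairwise_ranking = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

unified = merge_linear(direct_assessment, pairwise_ranking)
print(unified)
```

The appeal of merging is that a single set of weights can serve both evaluation formats without running two separate models.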

Prometheus 2 achieves the highest correlation and agreement with humans and proprietary LM judges among all tested open evaluator LMs. The authors share models, code, and data on GitHub.

PoLL: Evaluating LLM Generations with a Panel of Diverse Models

An alternative to using large LLMs like GPT-4 or developing special-purpose models to evaluate LLMs is to apply strength in numbers. The paper “Replacing Judges with Juries: Evaluating LLM Generations with a Panel of Diverse Models” proposes to evaluate models using a Panel of LLM evaluators (PoLL).

The PoLL combines evaluation feedback from models in three disparate families (Command R, Haiku, and GPT-3.5):

Across three distinct judge settings and spanning six different datasets, we find that using a PoLL composed of a larger number of smaller models outperforms a single large judge, exhibits less intra-model bias due to its composition of disjoint model families, and does so while being over seven times less expensive.

Averaging responses from multiple evaluation AI models gets closer to human evaluation responses. The PoLL concept could be generalized to use other LLMs, including open LLMs, as alternatives for LLM output evaluation.
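A rough sketch of how a panel's judgments could be pooled is shown below. The judge names, verdicts, and scores are invented for illustration, and the two pooling functions (majority vote for categorical verdicts, mean for numeric scores) are assumptions about reasonable aggregation choices rather than a reproduction of the paper's code.

```python
from collections import Counter
from statistics import mean


def majority_vote(verdicts):
    """Return the most common verdict among the panel's judges.

    Ties are broken in favor of the verdict that appears first.
    """
    return Counter(verdicts).most_common(1)[0][0]


def average_score(scores):
    """Return the mean of the judges' numeric scores."""
    return mean(scores)


# Hypothetical outputs from three judges drawn from different model families.
panel_verdicts = {"judge_a": "correct", "judge_b": "incorrect", "judge_c": "correct"}
panel_scores = {"judge_a": 4, "judge_b": 3, "judge_c": 5}

final_verdict = majority_vote(list(panel_verdicts.values()))
final_score = average_score(list(panel_scores.values()))
print(final_verdict, final_score)
```

Because each judge comes from a different family, one model's idiosyncratic preferences are less likely to dominate the pooled result.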

Iterative Reasoning Preference Optimization

Building LLMs that reason better is a challenge we discussed in our article “Getting to System 2: LLM Reasoning.” LLM reasoning can be improved through fine-tuning and iterative feedback, but we’ve learned it is more effective to set the rewards on specific steps (“process rewards”).

The paper “Iterative Reasoning Preference Optimization” applies the process rewards concept, by evaluating chains-of-thought, within a fine-tuning framework:

In this work we develop an iterative approach that optimizes the preference between competing generated Chain-of-Thought (CoT) candidates by optimizing for winning vs. losing reasoning steps that lead to the correct answer. …

On each iteration, our method consists of two steps, (i) Chain-of-Thought & Answer Generation and (ii) Preference Optimization, as shown in Figure 1. For the t-th iteration, we use the current model M_t in step (i) to generate new data for training the next iteration’s model M_{t+1} in step (ii).
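The data-building part of one iteration can be sketched roughly as follows. This is a simplification under stated assumptions: a sampled chain-of-thought is treated as a winner when its final answer matches the reference answer, every winner is paired with every loser, and the sample texts and the `build_preference_pairs` helper are hypothetical. The preference-optimization training step itself is not shown.

```python
from itertools import product


def build_preference_pairs(samples, gold_answer):
    """Pair each correct chain-of-thought (winner) with each incorrect one (loser)."""
    winners = [s for s in samples if s["answer"] == gold_answer]
    losers = [s for s in samples if s["answer"] != gold_answer]
    return [(w["cot"], l["cot"]) for w, l in product(winners, losers)]


# Hypothetical candidates sampled from the current model for "(3 + 4) * 2 = ?".
samples = [
    {"cot": "3 + 4 = 7, then 7 * 2 = 14", "answer": "14"},
    {"cot": "3 + 4 = 7, and doubling 7 gives 14", "answer": "14"},
    {"cot": "3 + 4 = 8, then 8 * 2 = 16", "answer": "16"},
]

# Each (winner, loser) pair becomes one training example for the next model.
pairs = build_preference_pairs(samples, gold_answer="14")
for chosen, rejected in pairs:
    print("chosen:", chosen, "| rejected:", rejected)
```

If all sampled candidates are right, or all are wrong, no pairs are produced for that question, so only questions with mixed outcomes contribute training signal in this sketch.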

They show this method is effective at improving reasoning on base models:

… increasing accuracy for Llama-2-70B-Chat from 55.6% to 81.6% on GSM8K, from 12.5% to 20.8% on MATH, and from 77.8% to 86.7% on ARC-Challenge.

Med-Gemini: Capabilities of Gemini Models in Medicine

Google researchers have produced several advanced AI models for healthcare in recent years (for example Med-PaLM). The Med-Gemini suite of models they present in the new paper “Capabilities of Gemini Models in Medicine” achieves SOTA performance on many medical tasks.

The medical application of AI is a challenging task that requires advanced reasoning, accurate medical knowledge and leverage of detailed multimodal data. Gemini has some of the combined capabilities required, but more is needed:

We enhance our models’ clinical reasoning capabilities through self-training and web search integration, while improving multimodal performance via fine-tuning and customized encoders.

Specifically, they built Med-Gemini-M 1.0 by fine-tuning the Gemini 1.0 Pro model and Med-Gemini-L 1.0 by fine-tuning Gemini 1.0 Ultra.

The other key part of their performance is “a novel uncertainty-guided search strategy at inference time to improve performance on complex clinical reasoning tasks.” As shown in the figure, it combines reasoning evaluation and fact-finding (via web search) to get to correct results.
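One way to picture the "uncertainty-guided" part is to sample several answers, measure how much they disagree, and only trigger a search when disagreement is high. The sketch below assumes Shannon entropy over sampled answers as the uncertainty measure and an arbitrary threshold of 0.5; both choices, and the function names, are illustrative assumptions rather than details taken from the paper.

```python
import math
from collections import Counter


def answer_entropy(answers):
    """Shannon entropy (in nats) of the distribution of sampled answers."""
    counts = Counter(answers)
    total = len(answers)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def needs_search(answers, threshold=0.5):
    """Decide whether the samples disagree enough to justify a web search."""
    return answer_entropy(answers) > threshold


# Hypothetical multiple-choice answers sampled from the model for two questions.
confident = ["B", "B", "B", "B"]  # full agreement, entropy is zero
uncertain = ["A", "B", "C", "B"]  # disagreement, entropy is about 1.04

print(needs_search(confident), needs_search(uncertain))
```

In a full system, a positive result would lead to retrieving evidence and asking the model to answer again with that evidence in context.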

On a set of 14 medical benchmarks, Med-Gemini surpasses GPT-4 and achieves SOTA results on 10 of them, including 91.1% accuracy on the MedQA (USMLE) benchmark. Med-Gemini also shows SOTA long-context capabilities on medical information and surpassed human experts on tasks such as medical text summarization.

Better & Faster Large Language Models via Multi-token Prediction

In “Better & Faster Large Language Models via Multi-token Prediction,” Meta researchers propose a method that improves LLM training and can lead to LLM inference speedups, by training language models to predict multiple future tokens at once.

Current LLM training and inference proceed one token at a time, a sequential process that they show can be made more efficient. They propose a multi-head output that consists of a shared trunk and 4 dedicated output heads. During training, the model predicts 4 future tokens at once. Inference can use only the next-token output head, or can optionally use all four heads for speculative decoding to speed up inference.
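To illustrate what "predicting 4 future tokens at once" means for the training data, the sketch below builds the targets each output head would be asked to predict at every position. It covers only target construction, not the model; the function name, the toy token list, and the choice to drop positions with fewer than 4 remaining tokens are assumptions made to keep the example small.

```python
def multi_token_targets(tokens, n_future=4):
    """For each position, list the next n_future tokens the output heads should predict.

    Head 1 predicts targets[0] (the usual next token), head 2 predicts
    targets[1], and so on. Positions without n_future tokens left are skipped.
    """
    examples = []
    for i in range(len(tokens) - n_future):
        current = tokens[i]
        targets = tokens[i + 1 : i + 1 + n_future]
        examples.append((current, targets))
    return examples


# Hypothetical tokenized code snippet.
tokens = ["def", "add", "(", "a", ",", "b", ")"]

examples = multi_token_targets(tokens)
for current, targets in examples:
    print(f"after {current!r}: heads predict {targets}")
```

With `n_future=1` this reduces to ordinary next-token prediction, which is why the extra heads can be treated as an auxiliary task and dropped at inference time.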

The authors found this method improves model performance in areas such as code generation, and that the benefit grows as LLMs scale up:

Considering multi-token prediction as an auxiliary training task, we measure improved downstream capabilities with no overhead in training time for both code and natural language models. The method is increasingly useful for larger model sizes, and keeps its appeal when training for multiple epochs. Gains are especially pronounced on generative benchmarks like coding, where our models consistently outperform strong baselines by several percentage points. Our 13B parameter models solves 12% more problems on HumanEval and 17% more on MBPP than comparable next-token models.

The authors found that model speed can also be improved, as models trained with 4-token prediction are up to 3 times faster at inference.

This paper opens the door to added dimensions of training and inference beyond the token-at-a-time constraint, and so it could be an important path to further large efficiency gains in LLMs.