AI Research Roundup 24.05.24

Anthropic maps the LLM mind of Claude 3 Sonnet, Your transformer model is secretly linear, not all LLM features are linear, LLMs as financial analysts, Uni-MoE is a unified MLLM.

Introduction

How do LLMs really work? As LLMs become more powerful, their inner workings, and the way training maps to activations and intelligence, have become more complex and inscrutable.

Several research papers this week try to untie these knots. A better understanding of AI model internals should help us reduce AI risks and avoid the AI paper-clip apocalypse.

Our AI research paper highlights for this week:

Mapping the Mind of a Large Language Model

Your Transformer is Secretly Linear

Not All Language Model Features Are Linear

Financial Statement Analysis with Large Language Models

Uni-MoE: Unified Multimodal Framework

Mapping the Mind of a Large Language Model

We have identified how millions of concepts are represented inside Claude Sonnet, one of our deployed large language models. This is the first ever detailed look inside a modern, production-grade large language model. This interpretability discovery could, in future, help us make AI models safer.

Anthropic has been trying to decode how LLMs work by Mapping the Mind of a Large Language Model.

Last fall, they showed how to map concepts to specific activation patterns in a small language model, using a sparse autoencoder (SAE) to extract features that each represent a single concept (monosemantic features). The SAE decomposes activations into distinct concepts, helping us understand the internals of an LLM.
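To make the sparse-autoencoder idea concrete, here is a minimal NumPy sketch of a forward pass and loss. The toy sizes, the random untrained weights, and the `l1_coeff` value are illustrative assumptions, not Anthropic's configuration; a real SAE is trained on activations recorded from the model.

```python
import numpy as np

rng = np.random.default_rng(0)

d_model, n_features = 8, 32  # assumed toy sizes; real dictionaries are far larger

# Hypothetical, untrained parameters (a real SAE learns these by gradient descent).
W_enc = rng.normal(scale=0.1, size=(d_model, n_features))
b_enc = np.zeros(n_features)
W_dec = rng.normal(scale=0.1, size=(n_features, d_model))
b_dec = np.zeros(d_model)


def encode(x):
    """Map activations to non-negative feature activations via ReLU."""
    return np.maximum(x @ W_enc + b_enc, 0.0)


def decode(f):
    """Reconstruct activations as a weighted sum of decoder directions."""
    return f @ W_dec + b_dec


def sae_loss(x, l1_coeff=1e-3):
    """Reconstruction error plus an L1 penalty that pushes features toward sparsity."""
    f = encode(x)
    x_hat = decode(f)
    mse = np.mean(np.sum((x - x_hat) ** 2, axis=-1))
    l1 = np.mean(np.sum(np.abs(f), axis=-1))
    return mse + l1_coeff * l1


x = rng.normal(size=(4, d_model))  # stand-in for a batch of model activations
features = encode(x)
loss = sae_loss(x)
```

The dictionary is deliberately wider than the activation space (32 features for 8 dimensions here), and the L1 term is what encourages each input to be explained by only a few active features.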

In their latest paper, “Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet,” they scale up this approach to find interpretable features in Claude 3 Sonnet. Some of their high-level findings:

Resolved features are highly abstract: multilingual, multimodal, and generalizing between concrete and abstract references. For example, they showed that a variety of code errors, differing in syntax and in the kind of error, trigger the same activation pattern. Likewise, a feature for a country fires on its name but also on its description.

There appears to be a systematic relationship between the frequency of concepts and the dictionary size needed to resolve features for them.

Feature vectors, like word vectors, show clustering and relationships.

They observed a variety of features relevant to AI safety: deception, bias, dangerous content, and hateful expression. Mapping features to activations let them adjust feature-related activations and steer model behavior:

If we clamp the secrecy and discreetness feature 1M/268551 to 5×, Claude will plan to lie to the user and keep a secret while “thinking out loud” using a scratchpad.
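Clamping can be pictured as overwriting one feature's activation and then decoding back into the model's activation space. The sketch below is a toy illustration under stated assumptions: the feature values, decoder directions, and index are made up (this is not the real 1M/268551 feature), and "5×" is read here as five times the feature's largest observed activation, which is an assumption.

```python
import numpy as np

rng = np.random.default_rng(1)
n_features, d_model = 6, 4  # assumed toy sizes

# Hypothetical decoder directions and feature activations for one token.
W_dec = rng.normal(size=(n_features, d_model))
features = np.array([0.0, 0.3, 0.0, 1.2, 0.0, 0.1])


def clamp_feature(f, index, value):
    """Return a copy of the feature vector with one feature forced to `value`."""
    out = f.copy()
    out[index] = value
    return out


target = 3      # hypothetical index of the feature being steered
max_seen = 1.2  # assumed largest activation observed for that feature
steered = clamp_feature(features, target, 5 * max_seen)

baseline_act = features @ W_dec  # reconstruction without steering
steered_act = steered @ W_dec    # reconstruction with the feature clamped
shift = steered_act - baseline_act
```

Because decoding is linear, the steering effect is a push along that one feature's decoder direction, scaled by how much the activation was raised.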

This study helps us better understand how concepts are represented internally in AI models, and it provides an approach to adjusting AI models to remove harmful biases and unsafe behaviors. Anthropic’s result here should help make AI models safer and more reliable.

Your Transformer is Secretly Linear

From Russian AI researchers, the paper “Your Transformer is Secretly Linear” challenges assumptions about how transformers’ internal model parameters work.

The paper reveals that in GPT, Llama, and other LLMs, the transformations between sequential decoder layers are surprisingly close to linear (a linearity score near 0.99). They find that linearity decreases during pretraining but increases with fine-tuning.

This has implications for the efficiency of AI models, since linearity across layers implies some redundancy in model weights. Small non-linear contributions can still add up across many layers to produce complex representations, but the most linear blocks are candidates for approximation or model reduction:
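One way to picture such a linearity score is to fit the best linear map from one layer's embeddings to the next and see how little is left unexplained. The sketch below follows that idea with ordinary least squares; the centering and normalization choices, and the synthetic data, are assumptions for illustration rather than the paper's exact procedure.

```python
import numpy as np


def linearity_score(X, Y):
    """1 minus the normalized residual of the best linear map from X to Y."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    Xn = Xc / np.linalg.norm(Xc)  # Frobenius-normalize so scores are comparable
    Yn = Yc / np.linalg.norm(Yc)
    A, *_ = np.linalg.lstsq(Xn, Yn, rcond=None)
    residual = np.linalg.norm(Xn @ A - Yn) ** 2
    return 1.0 - residual


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 16))  # stand-in for embeddings entering a layer

# A nearly linear "layer": a fixed matrix plus a little noise.
Y_linear = X @ rng.normal(size=(16, 16)) + 0.01 * rng.normal(size=(200, 16))
# A strongly non-linear "layer": element-wise squaring.
Y_nonlinear = X ** 2

score_linear = linearity_score(X, Y_linear)
score_nonlinear = linearity_score(X, Y_nonlinear)
```

A score close to 1 means a single matrix reproduces the layer's effect almost exactly, while the squared outputs score far lower because no linear map can track them.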

Our experiments show that removing or linearly approximating some of the most linear blocks of transformers does not affect significantly the loss or model performance.

Reducing layer linearity should make AI models more efficient, so they introduce a cosine-similarity-based regularization; in a small-model experiment, they show that it reduces layer linearity and improves benchmark performance.

This result could explain why approximating LLMs by making them sparse can maintain accuracy. For example, Sparse Llama is reported to be 70% smaller while keeping full accuracy.
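A cosine-similarity-based regularizer can be sketched as an extra loss term computed from the hidden states of adjacent layers. The form below, which penalizes `1 - cos` between consecutive layers, along with the `weight` value, is one plausible reading and should be treated as an assumption; the paper is the reference for the exact term and its sign.

```python
import numpy as np


def cosine_similarity(a, b, eps=1e-8):
    """Row-wise cosine similarity between two (tokens, d_model) arrays."""
    num = np.sum(a * b, axis=-1)
    den = np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps
    return num / den


def cosine_regularizer(hidden_states, weight=0.1):
    """Sum over adjacent layer pairs of the mean (1 - cosine similarity)."""
    penalty = 0.0
    for prev, curr in zip(hidden_states[:-1], hidden_states[1:]):
        penalty += np.mean(1.0 - cosine_similarity(prev, curr))
    return weight * penalty


rng = np.random.default_rng(0)
layer = rng.normal(size=(5, 16))  # stand-in hidden states for 5 tokens

identical_layers = [layer, layer.copy(), layer.copy()]
random_layers = [rng.normal(size=(5, 16)) for _ in range(3)]

penalty_identical = cosine_regularizer(identical_layers)
penalty_random = cosine_regularizer(random_layers)
```

During training, a term like this would be added to the usual language-modeling loss, so the optimizer trades off prediction quality against the relationship between neighboring layers.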

Not All Language Model Features Are Linear

How do representations work in LLMs? Anthropic’s work and the general understanding of LLMs are based on the linear representation hypothesis: Language models perform computation by manipulating one-dimensional representations of concepts (“features”) in activation space.

While this seems to be generally true, the paper “Not All Language Model Features Are Linear” explores the exceptions, showing that some language model representations may be inherently multi-dimensional.

They defined irreducible multi-dimensional features based on whether they can be decomposed into either independent or non-co-occurring lower-dimensional features, and then they used sparse autoencoders to automatically find multi-dimensional features in GPT-2 and Mistral 7B:

These auto-discovered features include strikingly interpretable examples, e.g. circular features representing days of the week and months of the year. We identify tasks where these exact circles are used to solve computational problems involving modular arithmetic in days of the week and months of the year.
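To see why a circle is a natural fit for this kind of task, the sketch below places the days of the week at equally spaced angles and performs "add N days" as a rotation. It illustrates the geometry only; the function names are hypothetical, and it is not a claim about how the models or the paper's analysis compute the result.

```python
import math

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]


def to_circle(index, period=7):
    """Embed an index as a point on the unit circle (a 2-D feature)."""
    angle = 2 * math.pi * index / period
    return (math.cos(angle), math.sin(angle))


def rotate(point, steps, period=7):
    """Rotate a point by `steps` positions around the circle."""
    angle = 2 * math.pi * steps / period
    x, y = point
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))


def from_circle(point, period=7):
    """Read the nearest index back off the circle."""
    angle = math.atan2(point[1], point[0])
    return round(angle * period / (2 * math.pi)) % period


def add_days(day, offset):
    """Modular addition on days, done entirely by rotating a 2-D point."""
    start = DAYS.index(day)
    return DAYS[from_circle(rotate(to_circle(start), offset))]


result = add_days("Saturday", 3)
```

The wrap-around from Sunday back to Monday comes for free from the rotation, which is something a single one-dimensional direction cannot express.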

Thus, they were able to extract multi-dimensional features with sparse autoencoders, identified some circular representations as fundamental and irreducible, and explained these multi-dimensional representations by breaking down the hidden states for these tasks into interpretable components.

The linear representation hypothesis is an approximation of the true multi-dimensional nature of feature representations in AI models. This work helps expand our understanding of language model representations by encompassing complex “corner cases” that go beyond linearity.

Financial Statement Analysis with Large Language Models

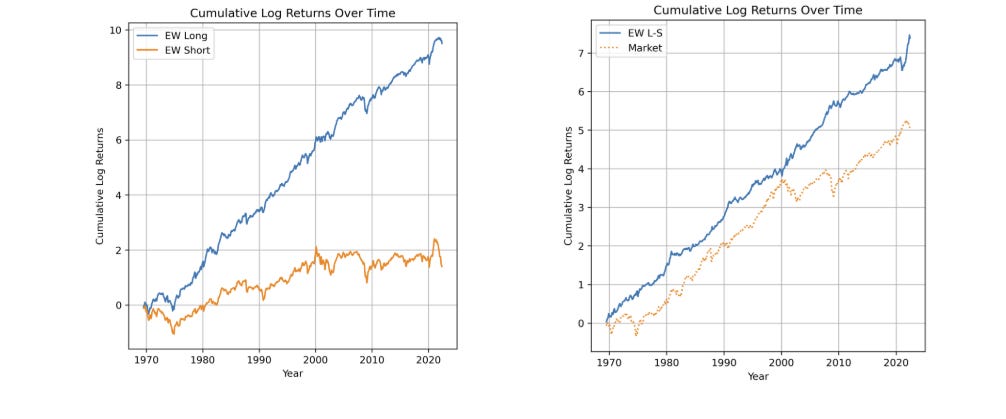

In “Financial Statement Analysis with Large Language Models,” researchers from the University of Chicago’s Booth School of Business looked into how well GPT-4 performs at financial statement analysis.

The bottom-line results from their study suggest AI financial analysts have arrived:

The LLM outperforms financial analysts in its ability to predict earnings changes. The LLM exhibits a relative advantage over human analysts in situations when the analysts tend to struggle.

The LLM prediction capability benefits from how it “generates useful narrative insights about a company’s future performance.”

Better predictions lead to more successful investments and trades. They showed that trading strategies based on GPT’s predictions yield a higher Sharpe ratio (an indicator of risk-adjusted return) than strategies based on other models (ANNs and logistic regression models).
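For readers unfamiliar with the Sharpe ratio, it is the average excess return divided by the volatility of those returns, usually annualized. The sketch below uses made-up monthly returns purely for illustration; they are not figures from the paper, and the zero risk-free rate is an assumption.

```python
import math
import statistics


def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=12):
    """Annualized Sharpe ratio from a list of per-period returns."""
    excess = [r - risk_free_rate for r in returns]
    mean_excess = statistics.mean(excess)
    volatility = statistics.stdev(excess)  # sample standard deviation
    return (mean_excess / volatility) * math.sqrt(periods_per_year)


# Made-up monthly returns with the same average but different volatility.
steady = [0.010, 0.012, 0.009, 0.011, 0.010, 0.008]
volatile = [0.050, -0.030, 0.040, -0.020, 0.030, -0.010]

sharpe_steady = sharpe_ratio(steady)
sharpe_volatile = sharpe_ratio(volatile)
```

Both series average 1% per month, but the steadier one earns a much higher Sharpe ratio, which is why the metric is read as return per unit of risk.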

Uni-MoE: Unified Multimodal Framework

The two biggest trends in frontier AI models have been the rise of Multimodal Large Language Models (MLLMs) and the use of the Mixture of Experts (MoE) architecture.

The paper “Uni-MoE: Scaling Unified Multimodal LLMs with Mixture of Experts” introduces the Uni-MoE model, which implements a sparse MoE architecture to enable efficient training and inference while supporting a wide array of modalities.
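The efficiency of a sparse MoE layer comes from running only a few experts per token. Below is a generic top-k routing sketch; the sizes, the random weights, the single-matrix "experts", and `top_k=2` are all assumptions for illustration and are not taken from the Uni-MoE implementation.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def moe_forward(x, router_w, expert_ws, top_k=2):
    """Route one token to its top-k experts and mix their outputs."""
    logits = x @ router_w                 # one score per expert
    chosen = np.argsort(logits)[-top_k:]  # indices of the k highest scores
    gates = softmax(logits[chosen])       # renormalize over chosen experts
    out = np.zeros(expert_ws.shape[-1])
    for gate, idx in zip(gates, chosen):
        out += gate * (x @ expert_ws[idx])  # only chosen experts are computed
    return out, chosen, gates


rng = np.random.default_rng(0)
d_model, n_experts = 8, 4  # assumed toy sizes

router_w = rng.normal(size=(d_model, n_experts))
expert_ws = rng.normal(size=(n_experts, d_model, d_model))  # toy linear experts
token = rng.normal(size=d_model)

output, chosen, gates = moe_forward(token, router_w, expert_ws)
```

With four experts and `top_k=2`, each token pays for half of the expert computation while the model still holds the parameters of all four.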

The Uni-MoE MLLM is trained using a three-stage progressive training strategy:

Cross-modality alignment using various connectors with different cross-modality data.

Training modality-specific experts with cross-modality instruction data to activate experts' preferences.

Tuning the Uni-MoE framework utilizing Low-Rank Adaptation (LoRA) on mixed multimodal instruction data.
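The LoRA step in the last stage can be illustrated with a single weight matrix: the original weights stay frozen and only a small low-rank update is trained. This is a generic sketch of the LoRA idea; the sizes, `rank`, and `alpha` are assumed values and are not Uni-MoE's settings.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, rank, alpha = 16, 16, 4, 8  # assumed toy hyperparameters

W = rng.normal(size=(d_in, d_out))             # frozen pretrained weight
A = rng.normal(scale=0.01, size=(d_in, rank))  # trainable down-projection
B = np.zeros((rank, d_out))                    # trainable up-projection, zero-initialized


def lora_forward(x, W, A, B, alpha, rank):
    """Frozen path plus a scaled low-rank correction."""
    return x @ W + (alpha / rank) * (x @ A @ B)


x = rng.normal(size=(3, d_in))
y_initial = lora_forward(x, W, A, B, alpha, rank)

full_params = W.size           # parameters a full fine-tune would update
lora_params = A.size + B.size  # parameters LoRA actually trains
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the frozen one, and training then adjusts only `A` and `B` (128 values here instead of 256).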

The authors shared benchmarks where Uni-MoE outperforms traditional dense MLLMs, including image-text, video, and audio/speech understanding datasets, while also reducing training and inference computation load.