AI Research Roundup 24.08.09 - Multi-modal & Medical

MiniCPM-V, Optimus-1, MMIU - Multi-modal Multi-image Understanding, GMAI-MMBench for medical AI, MedTrinity-25M multi-modal dataset for medical AI

Introduction

This week's AI research highlights focus on multi-modal models, agents, benchmarks and datasets, including a benchmark and a dataset for multi-modal medical AI:

MiniCPM-V: A GPT-4V Level MLLM on Your Phone

Optimus-1: Hybrid Multimodal Memory Empowered Agents Excel in Long-Horizon Tasks

MMIU: Multimodal Multi-image Understanding for Evaluating Large Vision-Language Models

GMAI-MMBench: A Comprehensive Multimodal Evaluation Benchmark Towards General Medical AI

MedTrinity-25M: A Large-scale Multimodal Dataset with Multigranular Annotations for Medicine

MiniCPM-V: A GPT-4V Level MLLM on Your Phone

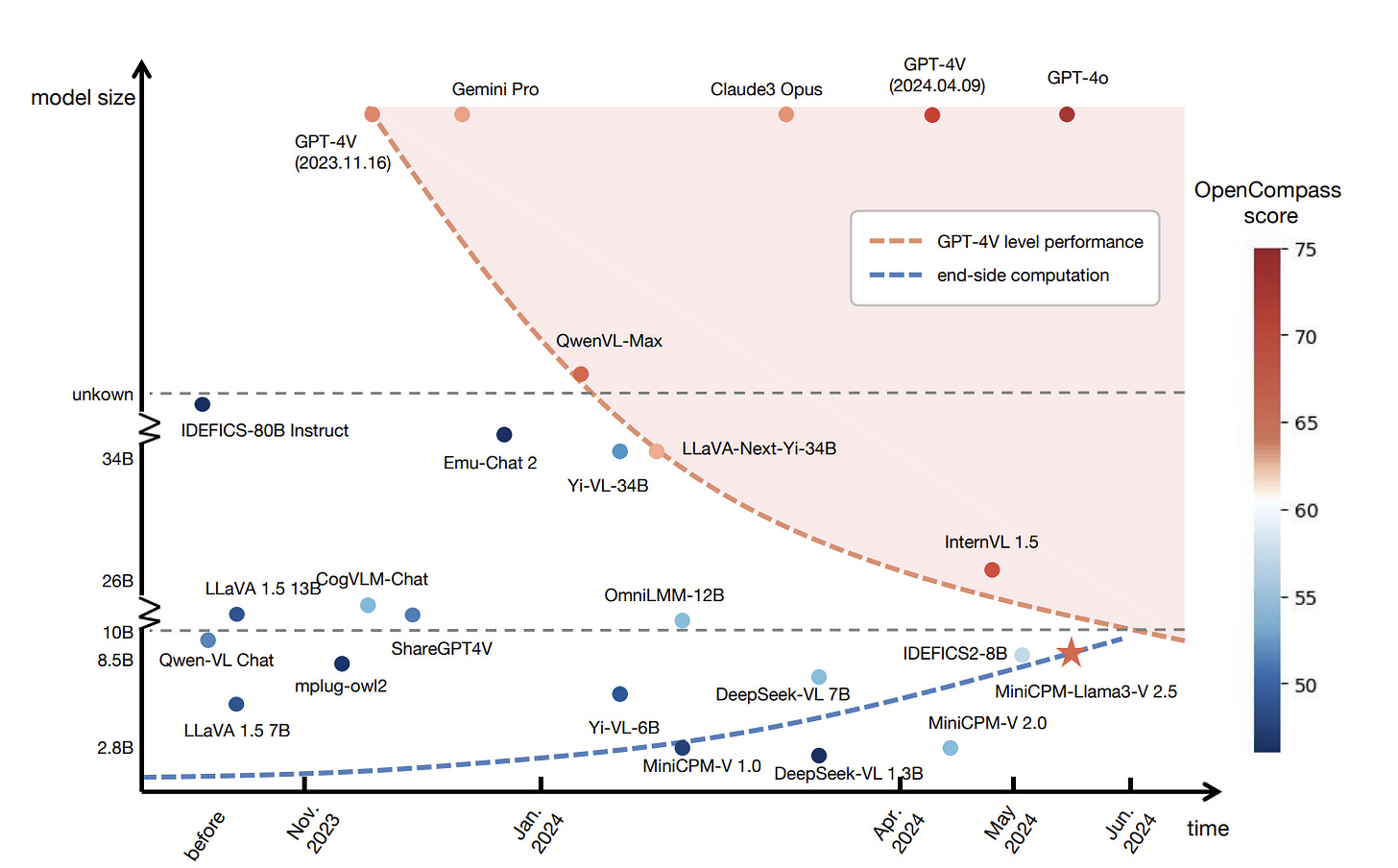

Multimodal Large Language Models (MLLMs) are AI models that can understand text, images and video together. The computational cost of MLLMs is a major challenge, but the models have recently become much more efficient. A team of Chinese AI researchers at OpenBMB is pushing this trend toward smaller, more efficient MLLMs with the release of MiniCPM-V, a GPT-4V-level MLLM you can run on your phone.

MiniCPM-V aims to achieve a good balance between performance and efficiency and run on edge devices like mobile phones. Three iterations have been released this year: MiniCPM-V 1.0 2B in February, MiniCPM-V 2.0 2B in April, and MiniCPM-Llama3-V 2.5 8B in May. The latest MiniCPM-Llama3-V 2.5 boasts these features:

(1) Strong performance, outperforming GPT-4V-1106, Gemini Pro and Claude 3 on OpenCompass, a comprehensive evaluation over 11 popular benchmarks, (2) strong OCR capability and 1.8M pixel high-resolution image perception at any aspect ratio, (3) trustworthy behavior with low hallucination rates, (4) multilingual support for 30+ languages, and (5) efficient deployment on mobile phones.

MiniCPM-V uses adaptive visual encoding in its architecture and RLAIF-V in training. On MLLM benchmarks, MiniCPM-Llama3-V 2.5 scores well - 76% on TextVQA, 84.8% on DocVQA - above other MLLMs of comparable parameter size and on par with the much larger GPT-4V.
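To give a feel for what handling high-resolution images "at any aspect ratio" can involve, here is a rough sketch of aspect-ratio-aware slicing: a large image is cut into a grid of tiles that a fixed-resolution vision encoder can process one by one. The function name `choose_grid`, the 448-pixel tile size, and the log-ratio scoring rule are assumptions made for illustration; the partitioning rule actually used in MiniCPM-V may differ.

```python
import math


def choose_grid(width, height, tile=448):
    """Pick a (cols, rows) grid for slicing an image into roughly tile-sized pieces.

    Illustrative only: the tile size and the scoring rule are assumptions.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")

    # How many tiles would cover the image area if tiles packed perfectly.
    ideal = math.ceil(width * height / (tile * tile))
    target_log_ratio = math.log(width / height)

    best, best_gap = (1, 1), float("inf")
    # Consider slice counts near the ideal, then every factorization of each.
    for n_slices in (ideal - 1, ideal, ideal + 1):
        if n_slices < 1:
            continue
        for cols in range(1, n_slices + 1):
            if n_slices % cols:
                continue
            rows = n_slices // cols
            # Prefer the grid whose shape is closest to the image's shape.
            gap = abs(target_log_ratio - math.log(cols / rows))
            if gap < best_gap:
                best, best_gap = (cols, rows), gap
    return best
```

Under these assumptions, a 1344 x 1344 image (about 1.8 million pixels) maps to a 3 x 3 grid, while an 896 x 448 image maps to two tiles side by side.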

MiniCPM-V shows that high-performing MLLMs on edge devices are possible. Project info is in the MiniCPM-V repo on GitHub.

Optimus-1: Hybrid Multimodal Memory Empowered Agents

A general-purpose agent for real-world use (think of AI embedded in robots) needs to handle multi-modal long-horizon tasks in an open world, requiring a combination of world knowledge, multi-modality and planning. Equipping AI agents with these capabilities is the big challenge taken up in the paper Optimus-1: Hybrid Multimodal Memory Empowered Agents Excel in Long-Horizon Tasks.

To address these challenges, the authors develop a Hybrid Multimodal Memory module. It consists of a Hierarchical Directed Knowledge Graph (HDKG) and an Abstracted Multimodal Experience Pool (AMEP). The Hybrid Multimodal Memory:

1) transforms knowledge into a Hierarchical Directed Knowledge Graph that allows agents to explicitly represent and learn world knowledge, and

2) summarizes historical information into an Abstracted Multimodal Experience Pool that provides agents with rich references for in-context learning.
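The knowledge-graph half of this memory can be pictured with a small sketch: a directed graph whose edges point from prerequisites to the things that depend on them, so that a plan falls out of a dependency-ordered traversal. The class `KnowledgeGraph`, its methods, and the toy crafting recipes are hypothetical and chosen for illustration; this is not the paper's implementation.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy directed graph: each item maps to the prerequisites it requires."""

    def __init__(self):
        # item -> {prerequisite: quantity needed}
        self.requires = defaultdict(dict)

    def add_recipe(self, item, prerequisites):
        """Record that `item` needs the given {prerequisite: quantity} mapping."""
        self.requires[item].update(prerequisites)

    def plan(self, goal):
        """Return items ordered so every prerequisite precedes its dependents."""
        order, done = [], set()

        def visit(item, path):
            if item in path:
                raise ValueError(f"cycle detected at {item!r}")
            if item in done:
                return
            # Sorted for a deterministic order among sibling prerequisites.
            for prereq in sorted(self.requires.get(item, {})):
                visit(prereq, path | {item})
            done.add(item)
            order.append(item)

        visit(goal, frozenset())
        return order


kg = KnowledgeGraph()
kg.add_recipe("planks", {"log": 1})
kg.add_recipe("stick", {"planks": 2})
kg.add_recipe("wooden_pickaxe", {"planks": 3, "stick": 2})
print(kg.plan("wooden_pickaxe"))
```

A planner that consults a structure like this can read off sub-goals in a valid order instead of asking the language model to rediscover the dependencies each time.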

They also develop the Optimus-1 multimodal agent on top of the Hybrid Multimodal Memory, creating an AI agent that operates virtually in Minecraft. Optimus-1 is built from a Knowledge-guided Planner and an Experience-Driven Reflector, both of which draw on memories from multi-modal experience; the authors use the GPT-4V model for these tasks.

Learning and maintaining multi-modal knowledge with Hybrid Multimodal Memory seems to be effective, and Optimus-1 significantly outperforms prior agents and AI models on challenging long-horizon task benchmarks, including outperforming the GPT-4V baseline. They show multi-modal self-learning works:

An MLLM with Hybrid Multimodal Memory can incarnate an expert agent in a self-evolution manner.

Minecraft’s virtual world is an effective testing ground for many AI agent concepts, as shown in AI research projects like Voyager as well as this work. They share a number of Minecraft-related demos on their project page.

MMIU: Multimodal Multi-image Understanding for Evaluating Large Vision-Language Models

To make progress on Vision-Language Models, we need benchmarks to measure it. Developed by Chinese AI researchers, Multimodal Multi-image Understanding (MMIU) is a new benchmark designed to evaluate Large Vision-Language Models (LVLMs) on multi-image tasks. MMIU has been released as an open-source dataset.

The paper MMIU: Multimodal Multi-image Understanding for Evaluating Large Vision-Language Models presents a technical report on the MMIU benchmark:

Covering 7 relationship types (such as 3D spatial relations), 52 tasks, 77K images, and 11K questions, MMIU reveals significant challenges in multi-image understanding. … The experimental results indicate that current models, including GPT-4, struggle to handle complex multi-image tasks.

The MMIU Project Page links to the MMIU code, the dataset on HuggingFace, and a leaderboard. GPT-4o currently tops the leaderboard at 55%. These scores leave a lot of headroom for AI models to improve before MMIU is saturated, so it may remain a useful benchmark for some time to come.

GMAI-MMBench: A Multimodal Evaluation Benchmark For Medical AI

Similar to MMIU above, GMAI-MMBench is a multi-modal benchmark to assess Large Vision-Language Models (LVLMs), but with a medical domain-specific focus. It is presented in GMAI-MMBench: A Comprehensive Multimodal Evaluation Benchmark Towards General Medical AI.

There’s great value in using LVLMs for medical diagnosis and other medical applications, but doing so reliably is a big challenge. Progress requires medical-specific benchmarks that measure how well an AI model understands medical images and text. This motivated the development of GMAI-MMBench:

GMAI-MMBench is constructed from 285 datasets across 39 medical image modalities, 18 clinical-related tasks, 18 departments, and 4 perceptual granularities in a Visual Question Answering (VQA) format. Additionally, we implemented a lexical tree structure that allows users to customize evaluation tasks, accommodating various assessment needs and substantially supporting medical AI research and applications.
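As a minimal sketch of what a customizable evaluation over VQA items could look like, the snippet below tags each multiple-choice question with labels, selects a subset by label, and scores a model on it. The `VQAItem` fields and the `select` and `accuracy` helpers are hypothetical; they are not the benchmark's actual schema or tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VQAItem:
    """One multiple-choice question about a medical image (hypothetical schema)."""
    question: str
    options: tuple
    answer: str       # letter of the correct option, e.g. "B"
    modality: str     # e.g. "CT"
    task: str         # e.g. "disease diagnosis"
    department: str   # e.g. "cardiology"


def select(items, **criteria):
    """Keep only the items whose labels match every given criterion."""
    return [
        item for item in items
        if all(getattr(item, key) == value for key, value in criteria.items())
    ]


def accuracy(items, predict):
    """Fraction of items where predict(item) returns the correct option letter."""
    if not items:
        return 0.0
    correct = sum(predict(item) == item.answer for item in items)
    return correct / len(items)
```

For example, `accuracy(select(items, modality="CT"), predict)` would score a model on CT questions only, where `predict` is any callable that maps an item to an option letter.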

They evaluated 50 LVLMs on GMAI-MMBench. As with MMIU, the results were weak: LVLMs had “significant room for improvement”, with GPT-4o achieving an accuracy of only 52%. Their overall conclusion was that medical tasks are still challenging for all LVLMs, and that open-source models are catching up to commercial models.

They also identified “five key insufficiencies” in current medical LVLMs that need to be addressed to advance medical AI: specific clinical VQA tasks, balance in performance, robustness, multiple-choice performance, and instruction tuning all need to improve.

Links to the dataset, leaderboard, paper and code can be found on the GMAI-MMBench Project Page.

MedTrinity-25M: A Large-scale Multimodal Dataset for Medicine

Presented in the paper MedTrinity-25M: A Large-scale Multimodal Dataset with Multigranular Annotations for Medicine, MedTrinity-25M is a large-scale multimodal medical dataset designed to support better multi-modal medical AI applications.

MedTrinity-25M consists of 25 million image-ROI-description triplets; ROI stands for region of interest and is expressed as a bounding box around part of a medical image. The authors drew on more than 90 online resources and used advanced MLLMs to annotate images and flesh out coarse descriptions, automatically creating this large set of fine-grained multi-modal triplets:

Unlike existing dataset construction methods that rely on image-text pairs, we have developed the first automated pipeline to scale up multimodal data by generating multigranular visual and textual annotations from unpaired image inputs, leveraging expert grounding models, retrieval-augmented generation techniques, and advanced MLLMs.
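To make the triplet idea concrete, here is a small sketch of how one image-ROI-description record might be represented. The class and field names, and the pixel-coordinate convention for the bounding box, are assumptions for illustration and not the dataset's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (assumed convention)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def __post_init__(self):
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("bounding box must have positive width and height")

    def area(self):
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass(frozen=True)
class Triplet:
    """One image-ROI-description record (hypothetical field names)."""
    image_path: str
    image_width: int
    image_height: int
    roi: BoundingBox
    description: str

    def roi_fraction(self):
        """Share of the image area covered by the region of interest."""
        return self.roi.area() / (self.image_width * self.image_height)
```

A record built this way keeps the region and its text together, so a training pipeline could crop the ROI or check how much of the image it covers before pairing it with the description.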

MedTrinity-25M spans 10 modalities and covers over 65 diseases. They show that pretraining on this dataset can improve an AI model’s performance. LLaVA-Med++, pretrained on MedTrinity-25M data, “achieves state-of-the-art performance in two of the three VQA benchmarks and ranks third in the remaining one.” Further:

Pretraining on MedTrinity-25M exhibits performance improvements of approximately 10.75% on VQA-RAD, 6.1% on SLAKE, and 13.25% on PathVQA

The dataset and code can be found on the MedTrinity-25M Project Page.