Introduction

Here are our AI research highlights for this week:

NuminaMath: Winning the AI Math Olympiad with MuMath-Code

LLEMMA: An Open Language Model for Mathematics

Internet of Agents: Weaving a Web of Heterogeneous Agents for Collaborative Intelligence

AriGraph: Learning Knowledge Graph World Models with Episodic Memory for LLM Agents

PaliGemma: A versatile 3B VLM for transfer

NuminaMath: Winning the AI Math Olympiad with MuMath-Code

Can we get AI to become a whiz at Math?

The Artificial Intelligence Mathematical Olympiad (AIMO) is a competition based on the International Mathematical Olympiad (IMO), with the goal of getting AI to solve very complex high-level mathematics problems. XTX Markets sponsored a $10 million prize pool.

Kaggle hosted the AIMO “Progress Prize 1” competition, with a goal for entrants to “create algorithms and models that can solve tricky math problems written in LaTeX format.” These are not easy problems; the Gemma 7B baseline scored only 3/50 on these math challenges.

The winning entry, NuminaMath, far exceeded the baseline. NuminaMath 7B TIR won the first progress prize of the AI Math Olympiad (AIMO), with a score of 29/50 on the public and private test sets.

In Kaggle competitions, as this leaderboard shows, prize-winning teams scour for the best algorithms, models, tools and hacks they can find, and combine them to make a winning solution that is robust. NuminaMath did the same, using a variety of techniques and academic results to build their solution, in particular LLMs fine-tuned with tool-integrated reasoning (TIR).

Project Numina has a write-up of their solution on Kaggle and posted their model NuminaMath-7B-TIR on HuggingFace. It has these elements:

First, they fine-tune DeepSeekMath-Base 7B to act as a “reasoning agent” that solves mathematical problems by combining natural-language reasoning with Python code execution, which is the tool-integrated reasoning (TIR) approach. Numina built their fine-tuned LLMs on MuMath-Code, as explained in the paper “MuMath-Code: Combining Tool-Use Large Language Models with Multi-perspective Data Augmentation for Mathematical Reasoning.”

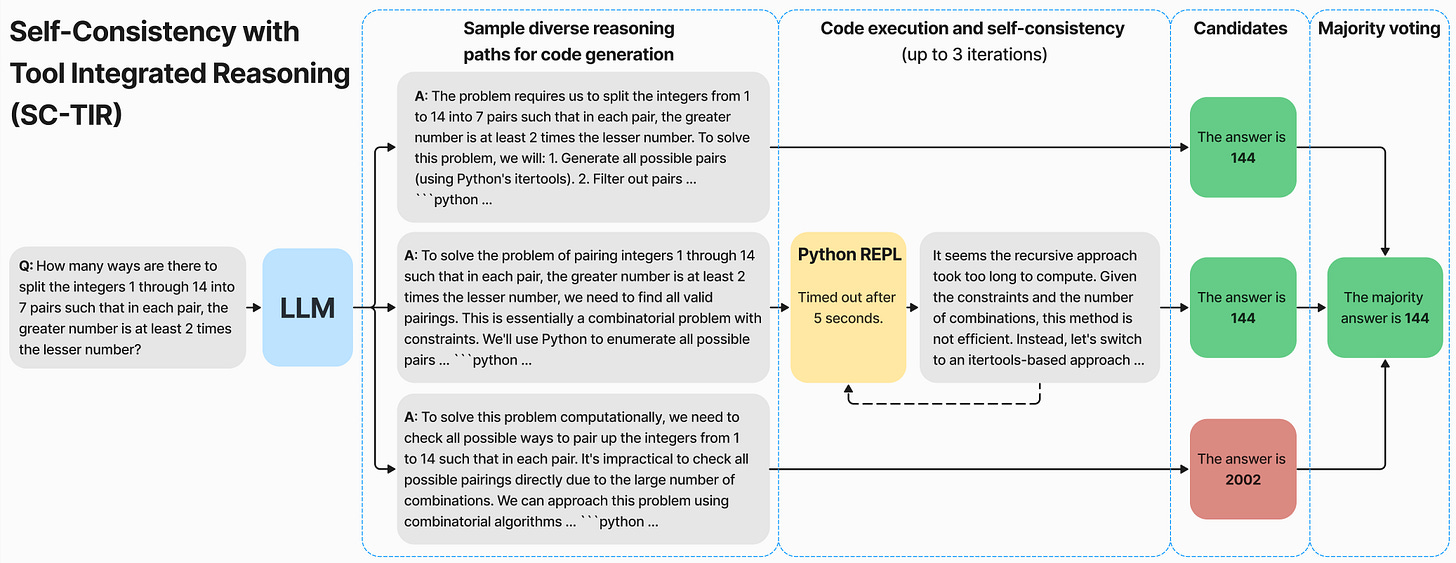

To solve math problems, they use the fine-tuned NuminaMath LLM to generate solution candidates during inference, then compare and evaluate them with self-consistency checks and tool-integrated reasoning (SC-TIR).
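The self-consistency step can be illustrated with a simple majority vote over the final answers of the sampled candidates. This is only a minimal sketch, not Numina's actual code: the function name `majority_vote`, the sample values, and the convention that a failed candidate is recorded as `None` are all assumptions made for illustration.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_vote(candidates: Sequence[Optional[int]]) -> Optional[int]:
    """Return the most frequent non-None answer, or None if every candidate failed."""
    valid = [c for c in candidates if c is not None]
    if not valid:
        return None
    # On a tie, Counter.most_common keeps the answer that appeared first.
    return Counter(valid).most_common(1)[0][0]


# Hypothetical final answers from 8 sampled solution candidates.
# None marks a candidate whose generated Python code failed to run (an assumption).
samples = [52, 52, None, 17, 52, 17, None, 52]
best = majority_vote(samples)
print(best)
```

The idea is that independent samples which reach the same answer through different reasoning or code paths are more likely to be right than any single sample, so the vote filters out one-off errors.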

LLEMMA: An Open Language Model for Mathematics

On the topic of specialized LLMs for math, the "LLEMMA: An Open Language Model for Mathematics" paper gives us an LLM specialized for mathematical reasoning. LLEMMA was created by developing the Proof-Pile-2 dataset of 55 billion tokens of mathematical text and code, and then using it for continued pretraining of Code Llama to produce the mathematical language models.

This is not a new paper, but it was highlighted recently by Rohan Paul on X. It shows the value of open source development in sharing the artifacts, including models, dataset, and code.

The Proof-Pile-2 dataset consists of: scientific papers from ArXiv; OpenWebMath, a filtered dataset of mathematical web pages; and mathematical code in multiple programming languages, along with StackOverflow content and proof assistant libraries.

This dataset and continued pre-training methods used in LLEMMA are a proven path to specialized LLMs for math applications, which is why the Numina team mentioned above applied a similar (MuMath-Code) approach to develop NuminaMath LLM.

Internet of Agents: Weaving a Web of Heterogeneous Agents for Collaborative Intelligence

The rise of LLMs has led to many capable and useful agent systems, and to frameworks that support agent development. However, many agents and frameworks have limited or rigid environments and cannot easily be integrated or cooperate with one another.

The paper “Internet of Agents: Weaving a Web of Heterogeneous Agents for Collaborative Intelligence” considers how to improve interoperability of agents:

Inspired by the concept of the Internet, we propose the Internet of Agents (IoA), a novel framework that addresses these limitations by providing a flexible and scalable platform for LLM-based multi-agent collaboration. IoA introduces an agent integration protocol, an instant-messaging-like architecture design, and dynamic mechanisms for agent teaming and conversation flow control.

An interoperable multi-agent architecture is a great concept, but it needs an implementation to prove it, so it’s helpful that the IoA codebase is released on GitHub. They show in their testing that IoA facilitates effective collaboration among different agents and consistently outperforms state-of-the-art baselines.

IoA projects a future of interoperability for agents, where agent frameworks such as LangGraph, Autogen, or CrewAI, are pluggable components that collaborate within heterogeneous agent systems.
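To make the instant-messaging analogy concrete, here is a toy sketch of the three pieces the abstract names: agent registration, team formation, and routing messages within a group chat. It is not the IoA codebase or its API; `AgentServer`, `Message`, the skill labels, and the matching rule (any overlap in skills) are hypothetical names and simplifications chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Message:
    sender: str
    group: str
    text: str


@dataclass
class AgentServer:
    """Toy stand-in for a central server; every name here is hypothetical."""
    handlers: Dict[str, Callable[[Message], None]] = field(default_factory=dict)
    skills: Dict[str, Set[str]] = field(default_factory=dict)
    groups: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, name: str, skills: Set[str],
                 handler: Callable[[Message], None]) -> None:
        # Integration step: any agent that exposes a handler can join.
        self.handlers[name] = handler
        self.skills[name] = skills

    def form_team(self, group: str, needed: Set[str]) -> List[str]:
        # Teaming step: pick every agent with at least one needed skill.
        team = [name for name, have in self.skills.items() if have & needed]
        self.groups[group] = team
        return team

    def send(self, msg: Message) -> None:
        # Routing step: deliver to all group members except the sender.
        for member in self.groups.get(msg.group, []):
            if member != msg.sender:
                self.handlers[member](msg)


inboxes: Dict[str, List[str]] = {"coder": [], "searcher": [], "painter": []}
server = AgentServer()
server.register("coder", {"python"}, lambda m: inboxes["coder"].append(m.text))
server.register("searcher", {"web_search"}, lambda m: inboxes["searcher"].append(m.text))
server.register("painter", {"image_generation"}, lambda m: inboxes["painter"].append(m.text))

team = server.form_team("task-1", {"python", "web_search"})
server.send(Message(sender="coder", group="task-1", text="Need docs for library X"))
print(team, inboxes)
```

Because each agent is hidden behind a plain handler function, the server does not care which framework built it, which is the interoperability point the paper is making.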

AriGraph: Learning Knowledge Graph World Models with Episodic Memory for LLM Agents

To operate in the real world, LLM agents need to update their memories and then act on that knowledge; this is typically done by storing vector embeddings of learned text and then using retrieval-augmented generation (RAG).

In the paper “AriGraph: Learning Knowledge Graph World Models with Episodic Memory for LLM Agents,” the authors note that “unstructured memory representations do not facilitate the reasoning and planning essential for complex decision-making.” They instead propose AriGraph, a knowledge graph-based memory system for an LLM Agent:

In our study, we introduce AriGraph, a novel method wherein the agent constructs a memory graph that integrates semantic and episodic memories while exploring the environment. This graph structure facilitates efficient associative retrieval of interconnected concepts, relevant to the agent's current state and goals, thus serving as an effective environmental model that enhances the agent's exploratory and planning capabilities.

They distinguish in the paper between semantic memory (factual knowledge about the world) and episodic memory (personal experiences), and propose that graph-based methods can address both in a unified way for the AI agent’s internal world model.
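A minimal sketch of how semantic and episodic memory might share one graph-like structure follows. It is an illustration under stated assumptions, not the AriGraph implementation: the `MemoryGraph` class, its method names, the example observations, and the overlap-count ranking are all hypothetical, and the triples are passed in by hand rather than extracted by an LLM.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


@dataclass
class MemoryGraph:
    # Semantic memory: facts stored as triples.
    triples: Set[Triple] = field(default_factory=set)
    # Episodic memory: each raw observation, linked to the triples it produced.
    episodes: List[Tuple[str, Set[Triple]]] = field(default_factory=list)

    def add_observation(self, text: str, extracted: List[Triple]) -> None:
        self.triples.update(extracted)
        self.episodes.append((text, set(extracted)))

    def semantic_search(self, entities: Set[str]) -> Set[Triple]:
        # Return every fact that touches one of the query entities.
        return {t for t in self.triples if t[0] in entities or t[2] in entities}

    def episodic_search(self, entities: Set[str], top_k: int = 1) -> List[str]:
        # Rank episodes by how many relevant facts they are linked to.
        relevant = self.semantic_search(entities)
        scored = [(len(links & relevant), text) for text, links in self.episodes]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)  # stable: ties keep insertion order
        return [text for _, text in scored[:top_k]]


graph = MemoryGraph()
graph.add_observation(
    "You open the fridge and see a carrot.",
    [("carrot", "in", "fridge"), ("fridge", "in", "kitchen")],
)
graph.add_observation(
    "The knife lies on the counter.",
    [("knife", "on", "counter"), ("counter", "in", "kitchen")],
)
print(graph.semantic_search({"carrot"}))
print(graph.episodic_search({"kitchen"}, top_k=2))
```

The point of the structure is that a query about one entity can pull in both the connected facts and the specific past observations those facts came from, which a flat list of embedded text chunks does not provide directly.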

To test AriGraph, they developed the Ariadne agent, which interacts with an environment (in this study, TextWorld) to accomplish goals set by a user.

Their evaluations show that storing and maintaining LLM agent memories in knowledge graphs gives superior results over established methods like RAG:

Our approach markedly outperforms established methods such as full-history, summarization, and Retrieval-Augmented Generation in various tasks, including the cooking challenge from the First TextWorld Problems competition and novel tasks like house cleaning and puzzle Treasure Hunting.

PaliGemma: A versatile 3B VLM for transfer

Google announced and released PaliGemma in May, but this week released a paper covering its architecture, training, and evaluation results. PaliGemma is a versatile 3B open Vision-Language Model (VLM) that is based on the SigLIP-So400m vision encoder and the Gemma-2B language model.

Evaluations show PaliGemma achieves strong performance on a wide variety of tasks, including specialized vision tasks like remote-sensing and segmentation. This is remarkable mainly because of PaliGemma’s size: at 3B parameters, it performs better than prior vision-language models 10 times its size, such as PaLM-E and PaLI-X. They note:

Our results show that VLMs on the “smaller” side can provide state-of-the-art performance across a wide variety of benchmarks.