AI Week in Review 23.04.29

Soccer-playing robots, DeepFloyd IF released, Pinecone raises money, and a 1M-token context window with RMT

Notable AI Tools

Noty.ai is a ChatGPT-powered meeting scribe that transforms meetings into transcriptions, action items, summaries, and follow-ups in seconds.

AI Tech and Product Releases

Stability AI announces the release of DeepFloyd IF: “Our multimodal AI lab, @DeepFloydAI is publicly releasing their state-of-the-art text-to-image model.” This text-to-image generator is available on Hugging Face Spaces. I tried it out, and while it is not as good as Midjourney v5, the results were decent, good enough to use for the example image in this article’s header.

Microsoft makes its AI-powered Designer tool available in preview. It’s available via the Designer website and in Microsoft’s Edge browser through the sidebar.

Designer is a Canva-like web app that can generate designs for presentations, posters, digital postcards, invitations, graphics and more to share on social media and other channels. It leverages user-created content and DALL-E 2, OpenAI’s text-to-image AI, to ideate designs, with drop-downs and text boxes for further customization and personalization.

NVIDIA Open-Source Software Helps Developers Add Guardrails to AI Chatbots. NeMo Guardrails helps enterprises keep applications built on large language models aligned with their safety and security requirements.

OpenAI offers new ways to manage your data in ChatGPT. “ChatGPT users can now turn off chat history, allowing you to choose which conversations can be used to train our models.”

AI Research News

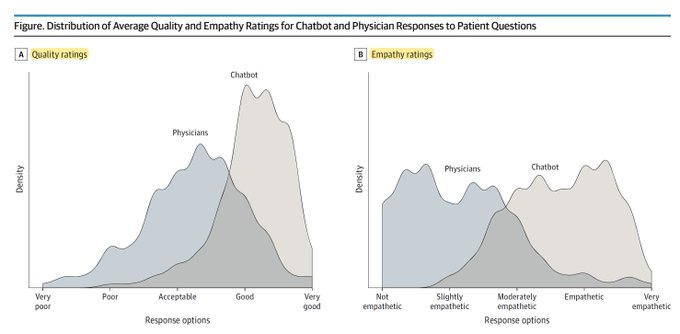

A JAMA study, “Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum,” shows that ChatGPT’s answers to medical questions posted on a Reddit forum were rated better than doctors’ answers: “The chatbot responses were preferred over physician responses and rated significantly higher for both quality and empathy.”

Replit on How to train your own Large Language Models: “How we train LLMs, from raw data to deployment in a user-facing production environment … the engineering challenges we face along the way, and how we leverage the vendors that we believe make up the modern LLM stack: Databricks, Hugging Face, and MosaicML.” Why does Replit train its own LLMs? For customization, reduced dependency, and cost efficiency.

Scaling Transformer to 1 million tokens and beyond with Recurrent Memory Transformer (RMT). “By leveraging the Recurrent Memory Transformer architecture, we have successfully increased the model's effective context length to an unprecedented two million tokens, while maintaining high memory retrieval accuracy. Our method allows for the storage and processing of both local and global information and enables information flow between segments of the input sequence through the use of recurrence.” The approach enables much bigger context windows, at the expense of more compute at inference time.
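To make the recurrence idea concrete, here is a toy Python sketch, assuming an encoder-style setup in which a few memory vectors are prepended to each input segment and the updated memory from one segment is fed into the next. This is not the authors’ code: `toy_block` is a hypothetical stand-in for a full transformer pass (it uses crude mean-mixing instead of attention), and `rmt_forward`, the segment length, the number of memory slots, and the vector size are all illustrative assumptions.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
# Hypothetical block signature: (memory, segment) -> (new_memory, segment_outputs)
Block = Callable[[List[Vector], List[Vector]], Tuple[List[Vector], List[Vector]]]


def mean_pool(vectors: Sequence[Vector]) -> Vector:
    """Average a list of equal-length vectors element-wise."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def toy_block(memory: List[Vector], segment: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """Hypothetical stand-in for one transformer pass over [memory + segment].

    Every token (memory slots included) is mixed with the mean of all inputs,
    a crude substitute for attention. The outputs at the memory positions
    become the memory passed on to the next segment.
    """
    tokens = memory + segment
    context = mean_pool(tokens)
    mixed = [[0.5 * x + 0.5 * c for x, c in zip(tok, context)] for tok in tokens]
    return mixed[: len(memory)], mixed[len(memory):]


def rmt_forward(sequence: List[Vector], segment_len: int, num_memory: int,
                dim: int, block: Block = toy_block) -> Tuple[List[Vector], List[Vector]]:
    """Process a long sequence segment by segment, carrying memory forward."""
    memory = [[0.0] * dim for _ in range(num_memory)]  # initial memory state (assumed zeros)
    outputs: List[Vector] = []
    for start in range(0, len(sequence), segment_len):
        segment = sequence[start:start + segment_len]
        # Each pass sees only num_memory + segment_len tokens.
        memory, seg_out = block(memory, segment)
        outputs.extend(seg_out)
    return memory, outputs


if __name__ == "__main__":
    seq = [[float(i), 1.0] for i in range(10)]
    final_memory, outs = rmt_forward(seq, segment_len=4, num_memory=2, dim=2)
    print(len(outs), final_memory)
```

Each call to the block sees a fixed number of tokens, so per-segment cost stays bounded while total cost grows linearly with the number of segments, and information from early segments can reach later ones only through the memory vectors.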

DeepMind researchers present robots playing soccer: Learning Agile Soccer Skills for a Bipedal Robot with Deep Reinforcement Learning. They also shared a neat video of their work.

In another feat of robotic agility, a team designs a four-legged robotic system that can walk a balance beam. They use a spacecraft-inspired flywheel system to keep the quadruped balanced. And they have a video too.

Researchers develop AI system to generate novel proteins that meet structural design targets: “Researchers from MIT, the MIT-IBM Watson AI Lab, and Tufts University employed a generative AI model [like DALL-E 2] … to predict amino acid sequences of proteins that achieve specific structural objectives.” These tunable proteins could be used to create new materials with specific mechanical properties, like toughness or flexibility.

Remarkably, “the models can generate millions of proteins in a few days, quickly giving scientists a portfolio of new ideas to explore.” With so many specific candidate proteins generated, this could both broaden and accelerate protein discovery.

AI Business News

Pinecone, the company that provides vector database memory for LLMs, announced this week that it has raised $100 million at a $750 million valuation.

Palantir shows off an AI that can go to war, integrating LLMs and AI-driven intelligence-gathering capabilities for decision support.

While the system itself is simply designed to integrate large language models (LLMs) like OpenAI's GPT-4 or Google's BERT into privately operated networks, the very first thing they did was apply it to the modern battlefield.

Palantir also isn’t selling a military-specific AI or LLM here; it’s offering to integrate existing systems into a controlled environment. They have also released a demo video.

Dropbox lays off 500 employees, 16% of staff, CEO says due to slowing growth and ‘the era of AI.’ The CEO said, “We’ve believed for many years that AI will give us new superpowers and completely transform knowledge work. And we’ve been building towards this future for a long time, as this year’s product pipeline will demonstrate.”

MANGMA is the new FAANG. The six biggest players in AI tech right now: MSFT, AMZN, NVDA, GOOG, META, AAPL. MSFT and NVDA are the biggest winners; their stock-price gains over the last six months are a sign that the market recognizes their leadership in AI.

In related news, Synergy Research Group released its estimates of the Q1 cloud services market.

AI Opinions and Articles

Washington Post Op-Ed: “The next level of AI is approaching. Our democracy isn’t ready.” Heavy on hand-wringing and light on specifics, it pleads for regulating AI before something bad happens:

We need to govern these emerging technologies and also deploy them for next-generation governance. But thinking through the challenges of how to make sure these technologies are good for democracy requires time we haven’t yet had. And this is thinking even GPT-4 can’t do for us.

Size doesn’t matter when it comes to AI models: “we may not, for a long time to come, see anything larger than GPT-4 for reasons much more prosaic than AGI ideology.” This article makes multiple great points: Scale is likely not enough to get us to AGI, and in any case “almost no one cares about AGI.” Smaller models will find uses, since “smaller models are more manageable and better for B2B.” The future of Foundation models will be a diverse one. I’ll have more to say on this next week.

A Look Back …

What magical trick makes us intelligent? The trick is that there is no trick. The power of intelligence stems from our vast diversity, not from any single, perfect principle.

—Marvin Minsky, The Society of Mind

As a field of study, Artificial Intelligence began at the dawn of the computer age. The Dartmouth Conference in 1956, organized by Marvin Minsky and other leaders in the field, was the first conference focused on Artificial Intelligence. The infographic charting the history of AI is courtesy of Genuine Impact.