AI Week in Review 23.05.20

ChatGPT App & plug-ins, Photoshop on steroids, and Salesforce's open-source coding LLM

TL;DR - If Google stole the spotlight last week, OpenAI stole it back. Not just with CEO Sam Altman testifying to Congress, but also with some big OpenAI release announcements - the ChatGPT iOS app, and plug-ins for all Plus users.

Top AI Tools

RoomGPT - Generates “dream room” ideas for you. Couple uses artificial intelligence to redesign backyard and shows it off on social media. “AI is truly gonna take over so many jobs.”

Zoo, at zoo.replicate.dev, is an open source “playground for text to image models” to run prompts on different image generation AI models and compare results.

AI Tech and Product Releases

OpenAI launched the ChatGPT app for the iPhone, now on the App Store. The app syncs history across all devices, and it uses Whisper speech recognition so users can input prompts by voice.

As I mentioned in this week’s article on Apple’s AI strategy, the iPhone interface will have to adapt to the emergence of ChatGPT. Letting ChatGPT displace Siri as the chatbot of choice isn’t good for Apple long-term.

OpenAI also announced that it is rolling out ChatGPT web browsing and plug-ins to all ChatGPT Plus users. “They allow ChatGPT to access the internet and to use 70+ third-party plugins.” So the plug-ins they announced earlier but we couldn’t get access to are now available with the $20-per-month Plus subscription. OpenAI also says that plug-ins will arrive for base (free) ChatGPT users in the coming months.

OpenAI will release an Open Source AI model, according to The Information. However, “OpenAI is unlikely to release a model that is competitive with the proprietary model it’s selling, GPT-4.”

This TED talk of Humane’s wearable technology is quite impressive.

Meta bets big on AI with custom chips — and a supercomputer. It’s building its own chips to help handle various AI workloads. This is a part of Meta’s efforts to push harder on leveraging AI, especially generative AI, in their products. Meta also announced generative AI features for advertisers, providing an “AI Sandbox for advertisers to help them create alternative copies, background generation through text prompts and image cropping for Facebook or Instagram ads.”

In a follow-up to the PaLM2 announcement last week, PaLM2 technical details were leaked to CNBC, which reported that PaLM2 was trained on a 3.6 trillion token dataset and has 340 billion parameters. This is indeed a smaller model than the original PaLM, which has 540 billion parameters, while having a training dataset roughly five times larger.
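To make the comparison concrete, here is a small back-of-the-envelope sketch using only the figures reported above. The constant names and the `ratio` helper are illustrative, and the "implied" PaLM token count is simply what the "five times larger" claim works out to, not a separately sourced number.

```python
# Figures as reported in the paragraph above (CNBC's numbers, not independently verified).
PALM2_PARAMS = 340e9   # PaLM2 parameters
PALM2_TOKENS = 3.6e12  # PaLM2 training tokens
PALM1_PARAMS = 540e9   # original PaLM parameters


def ratio(a: float, b: float) -> float:
    """Return a / b; a tiny helper so each comparison reads the same way."""
    return a / b


# PaLM2 size relative to the original PaLM.
param_ratio = ratio(PALM2_PARAMS, PALM1_PARAMS)

# Training tokens seen per model parameter for PaLM2.
tokens_per_param = ratio(PALM2_TOKENS, PALM2_PARAMS)

# Assumption: takes the "five times larger" claim at face value.
implied_palm1_tokens = PALM2_TOKENS / 5

print(f"PaLM2 / PaLM parameters: {param_ratio:.2f}")
print(f"PaLM2 tokens per parameter: {tokens_per_param:.1f}")
print(f"Implied PaLM training tokens: {implied_palm1_tokens / 1e12:.2f} trillion")
```

On these numbers PaLM2 is about 63% the size of PaLM and sees roughly 10.6 training tokens per parameter, which fits the broader trend toward smaller models trained on more data.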

Salesforce AI Research is releasing CodeT5+, a family of open code Large Language Models, the largest at 16 billion parameters, for code generation and code understanding applications. They report instruction-tuned CodeT5+ 16B achieves 35.0% on the HumanEval benchmark, which is a new SoTA for open code LLMs (but not as good as GitHub Copilot).

“The platform that will win will be the open one” - Yann LeCun, Meta

AI Research News

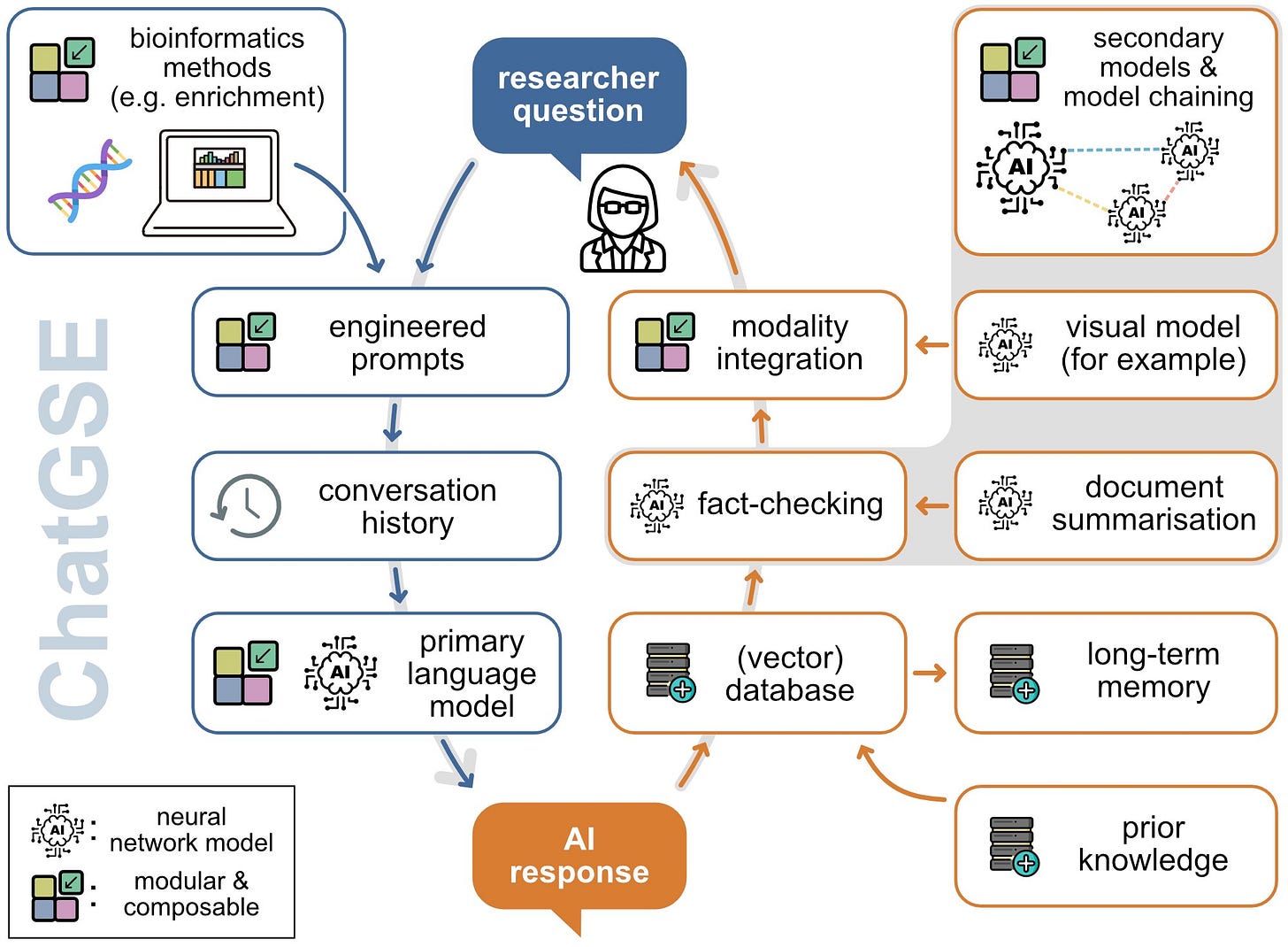

In “A Platform for the Biomedical Application of Large Language Models”, researchers from Heidelberg University in Germany have announced ChatGSE, an open and modular conversational platform to assist with biomedical analyses. “Ultimately, we aim to improve the AI-readiness of biomedicine and make LLMs more useful and trustworthy in research applications.”

A research page about a new AI image tool that's basically Photoshop on steroids is so popular the website is crashing. The research is called Drag Your GAN, an interactive approach for intuitive point-based image editing. “Our method leverages a pre-trained GAN to synthesize images that not only precisely follow user input, but also stay on the manifold of realistic images.” The method has two components:

1) a feature-based motion supervision that drives the handle point to move towards the target position, and

2) a new point tracking approach that leverages the discriminative GAN features to keep localizing the position of the handle points.
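As a rough illustration of how these two pieces could fit together, here is a toy sketch in plain Python. It is not the authors' code: it assumes that tracking is done by nearest-neighbour matching of feature vectors in a small window, and it reduces motion supervision to just the unit direction from handle to target. The names `unit_direction` and `track_point`, the L1 distance, and the nested-list feature map are all illustrative choices.

```python
import math

Point = tuple[int, int]                 # (row, col) position in the feature map
FeatureMap = list[list[list[float]]]    # height x width x channels


def unit_direction(handle: Point, target: Point) -> tuple[float, float]:
    """Direction in which motion supervision would nudge the handle point."""
    dr, dc = target[0] - handle[0], target[1] - handle[1]
    norm = math.hypot(dr, dc)
    if norm == 0:
        return (0.0, 0.0)  # handle already sits on the target
    return (dr / norm, dc / norm)


def track_point(features: FeatureMap, ref_feature: list[float],
                current: Point, radius: int) -> Point:
    """Re-locate the handle: find the position near `current` whose feature
    vector is closest (L1 distance) to the handle's original feature."""
    height, width = len(features), len(features[0])
    best, best_dist = current, math.inf
    for r in range(max(0, current[0] - radius), min(height, current[0] + radius + 1)):
        for c in range(max(0, current[1] - radius), min(width, current[1] + radius + 1)):
            dist = sum(abs(a - b) for a, b in zip(features[r][c], ref_feature))
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


# Toy example: each position's "feature" encodes its own coordinates.
original = [[[float(r), float(c)] for c in range(5)] for r in range(5)]
ref = original[2][2]

# Pretend an edit step shifted the image content down by one row.
shifted = [[[float(r - 1), float(c)] for c in range(5)] for r in range(5)]

new_handle = track_point(shifted, ref, current=(2, 2), radius=1)
direction = unit_direction(new_handle, target=(3, 4))
print(new_handle, direction)
```

In the real method the features would come from the GAN's intermediate layers and the two steps would alternate until the handle reaches the target; the sketch only shows the shape of one tracking step.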

The demo website link shows results on adjusting images of dogs, other animals, human faces, cars, etc. It is immediately compelling; it combines human direction with AI capabilities of following user intent while keeping the image realistic. This is exactly the right balance of user control and AI power. I expect this super-useful ‘Photoshop on Steroids’ AI technology will get adopted quickly. Code will be released in June.

Pre-Training to Learn in Context presents a framework to enhance language models' in-context learning ability. The method, PICL (Pre-training for In-Context Learning), encourages the model to infer and perform tasks by conditioning on the contexts while maintaining the task generalization of pre-trained models. The authors show their method outperforms larger language models with nearly 4 times the number of parameters.

This application of machine learning to gene research, which uses ML to characterize types of genetic sequences, is a great example of how ML and AI applications are accelerating science.

AI Business News

As we detailed in our prior article “Mr Altman goes to Washington,” OpenAI CEO Sam Altman told a Senate panel on Tuesday the use of artificial intelligence needs regulation. Altman in his testimony also repeated that they aren’t working on GPT-5 right now.

"We are not currently training what will be GPT-5; we don't have plans to do it in the next 6 months." – Sam Altman, May 16, 2023

Hippocratic AI raises $50 million in seed funding to build models for healthcare.

Together, the open-source AI startup behind the RedPajama open-source LLMs, announced that it has raised $20 million in seed funding to support its development of open-source generative AI models and an AI cloud platform. Together plans to continue releasing open-source models to support its mission of democratizing AI.

Government agencies are now using AI Chatbots: New Mexico uses an AI-driven chatbot to help process newborns into the Medicaid system.

Zoom To Inject Anthropic’s AI Chatbot Into Video, Collaboration Products.

‘With Claude guiding agents toward trustworthy resolutions and powering self-service for end-users, companies will be able to take customer relationships to another level.’ - Zoom’s Chief Product Officer Smita Hashim

AI Opinions and Articles

Poll shows 61% of Americans say AI threatens humanity’s future. One reaction comment was “Americans watch too many SciFi movies.” That could be it.

This op-ed is a helpful antidote to that popular doomerism: “The apocalypse isn’t coming. We must resist cynicism and fear about AI.”

The most important thing to remember about tech doomerism in general is that it’s a form of advertising, a species of hype. Remember when WeWork was going to end commercial real estate? Remember when crypto was going to lead to the abolition of central banks? Remember when the metaverse was going to end meeting people in real life?

I agree. I contend the real risks of AI are mundane and non-existential. AI might kill your job, but it won’t kill us all.

AI defines ‘ideal body type’ per social media, and unsurprisingly this “AI-defined” ideal just happens to match average human opinion: muscular men and slim, well-toned women. I have an issue with calling this “AI bias” when the AI simply reflects human opinion.

A Texas grandfather was fooled by an AI voice-cloning scam that faked his grandson’s voice, and the family lost $1,000. The dad is now warning others about AI voice scams.

A Look Back …

In the Senate hearing this week on AI, several Senators remarked on the ‘mistakes’ of Section 230 of the Communications Decency Act (CDA), which provided broad immunity to internet platforms that host third-party content. The CDA was passed in 1996 with bipartisan support. It set the tone for how the Government treated the internet, with ‘light touch’ regulation, in the hope that it would flourish. It did.

If past is prologue, then we can expect initial Federal regulation of generative AI not in this Congress, but in the next, perhaps in 2025. With the current era’s zeitgeist skeptical of Big Tech corporate power and more risk-averse, we might expect to have a heavier hand of Government for AI regulation than we saw in the CDA. Yet much is unsettled on this, as many are still grappling with what AI technology is and what it can do.