AI Week in Review 23.06.17

OpenAI releases function calling, Meta makes MusicGen, Voicebox and I-JEPA

TL;DR - OpenAI delivers function calling; GPT-4 cannot ace MIT tests; and Meta/Facebook delivers interesting new AI models for visual understanding, voice, and music, continuing a trend of pioneering in audio-visual AI models.

AI Tech and Product Releases

OpenAI announces function calling, more steerable API models, longer context, and lower prices for their GPT-3.5 and GPT-4 models.

Function-calling is an important update that opens up the ability to connect GPT models to tools and APIs through developer-defined plug-ins:

Developers can now describe functions to GPT-4 and GPT-3.5-turbo, and have the model intelligently choose to output a JSON object containing arguments to call those functions.

They also quadrupled the context window for GPT-3.5 to 16K while lowering prices: 75% off the embeddings model and 25% off GPT-3.5-turbo input tokens, so more for less. This is the most important OpenAI update since their March announcement of ChatGPT plug-ins.
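To make the function-calling flow concrete, here is a minimal sketch in Python. It makes no network call: the assistant message is simulated and is assumed to follow the shape in OpenAI's announcement (a `function_call` field holding a function name and a JSON string of arguments). The `get_current_weather` function, its schema, and the `dispatch` helper are hypothetical names chosen for illustration.

```python
import json


def get_current_weather(location: str, unit: str = "celsius") -> dict:
    """Hypothetical local function the model may ask us to call (stubbed data)."""
    return {"location": location, "temperature": 22, "unit": unit}


# Function description in JSON Schema form; a list like this would be sent
# to the model alongside the chat messages (assumed request shape).
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather for a location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]

# Registry mapping the names the model can emit to real Python callables.
REGISTRY = {"get_current_weather": get_current_weather}


def dispatch(assistant_message: dict) -> dict:
    """Run the function named in the model's reply with its JSON arguments."""
    call = assistant_message.get("function_call")
    if call is None:
        raise ValueError("model replied in plain text; nothing to call")
    name = call["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested an unknown function: {name}")
    # The arguments arrive as a JSON-encoded string, not as a dict.
    args = json.loads(call["arguments"])
    return REGISTRY[name](**args)


# Simulated assistant message (an assumption, not real API output).
simulated_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"location": "Boston", "unit": "fahrenheit"}',
    },
}

result = dispatch(simulated_message)
print(result)
```

The model never executes anything itself: it only names a function and supplies arguments, and the developer's code decides whether and how to run it. In practice the arguments should be validated before the call, since the model can produce malformed or unexpected JSON.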

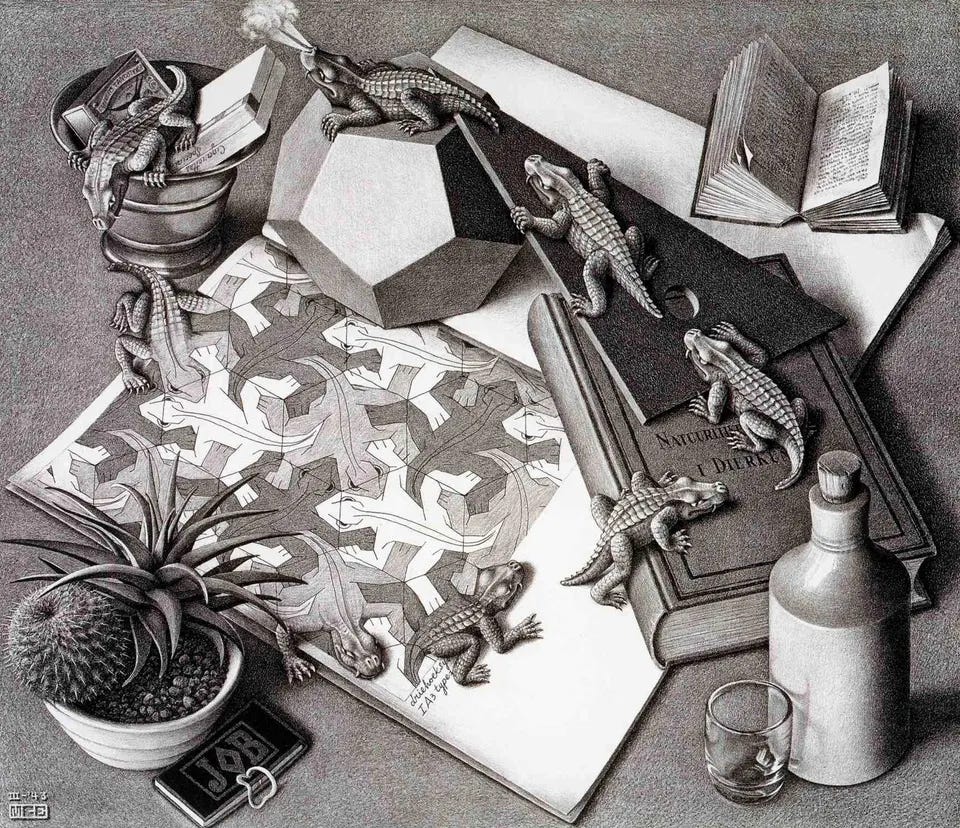

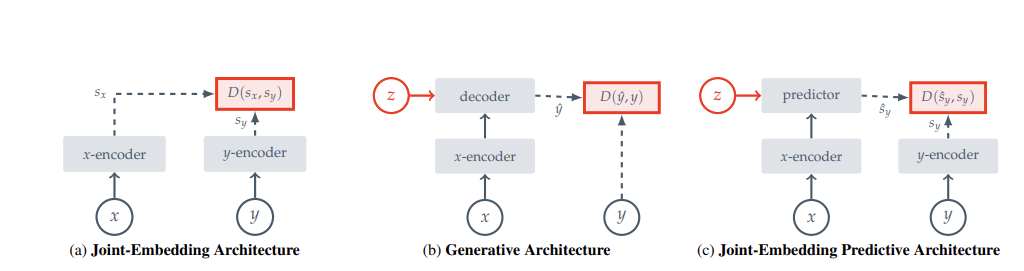

Meta releases I-JEPA, which stands for Image Joint Embedding Predictive Architecture. I-JEPA learns by creating and comparing abstract representations of images (rather than being based on the pixels themselves). The model is based on Yann LeCun’s vision of AI models learning via self-supervised observation of the world. Meta says:

I-JEPA delivers strong performance on multiple computer vision tasks, and it’s much more computationally efficient than other widely used computer vision models. The representations learned by I-JEPA can also be used for many different applications without needing extensive fine tuning.

This is a different architecture than the generative Foundation AI models, and it may lead to complementary capabilities and utility.

Meta is also releasing a new speech generation AI model called Voicebox that can help with audio editing, sampling and styling.

From BAAI (Beijing Academy of AI) comes a new Chinese/English open source LLM, called Aquila, with 7B and 33B model sizes.

WordPress is getting an AI Assistant.

AI Research News

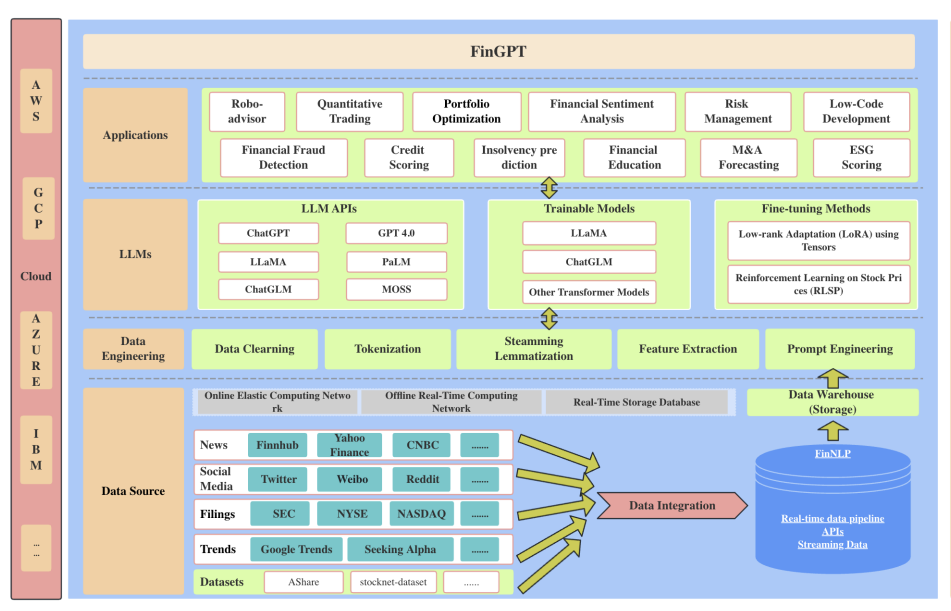

FinGPT: Open-Source Financial Large Language Models presents an approach to creating Finance-tuned LLMs. Their goal is an open platform for Finance-related AI:

“Through collaborative efforts within the open-source AI4Finance community, FinGPT aims to stimulate innovation, democratize FinLLMs, and unlock new opportunities in open finance.”

To achieve this, they collate finance-related data into NLP datasets (FinNLP) and use them to fine-tune existing LLMs with LoRA (low-rank adaptation). They also have an approach they call RLSP, Reinforcement Learning from Stock Prices.

They contrast their approach with the one used to make BloombergGPT, a custom proprietary LLM built from scratch at a cost of several million dollars in GPU compute. The open-source LoRA fine-tuning approach drastically cuts the number of parameters to train and correspondingly cuts the cost of training by several orders of magnitude.
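A rough sketch of why LoRA trains so few parameters: instead of updating a full weight matrix of shape d_out × d_in, it trains two small matrices of shapes d_out × r and r × d_in whose product is added to the frozen weights. The dimensions and rank below are illustrative assumptions, not values taken from the FinGPT paper, and the result counts trainable parameters for one matrix only, not training cost.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative sizes (assumptions): one 4096 x 4096 projection, rank 8.
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
reduction = full / lora

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"reduction: {reduction:.0f}x")
```

With these numbers the trainable parameter count for the matrix drops from 16,777,216 to 65,536, a 256x reduction. The base model's weights stay frozen, which is also what lets the approach reuse an existing open-source LLM rather than train one from scratch.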

Exploring the MIT Mathematics and EECS Curriculum Using Large Language Models presented a remarkable result of GPT-4 acing high-level college exams: “GPT-4, with prompt engineering, achieves a perfect solve rate on a test set excluding questions based on images.” By itself, with one-shot, GPT-4 got 90%, but it was able to get to 100% with chain-of-thought, self-critique and few-shot attempts.

This looked like a fantastic demonstration of high-level GPT-4 performance, but a critical review posted on Twitter concluded: No, GPT4 can’t ace MIT. The reviewers examined the dataset and found serious data problems:

we found clear signs that a significant portion of the evaluation dataset was contaminated in such a way that let the model cheat like a student who was fed the answers to a test right before taking it.

They found unsolvable problems, duplicated questions, information leakage in few-shot examples, and issues with the grading mechanism.

There’s one red flag this paper shares with other recent AI papers like it. To figure out whether the answers were right or not, the paper used GPT-4 to automatically grade model responses. This treats GPT-4 validation as a gold standard, but what if it is imperfect? Apparently, it was imperfect. This approach was mocked on Twitter.

The paper quickly generated hype and went viral on the strength of its shockingly good result, and was withdrawn just as quickly once its flawed protocols were exposed. What’s fascinating is that both the paper and the critique were written by MIT EECS undergraduates.

Our observations about the integrity of the data methods of analysis are worrying. This paper speaks to a larger trend in AI research recently. As the field progresses faster and faster, the timelines for discovery seem to shrink, which often comes with shortcuts. - MIT students Raunak Chowdhuri, Neil Deshmukh, and David Koplow.

Stanford and Cornell Researchers Introduce Tart: An Innovative Plug-and-Play Transformer Module Enhancing AI Reasoning Capabilities.

Meta also introduces MusicGen, a single language model for controllable music generation that operates over several streams of compressed discrete music representation to generate high-quality music samples. This is an open source model.

AI Business and Policy News

TechCrunch reports corporate America is making huge bets on AI transforming their businesses:

These wagers have come in many forms. Firms like PwC and Accenture have promised to spend heavily on the sector, while others are creating new AI-focused capital pools and directly investing in AI-focused startups. A large number of tech companies today are also tapping their operating cash flow to add AI to their existing products and services.

AMD introduced the AMD Instinct™ MI300 Series accelerator family, calling it the World’s Most Advanced Accelerator for Generative AI. This will compete with Nvidia’s H100, and AMD claims a 40B LLM can fit on a single MI300X accelerator.

A great AI business use case, the Notion AI Story: How to build an AI product if you are not an AI company.

The Grammy Awards ban purely AI-generated work from eligibility, saying: "A work that contains no human authorship is not eligible in any categories."

AI Opinions and Articles

I digested Marc Andreessen’s Why AI Will Save the World essay in my Case for AI Optimism post. He was cited by British Prime Minister Sunak when an AI question came up during his White House visit.

On another square of the AI Attitude quadrant, Yann LeCun says AI is not even at dog level intelligence. He hasn’t avoided Twitter snark for this position.

A Look Back …

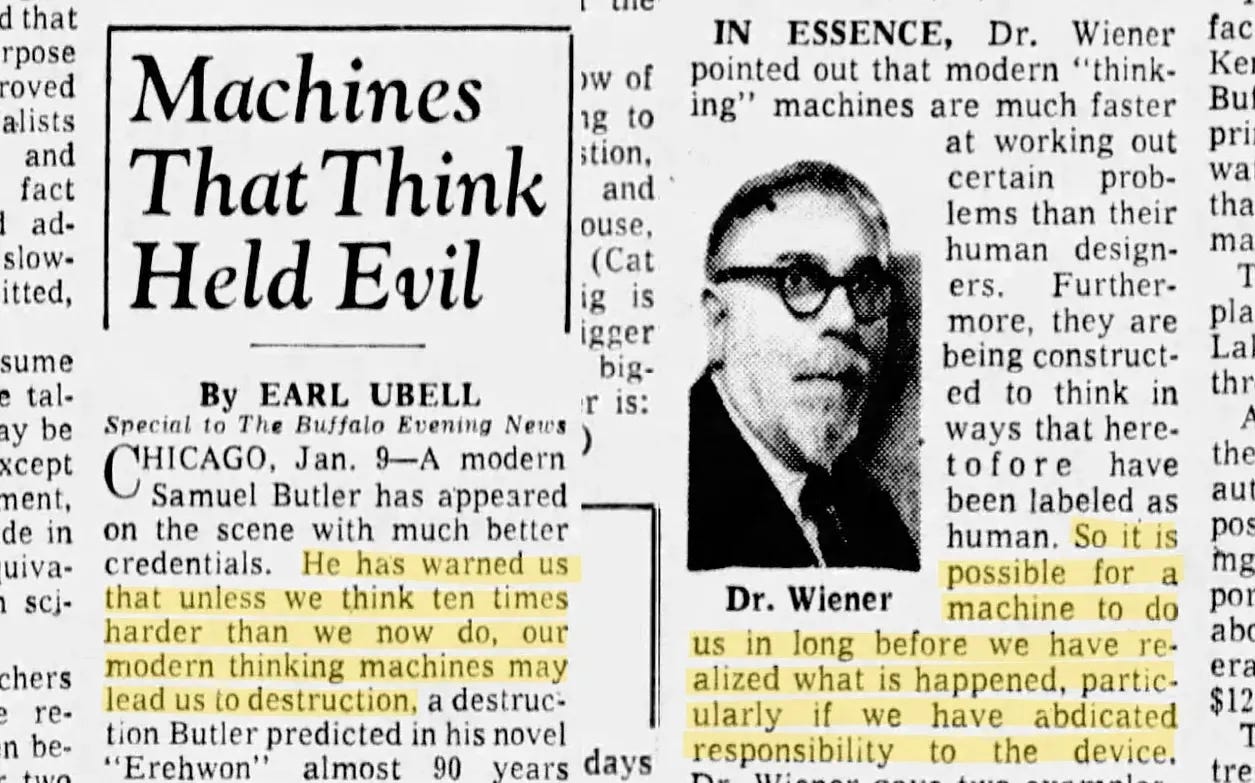

Marc Andreessen’s essay linked to the ‘Original AI Doomer,’ an article about the famous scientist Prof. Norbert Wiener, pioneer in the field of cybernetics, and his ominous warnings about AI in the 1950s and 1960s. Prof. Wiener stated, “Complete subservience and complete intelligence do not go together.” See below. I suspect that my 4-part division of AI attitudes - AI Skeptics, Doomers, Optimists and Minimal-Positivists - will persist all the way to the Singularity.

“We can be humble and live a good life with the aid of the machines, or we can be arrogant and die.” - Norbert Wiener