AI Week in Review 23.06.24

The rise of efficient AI models - Mosaic 30B, phi-1, AudioPaLM, vLLM, Dropbox AI

Top Tools

Noteable Plugin from Noteable is a ChatGPT plugin that can automate data analysis tasks. The user can effectively get ChatGPT to be the driver of the data analysis notebook, writing Python code snippets to do the analysis. Here is an example, where ChatGPT was able to invoke a random forest classifier on the dataset.

AI Tech and Product Releases

Mosaic releases a powerful open-source 30B LLM. Following the release of a 7B LLM earlier, Mosaic has “raised the bar” on open-source models. Not only is the model open-source, but the entire dataset and training process are open as well. This continues a trend of rapid iteration and innovation in smaller open-source LLMs, but ‘small’ is getting bigger.

What’s more impressive is the claim that it is better than GPT-3 on a number of evaluation benchmarks:

MPT-30B outperforms GPT-3 on the smaller set of eval metrics that are available from the original GPT-3 paper. Just about 3 years after the original publication, we are proud to surpass this famous baseline with a smaller model (17% of GPT-3 parameters) and significantly less training compute (60% of GPT-3 FLOPs).

Both training efficiency (such as with FlashAttention) and parameter efficiency (with more data on smaller models) have been improving, and this model sets a new baseline for capabilities at this model size.
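To make the 17% and 60% figures in the MosaicML quote concrete, here is a rough back-of-the-envelope sketch. None of the inputs come from this article: it assumes GPT-3 has 175B parameters trained on about 300B tokens, that MPT-30B has about 30B parameters trained on about 1T tokens, and that training compute can be approximated as 6 x parameters x tokens. Treat it as an illustration of the reasoning, not as MosaicML's own calculation.

```python
# Back-of-the-envelope check of the parameter and compute ratios.
# ASSUMPTIONS (not stated in this article): GPT-3 has 175B parameters and was
# trained on ~300B tokens; MPT-30B has ~30B parameters and was trained on ~1T
# tokens; training compute is approximated as 6 * parameters * tokens.

def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the common 6*N*D rule of thumb."""
    return 6.0 * params * tokens

GPT3 = {"params": 175e9, "tokens": 300e9}    # assumed values
MPT30B = {"params": 30e9, "tokens": 1e12}    # assumed values

def ratios(small: dict, big: dict) -> tuple:
    """Return (parameter ratio, training-compute ratio) of small vs. big."""
    param_ratio = small["params"] / big["params"]
    flops_ratio = training_flops(**small) / training_flops(**big)
    return param_ratio, flops_ratio

if __name__ == "__main__":
    p, f = ratios(MPT30B, GPT3)
    print(f"parameters: {p:.0%} of GPT-3")
    print(f"training FLOPs: {f:.0%} of GPT-3")
```

Under these assumptions the parameter ratio comes out at about 17% and the compute ratio at roughly 57%, in the same range as the 60% quoted. The point is that a model with far fewer parameters can still use a large share of the compute when it is trained on many more tokens.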

Dropbox announced a two-fer: it launched a $50M AI-focused venture fund and introduced new AI features. The AI features include Dropbox Dash and Dropbox AI:

Dropbox Dash is a “universal” search bar for your own content, whatever the source or type of file (Google, Word docs, Notion, other text files, etc.).

Dropbox AI is powered by an OpenAI model. It can generate summaries from documents, answer questions in a chatbot, and produce video previews. It can draw from the contents of research papers, contracts, meeting recordings and more.

Midjourney released version 5.2. Feature enhancements: zoom out, outpainting, inpainting, improved remix, and enhanced language processing. Zoom out is magical - credit to AKhaliq on Twitter:

Not to be outdone, Stable Diffusion gets an upgrade too: Stability AI launches SDXL 0.9: A Leap Forward in AI Image Generation.

YouTube integrates AI-powered dubbing into their service, the company announced Thursday at VidCon. This feature can dub any video into any desired other language, keeping the original speaker’s tone of voice.

MongoDB announces AI Initiative with Google Cloud for Developers: “Seamlessly use Google Cloud’s Vertex AI foundation models with MongoDB Atlas Vector Search.”

In non-release news, Meta has been unwilling to fully release its Voicebox tool due to concerns over deepfake misuse. There may be a solution, however: fingerprinting synthetic speech may support safe deployment. The idea of watermarks for generated images is also taking root, with Microsoft pledging to put watermarks into AI-generated images, and other companies working to do the same.

AI Research News

The paper Textbooks Are All You Need introduces the model phi-1, a 1.3B parameter transformer-based LLM focused on code and trained on “textbook quality” data. The model achieved very high benchmark scores: pass@1 accuracy of 50.6% on HumanEval and 55.5% on MBPP. This shows “high quality data can even improve the SOTA of large language models (LLMs), while dramatically reducing the dataset size and training compute.”

As with the Mosaic 30B model, this shows improvement in the quality and efficiency of AI models. Specifically, the only other model above 50% on HumanEval is WizardCoder, which is 10x larger and used 100x the dataset size. The likely tradeoff for this model is narrowness of capability in exchange for quality of results. It’s expert at Python coding tasks, but doesn’t have capabilities outside its specialty.
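For readers unfamiliar with the pass@1 metric quoted for phi-1, here is a minimal sketch of how pass@k is commonly estimated. It uses the unbiased estimator popularized alongside the HumanEval benchmark, which is an assumption on my part since the article does not say how phi-1 was scored. The function names and the sample counts in the example are hypothetical.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: number of code samples generated for the problem
    c: number of those samples that pass all unit tests
    k: number of attempts the metric allows
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: any k samples include a passing one.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement all fail.
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results: list, k: int) -> float:
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

if __name__ == "__main__":
    # Hypothetical run: one sample per problem on a 164-problem benchmark,
    # with 83 problems solved and 81 not solved.
    results = [(1, 1)] * 83 + [(1, 0)] * 81
    print(f"pass@1 = {benchmark_pass_at_k(results, 1):.1%}")
```

With one sample per problem, pass@1 is simply the fraction of problems solved. HumanEval has 164 problems, so a score of 50.6% would correspond to roughly 83 problems solved on the first attempt.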

Another advance in efficiency is this result, vLLM: An Open-Source LLM Inference And Serving Library That Accelerates HuggingFace Transformers By 24x. Using various optimizations, these researchers were able to vastly improve the efficiency of serving LLMs.

Researchers from Harvard introduce Inference-Time Intervention (ITI), a technique that improves truthfulness in LLMs by guiding the model’s output at inference time. On the TruthfulQA benchmark, the authors report that it raises the truthfulness of an instruction-tuned LLaMA model (Alpaca) from 32.5% to 65.1%.

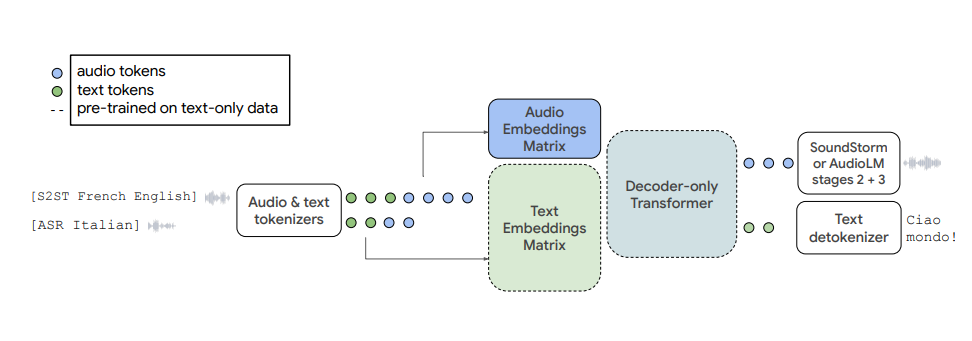

Google researchers present AudioPaLM: A Large Language Model That Can Speak and Listen. They add audio embeddings to the PaLM text LLM (a PaLM-2 8B LLM) to create a unified speech-text multi-modal model. The model handles several speech tasks: Automatic Speech Recognition (ASR), Automatic Speech Translation (AST) and Speech-to-Speech Translation (S2ST). They claim state-of-the-art results on AST and S2ST benchmarks, and competitive performance on ASR benchmarks.

The paper Efficient Neural Music Generation builds on the work of MusicLM to construct a much more efficient way for AI to generate music:

“we present MeLoDy (M for music; L for LM; D for diffusion), an LM-guided diffusion model that generates music audios of state-of-the-art quality meanwhile reducing 95.7% or 99.6% forward passes in MusicLM, respectively, for sampling 10s or 30s music. MeLoDy inherits the highest-level LM from MusicLM for semantic modeling, and applies a novel dual-path diffusion (DPD) model and an audio VAE-GAN to efficiently decode the conditioning semantic tokens into waveform.”

AI Business and Policy News

Was this headline written by an AI? The news media is starting to use generative AI: “Publisher Gannett plans to include generative artificial intelligence in the system it uses to publish stories as it and other news organizations begin to roll out the popular technology that may help save money and improve efficiency.”

New York magazine’s AI Is a Lot of Work takes a journey into the work of data annotation, done by low-paid offshore workers in Nairobi, Kenya among other places. “As AI technology becomes ubiquitous, a vast tasker underclass is emerging — and not going anywhere.”

When I read this breathless headline - Etherscan launches AI-powered Code Reader: Finance Redefined - it reminded me of the side comment on the Latent Space podcast that all the Web3 crypto startups had pivoted and got on the AI bandwagon.

Policy watch: Last week the EU Parliament moved ahead with AI regulations. The EU was first, but it is not alone: Southeast Asia nations to set 'guardrails' on AI with new governance code. “Senior Southeast Asian officials said the so-called ASEAN Guide on AI Governance and Ethics was taking shape and would try to balance the economic benefits of the technology with its many risks.”

AI Opinions and Articles

How developers can build cost-effective AI models talks about the opportunity to build and leverage more efficient open-source LLMs through ‘data-centric’ AI: “As our algorithms have progressed, and we have access to incredible open-source LLMs to train our own models, it’s time to focus on how we engineer the data to build cost-efficient models.”

Ronald Bailey argues in the Reason op-ed “Don't 'Pause' A.I. Research” that doomsayers have a history of getting it wrong, and they are wrong this time with AI as well.

Human beings are terrible at foresight—especially apocalyptic foresight. The track record of previous doomsayers is worth recalling as we contemplate warnings from critics of artificial intelligence (A.I.) research. - Ronald Bailey, Reason

Emerging Architectures for LLM Applications surveys the AI ecosystem around LLMs, similar to some of my earlier articles, to lay out the different elements of the AI “stack”.

A Look Back …

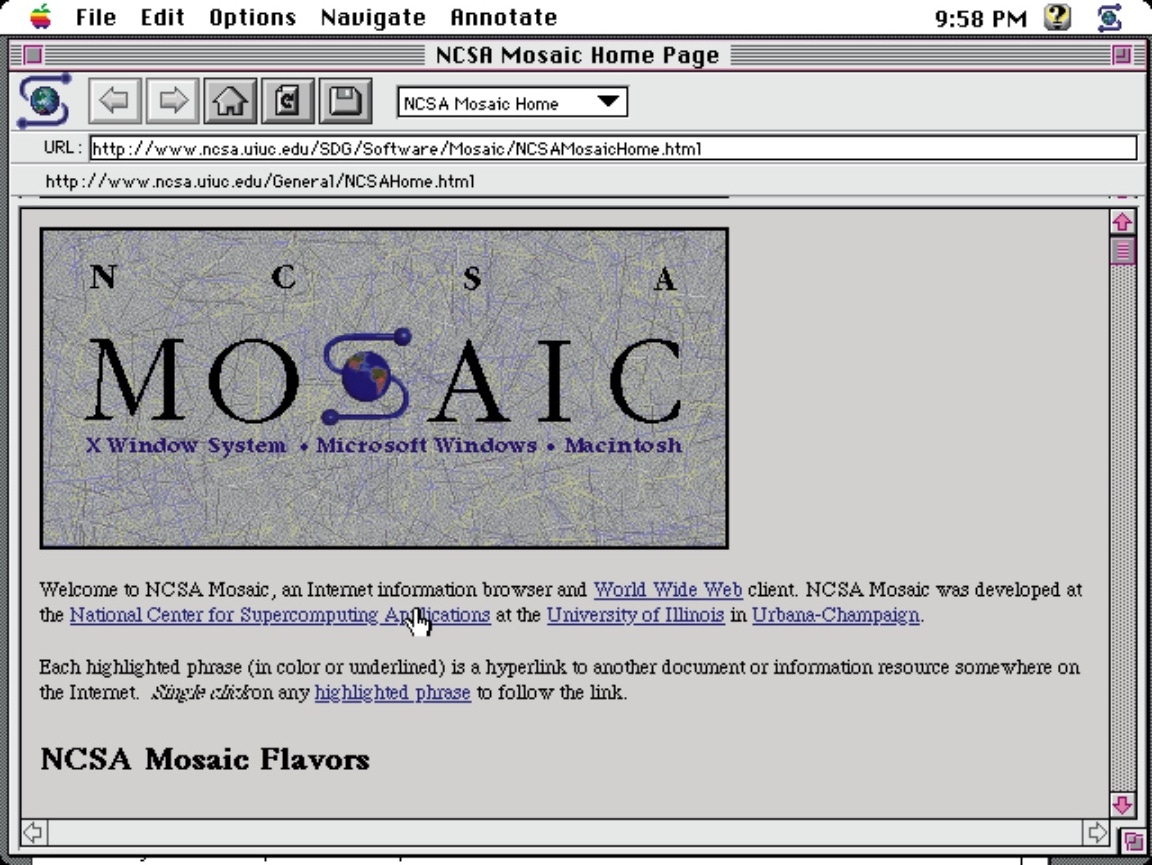

Lex Fridman interviewed VC Marc Andreessen recently on his podcast. In the interview, they spent some time discussing Marc’s pivotal role in the making of the internet. In 1993, as a University of Illinois student, Marc Andreessen coded and co-created Mosaic, the first widely used graphical web browser. The rest, as they say, is history.

The explosion in popularity of the Web was triggered by NCSA Mosaic which was a graphical browser running originally on Unix and soon ported to the Amiga and VMS platforms, and later the Apple Macintosh and Microsoft Windows platforms. Version 1.0 was released in September 1993, and was dubbed the killer application of the Internet. It was the first web browser to display images inline with the document's text.

Just as Mosaic was the killer app for the internet, ChatGPT has become the killer app for the Age of AI. It’s just the beginning …