AI Week in Review 23.08.13

Nvidia at SIGGRAPH, multi-agent MetaGPT, and DC gets active with AI

Top Tools

This list of 10 AI Tools That You Should Be Using In Your Business This Year has the usual well-known AI apps - ChatGPT, Microsoft Copilot, Google Bard/Duet, CrayonAI - but it also includes some lesser-known, useful AI tools:

Heywire.ai - Turns information from the Internet into articles or blog posts.

Eightify - Summarizes videos for you.

Temi - AI-driven transcription service for audio or video.

Feathery - AI-based professional-looking form generation.

Interview.ai - Uses AI to conduct mock interviews to help you prepare for the real thing.

Opus Clip - Breaks down long videos into shorter, digestible clips for social media.

AI Tech and Product Releases

Nvidia made a splash at SIGGRAPH, the computer graphics conference, with several generative AI announcements:

Hugging Face is adding a new service to train and fine-tune AI models using NVIDIA’s DGX Cloud.

Nvidia unveiled a major upgrade to its enterprise-level AI software, AI Enterprise 4.0.

Nvidia introduced the Nvidia AI Workbench, a local interface for developing AI models, wherever users run and train them: PC, data center, cloud, or Nvidia DGX Cloud.

“Whether you’re an independent software vendor (ISV) or a data scientist, AI Workbench is your single pane of glass. It’s a way for you to package up your AI work uniformly and consistently and move it from one location to another. So, you do your work one time, the same way, no matter where you are,” Das said.

Their keynote also showcased a new AI 3D Background Feature Developed With Shutterstock. They used Nvidia Picasso to develop a model that generates photorealistic, 8K, 360-degree high-dynamic-range imaging (HDRi) environment maps.

Nvidia launched FlexiCubes to generate improved 3D meshes from generative AI for a wide range of 3D applications. Nvidia describes the approach behind FlexiCubes mesh generation as being “to introduce additional, flexible parameters that precisely adjust the generated mesh. By updating these parameters during optimization, mesh quality is greatly improved.”

Finally, Nvidia announced more hardware, including the Grace Hopper GH200 Superchip, which will be available in Q2 2024. They also said Nvidia’s best AI chips are sold out until 2024.

StabilityAI announced StableCode, a 3B model suite designed specifically for code; the instruction-tuned 3B model scores 27 on pass@1 on the HumanEval benchmark.

Quizlet is launching AI tools for “Next-Gen Studying,” using AI to power new study experiences.
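For readers unfamiliar with the metric: pass@k is the estimated chance that at least one of k generated solutions to a problem passes its unit tests, averaged over all problems. Here is a minimal sketch of the commonly used unbiased estimator. The per-problem numbers are made up for illustration, and whether StabilityAI's evaluation used exactly this setup is an assumption.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n samples for one problem, c of which passed."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)

# Hypothetical per-problem results: (samples generated, samples that passed).
results = [(10, 3), (10, 0), (10, 10)]

# The benchmark score is the average over problems; for k=1 it reduces to c/n.
score = sum(pass_at_k(n, c, 1) for n, c in results) / len(results)
print(f"pass@1 = {score:.3f}")
```

Benchmark reports usually quote this number as a percentage.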

AI Research News

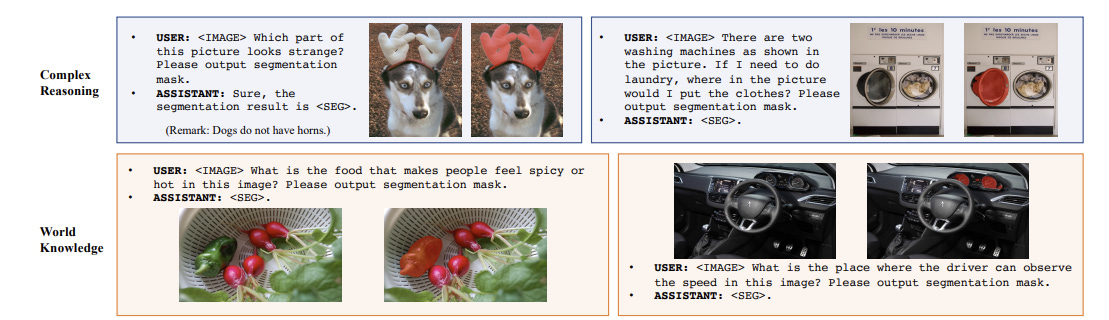

LISA: Reasoning Segmentation via Large Language Model introduces the reasoning segmentation task: to output an image mask given a complex query. It then shows how to address this task with a multi-modal AI model that inherits LLM capabilities for reasoning on text and outputs a segmented image mask.

In MetaGPT: Meta Programming for Multi-Agent Collaborative Framework, researchers developed a framework for structured coordination of multiple LLM-based agents to solve complex tasks.

Specifically, MetaGPT encodes Standardized Operating Procedures (SOPs) into prompts to enhance structured coordination. Subsequently, it mandates modular outputs, empowering agents with domain expertise comparable to human professionals, to validate outputs and minimize compounded errors. In this way, MetaGPT leverages the assembly line paradigm to assign diverse roles to various agents, thereby establishing a framework that can effectively and cohesively deconstruct complex multi-agent collaborative problems.

A new tool finds bias in a state-of-the-art generative AI model. It found that auto-generated images can propagate stereotypes, for example associating “science more closely with males and art more closely with females.” These results are unsurprising: all they show is that image generation replicates the representations it’s trained on.

How best to get LLMs to work with tools and APIs? Researchers find that Tool Documentation Enables Zero-Shot Tool-Usage with Large Language Models. This paper shows that providing tool documentation to an LLM was sufficient for eliciting proper tool usage from that LLM, achieving performance on par with few-shot prompts that gave examples of usage. So RTFM (“Read The F-ing Manual”) applies to AI.
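To make the idea concrete, here is a minimal sketch of a documentation-only (zero-shot) prompt: the model sees each tool's signature and docstring, but no worked usage examples. The `get_weather` tool, the `build_doc_prompt` helper, and the prompt wording are all hypothetical and are not taken from the paper.

```python
import inspect

def get_weather(city: str, unit: str = "celsius") -> str:
    """Return the current weather for a city.

    Args:
        city: Name of the city to look up.
        unit: Either "celsius" or "fahrenheit".
    """
    # Hypothetical stub tool, not a real weather API.
    return f"(stub) weather for {city} in {unit}"

def build_doc_prompt(tools, question):
    """Build a zero-shot prompt that describes tools by their documentation only."""
    parts = ["You can call the following tools:"]
    for tool in tools:
        signature = inspect.signature(tool)
        parts.append(f"\n{tool.__name__}{signature}\n{inspect.getdoc(tool)}")
    parts.append(f"\nQuestion: {question}\nRespond with a single tool call.")
    return "\n".join(parts)

prompt = build_doc_prompt([get_weather], "Is it warm in Lisbon today?")
print(prompt)
```

A few-shot prompt would instead append hand-written example calls; the paper's claim is that the documentation alone can be enough.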

AI Business and Policy

The data center is moving from the “Cloud Era” to the “AI Era,” and that will lead to uncertainty and shifts in data center compute demand:

AI is both an existential opportunity and a threat with unique challenges for data center capacity planning. These dynamics are likely to result in a period of increased volatility and uncertainty for the industry, and the stakes and degree of difficulty of navigating this environment are higher than ever before.

This AI story went viral: “SUPERMARKET'S MEAL-PLANNING AI SUGGESTS DEADLY POISON FOR DINNER.” To be fair, it was not the app but users jailbreaking and trolling it who suggested chlorine gas, “bleach and ammonia surprise” and “Oreo vegetable stir fry.” Still, the app was not smart or locked-down enough to reject the hacks, and it went along with making impossible recipes for this awful food. It’s a lesson in AI app security and guardrails.

More AI startup funding: London-based 11xAI closes a $2M pre-seed round to create autonomous AI workers. “It has built an AI sales development representative called Alice and plans to create James, focused on automated talent acquisition and Bob, targeting automated human resources work in the upcoming years.”

AI is getting more attention in Washington, DC:

The FEC (Federal Election Commission) voted to consider looking at campaign rules for the use of AI, and Democrats are lauding the federal agency’s AI move.

The Biden Administration announced an AI Cyber Challenge to Protect America’s Critical Software: “Several leading AI companies – Anthropic, Google, Microsoft, and OpenAI – to partner with DARPA in major competition to make software more secure.”

Meanwhile, the DoD has set up a Generative AI Taskforce, “an initiative that reflects the DoD's commitment to harnessing the power of artificial intelligence in a responsible and strategic manner.”

AI Opinions and Articles

A Wired essayist says The World Needs A New Turing Test: “The father of modern computing would have opened his arms to ChatGPT. You should too.”

It is an unfortunately common fallacy to assume that because artificial intelligence is mechanical in its construction, it must be callous, rote, single-minded, or hyperlogical in its interactions. Ironically, fear could cause us to view machine intelligence as more mechanistic than it really is, making it harder for humans and AI systems to work together and even eventually to coexist in peace. - Ben Ash Blum, Wired

A Look Back …

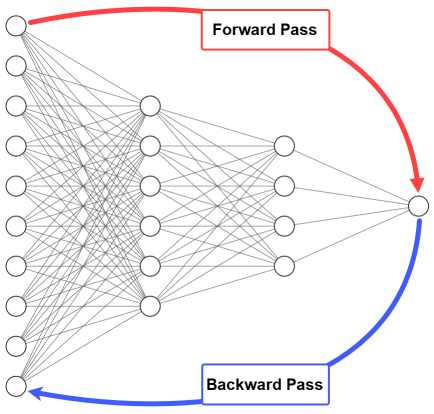

The entire deep learning and AI revolution has been built on a sequence of remarkably simple ideas. One of them is the way to update neural network weights so the neural network can be trained: Backpropagation.

This article does a deep dive on backpropagation, covering the details, math and code. What’s a high-level way to explain it? We train neural networks to minimize error by running them forward and checking how close the prediction is to the correct answer; then we retrace back through the network to see how much each layer contributed to the error and how to reduce it.

That’s the backpropagation algorithm: It updates network weights with the objective of reducing the network error. It finds a gradient by taking derivatives of the error with respect to the weights, and then adjusts the weights in the direction opposite that gradient (gradient descent) to hopefully reduce the error.
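Here is a minimal sketch of that loop in plain Python, assuming the smallest network that still needs the chain rule: one input, one tanh hidden unit, one output, and a squared-error loss. The starting weights, learning rate, and training example are arbitrary choices for illustration, not taken from the linked article.

```python
import math

def forward(w1, w2, x):
    """Run the tiny network forward: x -> tanh(w1 * x) -> w2 * h."""
    h = math.tanh(w1 * x)  # hidden activation
    y = w2 * h             # network prediction
    return h, y

def loss(y, target):
    """Squared error between prediction and target."""
    return 0.5 * (y - target) ** 2

def backward(w1, w2, x, target):
    """Apply the chain rule from the output back to each weight."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target             # derivative of the squared error
    dL_dw2 = dL_dy * h             # because y = w2 * h
    dL_dh = dL_dy * w2             # error passed back to the hidden unit
    dL_dz = dL_dh * (1.0 - h * h)  # tanh'(z) = 1 - tanh(z)^2
    dL_dw1 = dL_dz * x             # because z = w1 * x
    return dL_dw1, dL_dw2

def train(w1, w2, x, target, lr=0.1, steps=200):
    """Gradient descent: step each weight against its gradient."""
    for _ in range(steps):
        g1, g2 = backward(w1, w2, x, target)
        w1 -= lr * g1
        w2 -= lr * g2
    return w1, w2

x, target = 1.0, 0.8
w1, w2 = 0.5, 0.3
initial_loss = loss(forward(w1, w2, x)[1], target)
w1_trained, w2_trained = train(w1, w2, x, target)
final_loss = loss(forward(w1_trained, w2_trained, x)[1], target)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Real frameworks do the same thing with matrices and automatic differentiation, but the structure is identical: forward pass, backward pass, weight update.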