AI Week in Review 23.09.02

OpenAI's ChatGPT Enterprise, Google's Duet, Vertex AI and Gemini, Baidu's Ernie, Meta's FACET, LM-Infinite, and Tesla's new 10K H100 supercomputer

Top Tools

This Fast Company article promotes Pi, Woebot, and Personal.ai as ChatGPT rivals.

Pi (at pi.ai) bills itself as “personal AI” that adapts to the user it interacts with. As the article notes, “it’s a friendly chat companion you can use as a sounding board for thoughts or questions.”

AI Tech and Product Releases

OpenAI has launched ChatGPT Enterprise, offering “enterprise-grade security and privacy, unlimited higher-speed GPT-4 access, longer context windows (32k context) for processing longer inputs, advanced data analysis capabilities, customization options, and much more.” OpenAI notes that ChatGPT is already in use across 80% of Fortune 500 companies, and the Enterprise tier addresses the privacy and data-security concerns that have held back broader business adoption.

Google made a number of announcements at this week’s Google Cloud Next ’23:

Duet AI, Google’s AI-powered assistant, is now available across Google Cloud and Google Workspace, bringing AI-powered features to a whole suite of Google tools.

They expanded Vertex AI’s generative AI capabilities, adding new models including Claude 2 and Llama 2, model upgrades and extensions, and data connectors that link it to other tools and databases.

Vertex AI Search and Conversation, previously in preview, is now generally available. It provides “Google Search-quality, multi-modal, multi-turn search applications powered by foundation models, including the ability to ground outputs in enterprise data.”

An improved PaLM 2 model now supports 38 languages and a 32,000-token context window.

AI image watermarking for their Imagen image generator.

Other Google AI model progress, including improvements to their Codey code generation model and rollout of their Med-PaLM 2 model.

They announced an Nvidia partnership: A3 virtual machines running H100 GPUs for AI training.

Google also made its plans more explicit for Gemini, the flagship AI model it is building to outdo GPT-4. Key points:

It is trained on Google’s TPUv5 chips, with five times the computing power used to train GPT-4.

Its training dataset is around 65 trillion tokens, including content from YouTube, and training uses advanced techniques similar to “AlphaGo-type” methods.

It is multimodal, accepting text, video, audio, and images as input, and it can produce both text and images.

Google plans to release the Gemini model to the public in December 2023.

Google also touted its TPU v5e, which SemiAnalysis calls “The New Benchmark in Cost-Efficient Inference and Training for <200B Parameter Models.” According to SemiAnalysis, the TPU v5e (the “e” stands for efficiency) is a game changer: its smaller, cheaper, lower-power chips don’t compete one-on-one with the H100 on performance, but they offer a lower total cost of ownership. Google’s pitch is to use the TPU v5e for cheaper AI model inference.

Intel is another chip company envious of Nvidia’s margins and its share of the AI chip market. It got welcome news when Hugging Face showed “Intel Habana Gaudi Beats Nvidia's H100 in Visual-Language AI Models.”

Baidu’s Ernie chatbot was approved by the Chinese government and is now available for download.

AI Research News

Meta’s AI group wrote a post, “Evaluating the fairness of computer vision models,” and shared a new benchmark, FACET, to help do that. “FACET (FAirness in Computer Vision EvaluaTion), is a new comprehensive benchmark for evaluating the fairness of computer vision models across classification, detection, instance segmentation, and visual grounding tasks.” They used FACET to evaluate DINOv2, a vision model Meta released earlier, and also announced that DINOv2 is now open source under the Apache license.

While FACET is for research evaluation purposes only and cannot be used for training, we’re releasing the dataset and a dataset explorer with the intention that FACET can become a standard fairness evaluation benchmark for computer vision models and help researchers evaluate fairness and robustness across a more inclusive set of demographic attributes.

“LM-Infinite: Simple On-the-Fly Length Generalization for Large Language Models” is an approach to help LLMs handle inputs longer than those they were trained on. When LLMs are fed inputs longer than their training context length, they ‘lose track’ and fail to generate appropriate responses.

To solve this, researchers from Meta and the University of Illinois propose “a simple yet effective solution for on-the-fly length generalization, LM-Infinite, which involves only a Λ-shaped attention mask and a distance limit while requiring no parameter updates or learning.” Its computation cost grows only linearly with input length, which makes it quite efficient. They demonstrated that LM-Infinite generated fluent text on inputs as long as 32K tokens using LLMs trained with 4K context lengths, so it could be an effective way to extend input context windows without retraining.
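To make the Λ-shaped mask more concrete, here is a minimal Python sketch, assuming the mask lets every token attend to a few starting (“global”) tokens plus a sliding window of recent tokens, and that the distance limit works by capping the relative position fed to the positional encoding. The function and parameter names (`lambda_mask`, `capped_distance`, `n_global`, `n_local`, `max_distance`) are hypothetical illustrations, not the authors’ code.

```python
def lambda_mask(seq_len: int, n_global: int, n_local: int) -> list[list[bool]]:
    """Causal Λ-shaped mask: mask[i][j] is True if query i may attend to key j.

    Hypothetical illustration: each query sees the first n_global tokens
    (one arm of the Λ) plus its n_local most recent tokens (the other arm).
    The dense mask is built here only for readability; an efficient version
    would enumerate just the allowed keys for each query.
    """
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            causal = j <= i                # never attend to future tokens
            is_global = j < n_global       # starting tokens stay visible
            is_local = i - j < n_local     # sliding window of recent tokens
            row.append(causal and (is_global or is_local))
        mask.append(row)
    return mask


def capped_distance(i: int, j: int, max_distance: int) -> int:
    """Relative distance given to the positional encoding, capped at
    max_distance (assumed here to be the pre-training context length)."""
    return min(i - j, max_distance)


if __name__ == "__main__":
    mask = lambda_mask(seq_len=8, n_global=2, n_local=3)
    # Token 7 sees the two starting tokens plus the three most recent ones.
    print([j for j in range(8) if mask[7][j]])  # [0, 1, 5, 6, 7]
    # No query attends to more than n_global + n_local keys, so attention
    # work grows linearly with sequence length instead of quadratically.
    print(max(sum(row) for row in mask))  # 5
    print(capped_distance(7, 0, max_distance=4))  # 4
```

Because each query only ever sees a bounded number of keys at bounded distances, the model never encounters attention patterns or position values larger than what it saw during training, which is the intuition behind the “no parameter updates” claim.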

Another approach to extending input context windows, YaRN (Yet another RoPE extensioN method), was just posted to arXiv as “YaRN: Efficient Context Window Extension of Large Language Models” (not yet peer-reviewed). The authors have also open-sourced their code and released Llama 2 7B and 13B models fine-tuned with YaRN to 64K and 128K context windows.
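For a rough sense of what “RoPE extension” means, the Python sketch below rescales rotary position embedding (RoPE) frequencies along the lines of the “NTK-by-parts” interpolation that YaRN builds on: high-frequency dimensions are left alone, low-frequency dimensions are divided by the extension factor, and a linear ramp blends the two. The α = 1 and β = 32 thresholds and the 0.1·ln(s) + 1 attention factor are recalled from the paper’s Llama settings and should be treated as assumptions; this is an illustration of the idea, not the authors’ released code, and the released models were also fine-tuned.

```python
import math


def yarn_frequencies(dim: int, base: float, scale: float, orig_ctx: int,
                     alpha: float = 1.0, beta: float = 32.0) -> list[float]:
    """Rescale RoPE frequencies for a context `scale` times longer than orig_ctx.

    Sketch of NTK-by-parts interpolation (assumed defaults alpha=1, beta=32).
    """
    freqs = []
    for k in range(0, dim, 2):
        theta = base ** (-k / dim)        # standard RoPE frequency for this pair
        wavelength = 2 * math.pi / theta
        ratio = orig_ctx / wavelength     # full rotations within the original context
        if ratio < alpha:
            gamma = 0.0                   # long wavelength: fully interpolate
        elif ratio > beta:
            gamma = 1.0                   # short wavelength: keep unchanged
        else:
            gamma = (ratio - alpha) / (beta - alpha)  # linear blend in between
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    return freqs


def yarn_attention_factor(scale: float) -> float:
    """Assumed YaRN factor sqrt(1/t): queries and keys are both multiplied by it,
    so attention logits are scaled by its square."""
    return 0.1 * math.log(scale) + 1.0


if __name__ == "__main__":
    # Example: stretching a Llama 2-style 4K context (head dim 128, base 10000) to 64K.
    f = yarn_frequencies(dim=128, base=10000.0, scale=16.0, orig_ctx=4096)
    print(len(f), f[0], f[-1])
    print(yarn_attention_factor(16.0))
```

Leaving the fast-rotating dimensions untouched preserves the fine-grained local position information the model learned, while only the slowly rotating dimensions are stretched to cover the longer window.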

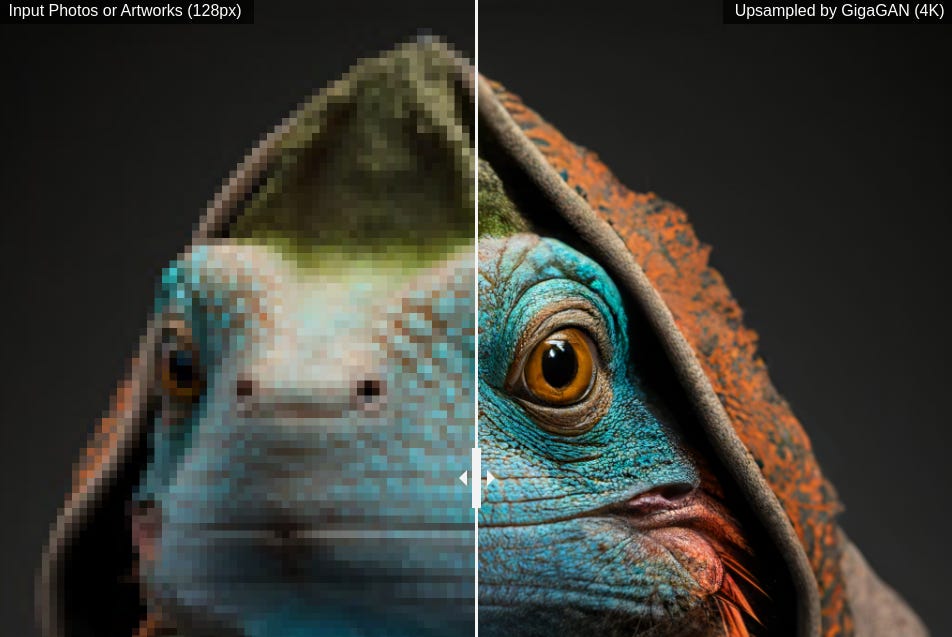

Two Minute Papers did a good review of GigaGAN, presented as “Scaling up GANs for Text-to-Image Synthesis” at CVPR 2023. GANs (generative adversarial networks) were the leading approach to AI image generation before being superseded by diffusion models, which achieve higher quality and fidelity at the expense of slower generation. GigaGAN scales up the GAN architecture to bring its quality close to Stable Diffusion’s while generating images much faster:

“It generates 512px outputs at 0.13s, orders of magnitude faster than diffusion and autoregressive models, and inherits the disentangled, continuous, and controllable latent space of GANs. We also train a fast up-sampler that can generate 4K images from the low-res outputs of text-to-image models.”

The upsampler, which can turn a tiny 128px image into a 4K one, is really impressive. It would likely be widely adopted if the team released its model; meanwhile, there is an unofficial implementation of GigaGAN on GitHub.

AI Business and Policy

In addition to Google announcing its A3 H100-based supercomputer, other big companies are announcing beefy AI computing. Tesla just brought online a $300 million AI supercomputer built from 10,000 H100 GPUs. With “340 FP64 PFLOPS for technical computing and 39.58 INT8 ExaFLOPS for AI applications,” it is one of the most powerful supercomputers in the world. It will be used for Tesla’s full self-driving (FSD) technology.
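Dividing the headline figures by the GPU count is a quick sanity check on them. The short Python sketch below does only that arithmetic. The per-GPU results appear to line up with NVIDIA’s published H100 SXM peaks (34 TFLOPS FP64 and 3,958 INT8 TOPS with sparsity), which suggests the cluster numbers are simply 10,000 times the datasheet peaks; that is an inference, not something Tesla stated.

```python
NUM_GPUS = 10_000
FP64_PFLOPS = 340          # reported cluster FP64 throughput
INT8_EXAOPS = 39.58        # reported cluster INT8 throughput
COST_USD = 300_000_000     # reported price tag

# 1 PFLOPS = 1,000 TFLOPS; 1 exa-op/s = 1,000,000 tera-ops/s
fp64_tflops_per_gpu = FP64_PFLOPS * 1_000 / NUM_GPUS
int8_tops_per_gpu = INT8_EXAOPS * 1_000_000 / NUM_GPUS
cost_per_gpu = COST_USD / NUM_GPUS  # spreads the whole price evenly across GPUs

print(f"FP64 per GPU: {fp64_tflops_per_gpu:.0f} TFLOPS")   # FP64 per GPU: 34 TFLOPS
print(f"INT8 per GPU: {int8_tops_per_gpu:,.0f} TOPS")       # INT8 per GPU: 3,958 TOPS
print(f"Cost per GPU: ${cost_per_gpu:,.0f}")                # Cost per GPU: $30,000
```

The $300 million presumably covers networking, storage, and facilities as well as the GPUs, so the per-GPU cost is an all-in average rather than a chip price.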

Speaking of Tesla’s FSD, Elon Musk live-tweeted a test drive of Tesla’s FSD v12 beta. Only one ‘intervention’, to avoid running a red light, was needed on the 30-minute drive. Elon quipped, “That’s why we’ve not released this to the public yet.”

It might not be long before we get self-driving cars and self-flying aircraft. AI has now conquered drone racing: for the first time, a high-speed AI drone has beaten world-champion human racers.

The US Air Force has budgeted $5.8 billion for highly advanced autonomous AI drones, meaning they won’t need human remote pilots, with interoperability and “lethality enhancement” also on the list. Skynet, anyone?

Israeli AI startup AI21 Labs is now valued at $1.4 billion after its latest funding round, which raised $155 million from Google, Nvidia and others and brought its total funding to $283 million.

The VC firm a16z announced a grant program to support the open-source AI community. Its goal is to give open-source AI developers the resources they need to push AI technology forward.

There have been reports of OpenAI seeing lower adoption this summer, and of inference alone costing it $700,000 a day. But a new report suggests OpenAI is earning $80 million a month, roughly a $1 billion annual run rate that could plug its losses.
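The two reported figures can be put on the same annual footing with back-of-the-envelope arithmetic. This Python sketch simply annualizes them; it ignores training, staff, and every other cost, so it is not a profitability estimate.

```python
MONTHLY_REVENUE_USD = 80_000_000   # reported revenue per month
DAILY_INFERENCE_USD = 700_000      # reported inference cost per day

annual_run_rate = MONTHLY_REVENUE_USD * 12
annual_inference_cost = DAILY_INFERENCE_USD * 365

print(f"Annual revenue run rate: ${annual_run_rate:,}")        # $960,000,000
print(f"Annual inference cost:   ${annual_inference_cost:,}")  # $255,500,000
ratio = annual_run_rate / annual_inference_cost
print(f"Revenue / inference cost: {ratio:.1f}x")               # 3.8x
```

So the “billion a year” run rate is really about $960 million, and on these numbers revenue covers the reported inference bill several times over.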

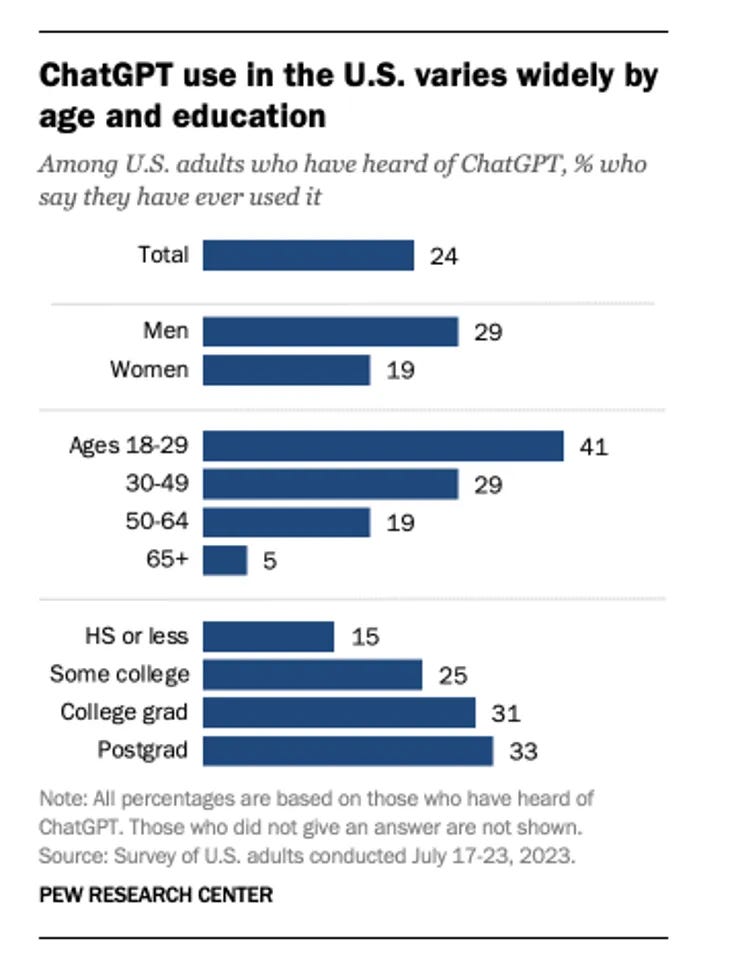

A Pew Research poll found that only 18% of Americans have ever used ChatGPT, with usage much higher among younger and more educated adults than among older and less educated ones.

AI Opinions and Articles

AI goes to school: OpenAI has published a guide, “Teaching with AI,” on using ChatGPT in the classroom. Unsurprisingly, the guide doesn’t recommend banning ChatGPT from the classroom.

Mustafa Suleyman, co-founder of DeepMind and now CEO of Inflection AI, shares his thoughts in TIME on “How the AI Revolution Will Reshape the World”:

If the last great tech wave—computers and the internet—was about broadcasting information, this new wave is all about doing. We are facing a step change in what’s possible for individual people to do, and at a previously unthinkable pace. AI is becoming more powerful and radically cheaper by the month—what was computationally impossible, or would cost tens of millions of dollars a few years ago, is now widespread. -Mustafa Suleyman

A Look Back …

The birth of Linux was this week in 1991:

Linus Torvalds posts a message to the Internet newsgroup comp.os.minix with the subject line “What would you like to see most in minix?” This is the first announcement that he is working on an operating system that will one day become Linux.