AI Week in Review 23.09.09

SunoAI Chirp V1, Falcon 180B open LLM, Claude Pro, pro-AI artists speak out, AI generated song seeks Grammy

Top Tools

This announcement is worth a try: SunoAI announced Chirp v1 on X (formerly Twitter), a text-to-music generator: “Choose the style/genre of your music; 50+ languages now supported; Control song structure with tags like [verse] and [chorus].” It’s free to try out on Discord.

AI Tech and Product Releases

Announced on HuggingFace, TII has released Falcon 180B, a 180 billion parameter open access LLM. It was trained on 3.5 trillion tokens of data, mainly from RefinedWeb, using 4 times the compute of Llama 2 70B. Falcon 180B has performance close to PaLM2 large, outperforms GPT-3.5 and Llama2 70B on MMLU, and scores 68.74 on HuggingFace’s Open LLM Leaderboard, making it a leading open LLM.
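As a rough sanity check on the "4 times the compute" figure, here is a minimal sketch using the common 6 × parameters × tokens rule of thumb for training FLOPs. The rule is an approximation, and the 2 trillion token count for Llama 2 70B comes from Meta's Llama 2 paper rather than from the announcement above; the function name is just for illustration.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the 6 * N * D rule of thumb."""
    return 6.0 * params * tokens


# Falcon 180B: 180B parameters, 3.5T training tokens (from the announcement).
falcon_180b = training_flops(180e9, 3.5e12)

# Llama 2 70B: 70B parameters, 2T training tokens (assumption taken from
# Meta's Llama 2 paper, not from the Falcon announcement).
llama2_70b = training_flops(70e9, 2.0e12)

ratio = falcon_180b / llama2_70b
print(f"Falcon 180B: {falcon_180b:.2e} FLOPs")
print(f"Llama 2 70B: {llama2_70b:.2e} FLOPs")
print(f"Ratio: {ratio:.1f}x")
```

Under these assumptions the estimate comes out to about 4.5x, which is in the same ballpark as the roughly 4x figure quoted.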

Anthropic has introduced Claude Pro, an upgraded plan that offers more usage of Claude 2. It also promises priority access to Claude.ai and early access to new features.

Zoom is rebranding its existing generative AI features and introducing new ones, rolling out “AI Companion,” a renamed and expanded version of Zoom IQ. AI Companion includes Zoom’s in-house generative AI and will be extended across Zoom’s tools; next spring, users will get a conversational AI interface to chat directly with AI Companion.

OpenAI adds Canva as a ChatGPT plugin. Calling Canva directly from ChatGPT lets content creators produce visual content without leaving the chat.

X, formerly known as Twitter, has given itself permission to train AI on data produced on its platform in its updated terms of service. This has given rise to speculation that X data may end up being used for AI development by Elon Musk’s X.ai.

NVIDIA has announced an improved software stack that doubles LLM inference performance: NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs. “H100 alone is 4x faster than A100. Adding TensorRT-LLM and its benefits, including in-flight batching, result in an 8X total increase to deliver the highest throughput.”

AI Research News

Researchers in Beijing, China have shared an AI model, FLM-101B, on Hugging Face along with the paper “FLM-101B: An Open LLM and How to Train It with $100K Budget.” They train the AI model at smaller sizes, then grow the model over the pre-training run, to drastically cut the cost of training while still achieving decent benchmark results. This appears to be a highly useful technique to improve LLM training efficiency.

Another hurdle to more efficient AI model training is the cost of RLHF. In “Efficient RLHF: Reducing the Memory Usage of PPO,” Microsoft researchers developed Hydra-RLHF to reduce the memory footprint and improve efficiency in the RL stage of training.

A search-engine based alternative to vector stores is presented in “Vector Search with OpenAI Embeddings: Lucene Is All You Need.”

We provide a reproducible, end-to-end demonstration of vector search with OpenAI embeddings using Lucene on the popular MS MARCO passage ranking test collection. … there does not appear to be a compelling reason to introduce a dedicated vector store into a modern "AI stack" for search, since such applications have already received substantial investments in existing, widely deployed infrastructure.
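To make concrete what "vector search" means here, the following is a minimal sketch of ranking passages by cosine similarity to a query embedding. It is a brute-force toy in plain Python, not the paper's setup: Lucene uses HNSW indexes for approximate search, real OpenAI embeddings have far more dimensions, and the passage IDs and vectors below are made up for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, passages, k=2):
    """Return the IDs of the k passages most similar to the query."""
    scored = [(cosine(query, vec), pid) for pid, vec in passages.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [pid for _, pid in scored[:k]]


# Hypothetical 3-dimensional "embeddings" standing in for real ones.
passages = {
    "p1": [1.0, 0.0, 0.0],
    "p2": [0.9, 0.1, 0.0],
    "p3": [0.0, 1.0, 0.0],
}
query = [1.0, 0.0, 0.0]

print(top_k(query, passages))
```

A dedicated vector store and a search library like Lucene both answer this same nearest-neighbor question; the paper's argument is about which infrastructure to run it on.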

AI Business and Policy

Many chip companies want to take on Nvidia in the booming AI chip market. Now, Microsoft-backed AI chip startup d-Matrix claims that its Corsair C8, a GPU-like card equipped with 256GB RAM, beats the Nvidia H100 on key tests.

According to The Information, Apple has been expanding its computing budget for building artificial intelligence to millions of dollars a day, on a path to building Apple’s Ajax AI model.

Google will soon require disclaimers for AI-generated political ads:

The labels will need to state things like, “This audio was computer generated,” or “This image does not depict real events.” Any “inconsequential” tweaks, such as brightening an image, background edits, or removing red eye with AI, won’t require a label.

Pentagon looking to develop 'fleet' of AI drones, systems to combat China:

“The Pentagon believes AI can help it handle its 'pacing challenge' with China … the department would spend hundreds of millions of dollars on the project, aiming to produce thousands of systems for use over land, air and sea ready for first deployment within two years.”

AI is getting too good: OpenAI confirms that AI writing detectors don’t work. I don’t envy English teachers right now.

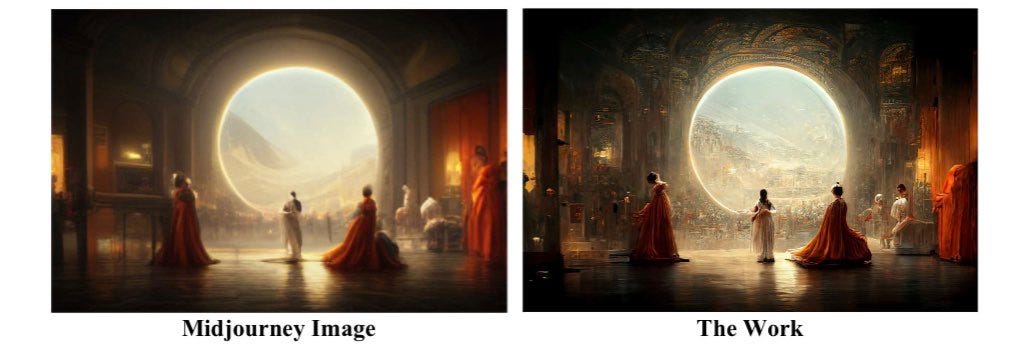

A case of AI-derived art being denied copyright: The U.S. Copyright Office denied copyright for Jason Allen’s Midjourney-derived work, which “failed to meet the de minimis standard” of AI content.

This example points to how derivative works are generative AI’s poison pill, as a recent TechCrunch article puts it.

While “derivative works” have specific legal treatment under copyright law, there are few precedents for laws or regulations addressing data derivatives, which are, thanks to open source LLMs, about to get a lot more prevalent. …

An upstream problem, like a poison pill, spreads contagion down the derivative chain, expanding the scope of any claim as we get closer to real legal challenges over IP in LLMs.

As if to put an exclamation point on the urgency of unanswered IP questions around AI-generated art and music, the “Ghostwriter” of the fake AI Drake song is back. He released a new track featuring the voices of Travis Scott and 21 Savage and is looking for a Grammy. He wrote a note to the artists his audio track mimicked:

“The future of music is here. Artists now have the ability to let their voice work for them without lifting a finger. It’s clear that people want this song. DM me on Instagram if you’re interested in allowing me to release this record or if you’d like me to remove this post.” - Ghostwriter’s message to artists Travis Scott and 21 Savage

Not all artists are opposed to Generative AI, and those using AI wrote an appeal: Artists Using Generative AI Demand Seat at Table from US Congress:

We are speaking out today to advocate for a future of richer and more accessible creative innovation for generations of artists to come. Artists breathe life into AI, directing its innovation towards positive cultural evolution while expanding the essential human dimensions it inherently lacks.

AI Opinions and Articles

TIME has developed their list of the 100 most influential people in AI. It lists many of the obvious important people in AI, such as Demis Hassabis (DeepMind), Sam Altman (OpenAI), Jensen Huang (Nvidia), Yann LeCun (Meta AI), Geoff Hinton and Ilya Sutskever, and some of the lesser known CEOs, innovators and thought leaders.

But it misses the boat by highlighting users of AI and talkers about AI, while not mentioning many of the actual builders of AI. For example, it has no mention of many people building key open source models, nor of the authors of the “Attention is all you need” paper that got us to where we are with Large Language Models.

One name on the list is Elon Musk, who is also the subject of a TIME biographical essay, Elon Musk's Struggle for the Future of AI. It offers fascinating back stories on DeepMind, OpenAI and Musk’s own AI dreams.

Fast Company interviews Yann LeCun and explains “Why Meta’s Yann LeCun isn’t buying the AI doomer narrative.” He gives this analogy to explain his view on AI safety:

“You can have a car that rides three miles an hour and crashes often, which is what we currently have,” he says, describing the latest generation of large language models. “Or you can have a car that goes faster, so it’s scarier . . . but it’s got brakes and seatbelts and airbags and an emergency braking system that detects obstacles, so in many ways, it’s safer.” - Yann LeCun

A Look Back …

An AI Summit hosted by the UK’s Prime Minister will be held at an esteemed location: “Sunak chooses Bletchley Park to host AI summit in November.”

During World War Two, Great Britain had their best brains help the war effort by breaking the German Enigma code, and Bletchley Park was where they did it.

This History article describes how Project Ultra at Bletchley Park cracked the Enigma code during World War Two and helped the Allies win the war. A very detailed Wikipedia entry on the cracking of the Enigma code describes the work of the code-crackers, including the contribution of Alan Turing in making the “bombe”, an electro-mechanical computer that simulated the German Enigma machine.

IT WAS THANKS TO ULTRA THAT WE WON THE WAR.

- CHURCHILL TO KING GEORGE VI