AI Week In Review 23.10.14

Adobe's Firefly for Illustrator, Show-1 text-to-video, CharacterAI Group Chat, Disney's robot, Meta Chain-of-Verification (CoVe), Nvidia's roadmap update and SteerLM

Top Tools

We mentioned the LLaVA project last week, an AI model that combines a vision encoder with the Vicuna LLM to handle image interpretation tasks, like GPT-4V. You can freely access their open multi-modal LLaVA model here.

AI Tech and Product Releases

The future is Firefly, says Adobe. Adobe announced the launch of the Firefly Vector Model for Illustrator at Adobe’s MAX conference this week, calling it “the world’s first generative AI model focused on producing vector graphics.” Firefly for Illustrator will create entire vector graphics scenes from a text prompt. Adobe also announced further improvements to Firefly with Generative Match and demonstrated their Project Stardust AI-based photo editing capabilities.

But Adobe’s “most insane reveal” was the new Generative Fill for video. We now have a whole different way to make and edit video. Another mind-blowing demo was the Project Primrose dress that changes colors on command.

Google announced new generative AI features in Search, including the ability to create images with image generation AI, and previewed “an upcoming tool called About this image that will help people easily assess the context and credibility of images.” They are also adding text generation for “written drafts” to the search experience, the idea being that it can help when you use search for research.

CharacterAI announced Character Group Chat, which lets you chat with human users and AI bots in the same group chat.

OpenAI will reduce their prices and open up new features for developers next month at their upcoming developer conference. This is part of a move by OpenAI to make their offerings more appealing to developers and extend their AI footprint beyond ChatGPT. These features include:

Stateful API that will remember the conversation history of inquiries. This could dramatically cut the cost of using OpenAI’s API in some use cases.

Vision API that can analyze images, as GPT-4V does now.
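
To see why a stateful API could cut costs, consider a chat where every request must resend the whole conversation so far. The sketch below is a back-of-the-envelope illustration only: the function names and token counts are hypothetical, it ignores the model's own replies, and it assumes a stateful API would bill only for the new tokens in each turn, which OpenAI has not confirmed.

```python
def stateless_input_tokens(turn_tokens):
    """Input tokens sent when each request resends the full history so far."""
    total = 0
    history = 0
    for tokens in turn_tokens:
        history += tokens   # the conversation grows by this turn
        total += history    # the whole history is sent again
    return total


def stateful_input_tokens(turn_tokens):
    """Input tokens sent if the server remembers history (assumed billing model)."""
    return sum(turn_tokens)


# Hypothetical conversation: ten user turns of 100 tokens each.
turns = [100] * 10
print(stateless_input_tokens(turns), stateful_input_tokens(turns))
```

Under these assumptions the stateless total grows roughly with the square of the number of turns, while the stateful total grows linearly.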

Meanwhile, “chat with images” and DALL-E 3 have shown up in OpenAI’s ChatGPT interface, so users have access to the new GPT-4V vision capabilities. Have at it!

Eleven Labs voice dubbing is now open for its users. You can localize content across 29 languages with voice translation and audio dubbing that keeps the accent and inflections of the original speaker.

Disney Research presented a new AI-based robotic character at the recent 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). This biped robot navigates and also exhibits behaviors that convey emotion, something like the robot in the Wall-E movie. They used reinforcement learning to produce emotive performances that stay within the robot’s physical limits.

AI Research News

Show-1 is a new text-to-video generation contender out of the National University of Singapore. It uses a hybrid approach that combines latent and pixel VDMs (video diffusion models) to outperform current alternatives such as Runway Gen-2. They have a Show-1 project page and paper “Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation,” and they explain:

Our model first uses pixel-based VDMs to produce a low-resolution video of strong text-video correlation. After that, we propose a novel expert translation method that employs the latent-based VDMs to further upsample the low-resolution video to high resolution. Compared to latent VDMs, Show-1 can produce high-quality videos of precise text-video alignment; Compared to pixel VDMs, Show-1 is much more efficient (GPU memory usage during inference is 15G vs 72G).

Anthropic shared research that shows great progress in interpretability of LLMs, in a posted paper called Towards Monosemanticity: Decomposing Language Models With Dictionary Learning. They described the result on X:

The fact that most individual neurons are uninterpretable presents a serious roadblock to a mechanistic understanding of language models. We demonstrate a method for decomposing groups of neurons into interpretable features with the potential to move past that roadblock.

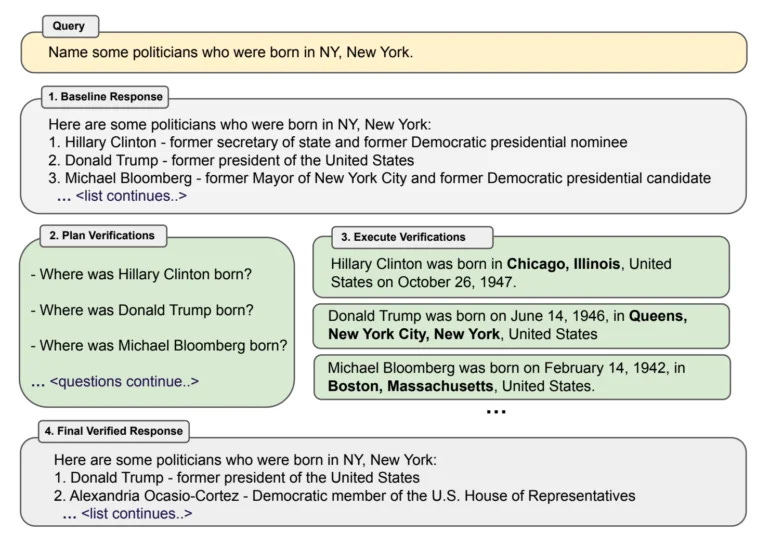

Researchers at Meta AI show how to reduce hallucinations in ChatGPT & Co with prompt engineering. Their paper presents Chain-of-Verification (CoVe), a prompt-based method that reduces LLM hallucinations by having the model generate and answer verification questions about its own draft. They show that Llama 65B with CoVe yielded large improvements in factuality and fewer errors, enough to outperform larger models such as ChatGPT.
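
The CoVe loop can be sketched in a few lines: draft an answer, plan verification questions, answer each question independently of the draft, then revise. This is a minimal sketch, not the paper's code. The `llm` argument is a hypothetical callable that takes a prompt string and returns text, and the prompt wording is an assumption for illustration.

```python
from typing import Callable, List, Tuple


def chain_of_verification(query: str, llm: Callable[[str], str]) -> str:
    """Draft, plan checks, answer checks independently, then revise."""
    # 1. Baseline draft (may contain hallucinations).
    draft = llm(f"Answer the question.\nQuestion: {query}")

    # 2. Plan verification questions, one per line (prompt wording is assumed).
    plan = llm(
        "List verification questions, one per line, that fact-check this answer.\n"
        f"Question: {query}\nAnswer: {draft}"
    )
    questions: List[str] = [q.strip() for q in plan.splitlines() if q.strip()]

    # 3. Answer each question on its own, without showing the draft,
    #    so errors in the draft are not simply repeated.
    checks: List[Tuple[str, str]] = [
        (q, llm(f"Answer concisely.\nQuestion: {q}")) for q in questions
    ]

    # 4. Produce the final answer, revised against the verified facts.
    evidence = "\n".join(f"Q: {q}\nA: {a}" for q, a in checks)
    return llm(
        "Revise the draft so it agrees with the verified facts.\n"
        f"Question: {query}\nDraft: {draft}\nVerified facts:\n{evidence}"
    )
```

Any chat model client can be wrapped to fit the `llm` signature. The cost is several extra model calls per query, one for each verification question plus the planning and revision steps.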

Nvidia’s NeMo SteerLM Lets Companies Customize a Model’s Responses During Inference:

With SteerLM, users define all the attributes they want and embed them in a single model. Then they can choose the combination they need for a given use case while the model is running.

For example, a custom model can now be tuned during inference to the unique needs of, say, an accounting, sales or engineering department or a vertical market.

More technical details on SteerLM are available in “Announcing SteerLM: A Simple and Practical Technique to Customize LLMs During Inference.” The key idea is to use supervised fine-tuning to train the AI model over a range of attributes (humor, creativity, helpfulness, for example) then enable the user to specify the desired attributes at inference time when querying the models. This new technique to tune LLMs more simply and quickly could become a powerful addition to AI model customization.
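
To make the idea concrete, here is a minimal sketch of attribute-conditioned prompting. It is not Nvidia's NeMo API or its actual prompt template: the tag format, function names, and attribute scale are all hypothetical. The point is only that the same attribute string is attached to training examples during supervised fine-tuning and then chosen by the user at inference time.

```python
from typing import Dict


def attribute_tag(attributes: Dict[str, int]) -> str:
    """Serialize attributes in a stable order (hypothetical format)."""
    return ",".join(f"{name}:{value}" for name, value in sorted(attributes.items()))


def build_prompt(user_text: str, attributes: Dict[str, int]) -> str:
    """Prompt sent at inference time, with the desired attributes attached."""
    tag = attribute_tag(attributes)
    return f"<attributes>{tag}</attributes>\nUser: {user_text}\nAssistant:"


def build_training_example(user_text: str, response: str,
                           attributes: Dict[str, int]) -> str:
    """Fine-tuning text: the same prompt followed by a response labeled with those attributes."""
    return build_prompt(user_text, attributes) + " " + response


# Same model, two different behaviors requested at inference time.
print(build_prompt("Explain depreciation.", {"humor": 0, "helpfulness": 4}))
print(build_prompt("Explain depreciation.", {"humor": 4, "helpfulness": 4}))
```

Because the attributes live in the prompt rather than in separate model weights, switching behavior is just a matter of changing the values passed in.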

AI Business and Policy

AI chip stories in 2023 have a common theme: Huge demand and shortages for Nvidia chips; competitors and customers alike ramping up alternatives. Not content to let their dominance slip, Nvidia shared an updated 2024 and 2025 roadmap that shows an accelerated chip release cadence. They plan to release H200, a refresh of the current Hopper H100, in 2024, then transition to the Blackwell generation later in 2024 with B100. They will release another architecture X100 in 2025. It’s as if Nvidia is telling the other players “Catch me if you can.”

Nvidia will also reportedly release an RTX 4080 Super or RTX 4080 Ti in early 2024 at the RTX 4080 price point, to fix the pricing of their high-end graphics cards.

Samsung is adding AI capabilities to their next-generation mobile processor, the Exynos 2400, touting “AI performance that is 14.7 times better than those of its predecessor.”

The startup Numenta has helped Intel trounce AMD and Nvidia in critical AI tests. Numenta applies a novel approach that slims AI models down to be sparse, then abstracts away the sparse matrix operations so they run efficiently on CPUs and GPUs. This improves AI model performance on a CPU by up to 100x.

The story “How generative AI is creeping into EV battery development” describes the startup Aionics, which is using generative AI to accelerate discovery of the right materials for batteries. AI can generate and analyze candidate molecules and materials more rapidly. This use of AI could accelerate all areas of research, making it one of the more profound applications of AI.

Sam Altman backs teens’ AI startup automating browser-native workflows. The AI-powered RPA startup Induced AI is building an AI-driven, browser-based workflow automation tool:

The eponymous platform spins up Chromium-based browser instances, and uses its tech to read on-screen content and control the browser similarly to a human in order to complete various steps of a workflow. This allows the browser instances to interact with websites even if they don’t have an API, Aryan Sharma, Induced AI co-founder and chief executive, showed in a demo.

“We’ve purpose-built a browser environment on top of Chromium that’s designed for autonomous workflow runs. It has its own memory, file system, and authentication credentials (email, phone number) to do complex flows. As far as I know, we’re the first to take this approach of redesigning the browser for native AI agent use. So complex logins, 2FA (we auto fill in auth codes/SMSs), file downloads, storing and re-using data is possible that other autonomous agents can’t do,” said Sharma.

Zapier is the ‘old school’ way of doing this. AI-centric flows are the new way. This can fundamentally change workflows.

SAG-AFTRA Endorses 'No Fakes Act' Discussion Draft Presented to the US Senate.

According to Deadline, the discussion draft of the bill endorsed by four U.S. senators supports the demands that the strikes have been fighting for; …Known as the No Fakes Act (or Nurture Originals, Foster Art, and Keep Entertainment Safe Act), the bill …aims to bring in federal protections “against the misappropriation of voice and likeness performance in sound recordings and audiovisual works. It also prohibits the unauthorized use of digital replicas without the informed consent of the individuals being replicated.”

AI Opinions and Articles

A CCS Insight analysis predicts ‘Overhyped’ generative AI will get a ‘cold shower’ in 2024:

The main forecast CCS Insight has for 2024 is that generative AI “gets a cold shower in 2024” as the reality of the cost, risk and complexity involved “replaces the hype” surrounding the technology.

“… But the hype around generative AI in 2023 has just been so immense, that we think it’s over-hyped, and there’s lots of obstacles that need to get through to bring it to market.”

While AI is certainly being hyped, AI’s real impact suggests it cannot be over-hyped. In 2023, we have seen a dramatic drop in the price of AI inference and the profusion of AI models, tools, applications, and new research. AI is more accessible, and the next few years will see continued waves of adoption and adaptation to AI in many use cases.

A Look Back …

The story “Going all-in with Nvidia: How Jensen Huang’s high-stakes bets paid off” reviews Nvidia’s history, a series of bets that in the end paid off handsomely:

“Nvidia has gotten to where they are by at least two, maybe three, times completely going all in and betting the company on a new idea that could have killed the company, but they were right every single time,” said Gilbert.

No bet has been bigger than Nvidia’s investments in CUDA since 2006 and their bet on AI, going back to 2012, when AlexNet used Nvidia GPUs to train the image classification model that kicked off the deep learning revolution. That was only possible with CUDA, and we wouldn’t have gotten to this era of AI without it.

Jensen Huang’s “Go all in” approach to AI has paid off for Nvidia, and for us. As he expressed to Oregon State University students in 2013 …

“Go all-in. Don't play to come back. Don't play as if there's another hand. If there's another hand, let that be a surprise, and we'll figure out what to do next. … What is the definition of success? … Are you doing work that is relevant? Are you doing work that is exquisite? Or are you doing work that results in a great stock price? I have not figured out how to do all three at one time. However, in the long term, it all seems to work out.” - Nvidia CEO Jensen Huang