AI Week In Review 23.10.21

Baidu's ERNIE 4.0, Amazon's Digit, Mojo on Mac, Jasper Co-Pilot, Self-RAG, MemGPT, and Andreessen's Techno-Optimist Manifesto

AI Tech and Product Releases

At its Baidu World 2023 conference, Baidu launched its latest foundation AI model, ERNIE 4.0, with significantly enhanced performance in understanding, generation, reasoning, and memory. Baidu says this multimodal model rivals GPT-4, but the claim is hard to evaluate because ERNIE 4.0 is currently available only in an invitation-only beta. Baidu demonstrated ERNIE Bot generating content, showing it understanding complex human requests:

Within a few minutes, ERNIE Bot rapidly generated a set of advertising posters, five advertising copy lines, and a marketing video.

In addition to ERNIE 4.0 and ERNIE Bot, Baidu announced a number of AI applications and features across its product suite. Like Google and Microsoft, Baidu is infusing AI throughout its product line, including Baidu Search, Maps, and document sharing, and it also announced new intelligent devices.

Anthropic's Claude is now available in 95 countries, but apparently not in Canada.

Tape It, which makes software for musicians, aims to deliver studio-quality noise reduction via AI. “The AI denoiser shipped this week as a free web app, with a plan to license the technology to vendors in the future. It will also later be integrated into the company’s flagship Tape It app.”

Amazon announced two new robot systems, Sequoia and Digit, to assist employees and deliver for customers. Sequoia integrates multiple robot systems to improve warehouse efficiency, reducing fulfillment processing time by 25%. Digit is a bipedal robot built by Amazon partner Agility that can walk and move in workspaces designed for humans, and can grasp and handle items. “We believe that there is a big opportunity to scale a mobile manipulator solution, such as Digit.”

Amazon now has 750,000 robots working for them, which likely makes them the biggest user of robotics systems in the world.

Mojo🔥 is now available on Mac, after being available on Linux since early September. Mojo is a high-performance, Python-derived programming language designed for AI use cases; it bills itself as “a high performance 'Python++' language for compute.” This release is a big deal because Mojo has shown performance on par with C and C++ implementations in some benchmarks, in a language accessible to Python developers. As Aydyn Tairov puts it: “It'll disrupt not just AI/ML development industry, but much more.”

Inflection AI announced an update to its Pi AI model that lets it access the web, so its answers can be more current and informed.

Teknium, a fine-tuner of open AI models, released Open Hermes 2, based on Mistral 7B; it beats much larger prior models on a number of tasks. “Hermes 2 changes the game with strong multiturn chat skills, system prompt capabilities, and uses ChatML format.” This model has already been quantized and converted for local inference.
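The Hermes 2 quote mentions ChatML, a prompt format that wraps each chat turn, labeled with a role name, between special `<|im_start|>` and `<|im_end|>` tokens. Below is a minimal sketch of rendering a conversation in that convention, assuming plain newline separators as commonly shown for ChatML models. The helper name `to_chatml` is hypothetical, and the exact template a given model expects should be checked against its model card.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role": ..., "content": ...} dicts as a ChatML prompt string.

    Hypothetical helper: a sketch of the common ChatML layout, not an official API.
    """
    parts = []
    for msg in messages:
        # Each turn: <|im_start|>{role}\n{content}<|im_end|>\n
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues generating from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are Hermes 2, a helpful assistant."},
    {"role": "user", "content": "Summarize this week's AI news."},
])
print(prompt)
```

In practice, libraries such as Hugging Face `transformers` can render a model's own template via `tokenizer.apply_chat_template`, which avoids subtle mismatches from hand-rolled formatting.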

Jasper launches new marketing AI copilot: ‘No one should have to work alone again.’

The features Jasper announced today include new performance analytics to optimize content, a company intelligence hub to align messaging with brand strategy, and campaign tools to accelerate review cycles. The features will start rolling out in beta in November, with additional capabilities planned for Q1 2024.

AI Research News

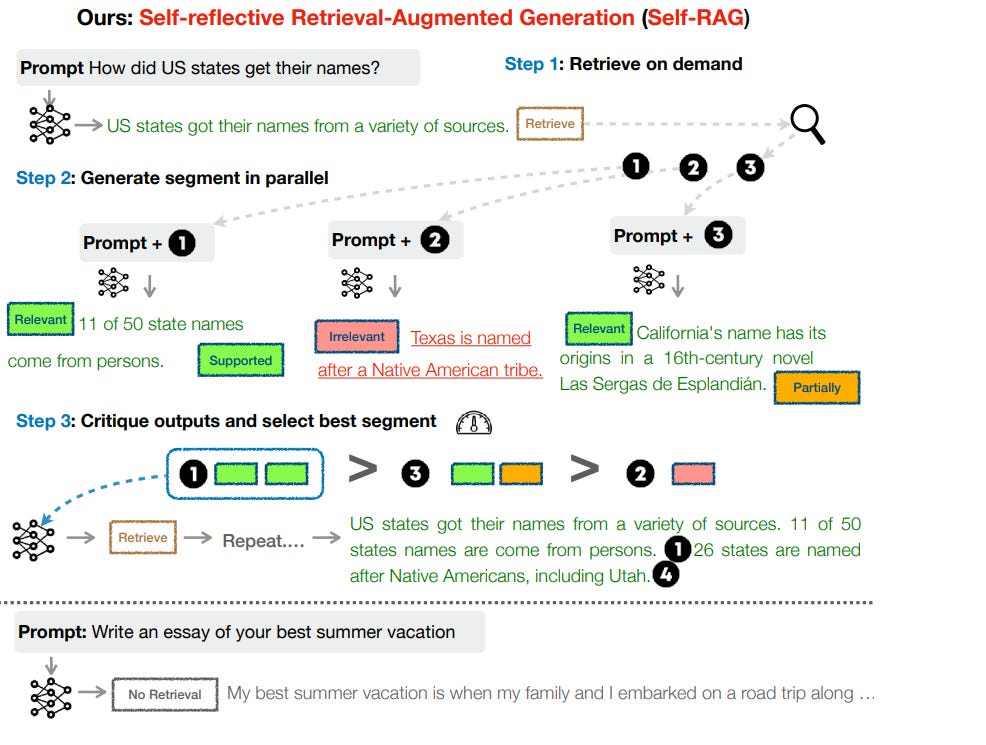

Retrieval-Augmented Generation (RAG) can improve the factuality of LLM responses by adding relevant retrieved knowledge to the LLM's context window, but irrelevant or missing knowledge diminishes RAG’s utility. Limited LLM context windows, and how best to use them, also remain a challenge. Two new papers improve the RAG process by using the language model itself to control retrieval.

The first is “Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection.” The authors train an LLM that decides during inference whether it needs to retrieve information, signaling its decisions by generating special tokens called reflection tokens. “Generating reflection tokens makes the LM controllable during the inference phase, enabling it to tailor its behavior to diverse task requirements.” Using these tokens, the LM adaptively retrieves passages or critiques sources during inference, making retrieval on-demand.

They released 7B- and 13B-parameter Self-RAG models as open AI models, and report that Self-RAG outperforms ChatGPT and retrieval-augmented Llama2-chat on open-domain QA, with significant gains in factuality and citation accuracy.

Another approach to managing knowledge in LLMs is “MemGPT: Towards LLMs as Operating Systems.” To overcome the limits of LLM context window size, the authors borrow the hierarchical memory management concepts used in operating systems. Specifically, MemGPT has the LLM itself manage memory, using function calls to move information between an external vector database and the LLM's limited context window as needed. They call this “virtual context management.” As a result:

MemGPT is able to analyze large documents that far exceed the underlying LLM's context window, and … MemGPT can create conversational agents that remember, reflect, and evolve dynamically through long-term interactions with their users.

MemGPT's experiment code and data were released on GitHub and Hugging Face.
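The "virtual context management" idea can be illustrated with a toy two-tier memory in Python. This is a sketch under loud assumptions, not MemGPT's implementation or API: word counts stand in for tokens, a plain list stands in for the external vector database, and word overlap stands in for embedding search. The class and method names are hypothetical. In MemGPT the LLM itself decides when to page data in or out via function calls; here those calls are made directly.

```python
from collections import deque


class VirtualContext:
    """Toy two-tier memory: a small 'main context' (what the LLM would see)
    plus unbounded 'archival storage' (standing in for a vector database)."""

    def __init__(self, budget_words: int = 15):
        self.budget = budget_words
        self.main: deque[str] = deque()  # in-context messages, oldest first
        self.archive: list[str] = []     # messages paged out of the context

    def _size(self) -> int:
        # Word count as a crude stand-in for token count.
        return sum(len(m.split()) for m in self.main)

    def append(self, message: str) -> None:
        """Add a message, paging the oldest messages out when over budget."""
        self.main.append(message)
        while self._size() > self.budget and len(self.main) > 1:
            self.archive.append(self.main.popleft())

    def search_archive(self, query: str, k: int = 1) -> list[str]:
        """Toy 'vector search': rank archived messages by word overlap."""
        q = set(query.lower().split())
        ranked = sorted(self.archive,
                        key=lambda m: len(q & set(m.lower().split())),
                        reverse=True)
        return [m for m in ranked[:k] if q & set(m.lower().split())]

    def page_in(self, query: str) -> list[str]:
        """Copy relevant archived messages back into the main context.
        (In MemGPT, the LLM would trigger this with a function call.)"""
        hits = self.search_archive(query)
        for hit in hits:
            self.append(f"[recalled] {hit}")
        return hits


ctx = VirtualContext(budget_words=15)
ctx.append("User: my dog is named Biscuit")
ctx.append("User: I live in Toronto")
ctx.append("User: what should I cook tonight?")  # over budget: oldest is paged out
hits = ctx.page_in("what is my dog named")
print(hits)
print(list(ctx.main))
```

Note that paging in may itself push older messages out to the archive, which mirrors how an operating system swaps pages between fast and slow memory.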

On the AI chip development front, IBM has made a new, highly efficient AI processor. In their paper “Neural inference at the frontier of energy, space, and time,” published in Science, they claim large gains, up to 25 times, in energy efficiency for their NorthPole chip design versus comparable GPU designs.

Meta AI has developed an AI system that can generate images from brain scan data. Researchers at Meta AI used magnetoencephalography (MEG) to decode visual representations in the brain, then paired this decoding with an image generation AI to output the related image “with millisecond precision.”

Researchers unveil ‘3D-GPT’, an AI that can generate 3D worlds from simple text commands. 3D-GPT combines AI models in an AI agent approach to automate the workflow from text prompt to final 3D rendering.

AI Business and Policy

The Biden administration imposed new semiconductor export controls affecting AI chip makers. Nvidia’s H800 in particular is now banned for export to China. Nvidia has said it does not expect the restrictions to meaningfully impact its financial results.

The Semiconductor Industry Association responded that the U.S. may be going too far: “Overly broad, unilateral controls risk harming the U.S. semiconductor ecosystem without advancing national security as they encourage overseas customers to look elsewhere.” While China cannot fully compete with best-in-class GPUs, China’s Huawei is developing home-grown chips, and further restrictions could boost those efforts. However, the Biden administration is also restricting exports of advanced semiconductor manufacturing equipment to China.

The Information reports “OpenAI Dropped Work on New ‘Arrakis’ AI Model in Rare Setback.” Arrakis was intended to run the chatbot less expensively, but it failed to meet OpenAI’s expectations. In unrelated news, investment firm Thrive Capital is leading a deal to buy OpenAI shares from employees that values OpenAI at $80 billion.

In AI startup investment news, SoftBank is leading a $75M to $100M investment in 1X Technologies. Also, China-based AI startup Zhipu AI announced it has raised $342 million from Chinese tech backers including Alibaba and Tencent. Zhipu released the bilingual (Chinese and English) open AI model ChatGLM-6B.

A study in Nature Machine Intelligence shows AI tidies up Wikipedia’s references — and boosts reliability. Researchers built a neural network that “analyses whether Wikipedia references support the claims they’re associated with, and suggests better alternatives for those that don’t.” This indicates the best tool to fight AI-based disinformation may be AI itself.

Stanford HAI has developed the Foundation Model Transparency Index, an assessment of the openness and transparency of foundation AI models. “Maybe We Will Finally Learn More About How A.I. Works,” says the N.Y. Times. Of the foundation models assessed in 2023, Meta’s Llama 2 leads, while Amazon’s Titan lags.

AI Opinions and Articles

Marc Andreessen has released The Techno-Optimist Manifesto, an essay that plants a bold stake in the ground defending technology, markets, growth, and accelerating returns, and celebrating the abundance technology brings, with the optimistic view that “the techno-capital machine of markets and innovation never ends, but instead spirals continuously upward.”

His techno-optimist manifesto marries the free-market beliefs of Hayek with the technological futurism of Kurzweil to paint a picture of a continued spiral of progress based on technology advances. With respect to AI and technology, he sees intelligence as “the ultimate engine of progress” and AI as “our alchemy, our Philosopher’s Stone”:

We believe Artificial Intelligence is best thought of as a universal problem solver. And we have a lot of problems to solve.

We believe Artificial Intelligence can save lives – if we let it.

We believe we should place intelligence and energy in a positive feedback loop, and drive them both to infinity.

His bold assessment of the civilizational landscape has drawn attention and been met with criticism and skepticism. But he is largely correct: The arc of technology bends towards progress.

A Look Back …

Some are calling AI the Fourth Industrial Revolution. It then behooves us to consider AI’s impact in relation to the prior industrial revolutions. The quote below appeared in the preface to Marc Andreessen’s techno-optimist essay, and it is a reminder of how far we have come.

Our species is 300,000 years old. For the first 290,000 years, we were foragers, subsisting in a way that’s still observable among the Bushmen of the Kalahari and the Sentinelese of the Andaman Islands. Even after Homo Sapiens embraced agriculture, progress was painfully slow. A person born in Sumer in 4,000BC would find the resources, work, and technology available in England at the time of the Norman Conquest or in the Aztec Empire at the time of Columbus quite familiar. Then, beginning in the 18th Century, many people’s standard of living skyrocketed. What brought about this dramatic improvement, and why?

- Marian Tupy