AI Week In Review 23.11.18

OpenAI CEO Altman out (and back in?); Microsoft CoPilots everything; Meta's Emu Video; Runway's Gen2 Motion Brush

The news broke yesterday that Sam Altman was ousted as OpenAI CEO. This has overshadowed our weekly news, and it requires its own article, which we posted hours ago:

Sam Altman ousted as OpenAI CEO

Breaking News - Sam Altman ousted as OpenAI CEO: “I loved my time at openai. it was transformative for me personally, and hopefully the world a little bit. most of all i loved working with such talented people. will have more to say about what’s next later.”

As of Saturday evening 11/18, there are stories of a possible return to OpenAI for Sam Altman. The saga continues, but we will focus on other stories in the AI world for this weekly.

AI Tech and Product Releases

Google is opening up Bard for teens, with extra guardrails for teen users. Included in the changelog for the Google Bard update:

Bard's extra guardrails recognize topics that are inappropriate for teens.

It can teach mathematics with step by step explanations.

It can create data visualizations such as charts.

The biggest release announcements came out of Microsoft Ignite, where Microsoft presents its Azure cloud news. Most generative AI applications and features from Microsoft have been given the Copilot brand:

Bing Chat is rebranded ‘Copilot’ and gets its own website, copilot.microsoft.com, which works on all browsers.

Copilot in Teams: Microsoft is overhauling Teams to allow avatars and to have Copilot help take notes, capture content, produce an “intelligent recap” for meeting notes, etc.

Copilot for Azure: “Microsoft Copilot for Azure is integrated into the Azure platform, right into the Azure portal where IT teams work.” This is an IT operations helper.

Copilot for Service is designed for customer service use cases such as answering sales questions; it integrates with customer relationship management (CRM) software.

Copilot Studio: This is a general capability to build custom AI assistants, a “low-code tool to customize Copilot for Microsoft 365 and build standalone copilots.” You can import data, integrate with your tools, and point it to your website. This is like a Microsoft version of custom GPTs.

Azure OpenAI Service: “Best selection of frontier AI models” including OpenAI models: GPT-4 Turbo with Vision, DALL-E 3, and fine-tuning. Azure is also offering the small but very capable Phi-2, a 2.7B model with common sense, language understanding, and logical reasoning, which will be open source.

Microsoft also announced two custom-designed, in-house chips: Maia 100 AI Accelerator - a chip dedicated to LLM training and inference; and the Azure Cobalt 100 CPU. These will be used in their data centers.

At the same time, a year after Nvidia and Microsoft announced extensive use of Nvidia GPUs to build a massive cloud AI computer, Microsoft boasted that its 561-petaflop “Eagle” supercomputer is the third most powerful supercomputer in the world.

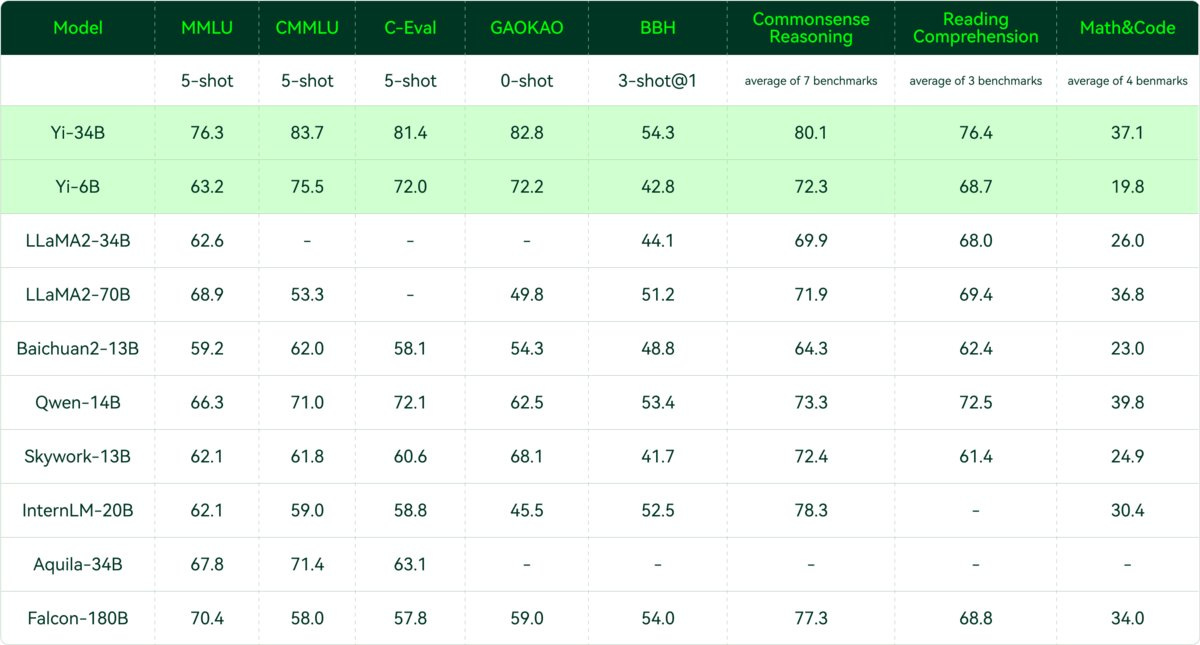

The Chinese AI company 01.AI released an updated open source AI model, Yi 34B, with a 200K context window. It is a new best-in-class open source model, outperforming much larger models like LLaMA2-70B and Falcon-180B. The Yi model has also been opened up for commercial use. There are fine-tunes of this model, such as Capybara, that are performing very well.

YouTube previews AI tool that clones famous singers, with their permission. YouTube’s AI features let users generate music tracks from a hummed tune or a text prompt. The features draw on nine artists who have collaborated with YouTube on their development.

There are reports that Google is delaying the launch of its AI model Gemini. We had been expecting Gemini to launch by year-end, but sources told The Information that it may be delayed into Q1.

Runway’s Motion Brush is a new feature of Gen2, and it’s blowing some minds. Brush over a part of the image or video, and that part gets moved or animated. This is best shown in a video itself, so check out this tutorial on X or this example or this:

Top Tools & Hacks

Draw a UI takes advantage of GPT-4 vision and code generation AI to go from wireframing to website in one step:

“This works by just taking the current canvas SVG, converting it to a PNG, and sending that png to gpt-4-vision with instructions to return a single html file with tailwind.”
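The flow in that quote can be sketched in Python. This is a minimal sketch, not the project's actual code: it assumes the canvas has already been rendered to PNG bytes, and the function name `build_vision_request`, the prompt wording, and the `max_tokens` value are hypothetical. The message layout follows the OpenAI Chat Completions format for image input, and the model name `gpt-4-vision-preview` is an assumption about which vision model is targeted.

```python
import base64
import json

# Hypothetical instruction text; the project's real prompt is not shown in the quote.
PROMPT = (
    "Turn this wireframe into a working page. "
    "Return a single HTML file that uses Tailwind CSS."
)


def build_vision_request(png_bytes: bytes, model: str = "gpt-4-vision-preview") -> dict:
    """Build a chat-completions style payload that carries the PNG inline."""
    # Encode the image as a base64 data URL so it travels inside the JSON body.
    encoded = base64.b64encode(png_bytes).decode("ascii")
    data_url = f"data:image/png;base64,{encoded}"
    return {
        "model": model,
        "max_tokens": 4096,  # assumed limit, adjust as needed
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": PROMPT},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Stand-in for a rendered canvas: only the 8-byte PNG signature, not a real image.
fake_png = b"\x89PNG\r\n\x1a\n"
payload = build_vision_request(fake_png)
print(json.dumps(payload, indent=2)[:200])
```

Rendering the SVG to PNG, sending the payload with an API key, and saving the returned HTML are left out of this sketch.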

AI image generation keeps getting better and faster. The fastest generation can be done by LCMs, or latent consistency models. These are now fast enough to be ‘real time’: as you draw, the image updates. Videos of real-time LCM inference are great, but it is much better to experience it yourself, which you can do here: fal.ai.

Here's a quick test of a real-time LCM tool called Krea: “It's like the Real-time latent consistency model tests I've done with my photoshop and Dreams creations, but instead in a single application with faster refresh rates and higher quality output.”

Riffusion launched a new AI music generation tool that generates a song from singing or talking, billed as “more natural than a text prompt.”

Gobble Bot is a project from Rafal_makes to help GPT builders: “Get all your content into one huge text file, ready to upload into your new GPT overcome file count limits and speed up GPT creation process.”

AI Research News

Meta FAIR presents two new advances in its generative AI research: Emu Video & Emu Edit. They leverage the Emu image generation model to produce “Emu Video: A simple factorized method for high-quality video generation.” This method for text-to-video generation is based on diffusion models. It is a unified architecture for video generation tasks that can respond to a variety of inputs: text only, image only, and both text and image.

Emu Edit is a multi-task image editing model which sets a new state-of-the-art in instruction-based image editing. In particular, it improves on instruction-following:

“Unlike many generative AI models today, Emu Edit precisely follows instructions, ensuring that pixels in the input image unrelated to the instructions remain untouched. For instance, when adding the text “Aloha!” to a baseball cap, the cap itself should remain unchanged.”

Fine-tuned larger language models and longer context lengths eliminate the need for retrieval from external knowledge databases, right? Not quite. Retrieval meets Long Context Large Language Models looks at retrieval-augmentation, extending context windows, and combining them:

We demonstrate that retrieval can significantly improve the performance of LLMs regardless of their extended context window sizes. Our best model, retrieval-augmented LLaMA2-70B with 32K context window, outperforms GPT-3.5-turbo-16k and Davinci003 in terms of average score on seven long context tasks including question answering and query-based summarization.

The bottom line here is that the most capable AI model applications will end up having some kind of retrieval-augmented component to them, in order to be more effective and efficient.
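To make the idea concrete, here is a toy sketch of retrieval augmentation in Python. It is not the paper's method: the scoring is plain word overlap rather than a learned retriever, and the names `retrieve` and `build_prompt` and the sample passages are hypothetical. It only shows the shape of the technique: pick the most relevant passages, then place them in the model's context ahead of the question.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Place the retrieved passages ahead of the question in one prompt string."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Sample passages (hypothetical knowledge base for illustration).
docs = [
    "The H200 is an AI chip announced by Nvidia.",
    "Emu Edit is an image editing model from Meta.",
    "Kyutai is an AI research lab based in Paris.",
]
print(build_prompt("Who announced the H200 chip?", docs, k=1))
```

A real system would swap the word-overlap score for an embedding-based or keyword search index, but the prompt-assembly step stays much the same however long the model's context window is.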

AI Business and Policy

French billionaire and telecom CEO Xavier Niel is building an AI research lab in Paris, and he shared details on his plans at the AI-Pulse conference. Kyutai is a French AI research lab with a $330 million budget that will make everything open source. They may be different and important because they will go beyond open source to open science:

Kyutai’s models will be open source too, but the researchers describe their work as open science. They plan to release open source models, but also the training source code and data that explain how they released these models.

At its Supercomputing 2023 event, Nvidia announced its new H200 AI chip to fuel ‘acceleration’ of generative AI. Nvidia says: “The introduction of H200 will lead to further performance leaps, including nearly doubling inference speed on Llama 2, a 70 billion-parameter LLM, compared to the H100.”

A Stability executive quit after saying that generative AI ‘exploits creators.’ Ed Newton-Rex resigned from leading the Audio team at Stability AI, and expressed on X that “I don’t agree with the company’s opinion that training generative AI models on copyrighted works is ‘fair use’.”

“Companies worth billions of dollars are, without permission, training generative AI models on creators’ works, which are then being used to create new content that in many cases can compete with the original works. I don’t see how this can be acceptable in a society that has set up the economics of the creative arts such that creators rely on copyright.” - Ed Newton-Rex, Stability AI

Meta disbanded its Responsible AI team:

Meta has reportedly broken up its Responsible AI (RAI) team as it puts more of its resources into generative artificial intelligence. The Information broke the news today, citing an internal post it had seen.

AI Opinions and Articles

The AI copyright debate continues: ‘Please regulate AI:’ Artists push for U.S. copyright reforms but tech industry says not so fast.

A Look Back …

The firing of Sam Altman by the OpenAI board bears some uncanny resemblances to the firing of Steve Jobs from Apple in 1985.

What happened to Steve Jobs and why did the board fire him? Jobs recruited John Sculley to be the Apple CEO, but the founder Jobs couldn’t deal with being under the control of the CEO he had hired, so clashes developed:

Jobs clashed with Sculley after two new products — the Lisa and the Macintosh — failed to live up to sales expectations.

As a result, Jobs was moved away from the Macintosh product and was furious about the change, taking his case straight to Apple’s board of directors.

This really ruffled the feathers of the people on the board.

It was both a power struggle and a question of strategic vision, a dynamic at play today with OpenAI.

The board chair, who wasn't at the meeting that sacked Altman, and who was removed as chair but kept on as president of the company, has quit.

Interesting times when you lose two cofounders in as many days.

There's speculation that Altman has been working on some other new development of his own, and kept this information secret. Thus the firing. Basic conflict of interest, and certainly would mean he wasn't open and forthright with the board.

We'll see.