AI Week In Review 23.11.25

Inflection-2, Claude 2.1, Stable Video Diffusion, ElevenLabs STS, Orca 2 ... and OpenAI drama

TL;DR - OpenAI’s boardroom drama dominated headlines, with Sam Altman fired by the OpenAI board, then possibly joining Microsoft, then re-hired while the OpenAI board itself was fired and replaced. But behind that drama was another big week of AI releases, research and progress. …

AI Tech and Product Releases

Inflection AI announced Inflection-2. They claim Inflection-2 is the second-most capable AI model in the world, behind only GPT-4 (its MMLU score is 79), and that it “is substantially more capable than Inflection-1, demonstrating much improved factual knowledge, better stylistic control, and dramatically improved reasoning.”

Meanwhile, others aren’t standing still. Anthropic released Claude 2.1 with key improvements: “an industry-leading 200K token context window, significant reductions in rates of model hallucination, system prompts and our new beta feature: tool use.” Claude 2.1 improved its comprehension and summarization over Claude 2, particularly for long, complex documents.

OpenAI voice input-output is now free for all ChatGPT users, not just paid subscribers.

Stability AI released a text-to-video AI model called Stable Video Diffusion. This is their first generative AI video model, built on their Stable Diffusion image model and similar to Runway’s Gen2. It is an open source release, sharing code, weights, and research paper (see below). It can generate rotating objects, which can be converted into NeRF 3D objects. This looks like a big and foundational step forward.

Bard update: Bard can watch YouTube videos for you, then break them down, summarize and analyze them. It’s an opt-in beta experience, and another sign that Google keeps incrementally improving Bard.

ElevenLabs introduces speech to speech, a feature that takes the content and style of speech contained in an uploaded recording and changes the voice to a selected one. This helps control the inflection and intonation in speech output better.

LumaLabs AI announced some updates to Genie, their text-to-3D generation AI model: negative prompting, improved stability and quality, and availability on other Discord servers. It’s available in beta.

To show off its Habana Labs Gaudi AI chips, Intel dropped a very good 7B AI model that is topping Hugging Face’s leaderboard. They took Mistral 7B and fine-tuned it on SlimOrca, running the training on Gaudi accelerator hardware.

Top Tools & Hacks

You can try out the aforementioned Stable Video Diffusion for free on decoherence.co and on Hugging Face. Given that it’s an open source AI model, we may see the same profusion of SVD variants as we have with image diffusion models.

AI Research News

The release of Stable Video Diffusion was accompanied by a paper, “Stable Video Diffusion: Scaling Latent Video Diffusion Models to Large Datasets.” The model is a latent video diffusion model (video LDM): it extends a latent image diffusion model to short video sequences by inserting temporal layers:

In this paper, we identify and evaluate three different stages for successful training of video LDMs: text-to-image pretraining, video pretraining, and high-quality video finetuning. … our base 1 model provides a powerful motion representation for downstream tasks such as image-to-video generation and adaptability to camera motion-specific LoRA modules. Finally, we demonstrate that our model provides a strong multi-view 3D-prior and can serve as a base to finetune a multi-view diffusion model that jointly generates multiple views of objects in a feedforward fashion, outperforming image-based methods at a fraction of their compute budget.
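To illustrate what “inserting temporal layers” means, here is a toy sketch in plain Python. It is not the paper’s architecture: the function names (`spatial_layer`, `temporal_layer`, `video_block`) and the operations inside them are hypothetical stand-ins, chosen only to show that an image layer looks at one frame at a time while an inserted temporal layer mixes information across frames.

```python
# Toy illustration only: a "video" is a list of frames, each frame a list of pixel values.
# These functions are hypothetical stand-ins, not layers from the SVD paper.

def spatial_layer(frame):
    """Image-model layer: uses information from a single frame only."""
    mean = sum(frame) / len(frame)
    return [p - mean for p in frame]

def temporal_layer(video):
    """Inserted layer: mixes each pixel position with its neighbours in time."""
    mixed = []
    for t in range(len(video)):
        lo, hi = max(0, t - 1), min(len(video), t + 2)
        window = video[lo:hi]  # previous, current and next frame (where they exist)
        mixed.append([sum(f[i] for f in window) / len(window)
                      for i in range(len(video[t]))])
    return mixed

def video_block(video):
    """Apply the image layer frame by frame, then the temporal layer across frames."""
    per_frame = [spatial_layer(frame) for frame in video]
    return temporal_layer(per_frame)

video = [[0.0, 2.0], [2.0, 6.0], [4.0, 4.0]]  # 3 frames, 2 "pixels" each
out = video_block(video)
```

The design point is that the per-frame layers can start from a pretrained image model, while only the temporal mixing has to learn motion from video data.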

Microsoft Research has followed up on its groundbreaking Orca model with “Orca 2: Teaching Small Language Models How to Reason,” showing how improved training signals can increase the reasoning abilities of smaller LLMs. The Orca 2 13B model is extremely good at reasoning for its size, with ChatGPT-like reasoning capabilities and beating larger models such as Llama2-chat-70B. How they did it:

In Orca 2, we teach the model various reasoning techniques (step-by-step, recall then generate, recall-reason-generate, direct answer, etc.). More crucially, we aim to help the model learn to determine the most effective solution strategy for each task.

The model is open-source, so this method can be replicated to create other fine-tuned LLMs, and the paper is published on arXiv.

“The Chosen One: Consistent Characters in Text-to-Image Diffusion Models” presents an automated method for AI image generation of consistent characters across multiple images. This will be extremely useful for many applications of AI image generation, especially in illustrating stories, story-boarding, etc.

Nvidia researchers present “Tied-LoRA: Enhancing parameter efficiency of LoRA with weight tying.” Experimenting with combinations of parameter training and freezing in LoRA, they discovered a Tied-LoRA configuration that maintains performance comparable to standard LoRA while using only about 13% of its parameters.
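To get a feel for why weight tying saves parameters, here is a rough counting sketch. It assumes, beyond what is stated above, that tying means one pair of low-rank matrices is shared by all adapted layers, with small per-layer scaling vectors added on top. The layer count, width and rank are hypothetical, and the result is not meant to reproduce the paper’s 13% figure.

```python
# Rough trainable-parameter counts; all sizes below are hypothetical.

def lora_params(num_layers, d_model, rank):
    """Standard LoRA: every layer has its own A (d x r) and B (r x d)."""
    return num_layers * 2 * d_model * rank

def tied_lora_params(num_layers, d_model, rank):
    """Assumed tied variant: one shared A and B, plus per-layer scaling vectors."""
    shared = 2 * d_model * rank
    per_layer_scaling = num_layers * (rank + d_model)
    return shared + per_layer_scaling

layers, d, r = 32, 4096, 8
standard = lora_params(layers, d, r)
tied = tied_lora_params(layers, d, r)
ratio = tied / standard
print(f"standard LoRA: {standard:,}  tied: {tied:,}  ratio: {ratio:.1%}")
```

With these made-up sizes the tied variant trains under a tenth of the parameters; the actual saving depends on the rank and on which components are trained or frozen.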

The paper “ML-Bench: Large Language Models Leverage Open-source Libraries for Machine Learning Tasks” addresses gaps in current AI code generation benchmarks by evaluating the ability of code generation LLMs to produce executable code that follows specific user instructions and uses given open source libraries. ML-BENCH consists of 10,044 samples spanning 130 tasks over 14 notable machine learning GitHub repositories. GPT-4 scores best on ML-BENCH but completes only 39.7% of the tasks, leaving room for improvement. The authors also build ML-Agent on GPT-4, designed to better navigate and retrieve code for code generation, and show improvements using it.

AI Business and Policy

Nvidia reported another blowout quarter. Revenue of $18.02 billion is up 206% from a year ago and up 34% from the previous quarter.
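As a quick sanity check on what those growth rates imply, the sketch below backs out the approximate year-ago and prior-quarter revenue from the figures above. The variable names are illustrative, and because the percentages are rounded, the results are only approximate.

```python
# Back out the implied earlier revenue from the reported growth rates.
revenue_b = 18.02   # reported quarterly revenue, in billions of dollars
yoy_growth = 2.06   # "up 206%" year over year
qoq_growth = 0.34   # "up 34%" quarter over quarter

year_ago_b = revenue_b / (1 + yoy_growth)
prev_quarter_b = revenue_b / (1 + qoq_growth)

print(f"implied year-ago revenue:      ~${year_ago_b:.2f}B")
print(f"implied prior-quarter revenue: ~${prev_quarter_b:.2f}B")
```

Note that “up 206%” means revenue is roughly three times the year-ago figure, not twice.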

France, Germany and Italy push for ‘mandatory self-regulation’ for foundational AI models. The three biggest EU countries want to pull back from prescriptive regulations in the EU’s AI law, and are instead pushing for codes of conduct without any specific obligations for foundation models. This is being pushed by technology developers in the EU who don’t want to get strangled by regulation.

The Wild West of robotaxis: Will Texas be a regulatory haven or chaos unleashed? This is another case where differences in AI regulation may shift where innovation happens. “Texas not only lacks robust AV regulation, but state law expressly prohibits cities from regulating the technology that will be tested and deployed on its streets.” Self-driving taxis may hit the streets of Austin instead of San Francisco.

Meet the AI model who earns up to $11,000 a month. Aitana López is an AI-generated creation by a Spanish agency that grew tired of booking real models, with their issues and ‘egos’. She has 125,000 followers on Instagram and is on Fanvue (similar to OnlyFans). Another AI model mentioned is “Emily Pellegrini, an AI influencer, who has just over 100,000 followers on Instagram and is also present on Fanvue.”

AI Opinions and Articles

Pentagon AI chief Craig Martell speaks on network-centric warfare and generative AI challenges. He sees his mission as developing tools, processes, infrastructure and policies for the DoD, with AI at the peak of a hierarchy of needs:

We are finally getting at network-centric warfare -- how to get the right data to the right place at the right time. There is a hierarchy of needs: quality data at the bottom, analytics and metrics in the middle, AI at the top. For this to work, most important is high-quality data.

In an alternate timeline, things at OpenAI could have ended up quite differently this past week: OpenAI in meltdown, Microsoft buying the whole team. The OpenAI board also spoke with Anthropic about a possible merger, another outcome that didn’t pan out:

After Amodei's rejection, the board turned to other executives, including Nat Friedman, CEO of GitHub, and Alex Wang, CEO of Scale AI, to fill the position of interim CEO, before finally settling on former Twitch CEO Emmett Shear.

One mid-drama takeaway was this contrary opinion: “Microsoft was not a winner of the events of the last few days around OpenAI.” The argument was that Microsoft was in a much better place on Friday morning last week: it had invested ~$11B in OpenAI and captured most of its upside while still keeping an insulating distance.

What this means is that the best outcome for Microsoft was a return to an OpenAI with Altman at the helm, and that is what ended up happening. Having backed the OpenAI leaders who are now back in charge, Microsoft has a stronger relationship with them as well. Microsoft won, ending up with a stronger OpenAI and a stronger hand in it.

A Look Back …

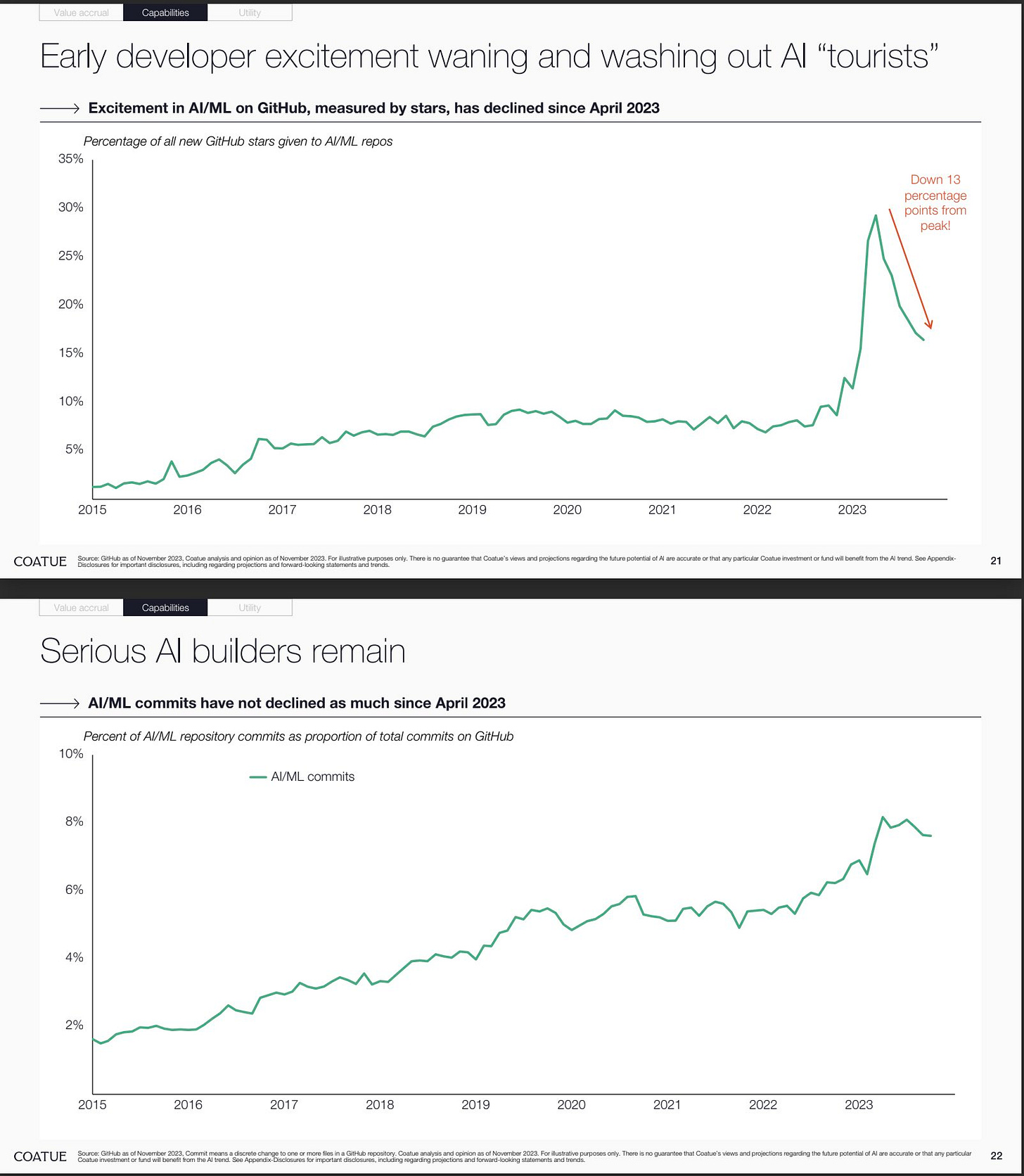

How much of the AI hype is a hype cycle and how much is enduring? One data point suggests there was a hype peak in “AI tourists” in the Spring of 2023, but the development pace is continuing on. Charts are from eyc on X: