AI Week In Review 24.01.06

CoPilot key on Windows 11, GPT Store opening soon, TinyLlama, CrewAI, Bagel-34B, VGen from Alibaba, composing models with CALM, and AI in the Judiciary

AI Tech and Product Releases

Coming to your keyboard soon: Microsoft wants to add a CoPilot key to PC keyboards. The company announced the key for Windows 11 PCs, to give users the quickest possible access to CoPilot on their PC.

Google Gemini Ultra will soon be released as Bard Advanced, a paid tier offered through Google One. This comes from Dylan Roussel on X, who also mentions other features coming to Google’s Bard, including tasks, sharing background/foreground, and other AI experience features:

Motoko: Google will allow you to "Create Bots."

Gallery: "Explore different topics with Bard," which will display example prompts about various topics.

Power up: Improve your prompts by using an AI to "expand" them.

OpenAI will be launching their GPT Store next week. Users will be able to sell custom GPTs and share in the revenue. As Rowan Cheung said on X, “Prepare for a new wave of businesses and AI builders.”

Newly released open-source 1.1B LLM TinyLlama was trained on 3 trillion tokens (3 epochs of 1T tokens), on just 16 A100-40G GPUs over a span of 90 days. It was built with the same architecture as Llama. While not as high-quality as the high-performing Phi-2 model, it nevertheless shows how small, efficient AI models can be built for lower cost than ever.
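To put those training numbers in perspective, here is a quick back-of-the-envelope calculation of the throughput they imply. It uses only the figures quoted above and assumes, as a simplification, that all 16 GPUs ran continuously for the full 90 days; the variable names are ours.

```python
# Figures quoted in the text above.
TOTAL_TOKENS = 3 * 10**12   # 3 trillion training tokens (3 epochs of 1T)
NUM_GPUS = 16               # A100-40G GPUs
TRAINING_DAYS = 90

SECONDS_PER_DAY = 24 * 60 * 60

# Assumption: every GPU is busy for the whole 90 days.
gpu_seconds = NUM_GPUS * TRAINING_DAYS * SECONDS_PER_DAY
gpu_hours = NUM_GPUS * TRAINING_DAYS * 24

# Implied average throughput per GPU.
tokens_per_gpu_second = TOTAL_TOKENS / gpu_seconds

print(f"GPU-hours: {gpu_hours:,}")
print(f"Tokens per GPU per second: {tokens_per_gpu_second:,.0f}")
```

Under that assumption the run works out to roughly 24,000 tokens per second per GPU, sustained for about 34,560 GPU-hours.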

Recently released Bagel 34B, a fine-tune of the Yi 34B LLM, is near the top of the HuggingFace Open LLM Leaderboard. We shared their cool cover art above.

Top Tools & Hacks

CrewAI, an open-source framework for orchestrating teams of autonomous AI agents, is getting some buzz (H/T Matthew Berman). It sets up teams of role-based AI agents, gives them tools and goals, and has the agents work together. It’s worth checking out.

AI Research News

The paper “LLM Augmented LLMs: Expanding Capabilities through Composition” from Google Research presents a new technique, called CALM (Composition to Augment Language Models), that combines multiple LLMs to expand their capabilities to new tasks.

The CALM method is neither fine-tuning nor model merging. It keeps the existing LLMs and their weights intact and combines the distinct original models using cross-attention mechanisms, adding only a few additional parameters and a small amount of data to train the composition. The authors showed utility across several use cases with PaLM-based models, including coding and low-resource languages.
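The core idea, cross-attention from one frozen model into another, can be illustrated with a toy sketch. This is not the paper's implementation: the function `compose_layer`, the matrix names, and the tiny dimensions are all hypothetical, and random matrices stand in for real hidden states. The only trainable pieces would be the projection and attention weights, while both models' own weights stay untouched.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def compose_layer(anchor_h, aug_h, w_proj, w_q, w_k, w_v):
    """Hypothetical composition step: anchor states attend to augmenting states.

    anchor_h: (n_anchor, d_anchor) hidden states from the frozen anchor model
    aug_h:    (n_aug, d_aug) hidden states from the frozen augmenting model
    """
    # Project augmenting-model states into the anchor model's dimension.
    aug_proj = matmul(aug_h, w_proj)
    # Queries come from the anchor; keys and values from the augmenting model.
    q = matmul(anchor_h, w_q)
    k = matmul(aug_proj, w_k)
    v = matmul(aug_proj, w_v)
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    attended = matmul(weights, v)
    # Residual connection: the anchor representation is kept and augmented.
    return [[h + a for h, a in zip(h_row, a_row)]
            for h_row, a_row in zip(anchor_h, attended)]

rng = random.Random(0)
n_tokens, d_anchor, d_aug = 4, 8, 6          # toy sizes, chosen arbitrarily
anchor_h = random_matrix(n_tokens, d_anchor, rng, scale=1.0)
aug_h = random_matrix(n_tokens, d_aug, rng, scale=1.0)
w_proj = random_matrix(d_aug, d_anchor, rng)
w_q = random_matrix(d_anchor, d_anchor, rng)
w_k = random_matrix(d_anchor, d_anchor, rng)
w_v = random_matrix(d_anchor, d_anchor, rng)

composed = compose_layer(anchor_h, aug_h, w_proj, w_q, w_k, w_v)
print(len(composed), len(composed[0]))
```

Because of the residual connection, the composed output keeps the anchor model's shape, so it can be fed back into the anchor model's remaining layers.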

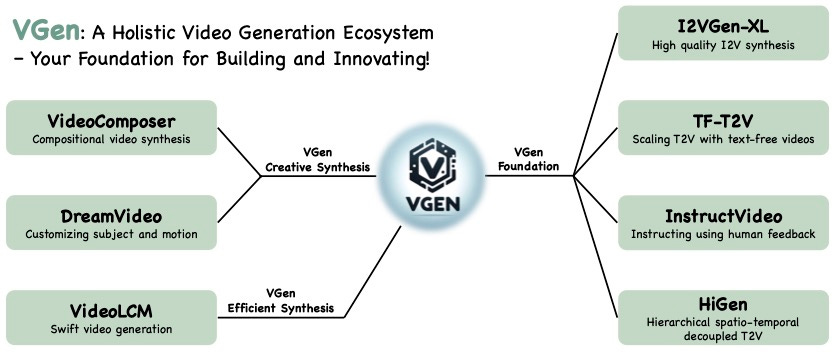

AI video generation will get crazy good in 2024. Alibaba has joined in the efforts, with a research paper “I2VGen-XL: High-Quality Image-to-Video Synthesis via Cascaded Diffusion Models” and open-source models for image-to-video, text-to-video, and more. They recently released on GitHub the code and models for their full suite of AI video generation models, which they are calling VGen.

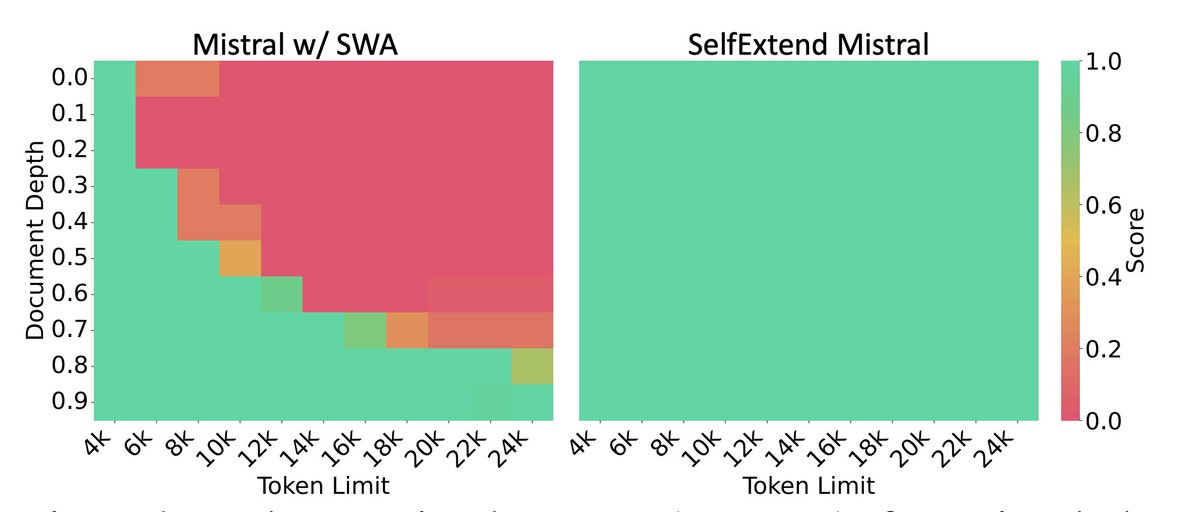

The work “LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning” showed how to extend context windows in LLMs without fine-tuning:

We propose Self-Extend to stimulate LLMs' long context handling potential. The basic idea is to construct bi-level attention information: the group level and the neighbor level. The two levels are computed by the original model's self-attention, which means the proposed does not require any training. With only four lines of code modification, the proposed method can effortlessly extend existing LLMs' context window without any fine-tuning.

This was used to self-extend Mistral 7B beyond an 8k context window to 16k, with greatly improved retrieval on the larger context window.
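The two attention levels described above can be sketched as a mapping from token positions to relative positions. This is a simplified illustration of the idea as we read it, not the authors' code: the function name is ours, and the group size and neighbor window below are illustrative values, not the settings used for Mistral 7B.

```python
def self_extend_relative_position(query_pos, key_pos, group_size, neighbor_window):
    """Relative position used for attention between a query and an earlier key.

    Nearby tokens (neighbor level) keep their exact distance. Distant tokens
    (group level) have their positions floor-divided by group_size, so many
    far-away tokens share one relative position the model has already seen.
    """
    distance = query_pos - key_pos
    if distance < 0:
        raise ValueError("causal attention: key must not come after query")
    if distance < neighbor_window:
        return distance
    # Shift grouped positions so they continue where the neighbor window ends.
    shift = neighbor_window - neighbor_window // group_size
    return query_pos // group_size - key_pos // group_size + shift

# Illustrative settings (assumptions, not taken from the paper's experiments).
GROUP_SIZE = 4
NEIGHBOR_WINDOW = 512
SEQ_LEN = 16384   # a 16k-token input

near = self_extend_relative_position(100, 90, GROUP_SIZE, NEIGHBOR_WINDOW)
far = self_extend_relative_position(SEQ_LEN - 1, 0, GROUP_SIZE, NEIGHBOR_WINDOW)
print(near, far)
```

With these settings, the largest relative position in a 16k-token input stays well below 8k, which is why the model never sees a distance outside its training range.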

What is the best way for AI to understand complex documents, with charts, figures and structures beyond text? JP Morgan researchers developed “DocLLM: A layout-aware generative language model for multimodal document understanding” to solve this problem. Calling DocLLM “a lightweight extension to traditional large language models (LLMs) for reasoning over visual documents,” they avoid full image encoders, instead combining OCR output with spatial awareness and attention in training DocLLM.

Their DocLLM 7B model was able to outperform Llama 7B on layout-intensive document understanding tasks.

AI Business and Policy

Intel is spinning out a generative AI software company called Articul8 AI, which grows out of an Intel collaboration with Boston Consulting Group (BCG) to build a generative AI platform for enterprise applications.

AI search startup Perplexity AI raised $73 million in funding from Bezos, Nvidia, and others, at a valuation of $520 million.

OpenAI’s annualized revenue increased to $1.6 billion at the end of 2023. They continue to gain a lot of users and traction with their AI models.

Autonomous delivery startup Nuro taps simulation company Foretellix to cut R&D costs. Foretellix specializes in generating millions of scenarios to test AV software, lowering the burden and cost of testing systems.

A leaked Midjourney document listing over 16,000 artists whose work they trained their AI model on “could be a moment of reckoning for AI art,” says CreativeBloq.

A newly leaked database titled 'Midjourney Style List' has been found to contain the names of over 16,000 artists whose work the generative model is believed to have been trained on. Spanning 24 pages, the document names Banksy, David Hockney, Walt Disney and many more, and is already provoking outrage online.

The style list is built around artists: the model was reportedly trained on their art so that it can invoke specific artists’ styles. Internal Midjourney chat discussions linked via X mention getting around copyright concerns - “launder it through a fine Codex” and “it’s impossible to trace what is a derivative work.” Is cloning an artist’s style (such as Picasso or Van Gogh) akin to voice cloning a popular singer’s voice? Is it taking something that belongs to the artist? The backlash will likely end up in the courts.

Gary Marcus and Reid Southen say Generative AI Has a Visual Plagiarism Problem. The problem in a nutshell: LLMs remember what they were trained on, and can spit it out:

Recent empirical work has shown that LLMs are in some instances capable of reproducing, or reproducing with minor changes, substantial chunks of text that appear in their training sets.

That doesn’t mean the training violates copyright, but the output very well might.

AI Opinions and Articles

Chief Justice John Roberts writes about AI’s impact in his annual report on the Federal Judiciary:

Law professors report with both awe and angst that AI apparently can earn Bs on law school assignments and even pass the bar exam. Legal research may soon be unimaginable without it. …

Many AI applications indisputably assist the judicial system in advancing those goals. As AI evolves, courts will need to consider its proper uses in litigation. …

I predict that human judges will be around for a while. But with equal confidence I predict that judicial work—particularly at the trial level—will be significantly affected by AI. Those changes will involve not only how judges go about doing their job, but also how they understand the role that AI plays in the cases that come before them.

A Look Back …

The report by Chief Justice Roberts reminds us that the interaction of technology and law isn’t new; AI and the law have been interacting for years. AI’s collision with copyright law isn’t new either.

A year ago, TechCrunch reported on lawsuits against Microsoft, OpenAI, Midjourney and others, stating “The current legal cases against generative AI are just the beginning.” They were referring to this class-action lawsuit from the Joseph Saveri Law Firm. They were right, of course: more cases have been brought, and the Federal courts will have to address them.

A quick check on this Doe v. GitHub lawsuit, filed in 2022, shows that it is still in the discovery phase. The legal system is moving so much slower than the technology that Elon Musk could be right: We will get to AGI before these legal matters are resolved.