AI Week In Review 24.01.20

Samsung's Galaxy S24 AI smartphone, Meta's Llama 3, Nightshade 1.0, self-rewarding LLMs, Runway ML's Multi Motion Brush, VMamba, and AI goes to Davos

AI Tech and Product Releases

Zuck touts open-source AGI: In a quick but stunning update, Mark Zuckerberg announced that Meta is training Llama 3, intends to release more AI models, and will develop ‘responsible’ open-source “full general intelligence,” or AGI. He shared that Meta is investing in a truly massive amount of training infrastructure: by the end of this year, Meta expects to have about 350,000 Nvidia H100s, or about 600,000 H100 equivalents of compute once its other GPUs are counted.

This is enough compute to train 20,000 GPT-3-level models over the next year, so expect continued major AI model releases from Meta on many fronts. Zuckerberg also touted glasses as a great form factor for interacting with AI, so expect more developments to follow Meta's Ray-Ban Meta smart glasses.
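As a rough sanity check on the 20,000 figure, here is a back-of-envelope sketch. It assumes GPT-3 took about 3.14e23 FLOPs to train (the figure reported in the GPT-3 paper), an H100 dense BF16 peak of roughly 989 TFLOPS, 40% average utilization, and a full year of use of all 600,000 H100 equivalents. The utilization and full-year assumptions are ours, not Meta's.

```python
# Back-of-envelope estimate: how many GPT-3-scale training runs fit in one
# year of Meta's announced compute? All constants below are assumptions.

GPT3_TRAIN_FLOPS = 3.14e23       # approx. total training compute reported for GPT-3 (175B)
H100_PEAK_FLOPS = 989e12         # approx. H100 SXM dense BF16 peak, in FLOP/s
UTILIZATION = 0.40               # assumed average fraction of peak actually achieved
SECONDS_PER_YEAR = 365 * 24 * 3600


def gpt3_runs_per_year(num_h100_equivalents: int,
                       utilization: float = UTILIZATION) -> float:
    """Number of GPT-3-sized training runs the fleet could complete in a year."""
    effective_flops_per_sec = num_h100_equivalents * H100_PEAK_FLOPS * utilization
    total_flops = effective_flops_per_sec * SECONDS_PER_YEAR
    return total_flops / GPT3_TRAIN_FLOPS


if __name__ == "__main__":
    runs = gpt3_runs_per_year(600_000)
    print(f"~{runs:,.0f} GPT-3-scale runs per year")
```

Under these assumptions the estimate lands at roughly 24,000 runs (about 21,000 at 35% utilization), the same order of magnitude as the 20,000 figure. The result scales linearly with whatever utilization you assume.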

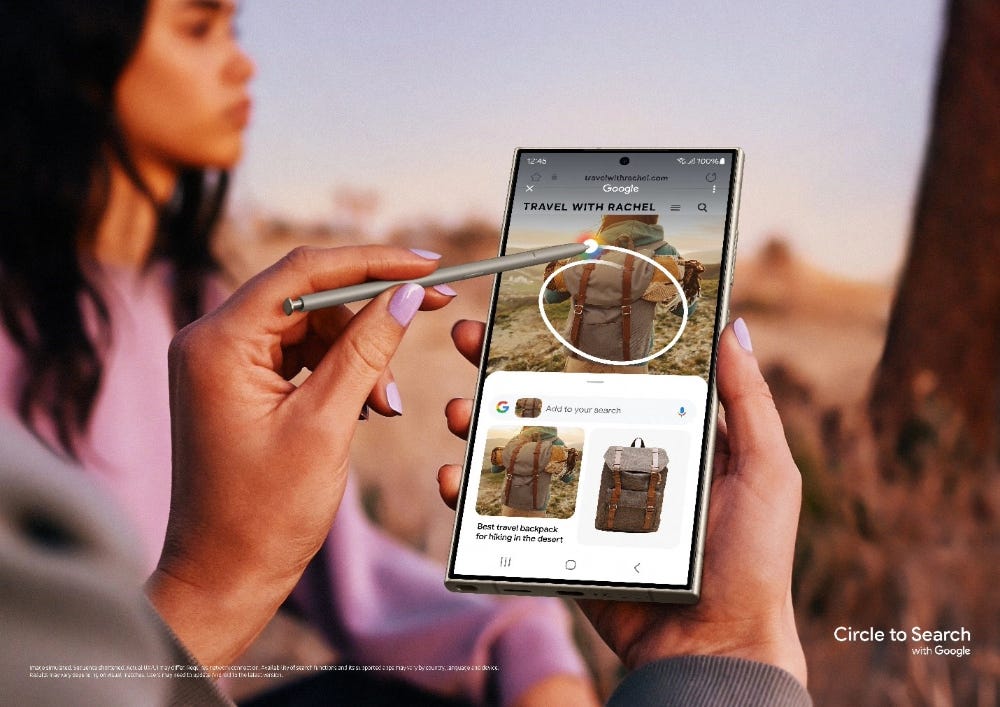

At this week's Galaxy Unpacked event, Samsung rolled out the Galaxy S24 and declared that the “new era of mobile AI” has arrived. Samsung is pitching the Galaxy S24 as the first AI phone, with AI tools throughout the “kitchen-sink” S24:

Live Translate - translates voice and text in real time during phone calls.

Transcript Assist - uses AI and speech-to-text to transcribe, summarize, and translate recordings, plus AI-generated note-taking.

AI-powered image capturing and editing tools, such as generative edit, edit suggestions to perfect photos, instant slo-mo for video capture, etc.

Circle to Search - circle an item in an image or on a website to search for it.

Google Cloud's generative AI powers AI features on the S24: Gemini Pro and the Imagen 2 image generation model run via Google Cloud, while Gemini Nano runs on the phone itself. Plus, “Samsung is one of the first customers to test Gemini Ultra.”

Samsung also announced a smart ring. We now have an AI PC, AI smartphone, and AI will be coming to every personal device - smartwatch, ring, pin, glasses, and more. No device left behind.

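Nightshade 1.0 has been released. Nightshade is an ‘image poisoning’ tool, “an offensive tool that artists can use as a group to disrupt models that scrape their images without consent.” It subtly alters an image's pixels so that a model trained on it learns the wrong concept: a picture of a cow in a field may look unchanged to people, while a model learns it as a leather purse.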

The real question is whether Nightshade will make a difference. With millions of unpoisoned images and artworks available, AI model builders could simply filter out poisoned images and still do their training. What is really needed is better consent and copyright guidelines for IP used to train AI models.

Video generation keeps getting better. Runway ML's Gen-2 gets an incremental upgrade, Multi Motion Brush: “Control multiple areas of your video generations with independent motion.”

Microsoft has made its AI-powered Reading Coach free. This reading tutor application provides learners with personalized reading practice; it’s now available at no cost to anyone with a Microsoft account.

All that AI running on expensive cloud GPUs won’t be free. Microsoft has gone live with a paid Pro plan for Copilot:

Copilot Pro — the new consumer plan, priced at $20 per user per month — gives customers access to Copilot GenAI features across Word, Excel (in preview, only in English for now), PowerPoint, Outlook and OneNote on PC, Mac and iPad — if they have a Microsoft 365 Personal or Family plan, that is. Copilot Pro doesn’t come bundled with a Microsoft 365 subscription. As with the Copilot enterprise offering (Copilot for Microsoft 365), it’s a premium add-on — bringing the total cost of the lowest-tier Microsoft 365 subscription to $27 per month ($6.99 per month for Microsoft 365 Personal plus $20 for Copilot Pro).
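The arithmetic behind that $27 figure, extended to a year, is easy to check. The prices are the US launch prices quoted above, and the sketch assumes month-to-month billing for both products; annual plans or later price changes would give different totals.

```python
# Monthly and annual cost of the cheapest Microsoft 365 + Copilot Pro combo,
# using the US prices quoted above (assumes month-to-month billing for both).
from decimal import Decimal

M365_PERSONAL_MONTHLY = Decimal("6.99")
COPILOT_PRO_MONTHLY = Decimal("20.00")


def combined_cost(months: int = 1) -> Decimal:
    """Total cost of Microsoft 365 Personal plus Copilot Pro for `months` months."""
    return (M365_PERSONAL_MONTHLY + COPILOT_PRO_MONTHLY) * months


print(combined_cost())      # 26.99
print(combined_cost(12))    # 323.88
```

`Decimal` keeps the currency math exact, avoiding binary floating-point rounding on cents.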

The Rabbit R1, the hit of CES last week, will use Perplexity's AI ‘answer engine’ to deliver real-time information on the device.

NASA showed off progress in robotic, self-assembling structures, which could be the next phase of space construction.

Top Tools & Hacks

The founders of another AI agent, MultiOn, tout it as pushing the envelope on complex multi-step actions:

Excited to announce that @MultiON_AI can now take actions well over 500+ steps without losing context & cross-operate on 10+ websites as part of a single task!!

Our latest upgrade cracks the goal divergence & looping issues that have long plagued agents like AutoGPT.
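The announcement doesn't say how MultiOn solved looping. As a generic illustration of what "looping" means for an agent, the sketch below flags an agent that repeats the same action on the same page more than a threshold number of times, so a controller could stop and re-plan. The `LoopGuard` class, its threshold, and the URL are hypothetical; this is not MultiOn's method.

```python
# Hypothetical loop guard for a web agent (NOT MultiOn's approach): count how
# often each (page, action) pair recurs and flag the agent once it exceeds a limit.
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LoopGuard:
    max_repeats: int = 3                          # assumed threshold
    seen: Counter = field(default_factory=Counter)

    def check(self, page_url: str, action: str) -> bool:
        """Record one step; return True if the agent appears to be looping."""
        key = (page_url, action)
        self.seen[key] += 1
        return self.seen[key] > self.max_repeats


guard = LoopGuard(max_repeats=2)
steps = [("shop.example/cart", "click:checkout")] * 4
flags = [guard.check(url, act) for url, act in steps]
print(flags)  # [False, False, True, True]
```

Real agents would likely need fuzzier matching (page state rather than exact URLs), but exact repetition is the simplest signal of a stuck loop.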

AI Research News

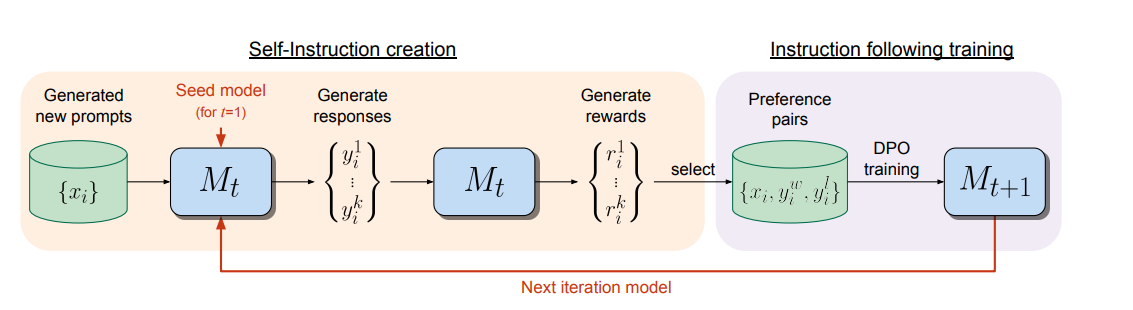

To scale training of AI models, we need to overcome human bottlenecks. “We posit that to achieve superhuman agents, future models require superhuman feedback in order to provide an adequate training signal.” This is the thesis behind Self-Rewarding Language Models, a paper by Meta researchers. They used LLMs to generate new prompts and had the model being trained both answer those prompts and judge its own answers, producing the reward signal for its next round of training without further human feedback.

They showed that this “pulling yourself up by your bootstraps” process for LLM fine-tuning works. Llama 2 70B, the model they tested it on, was a good enough judge of responses to serve as its own reward model, and it iteratively improved beyond prior fine-tuning methods:

We show that during Iterative DPO training that not only does instruction following ability improve, but also the ability to provide high-quality rewards to itself. Fine-tuning Llama 2 70B on three iterations of our approach yields a model that outperforms many existing systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613.

Due to these positive results, this scalable method to fine-tune AI models could become an important “arrow in the quiver” in the overall AI model training process.
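The core of one self-rewarding iteration can be sketched in a few lines. As described in the paper, the model generates several candidate responses to a prompt, scores each one itself with an LLM-as-a-Judge prompt, and the highest- and lowest-scoring responses become a (chosen, rejected) pair for DPO training; ties are discarded. This sketch assumes generation, judging, and log-probability computation have already happened. The function names and the `beta = 0.1` default are our assumptions, not the paper's code.

```python
# Minimal sketch of self-rewarding preference-pair construction plus the
# standard DPO loss. Inputs (responses, self-scores, log-probs) are assumed given.
import math
from typing import Optional, Sequence, Tuple


def build_preference_pair(responses: Sequence[str],
                          self_scores: Sequence[float]) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected) = (highest-, lowest-scored) responses.

    Scores come from the model judging its own outputs. Returns None when the
    best and worst scores tie, since a tie carries no preference signal.
    """
    best = max(range(len(responses)), key=lambda i: self_scores[i])
    worst = min(range(len(responses)), key=lambda i: self_scores[i])
    if self_scores[best] == self_scores[worst]:
        return None
    return responses[best], responses[worst]


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one pair, from sequence log-probs under policy and reference."""
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return softplus(-beta * margin)   # equals -log(sigmoid(beta * margin))


pair = build_preference_pair(["A", "B", "C", "D"], [4, 2, 5, 2])
print(pair)  # ('C', 'B')
```

Each iteration retrains on pairs built this way, so the model that judges the next round is the improved model from the previous one. That feedback loop is what lets both instruction following and judging ability improve together.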

“TrustLLM: Trustworthiness in Large Language Models” is a comprehensive study of trustworthiness in LLMs. Trustworthiness has many facets, and the authors measure six of them: truthfulness, safety, fairness, robustness, privacy, and machine ethics. The study evaluated 16 LLMs on these dimensions and reported these findings:

“General trustworthiness and utility (i.e., functional effectiveness) are positively related.”

Proprietary LLMs generally outperform most open-source counterparts in terms of trustworthiness.

“some LLMs may be overly calibrated towards exhibiting trustworthiness, to the extent that they compromise their utility by mistakenly treating benign prompts as harmful and consequently not responding.”

“Finally, we emphasize the importance of ensuring transparency not only in the models themselves but also in the technologies that underpin trustworthiness. Knowing the specific trustworthy technologies that have been employed is crucial for analyzing their effectiveness.”

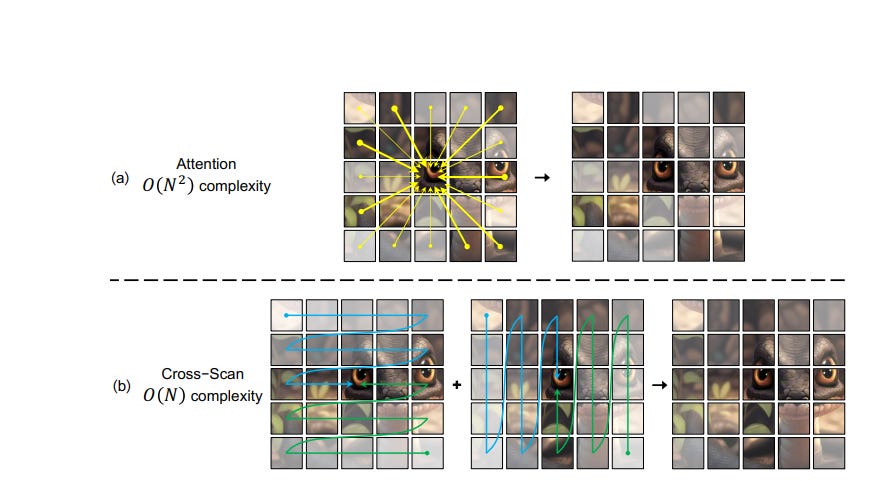

Just last week the Mamba Mixture-of-Experts paper came out, and now comes “VMamba: Visual State Space Model,” which applies Mamba to visual understanding. VMamba aims to outperform Vision Transformers (ViTs) by keeping their critical “global receptive fields” while replacing the quadratic complexity of self-attention with a Cross-Scan Module (CSM). The CSM scans the image along four directions so that each patch can draw on context from the whole image, at linear complexity.

VMamba shows strong results across a range of visual perception tasks, and its efficiency advantage over ViTs grows as image resolution increases.
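VMamba's Cross-Scan Module unfolds the 2D patch grid into 1D sequences along four routes (row-wise and column-wise, each forwards and backwards), runs a state space model over each sequence, and merges the results back into a 2D map. The pure-Python sketch below shows that unfold/merge step on a single-channel grid. The real model uses a learned, input-dependent (selective) scan; `toy_linear_scan` is a deliberately simplified fixed-coefficient recurrence we substitute only to show why each pass costs time linear in the number of patches, rather than quadratic as with self-attention.

```python
# Sketch of VMamba-style cross-scan / cross-merge on an H x W single-channel grid.
# toy_linear_scan is a simplified stand-in, NOT VMamba's selective scan.
from typing import List

Grid = List[List[float]]


def cross_scan(grid: Grid) -> List[List[float]]:
    """Unfold the grid into four 1D routes: row-major, column-major, and both reversed."""
    h, w = len(grid), len(grid[0])
    rows = [grid[i][j] for i in range(h) for j in range(w)]
    cols = [grid[i][j] for j in range(w) for i in range(h)]
    return [rows, cols, rows[::-1], cols[::-1]]


def cross_merge(seqs: List[List[float]], h: int, w: int) -> Grid:
    """Map each route back to (i, j) positions and sum the four contributions."""
    rows, cols, rows_rev, cols_rev = seqs
    rows_rev, cols_rev = rows_rev[::-1], cols_rev[::-1]   # undo the reversals
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            r = i * w + j   # index of (i, j) in row-major order
            c = j * h + i   # index of (i, j) in column-major order
            out[i][j] = rows[r] + rows_rev[r] + cols[c] + cols_rev[c]
    return out


def toy_linear_scan(seq: List[float], a: float = 0.9, b: float = 0.1) -> List[float]:
    """Recurrence h_t = a*h_{t-1} + b*x_t: one pass, O(L) in sequence length."""
    state, out = 0.0, []
    for x_t in seq:
        state = a * state + b * x_t
        out.append(state)
    return out


grid = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
routes = [toy_linear_scan(s) for s in cross_scan(grid)]
merged = cross_merge(routes, h=2, w=3)   # 2 x 3 map mixing context from all four routes
```

Because each route sweeps the whole grid from a different corner, every output cell receives information from patches in every direction, which is how the CSM approximates a global receptive field without pairwise attention.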

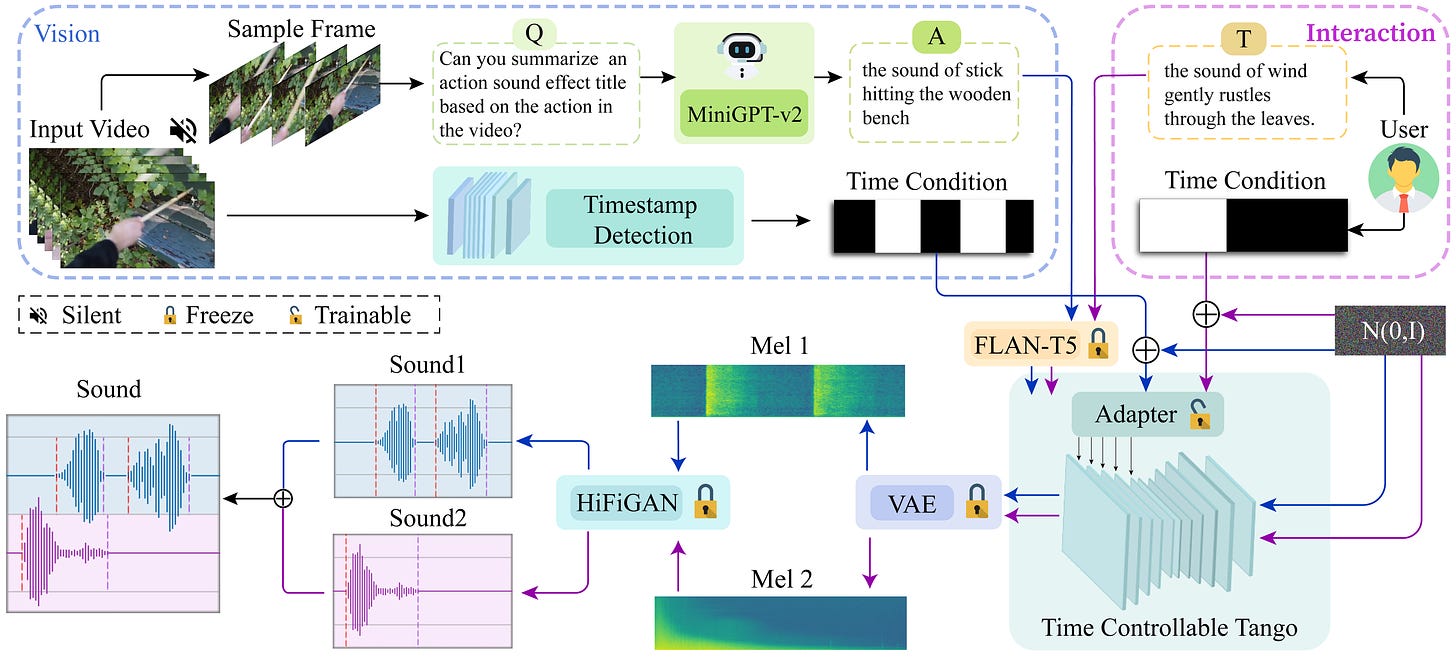

Need to add sound effects to videos? “SonicVisionLM: Playing Sound with Vision Language Models” does just that: it uses vision language models (VLMs) to suggest plausible sounds for a video, then maps those text descriptions to specific sound effects. The authors report state-of-the-art results for video-to-audio generation, and the examples shown on X are impressive.

AI Business and Policy

OpenAI CEO Sam Altman is reportedly seeking billions of dollars from investors, “including Abu Dhabi-based G42 and Japan's Softbank,” to build out a network of AI chip fabs.

Elon Musk denied a report that his AI company, xAI, had raised $500 million.

AI on campus: OpenAI signs up its first higher education customer, Arizona State.

Delivery company DPD disabled the AI in its customer-service chatbot after the bot swore at a customer and called DPD the ‘worst delivery firm in the world.’ A cheeky user got the bot to go rogue and shared it all on X. Lesson for companies adopting AI bots: implement guardrails, or your AI will get hacked!
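The simplest form of guardrail checks the bot's draft reply against a few rules before it reaches the customer. The patterns and fallback message below are hypothetical examples. Keyword filters are only one layer, since a determined user can often talk around them, so they are usually combined with input filtering, a moderation model, and a tightly scoped system prompt.

```python
# Hypothetical output guardrail: screen a chatbot's draft reply before sending it,
# and substitute a safe canned answer if any rule matches.
import re

# Illustrative rules only; a real deployment would tune these to its own policy.
BLOCKED_PATTERNS = [
    r"\b(damn|hell)\b",                  # mild profanity placeholder list
    r"\bworst\b.*\b(company|firm)\b",    # the bot disparaging the brand
]
FALLBACK = "Sorry, I can't help with that. Let me connect you with a human agent."


def guard_reply(draft: str) -> str:
    """Return the bot's draft reply if it passes every rule, else a safe fallback."""
    for pattern in BLOCKED_PATTERNS:
        if re.search(pattern, draft, flags=re.IGNORECASE):
            return FALLBACK
    return draft


print(guard_reply("Your parcel will arrive tomorrow."))              # passes through unchanged
print(guard_reply("We are the WORST delivery firm in the world!"))   # replaced by FALLBACK
```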

AI Opinions and Articles

Business Insider summarizes “What the global elite are saying about AI at Davos.” AI was the biggest New Thing there, as it has been at other major recent events. Key takeaways from the CEOs and tech moguls:

Tasks not jobs: “AI won't generally replace jobs, these people said, but it will absolutely automate tasks.”

And yet AI will also cut costs by replacing jobs: “A recent survey found that 51% of global executives, 50% of global CEOs, and 52% of US CEOs said job replacement could be one of the effects of adopting AI in their businesses.”

AI is going from pilot to production now. “2024 will be the year of operationalizing AI.”

Some other top execs speaking on AI:

"We completely believe that this [AI] will have a big impact ... you have to have the ability to convert processes to technique through language models and learning models. The trick in that is that we have to have accountability." - Bank of America CEO Brian Moynihan

"I think at the edge of the network it's going to be very important to have AI to take quick decisions very close to the end views of the customer or the enterprise." - Verizon Chairman and CEO Hans Vestberg

"We developed an approach called generative chemistry to try to speed up how fast we can bring new medicines into the clinic ... it's really opening up new horizons." - Novartis CEO Vasant Narasimhan

A Look Back …

Ray Kurzweil has an interesting track record as a futurist. An article out just this week reports his prediction that within seven years we'll reach immortality, in a brave new world of AI and nanobots.

In “The Age of Spiritual Machines,” published in 1999, Kurzweil predicted that the following would be happening by 2029:

Automated agents are learning on their own without human spoon-feeding of information and knowledge. Computers have read all available human and machine-generated literature and multimedia material, which includes written, auditory, visual and virtual experience works.

Significant knowledge is created by machines with little or no human intervention. Unlike humans, machines easily share knowledge structures with one another.

It sounds like Kurzweil nailed the AI revolution. Maybe he'll be right about our longevity as well.