AI Week In Review 24.01.27

Google's Lumiere, Google Chrome gets AI features, OpenAI GPT-4 update, GPT Mentions, Adept Fuyu-Heavy, Brave Leo adds Mixtral, Depth Anything, Google partners with HuggingFace.

AI Tech and Product Releases

OpenAI announced New embedding models and API updates, including a better GPT-4 Turbo and a cheaper GPT-3.5 Turbo:

An updated GPT-4 Turbo preview model that is intended to improve code generation and reduce ‘laziness’ in completing tasks.

An updated GPT-3.5 Turbo model, with input pricing lowered 50% (to $0.0005 per 1K tokens, or 50 cents per million input tokens).

An updated text moderation model and two new embedding models.
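To make the GPT-3.5 Turbo pricing arithmetic concrete, here is a minimal Python sketch. It uses only the two figures quoted above (the $0.0005 per 1K input-token price and the 50% cut); the function names are hypothetical helpers for illustration and are not part of any OpenAI API.

```python
from decimal import Decimal

# Figures quoted in the announcement summary above.
NEW_INPUT_PRICE_PER_1K = Decimal("0.0005")  # USD per 1K input tokens
PRICE_CUT = Decimal("0.50")                 # "lowered 50%"


def price_per_million(price_per_1k: Decimal) -> Decimal:
    """Convert a per-1K-token price into a per-million-token price."""
    return price_per_1k * 1000


def price_before_cut(new_price: Decimal, cut: Decimal) -> Decimal:
    """Back out the earlier price implied by a fractional price cut."""
    return new_price / (1 - cut)


def input_cost_usd(tokens: int, price_per_1k: Decimal) -> Decimal:
    """Cost in USD of sending `tokens` input tokens at the given price."""
    return Decimal(tokens) / 1000 * price_per_1k


if __name__ == "__main__":
    print(price_per_million(NEW_INPUT_PRICE_PER_1K))
    print(price_before_cut(NEW_INPUT_PRICE_PER_1K, PRICE_CUT))
    print(input_cost_usd(2_000_000, NEW_INPUT_PRICE_PER_1K))
```

Decimal is used instead of float so that tiny prices multiply out exactly. Output tokens are priced separately and are not covered by this sketch.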

OpenAI also added an important user feature to ChatGPT, GPT Mentions, which is listed as our “Top Tool” for this week below.

Google Chrome adds three new AI features to make your internet browsing life easier. Announced by Google in a blog post, these features help users:

Organize tabs with AI-curated tab groups.

Create Chrome themes.

Draft web writing using AI, such as filling in web inputs for party RSVPs, answering queries, or writing reviews.

Some Google Gemini users are commenting that Google Gemini Pro might have quietly gotten better recently. Bard (Gemini Pro) now scores close to GPT-4 Turbo in Arena Elo on the Chatbot Arena leaderboard (as of January 26). Is this an Ultra preview? It’s unclear.

Adept AI announced Adept Fuyu-Heavy, a new multimodal model designed specifically for digital agents. They claim:

Fuyu-Heavy is the world’s third-most-capable multimodal model, behind only GPT4-V and Gemini Ultra, which are 10-20 times bigger.

Their goal with this multi-modal AI model is to build an agentic workflow AI: “We’re building a machine learning model that can interact with everything on your computer.”

Brave Leo, the AI browser assistant, now features Mixtral for improved performance. Mixtral is currently among the best open-source AI models, so this is a great addition to the Leo Assistant. Other models available are Llama 2 and Claude Instant.

Arc Browser now lets users set Perplexity as their default search engine. Why is this important? Browsers are the UI of the internet. Josh Miller, CEO of The Browser Company, which makes the Arc browser, explains on X why they are betting on Perplexity:

Search Engines need Browsers for distribution – it's where their searches come from – which is why we're thrilled to support the next gen, like Perplexity. AI Search is the next frontier & it will be distributed via the Browser too. It's our chance to start anew.

PayPal launched new AI-driven personalized products as part of six new innovations in its PayPal and Venmo products, announced by their CEO in their FirstLook Keynote. AI buzzwords take over another company product announcement!

Top Tools & Hacks

GPT Mentions is a new feature from OpenAI. It lets you call a custom GPT inline, inside a ChatGPT conversation, by @-mentioning it. You can call on custom GPTs to act on the output of another GPT, which enables using multiple GPTs in a single conversation. This is like LangChain and its ability to string AI model invocations together, but without the need for programming.
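For contrast, here is roughly what stringing model invocations together looks like when you do have to program it. This is a minimal plain-Python sketch of the chaining idea, not LangChain's API and not how OpenAI implements GPT Mentions. The three "GPT" functions are hypothetical stand-ins that only format strings; in a real pipeline each would call a model.

```python
from functools import reduce
from typing import Callable, List

# A "step" takes text in and returns text out, like one model invocation.
Step = Callable[[str], str]


def research_gpt(prompt: str) -> str:
    """Hypothetical stand-in for a custom GPT that gathers notes."""
    return f"[research notes for: {prompt}]"


def writer_gpt(notes: str) -> str:
    """Hypothetical stand-in for a custom GPT that drafts text."""
    return f"[draft based on: {notes}]"


def editor_gpt(draft: str) -> str:
    """Hypothetical stand-in for a custom GPT that edits a draft."""
    return f"[edited: {draft}]"


def chain(steps: List[Step]) -> Step:
    """Compose steps so that each one receives the previous step's output."""
    def run(text: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, text)
    return run


pipeline = chain([research_gpt, writer_gpt, editor_gpt])

if __name__ == "__main__":
    print(pipeline("AI browsers"))
```

With GPT Mentions, the equivalent of `chain([...])` is done by hand in the conversation: you mention one GPT, then mention the next and ask it to work on what the previous one produced.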

There is a lot of interest on X in this brand-new feature, which just made custom GPTs more interesting and useful, since they can be strung together. Wil Reynolds noted the power of GPT Mentions to link together multiple GPT actions: “Chain together custom GPTs in your existing workflow. Should be pretty dang cool.”

OpenAI’s approach towards agents is taking shape: Powerful capabilities without requiring programming.

AI Research News

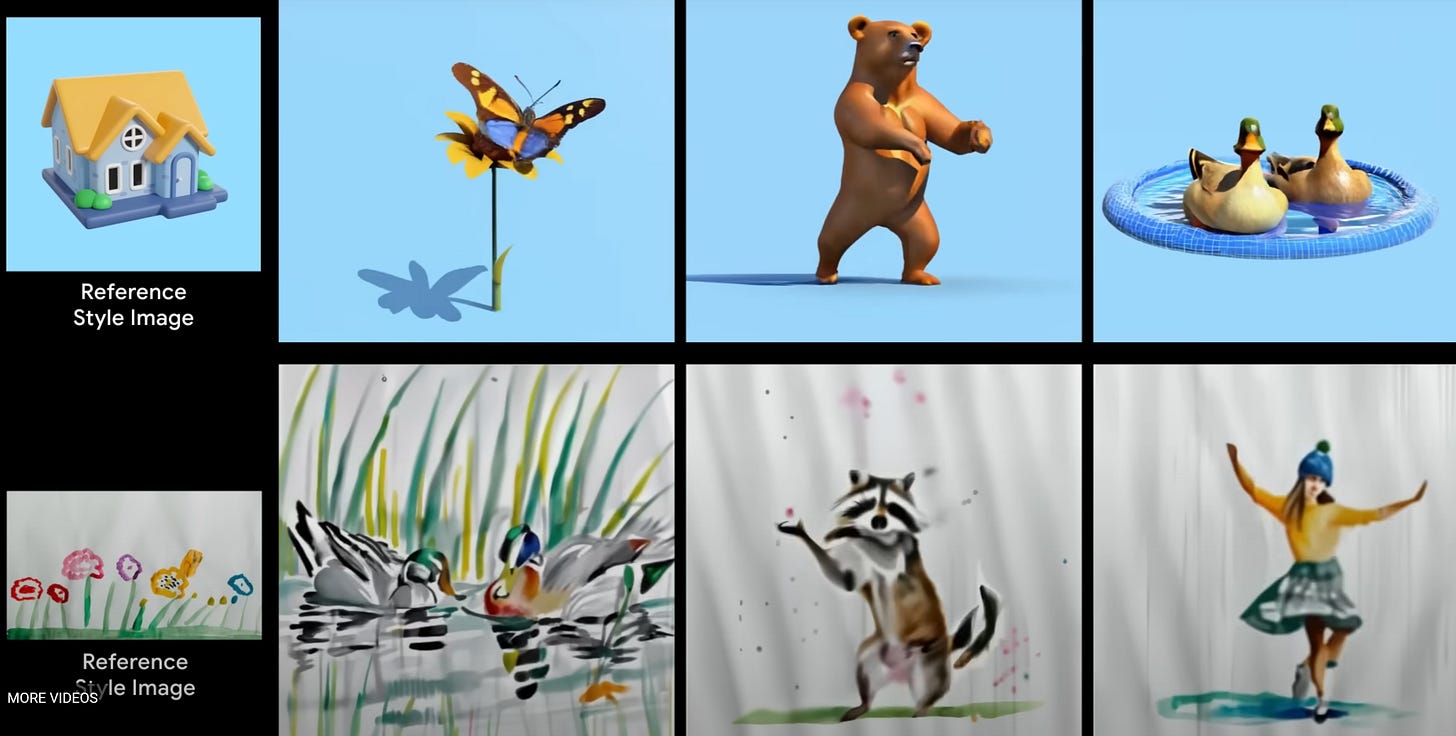

Presented in “Lumiere: A Space-Time Diffusion Model for Video Generation,” this new text-to-video AI model from Google Research is stunning people with its realism. It is based on a “Space-Time U-Net (STUNet) architecture” that implements both spatial and temporal down-sampling and up-sampling to generate the entire duration of a 5-second, 16 fps, 1024x1024 video in a single pass through the model.
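To get a feel for what down-sampling in both space and time means for tensor sizes, here is a small Python sketch that only tracks shapes. It is an illustration under stated assumptions, not Lumiere's implementation: the factor of 2 per level and the two levels are assumed values chosen for the example, and the resolution is simply the output size quoted above.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (frames, height, width)


def frame_count(duration_s: int, fps: int) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return duration_s * fps


def downsample(shape: Shape, factor: int = 2) -> Shape:
    """Shrink time AND space by `factor` (assumed value, for illustration)."""
    t, h, w = shape
    if t % factor or h % factor or w % factor:
        raise ValueError(f"{shape} is not divisible by {factor}")
    return (t // factor, h // factor, w // factor)


def upsample(shape: Shape, factor: int = 2) -> Shape:
    """Grow time and space back by the same factor."""
    t, h, w = shape
    return (t * factor, h * factor, w * factor)


def trace_shapes(shape: Shape, levels: int = 2, factor: int = 2) -> List[Shape]:
    """Shapes seen going down `levels` stages and back up (U-Net style)."""
    shapes = [shape]
    for _ in range(levels):
        shape = downsample(shape, factor)
        shapes.append(shape)
    for _ in range(levels):
        shape = upsample(shape, factor)
        shapes.append(shape)
    return shapes


if __name__ == "__main__":
    frames = frame_count(duration_s=5, fps=16)
    for s in trace_shapes((frames, 1024, 1024)):
        print(s)
```

The point of the sketch is that the whole clip, all frames at once, passes through the network, with the time axis compressed and restored alongside the spatial axes, rather than generating a few keyframes and filling in the gaps afterwards.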

Ars Technica says: “Lumiere generates five-second videos that portray realistic, diverse and coherent motion,” and links to a Google promotional video. Aside from high-quality video with better temporal consistency, it can provide image-to-video, stylized video generation, and region-based animation (like RunWayML’s motion brush). Google released a paper and promotional video, but not the AI model itself.

“MM-LLMs: Recent Advances in MultiModal Large Language Models” surveys the impressive progress in multi-modal Large Language Models (MM-LLMs), which can take in video, images, and audio as input, process them through an LLM backbone, and output to those modalities as well.

The survey covers 26 MM-LLMs developed and released in 2023 and describes both their differing implementations and the architectural patterns they share. Google’s Gemini is a ‘native’ MM-LLM and GPT-4 now has vision input, a sign that frontier AI models will be multi-modal going forward.

The paper “Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data” presents an AI model that estimates depth from single images, a problem called Monocular Depth Estimation. The Depth Anything model was trained on a combination of 1.5M labeled images and 62M+ unlabeled images, and the authors used semantic information to augment the unlabeled data to make it useful for model training.

Depth Anything achieved state-of-the-art results for depth perception. Code has been released on GitHub and the model is on HuggingFace. This approach could be helpful in areas such as self-driving cars and other real-world tasks needing depth perception.

AI Business and Policy

Google and HuggingFace form partnership to spur open-source AI adoption. This alliance between Google’s cloud business and open-source AI platform HuggingFace will help developers make and use open AI models more easily through Google Cloud. This move by Google validates the importance of open AI models as well as the HuggingFace vision of being a ‘GitHub’-like platform for them.

Google DeepMind Scientists in Talks to Leave and Form AI Startup focused on multi-agent Generative AI. The company name could be Holistic, and they have held discussions with investors about a financing round that could raise over 200 million euros.

Do the close relationships Tech Giants have with AI firms lead to antitrust concerns? FTC opens inquiry into Big Tech's partnerships with leading AI startups.

U.S. antitrust enforcers are opening an inquiry into the relationships between leading artificial intelligence startups such as ChatGPT-maker OpenAI and Anthropic and the tech giants that have invested billions of dollars into them.

“We’re scrutinizing whether these ties enable dominant firms to exert undue influence or gain privileged access in ways that could undermine fair competition,” said Lina Khan, chair of the U.S. Federal Trade Commission, in opening remarks at a Thursday AI forum.

North Carolina recently introduced new guidelines for artificial intelligence in schools, highlighting how AI can help students and teachers:

The state guidebook notes AI literacy should be infused into all grade levels and curriculum areas. It also stresses the importance of incorporating AI into the classroom responsibly — using it as a tool to aid in learning without becoming too reliant on it.

Multiple AI deepfake stories made the news this week: On the political front, an AI deepfake of Joe Biden was used in a robocall to New Hampshire Democrats. ElevenLabs tracked down the errant account that made this deepfake, and authorities are investigating.

Graphic AI fakes of Taylor Swift flooded onto X and went viral. This highly public example of AI deepfakery caused X to remind its users of its “zero-tolerance policy” on non-consensual nudity, stirred alarm at the actors’ union, and led to calls for action from the White House.

George Carlin's estate sues over AI-generated stand-up special titled 'I'm Glad I'm Dead'. The special had no visual representation of Carlin and clearly identified itself as a parody and impersonation, but it used a voice clone of Carlin to deliver an hour-long, Carlin-like (somewhat amusing) monologue. Carlin’s family, though, considered it theft:

It is a piece of computer-generated clickbait which detracts from the value of Carlin’s comedic works and harms his reputation. It is a casual theft of a great American artist’s work.

AI Opinions and Articles

Aaron Levie talks about the cost curve of AI:

What’s incredible about AI is the rate of cost reduction and efficiency improvements. You can build things that are cost prohibitive today knowing they’ll become economically viable shortly.

Economic revolutions are typically built on a product or service going through a massive cost reduction. The internet revolution was based on a million-fold reduction in the cost of bandwidth. Today’s AI revolution is seeing steep drops in the cost of LLM inference, with GPT-3.5 inference today costing a small fraction of what it did 15 months ago.

A Look Back …

Fellow Substack author Michael Spencer shared this quote, which I’d like to re-share. It’s a prescient explanation from the genius early computer pioneer and mathematician John von Neumann:

“There is thus this completely decisive property of complexity, that there exists a critical size below which the process of synthesis is degenerative, but above which the phenomenon of synthesis, if properly arranged, can become explosive, in other words, where syntheses of automata can proceed in such a manner that each automaton will produce other automata which are more complex and of higher potentialities than itself.” - John von Neumann, 1949.

This explains … everything. From life, to computers, to complex systems in general. And it applies to AI, in terms of the emergent properties we observe as AI scales up.

GPT-4 has reached a level that is good enough to train other AI models, and to review and critique its own output, bootstrapping its way to better results. There may be a ‘liftoff’ when AI builds on itself.