AI Week In Review 24.02.10

Gemini Ultra, Copilot's new look, Qwen 1.5, Laion's BUD-E, Frame, OpenHermes 2.5, Midjourney Style refs, FCC bans AI robocalls, Apple's MGIE, Multi-hop RAG

AI Tech and Product Releases

The top release of the week: Google released Gemini Ultra as “Gemini Advanced,” rebranded its AI offerings as Gemini, and launched new Gemini AI apps on iOS and Android. We covered all the details and an initial review of it in “Gemini Ultra Released - A First Look.”

Microsoft announced a new look and new features for Copilot, including image editing and a new model, Deucalion, that makes Copilot's Balanced mode richer and faster.

The Verge says “Microsoft’s next big AI push is here after a year of Bing” and it’s all about Copilot. Microsoft’s Super Bowl ad tagline is “Copilot - Your Everyday AI Companion.” Microsoft sees Copilot as a core product, similar to Windows and Office.

Alibaba released Qwen 1.5, a suite of open-source LLMs sized 0.5B to 72B, with base and chat models. These models are available on HuggingFace and GitHub.

Showing the strength of Qwen models as a base, the newly released Smaug-72B, a fine-tune of the Qwen 72B model, is billed as “The new king of open-source AI.” With an MMLU score of 77, it beats every other current open-source AI model on key benchmarks, as well as GPT-3.5 and Gemini Pro.

LAION announced BUD-E (Buddy for Understanding and Digital Empathy), a project to develop AI voice assistants that act as more natural, real-time conversation agents with empathy and context memory. This open-source project is on GitHub. Low-latency responses are key to making a voice interface feel natural.

The image watermarking standard C2PA is now in DALL-E 3. “Images produced through the API will contain a signature indicating they were generated by the underlying DALL·E 3 model.” Meta likewise announced that it will label AI-generated images on its social media platforms using similar standards.

A wild application for Apple Vision Pro lets you generate entire worlds: Outtakes for Vision Pro. It provides “Immersive animated 360 worlds upscaled to 8K, runs entirely local on Apple Silicon.” The AI model runs locally thanks to the high-powered M2 in the Vision Pro.

An alternative approach to AI eyewear is not to put a computer in front of your face but to use the eyeglasses as a screen only. Frame from Brilliant Labs does that. Just announced and available for pre-order, the Frame glasses come with a multimodal AI assistant named Noa that taps into GPT-4 and other AI models to operate.

A new open dataset for fine-tuning LLMs was released, OpenHermes2.5-dpo-binarized-alpha. This uses open-source models and data for everything and is available on HuggingFace.

Stability AI released SVD 1.1, an update to its Stable Video Diffusion video generation model, for “better motion and more consistency.” It’s incremental progress.

Top Tools & Hacks

AI tools for image creators keep getting better.

Midjourney released style referencing last week, which lets you use images as a style guide. Here are pointers on how to use it, with example results, from Heather Cooper on X. It’s incredibly useful for making consistent images and characters.

This week, Apple released an AI image tool that lets you make edits by describing them. It’s a great example of how yesterday’s AI research turns into tomorrow’s cool new AI tool.

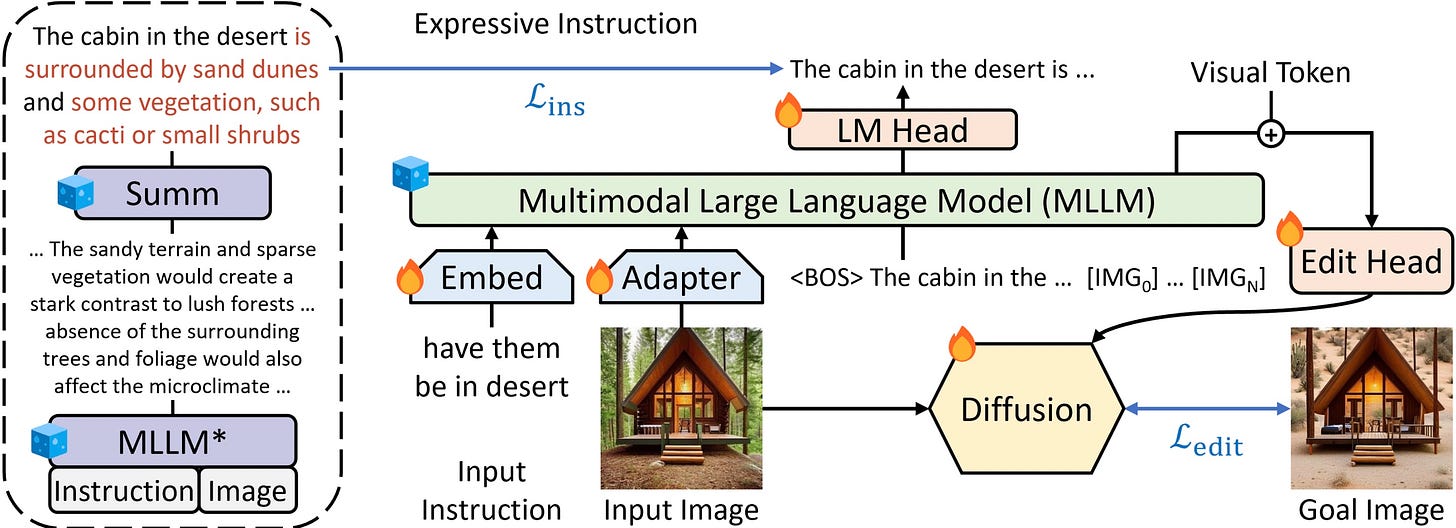

Apple released MLLM-Guided Image Editing (MGIE), an AI for editing images with text prompts. It uses an MLLM (multimodal large language model) to interpret the editing instruction in the context of the image being edited.

The MGIE research paper “Guiding Instruction-based Image Editing via Multimodal Large Language Models” was first shared on arXiv last September and is to be presented at ICLR. The open-source MGIE implementation is on Apple’s GitHub repo. This is why scouring AI papers gives you a heads-up on future product releases.

AI Research News

AI researchers from Tencent inform us “More Agents Is All You Need.” Their method generates multiple LLM inference responses, then votes for the most ‘popular’ response using response similarity scores. With this approach, LLM performance scales with the number of agents instantiated.

They quantify this across different LLMs and numbers of agents invoked for each response. For example, an ensemble of 15 Llama-2 70B LLM agents can improve to the level of GPT-3.5-turbo (single inference). This is another result showing more effort in inference can improve LLM reasoning.
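The sampling-and-voting idea described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the `generate` callable stands in for any LLM call, the function names are hypothetical, and token-level Jaccard overlap is an assumed stand-in for whatever similarity score the authors actually use.

```python
from typing import Callable, List


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity in [0, 1]; an assumed stand-in metric."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def vote(responses: List[str],
         similarity: Callable[[str, str], float] = jaccard) -> str:
    """Return the response most similar, in total, to all the others."""
    if not responses:
        raise ValueError("need at least one response to vote on")
    scores = [
        sum(similarity(r, other)
            for j, other in enumerate(responses) if j != i)
        for i, r in enumerate(responses)
    ]
    # Ties are broken in favor of the earliest response.
    best = max(range(len(responses)), key=scores.__getitem__)
    return responses[best]


def sample_and_vote(generate: Callable[[str], str],
                    prompt: str, n_agents: int) -> str:
    """Query the (hypothetical) model n_agents times, then vote."""
    responses = [generate(prompt) for _ in range(n_agents)]
    return vote(responses)
```

In practice `generate` would call the same model with sampling enabled, so repeated calls give different answers; the cost grows linearly with `n_agents`, which is the "more effort in inference" trade-off.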

AI advances another field of study: History. Part of an Ancient Herculaneum Scroll Deciphered by AI 2,000 Years After Eruption of Mount Vesuvius. Nat Friedman explained on X how they launched the Vesuvius Challenge to get help decoding the Herculaneum Papyri, and how ML researchers contributed to solving the challenge and winning the prize. AI and ML will continue to accelerate all areas of science.

New AI tool discovers realistic 'metamaterials' with unusual properties. Researchers from TU Delft have developed a deep-learning-based AI tool called Deep-DRAM that discovers exotic metamaterials and also makes them fabrication-ready and durable. Metamaterials are materials whose properties stem from their small-scale design and structure. Deep-DRAM predicts material properties with low error relative to direct simulation, which speeds up design and makes it possible to create devices with unprecedented functionalities.

Jerry Liu, founder of LlamaIndex, has touted recent work on MultiHop RAG:

An advanced RAG question should be able to handle complex, multi-part queries and synthesize a response. Excited to feature this work by Tang et al. that presents a novel dataset to specifically benchmark multi-hop queries.

The Multi-Hop RAG paper is “MultiHop-RAG: Benchmarking Retrieval-Augmented Generation for Multi-Hop Queries” and there is a Multi-Hop RAG project on GitHub.

Tiny Titans: Can Smaller Large Language Models Punch Above Their Weight in the Real World for Meeting Summarization? The authors found that most smaller LLMs fail to outperform larger zero-shot LLMs in meeting summarization, but that FLAN-T5 (780M parameters) was an exception.

AI Business and Policy

How Walmart, Delta, Chevron and Starbucks are using AI to monitor employee messages. AI-enabled or not, employee surveillance can get creepy, but this AI-powered tool from Aware, an AI firm specializing in analyzing employee messages, is doing it at scale: “Aware said its data repository contains messages that represent about 20 billion individual interactions across more than 3 million employees.”

Nvidia pursues $30 billion custom chip opportunity with new unit. As many tech firms are trying to build custom AI chips, Nvidia is building a new business unit to serve them. It’s another way for Nvidia to win even if more competing AI chips come on the market.

Deepfake scammer walks off with $25 million in first-of-its-kind AI heist. A Hong Kong firm was defrauded through a sophisticated phishing scheme that instructed an employee to execute a transfer of money. To convince the skeptical employee to make the fraudulent transfers, the scammers created deepfakes of the company CFO and others in a Zoom call.

The FCC bans robocalls with AI-generated voices. “This restricts callers from using AI-generated voices for non-emergency purposes or without prior consent.”

AI Opinions and Articles

YouTube's 2024 Vision includes a heavy emphasis on AI as an enabler for creators:

We’re approaching advances in AI with the same mission that launched YouTube years ago. We want to help everyone create. AI should empower human creativity, not replace it. And everyone should have access to AI tools that will push the boundaries of creative expression.

Generative AI is at the intersection of creativity and productivity. This has been quite unexpected, as it was always assumed creativity was the most human of activities and the last to fall to automation. Yet, now we see generative AI can be both a lever for productivity enhancement and a tool for ideation, opening new creative possibilities.

Andreessen-Horowitz tells us How AI Will Usher in an Era of Abundance and the opportunities for consumer AI businesses in that era, including some of the companies they see leading the way.

“We're entering a world in which consumers will experience abundance in creativity and productivity, in their relationships and social experiences, and in personal growth across dimensions like education, wellness, and financial health.

This will manifest in a new generation of AI-native consumer products and companies that grow faster and engage users more deeply than ever before.”

- A16z, The Abundance Agenda

PS. There’s too much news this week to have a “Looking Back” section. No looking back; we only look forward.