AI Week In Review 24.04.06

Higgsfield Diffuse video gen, Claude tool use, DALL-E 3 image edits, Krea multi-image prompt, Replit Teams and Code Repair, Stable Audio 2.0, Meta AI content labels, TrueMedia deepfake detection.

AI Tech and Product Releases

Higgsfield - an AI video generation company “dedicated to democratizing social media video creation for everyone” - has announced itself and launched a high-quality video generation AI model.

Higgsfield is led by former Snap AI chief Alex Mashrabov, and their released video generation tool is turning heads for its high quality, on par with OpenAI’s Sora video generator. As TechCrunch notes, “Sora has so far been tightly gated, and the firm seems to be aiming it toward well-funded creatives like Hollywood directors” while Higgsfield aims to cater to consumer users.

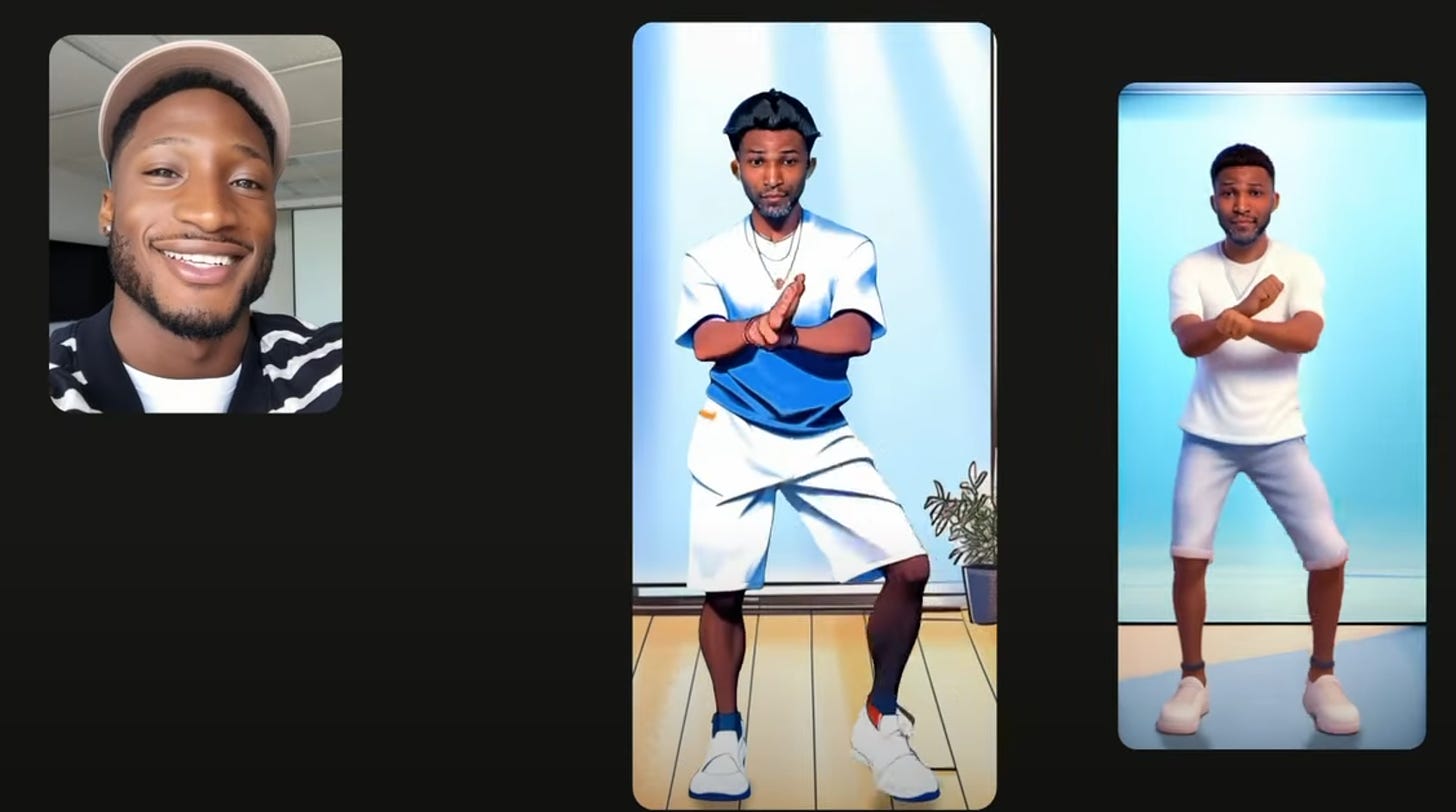

Their first release is Diffuse - a mobile app for personalized video generation, where users can create realistic human characters and then control and fine-tune videos featuring those characters:

Using Diffuse, users can insert themselves directly into an AI-generated scene, or have their digital likeness mimic things — like dance moves — captured in other videos.

Anthropic has enabled tool use (function-calling) as a beta feature in their latest Claude AI models. This is useful for fetching real-time data, building AI agents, integrating external services such as stock tickers, and more.

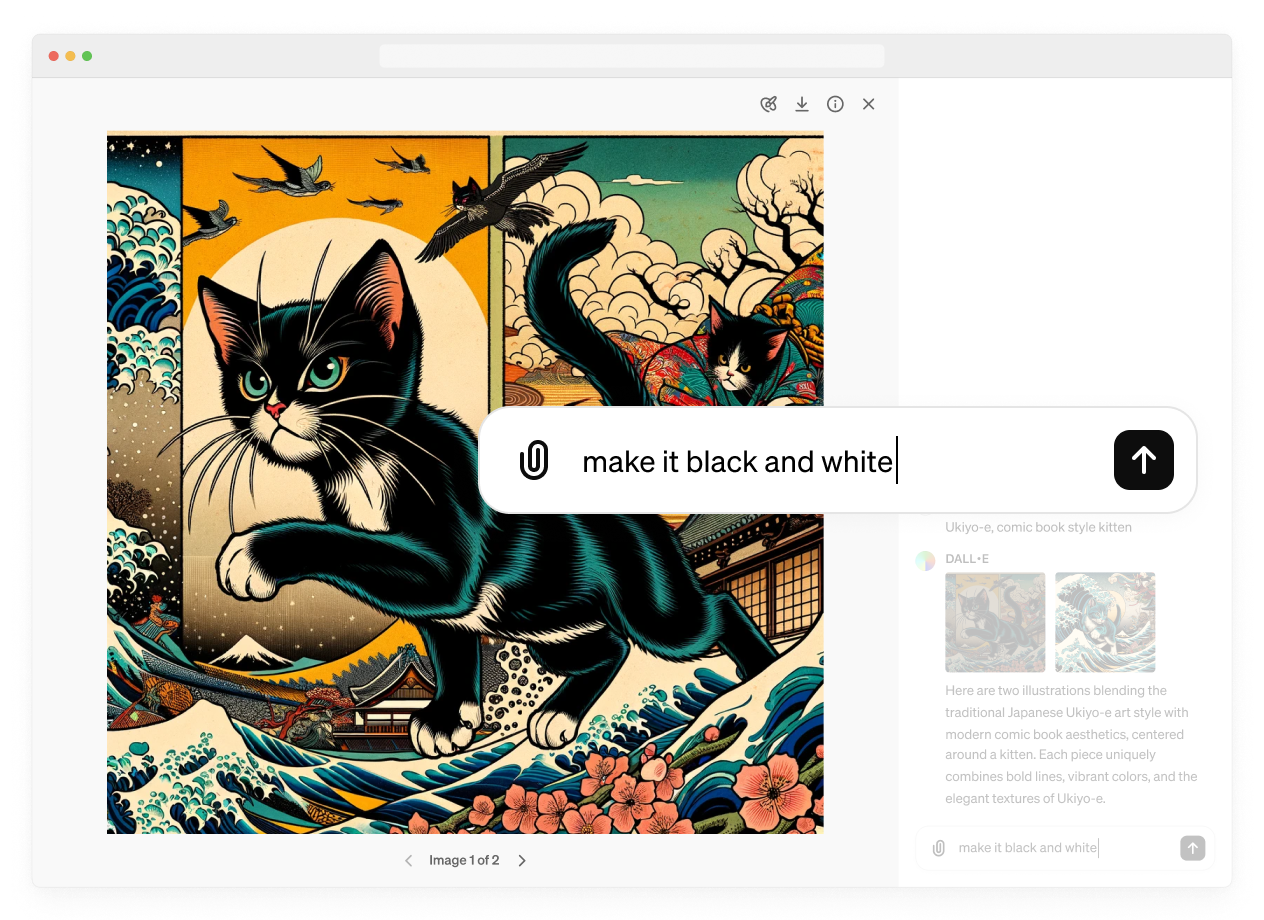
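To make the idea of tool use concrete, here is a minimal sketch of the general function-calling pattern: the application describes a tool with a name, a description, and a JSON Schema for its inputs; the model replies with a structured request to call it; and the application runs the function and returns the result. This sketch does not call any real API. The tool name `get_stock_price`, the price table, and the shape of the simulated request are all assumptions made for illustration, not Anthropic's actual interface.

```python
import json

# Hypothetical tool description: a name, a description, and a JSON Schema
# for the inputs. The field names here are illustrative assumptions.
GET_STOCK_PRICE_TOOL = {
    "name": "get_stock_price",
    "description": "Return the latest price for a stock ticker symbol.",
    "input_schema": {
        "type": "object",
        "properties": {"ticker": {"type": "string"}},
        "required": ["ticker"],
    },
}

# Stand-in for a real market-data source (made-up data).
_FAKE_PRICES = {"ACME": 123.45}


def get_stock_price(ticker: str) -> dict:
    """Look up a price in the stand-in table."""
    symbol = ticker.upper()
    price = _FAKE_PRICES.get(symbol)
    if price is None:
        return {"error": f"unknown ticker: {ticker}"}
    return {"ticker": symbol, "price": price}


# Map tool names to the local functions that implement them.
TOOL_HANDLERS = {"get_stock_price": get_stock_price}


def run_tool_call(tool_call: dict) -> str:
    """Execute a model-requested tool call and return a JSON string result."""
    name = tool_call["name"]
    handler = TOOL_HANDLERS.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    return json.dumps(handler(**tool_call["input"]))


# Simulated request, standing in for what a model might emit.
simulated_call = {"name": "get_stock_price", "input": {"ticker": "acme"}}
print(run_tool_call(simulated_call))
```

In a real integration, the JSON string returned by `run_tool_call` would be sent back to the model so it can compose its final answer from the tool result.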

OpenAI has added the ability to edit images generated with DALL-E. The ‘in-painting’-like feature lets you select an area of the image and describe your changes in chat. You can also direct the edit with a prompt in the conversation panel instead of using the selection tool.

AI image generation tool-maker Krea AI has announced a wild feature - multi-image prompts in real-time: “Now you can use up to 3 images to condition your generations with our new "HD" model.” In their demo, they uploaded 3 images and mixed them together; doing it in real-time makes it even more impressive. Krea AI has been adding other great features (overlay, upscale, enhance) to their toolset in recent months.

Replit launches “Replit Teams,” a collaborative AI coding assistant. Replit Teams allows developers and AI agents to develop software together in real-time, a “Google Docs for coding.” AI agents will be able to automatically fix coding errors:

“Our agents will require no prompting. They’ll just jump in and present a fix,” said Replit founder and CEO Amjad Masad, in an exclusive interview with Semafor.

Replit also just announced Code Repair, the “world’s first low-latency program repair AI agent.”

According to Replit, it is informed by Replit’s unique data on developer intuition and grounded in real-world use cases, so that it can automatically fix your code in the background.

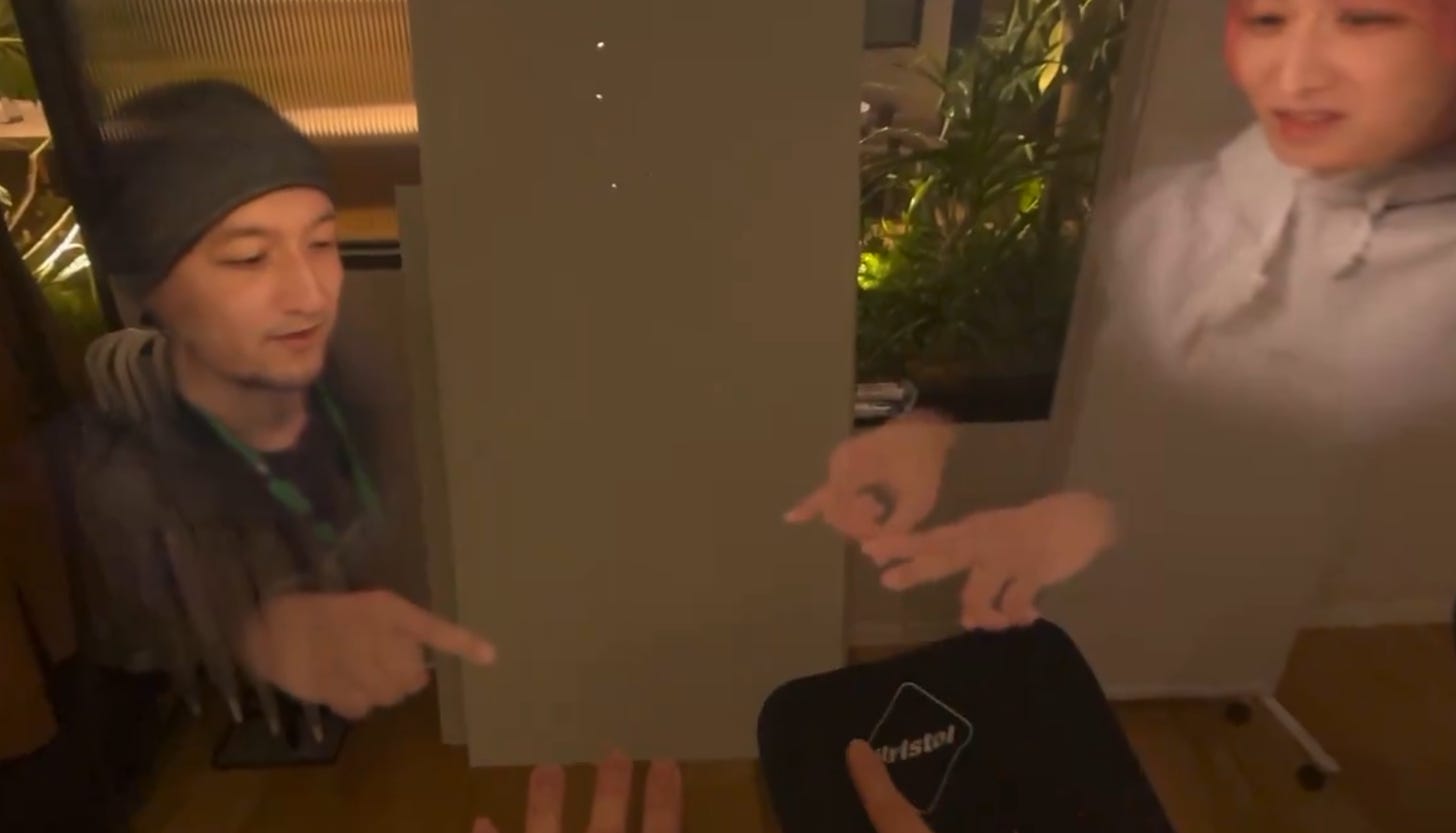

Apple Vision Pro is adding spatial Personas, the “closest thing to true telepresence we have today.”

Top Tools & Hacks

Stability AI has announced Stable Audio 2.0, and it’s worth a listen. New features include music generation of up to 3 minutes, producing “high-quality, full tracks with coherent musical structure.” It also has audio-to-audio generation, where users can upload sound or song clips and transform samples using natural language prompts.

Stability AI also touts its efforts to avoid copyright infringement and abuse of artists:

Stable Audio 2.0 was exclusively trained on a licensed dataset from the AudioSparx music library, honoring opt-out requests and ensuring fair compensation for creators.

You can try it out for free on the Stable Audio website. Reviews have been positive for instrumental music, although the results are usually judged not quite as good as Suno V3’s. One nice use case for Stable Audio: hum a tune into an audio clip and use it as input to generate a full AI-generated song or soundtrack.

AI Research News

As we shared yesterday, AI research highlights for this past week:

Many-shot Jailbreaking

SWE-Agent gets 12.3% on SWE-Bench

Octopus v2: An on-device function-calling AI model for better agents

Dynamic Inference Compute with Mixture-of-Depths

Study: OpenAI's GPT-4 Bar Exam Score Lower Than Claimed

CodeEditorBench: A Code Editor Benchmark

Apple's ReALM-3B Beats GPT-4 on Reference Resolution

AI Business and Policy

Meta will require labels on more AI-generated content. Meta announced in a blog post that, starting in May, it will begin labeling a broader range of video, audio, and image content as 'Made with AI'. This new policy addresses the challenges posed by generative AI deepfakes, such as election campaign misinformation, and responds to feedback from Meta's own Oversight Board and others. In particular, a poll Meta conducted found that most respondents favored disclosure of AI content:

A large majority (82%) favor warning labels for AI-generated content that depicts people saying things they did not say.

In related news, TrueMedia.org releases AI deepfake detector to identify political fake content in the upcoming elections. TrueMedia is a non-partisan organization launched in January, and led by Oren Etzioni:

In addition to launching the new tool Tuesday morning, TrueMedia.org reached a memorandum of understanding with Microsoft to share data and resources, collaborating on different AI models and approaches.

These AI startups stood out the most in Y Combinator’s Winter 2024 batch, according to TechCrunch:

Hazel - AI for the government contracting process.

Andy AI - AI-powered transcription tool to help ease the documentation burden for nurses.

Precip - an AI-powered weather forecasting platform.

Maia - AI-powered guidance to couples to build stronger relationships.

Datacurve - “expert-quality” data for training generative AI models, specifically code data.

OpenAI-backed autonomous driving software startup Ghost Autonomy has shut down. Founded in 2017, they struggled to bring their planned self-driving capabilities to fruition, and ultimately couldn’t finance further development efforts.

Waymo, on the other hand, is gaining traction with its self-driving cars. As one headline put it: “Uber Eats Working With Waymo to Get Rid of Pesky Delivery Drivers.” This week, Uber Eats launched an autonomous food delivery service in Phoenix, Arizona.

Legal tech startup Luminance has raised $40 million in a Series B funding round. The company specializes in generative AI tools for lawyers; its technology automates the process of creating, negotiating and analyzing contracts and other legal documents.

Washington judge bans use of AI-enhanced video as trial evidence. In a case involving cellphone video as evidence, the defendant’s lawyers created an AI-enhanced version of that video. However, AI upscaling can introduce artifacts that were never in the original footage, so the judge rightly decided to forbid it as evidence.

“This Court finds that admission of this AI-enhanced evidence would lead to a confusion of the issues and a muddling of eyewitness testimony, and could lead to a time-consuming trial within a trial about the non-peer-reviewable process used by the AI model.” - Judge McCullough

Leading Companies Launch Consortium to Address AI's Impact on the Technology Workforce. This “AI-Enabled ICT Workforce Consortium” was initiated by Cisco CEO Chuck Robbins’ participation in the U.S.-EU Trade and Technology Council Talent for Growth Task Force. This Consortium is led by Cisco and joined by Accenture, Eightfold, Google, IBM, Indeed, Intel, Microsoft, and SAP.

It will assess AI's impact on technology jobs, address the need for a “proficient workforce” trained in AI, and identify skill development pathways for the roles most likely to be affected by artificial intelligence:

The Consortium will leverage its members and advisors to recommend and amplify reskilling and upskilling training programs that are inclusive and can benefit multiple stakeholders – students, career changers, current IT workers, employers, and educators – in order to skill workers at scale to engage in the AI era.

Advisors include labor unions, the European Vocational Training Association, Khan Academy, and other training and labor organizations.

“The mission of our newly unveiled AI-Enabled Workforce Consortium is to provide organizations with knowledge about the impact of AI on the workforce and equip workers with relevant skills. We look forward to engaging other stakeholders—including governments, NGOs, and the academic community—as we take this important first step toward ensuring that the AI revolution leaves no one behind.” - Francine Katsoudas, Executive Vice President and Chief People, Policy & Purpose Officer, Cisco.

AI Opinions and Articles

Jon Stewart, in a recent “Daily Show” piece on AI, gives us a humorous and cynical take on how AI is coming for our jobs - hard and fast. He reminds us that the “Retraining” buzzword has been used by politicians for decades, but as he puts it:

At least those other disruptions took place over a century or decades, AI is ready to take over by Thursday.

Whether it’s for comedic effect or real, his “AI get off my lawn” act is a fitting rejoinder to the “ICT Workforce Consortium” effort above. The corporate buzzwords in that press release don’t hide the fact that no specific solutions are outlined. Nobody knows how to mitigate the side-effects of this inevitable technology shift.

In a similar vein, Billie Eilish, Nicki Minaj, Jon Bon Jovi and over 200 artists call for protections against “predatory use of AI.” They want an end to the use of AI to devalue human artists, saying that the “assault on human creativity must be stopped.”

“We must protect against the predatory use of AI to steal professional artists’ voices and likenesses, violate creators’ rights, and destroy the music ecosystem.”

Most points are not really arguable, and there are some basic copyright protections that can and should be given to human artists. Artists’ voices should be licensed; copycat compositions or derivative works of art, whether image, sound or video, should compensate original authors, etc.

However, beyond that the assertions are vague and there are no specific solutions. Perhaps because there are none. The Pandora’s box of AI-generated creativity has been opened. Even if we did fairly compensate artists for use of their prior work, brand or likeness, future creativity is fundamentally changed, making it harder for artists going forward. AI-enabled creativity, composition and art is here to stay.

A Look Back …

AI music generation is in the news now, but its development and progress have been years in the making.

OpenAI announced Jukebox in April 2020: “Provided with genre, artist, and lyrics as input, Jukebox outputs a new music sample produced from scratch.” They also shared a research paper on the capability.