AI Week In Review 24.11.17

Qwen 2.5 Coder, Gemini-Exp-1114, Suno V4, NexusFlow Athene V2, Pleias Common Corpus, Gemini for iOS goes global, InVideo v3, Nous Chat and Forge Reasoning API, Apple Final Cut Pro 11, DeepL Voice.

AI Tech and Product Releases

Alibaba's Qwen team has released the Qwen2.5-Coder series, including a 32B coding model, Qwen2.5-Coder-32B, that achieves SOTA performance on coding tasks, matching GPT-4o. The full Qwen2.5-Coder suite consists of six coding models: 0.5B, 1.5B, 3B, 7B, 14B, and 32B. These models are open source, support context windows of up to 128K tokens, and were trained to be practical for code generation, repair, and reasoning.

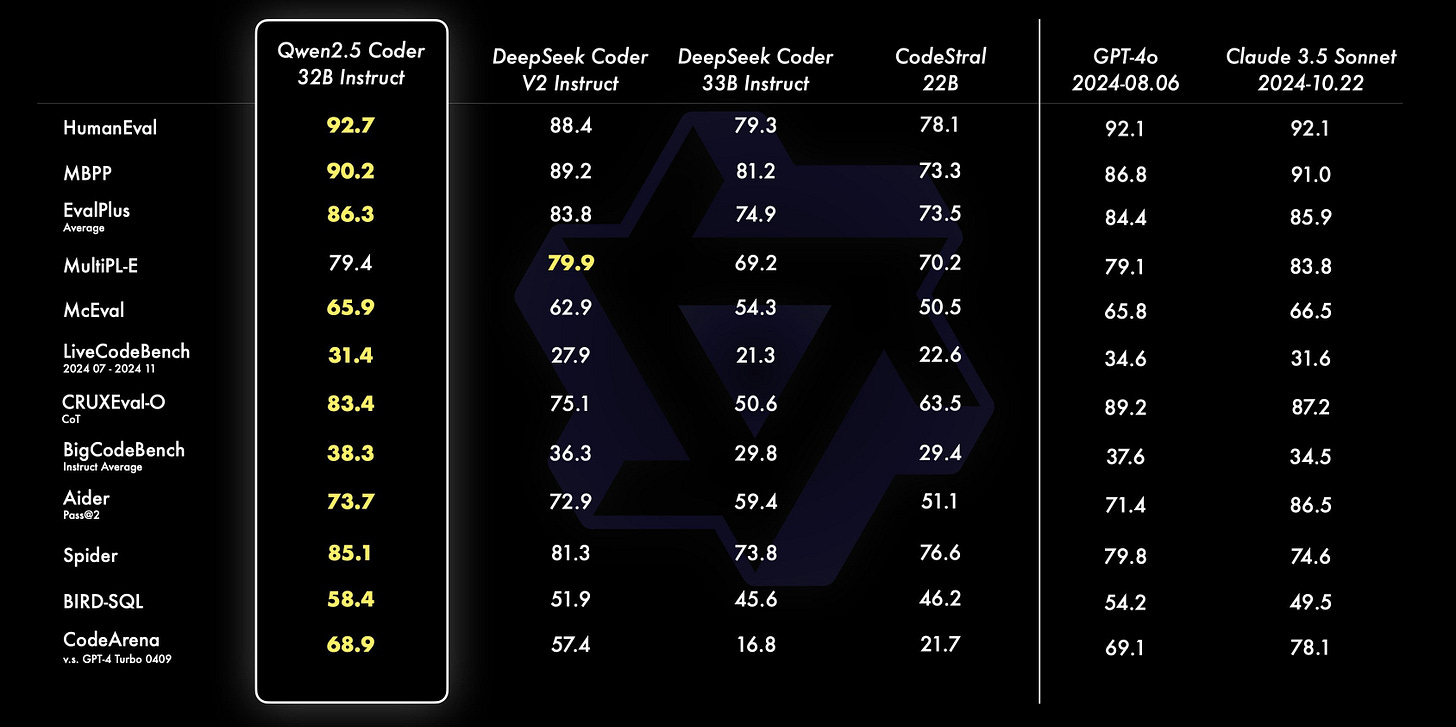

The benchmarks for Qwen2.5-Coder-32B are stellar: HumanEval 92.7, CodeArena 68.9, and Aider 73.7. However, real-world reviews have been mixed, so your mileage may vary. Whether or not it is as good as Claude 3.5 Sonnet for you, this is easily the best open-source AI coding model you can run locally. I've already pulled it from Ollama, and it is also available on HuggingFace, both for download and through a demo with an artifacts interface. The Qwen team has updated their Tech Report to cover the latest model releases.

Google released a new Gemini update, Gemini-Exp-1114, via the Gemini API, and it is the new leading LLM on the LMSys Chatbot Arena. It also seems to be good at reasoning and helpful in VS Code. There is speculation that it might be a Gemini 2 preview, since it was released quietly and has an oddly limited 32K context window.

Suno released V4, the latest version of its AI music generation tool. V4 adds "remastering," which lets you improve sound quality. Many V4 demos have been shared, showing that Suno V4 is more high-fidelity, musical, and realistic than ever. Listen and judge for yourself: Ben Nash says it "literally rocks"; Alex Volkov of Thursdai shares a great acoustic song about Colorado; the voice in this acoustic song sounds so human I can't believe it's AI; and here's a SOTA jingle.

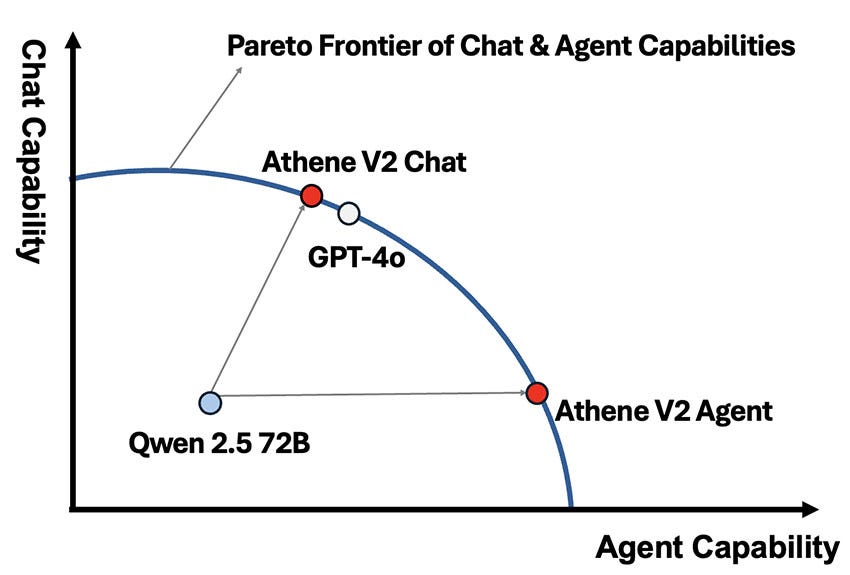

NexusFlow announced Athene-V2, a pair of 72B models fine-tuned from Qwen 2.5 72B for two targeted applications:

Athene-V2-Chat-72B is a state-of-the-art chat model that competes with GPT-4o on general chat capabilities.

Athene-V2-Agent-72B has both chat and agent capabilities, pairing concise, directive chat responses with function-calling performance that surpasses GPT-4o on the Nexus-V2 function calling benchmarks.

The models are open-weights LLMs available on HuggingFace. The Athene-V2 releases are an example of how tuning AI models for specific skills can make smaller LLMs competitive with frontier AI models on targeted tasks.

Pleias has announced the release of Common Corpus, a fully open LLM training dataset of over 2 trillion tokens of permissively licensed content. This removes a barrier to the development of open-source AI models. Common Corpus is available on HuggingFace.

Google launched a dedicated app for its AI assistant, Gemini, on iOS globally. The new app supports text-based prompts in 35 languages and introduces Gemini Live for conversations in 12 languages, alongside image generation through Imagen 3.

Indian video editing platform InVideo launched InVideo v3.0, an AI video generation platform allowing users to generate videos from text prompts in a range of styles, including live-action and anime. InVideo aims to simplify AI-generated video creation for individuals, creators, and small businesses.

Nous Research introduced the Forge Reasoning API Beta and Nous Chat. Nous Chat is their hosted interface for Hermes 3 70B, their powerful open-source LLM built for higher expression, long-form thinking, and individual alignment. The Forge Reasoning API adds a reasoning layer built on Mixture of Agents, Monte Carlo Tree Search (MCTS), and Chain of Code to help AI models reason better.

The Forge Reasoning API allows you to take any popular model and superpower it with a code interpreter and advanced reasoning capabilities. Our evaluations demonstrate that Forge augments the Hermes 70B model to be competitive with much larger models from Google, OpenAI and Anthropic in reasoning benchmarks.

Apple released Final Cut Pro 11, the latest version of its AI-enabled video editing software. This version uses Apple's M-series chips to power AI features such as Magnetic Mask for isolating people and objects, Transcribe to Captions for accurate closed captions, and spatial video editing for content that can be viewed on Apple Vision Pro.

German AI startup DeepL, which offers translation services similar to Google Translate, introduced DeepL Voice, which translates live conversations and video calls in real time across 33 languages, delivering the translation as text captions. Full voice output may come in a future release. DeepL builds custom LLMs optimized specifically for translation.

YouTube released a new AI music generation feature that allows creators to remix songs by describing style changes. This “Restyle a track” option generates a 30-second snippet for use in Shorts, maintaining the original song’s vocals and lyrics while altering genre or mood.

Patience, Jimmy. OpenAI is planning to launch "Operator," an AI agent that controls your computer, in January. The agent is expected to execute tasks directly in a web browser and will initially launch through OpenAI's developer API.

AI Research News

Our Research Roundup this week focused on recent progress in AI reasoning, covering these research results:

GSM-Symbolic: The Limits of Mathematical Reasoning in LLMs

TTT: The Surprising Effectiveness of Test-Time Training for Abstract Reasoning

ScaleQuest: Unleashing Reasoning Capability of LLMs via Scalable Question Synthesis

Entropix: Overcoming Limitations of Mathematical Reasoning in LLMs

SMART: Self-learning Meta-strategy Agent for Reasoning Tasks

Agents Thinking Fast and Slow: A Talker-Reasoner Architecture

The Test-Time Training (TTT) result is particularly notable: the method achieved 61% on the ARC benchmark, comparable to human-level performance on a benchmark known to be difficult for LLMs. By comparison, GPT-4o scores only 9% on ARC.

Epoch AI released FrontierMath, a new, highly challenging benchmark for advanced math reasoning in AI that even top mathematicians struggle to solve. Top AI models did poorly, solving fewer than 2% of FrontierMath problems.

A new paper, Scaling Laws for Precision, formulates precision-aware scaling laws for both pre- and post-training. The authors find that models become harder to quantize after training the more they are overtrained on data, so that eventually additional pretraining data can be harmful if the model will be quantized post-training. They also find that pretraining at FP6 or FP8 might be compute-optimal. A thread on X describes the findings further.

AI Business and Policy

Reuters reports that "AI is hitting a wall," as new reports highlight fears of diminishing returns from traditional LLM training. We covered this trend earlier in the week in our article "Is LLM scaling hitting a barrier?" Industry concern is growing that LLMs are reaching a performance plateau, a concern that rumors seem to confirm. However, Sam Altman has struck back at the pessimism with a simple X post:

there is no wall

Amazon is poised to roll out its newest AI chip, Trainium 2, in December, as the Big Tech group seeks to reduce its reliance on market leader Nvidia for AI capability.

Anthropic has hired its first "AI welfare" researcher as concern over potential future AI suffering grows. Kyle Fish joins Anthropic's alignment science team to explore whether advanced AI models deserve moral consideration and protection.

Cursor has acquired Supermaven, bringing together two popular AI coding tools.

“Soon, we will merge the intelligence of our custom Tab model with the speed and codebase understanding of Supermaven.”

It’s on again. Elon Musk revived his lawsuit against OpenAI, adding Microsoft, Reid Hoffman, and Dee Templeton as defendants. The amended complaint alleges anticompetitive practices between OpenAI and Microsoft, harming Musk’s AI company xAI. OpenAI is accused of abandoning its nonprofit mission to benefit from partnerships that violate antitrust laws.

OpenAI's tumultuous early years were revealed in emails from Musk, Altman, and others. Emails made public through Elon Musk's lawsuit against OpenAI expose internal debate and conflict over OpenAI's corporate structure and mission, as well as concerns among OpenAI leaders about Musk's leadership. They also show that OpenAI considered acquiring AI chipmaker Cerebras in 2017, a deal that never materialized.

Venture investors show no sign of AI fatigue as the sector raised $18.9 billion in Q3, accounting for 28% of all venture funding. Notably, OpenAI secured a record-breaking $6.6 billion round, one of six AI deals over $1 billion this year.

François Chollet, a leading AI researcher and the creator of Keras, is leaving Google to start a new company. In his farewell post on X, he expressed gratitude for his time at Google, during which deep learning grew into a major industry. Chollet has been skeptical of LLMs' ability to reason, but he remains optimistic about the future of AI.

Unlike some people working on AGI, I don't want to create God. I want to give humanity the tools it needs to control its own destiny. – François Chollet

U.K. mobile network O2 has created an "AI granny" called Daisy to outsmart scammers by engaging them in lengthy conversations filled with irrelevant personal stories and details. Daisy uses human-like rambling chat to waste the scammers' time, keeping them on the line for up to 40 minutes at a time.

The European Union is developing compliance guidance for its new law on AI. It has launched a consultation seeking input on how the law defines AI systems and examples of software that should be excluded.

AI Opinions and Articles

Anthropic CEO Dario Amodei believes scaling up models remains a viable path toward more capable AI, despite the challenges. Amodei sat with Lex Fridman for almost 3 hours, discussing his thoughts on AGI timelines, his essay "Machines of Loving Grace" about a positive future with AI, and his concerns about the economic and ethical implications of AI. While suggesting that AGI could be achieved by 2026 or 2027, he acknowledged the unpredictability of AI development and the difficulty of controlling current models.

I don’t want to represent this [suggesting AGI in 2026 or 2027] as a scientific prediction. People call them scaling laws. That’s a misnomer. Like Moore’s law is a misnomer. Moore’s laws, scaling laws, they’re not laws of the universe. They’re empirical regularities. I am going to bet in favor of them continuing, but I’m not certain of that. – Anthropic CEO Dario Amodei.