AI Week In Review 24.12.28

DeepSeek-V3, QVQ, CogAgent-9B, Hume OCTAVE, OpenRouter Web Search, ModernBERT, Coconut latent space reasoning, OpenAI sheds non-profit status to become a Public Benefit Corp. xAI gets funding.

AI Tech and Product Releases

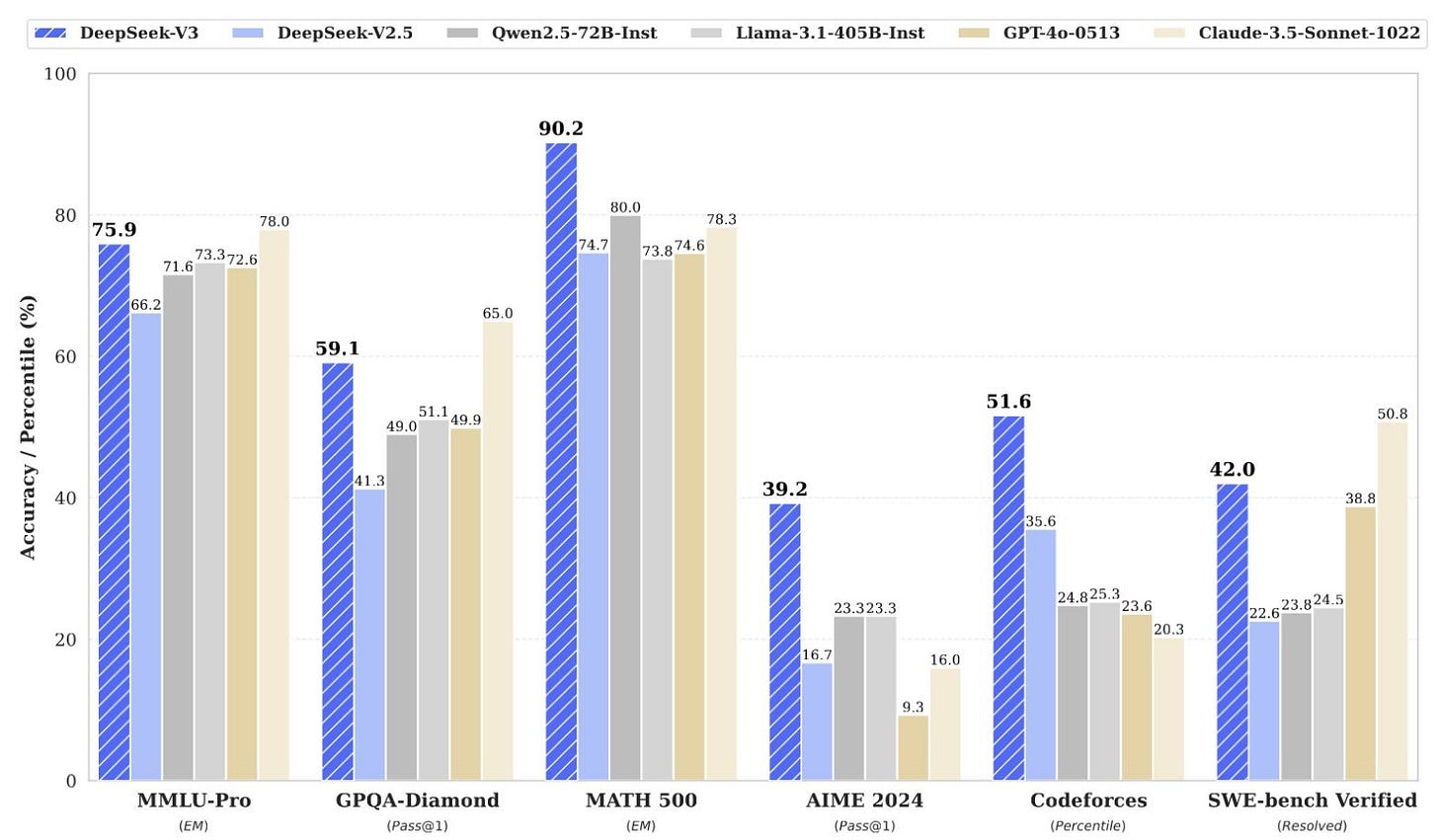

The Chinese AI firm DeepSeek released DeepSeek-V3, an open-source LLM that outperforms many existing models, including GPT-4o and Claude 3.5, on coding and text-based tasks. DeepSeek-V3 is a 671B parameter fine-grained Mixture-of-Experts (MoE) model that activates only 37B parameters per token. It was trained on 14 trillion tokens of data in just 2.8 million GPU hours, significantly less than comparable models, making it one of the most efficient large-scale models in both training and inference.
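To put those efficiency figures in perspective, here is a rough back-of-the-envelope calculation using only the rounded numbers quoted above. The function names are made up for illustration, and the results are only as precise as the rounded inputs.

```python
# Rounded figures as quoted in the paragraph above.
TOTAL_PARAMS = 671e9      # total parameters in the MoE model
ACTIVE_PARAMS = 37e9      # parameters activated per token
TRAINING_TOKENS = 14e12   # training tokens
GPU_HOURS = 2.8e6         # training GPU hours


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active / total


def tokens_per_gpu_hour(tokens: float, gpu_hours: float) -> float:
    """Average training throughput implied by the quoted totals."""
    return tokens / gpu_hours


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    rate = tokens_per_gpu_hour(TRAINING_TOKENS, GPU_HOURS)
    print(f"Active per token: {frac:.1%} of all parameters")
    print(f"Training throughput: about {rate:,.0f} tokens per GPU hour")
```

In other words, each token touches only a small slice of the full model, which is where the inference savings of an MoE design come from.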

DeepSeek-V3’s impressive performance validates that AI model efficiency can still improve with mixture-of-experts (MoE) architecture. It also confirms there is no moat. Chinese AI labs are very capable of competing with the top US AI labs, and open weights AI models are fast followers of leading proprietary AI models.

You can access DeepSeek-V3 via the DeepSeek chat interface or via their API at a low price of 14 cents per million input tokens. The open-weights DeepSeek-V3 is also on Hugging Face, but the 671 billion parameter MoE LLM is too large to run locally on typical hardware.
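As a quick sanity check on both points, the sketch below estimates API input cost at the quoted 14 cents per million tokens and the memory needed just to hold 671 billion weights. The bytes-per-parameter values are assumptions for illustration (1 byte for 8-bit weights, 2 bytes for 16-bit), output-token pricing is not covered, and the helper names are hypothetical.

```python
PRICE_PER_MILLION_INPUT_TOKENS_USD = 0.14  # price quoted in the text
TOTAL_PARAMS = 671e9                       # parameter count quoted in the text


def input_cost_usd(num_tokens: int,
                   price_per_million: float = PRICE_PER_MILLION_INPUT_TOKENS_USD) -> float:
    """Cost in US dollars of sending num_tokens input tokens to the API."""
    return num_tokens / 1_000_000 * price_per_million


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory (in GB, 1 GB = 1e9 bytes) needed to store the weights alone.

    bytes_per_param is an assumption: 1 for 8-bit weights, 2 for 16-bit.
    Activations and caches would need additional memory on top of this.
    """
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"10M input tokens: ${input_cost_usd(10_000_000):.2f}")
    print(f"Weights at 8-bit:  {weight_memory_gb(TOTAL_PARAMS, 1):,.0f} GB")
    print(f"Weights at 16-bit: {weight_memory_gb(TOTAL_PARAMS, 2):,.0f} GB")
```

Even at 8 bits per weight, the model needs several hundred gigabytes for weights alone, far beyond a single consumer GPU.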

Perhaps due to training on ChatGPT outputs, DeepSeek-V3 frequently misidentifies itself as ChatGPT. The model provides instructions for using OpenAI’s API and repeats GPT-4's jokes. Training on another model's outputs may violate OpenAI’s terms of service and can lead to hallucinations and misleading answers in DeepSeek-V3.

Copycat AI model behavior is not unique to DeepSeek AI models. Contractors evaluating Google’s Gemini AI compare its answers against Anthropic’s Claude. Contractors noted Claude’s responses to be safer compared to Gemini’s, which sometimes produced content flagged as a "huge safety violation." This practice raises questions about permission, as Anthropic forbids using Claude for training competing models without approval.

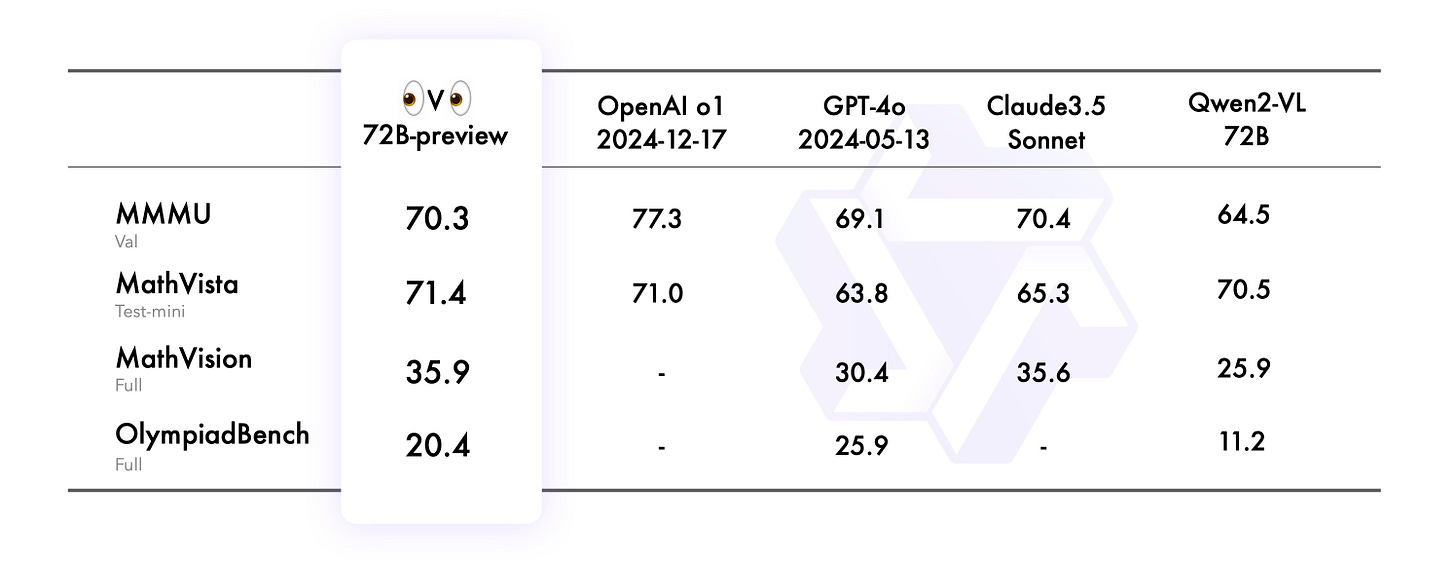

Qwen released QVQ, an open-weight visual reasoning model that it describes as the world’s first and that outperforms GPT-4o and Claude 3.5 on visual understanding benchmarks, including MMMU and MathVista. QVQ is built upon Qwen2-VL-72B and uses inference-time compute (the approach used in OpenAI's o1 model) to enhance its visual reasoning capabilities. While QVQ demonstrated excellent performance, it is an experimental model and has limitations, such as hallucinations and getting stuck in circular logic patterns.

The open-weights Qwen QVQ 72B is available to download from Hugging Face or to try out on Hugging Face Spaces.

CogAgent-9B, an update to the CogAgent open-source VLM-based GUI agent, has been released. The CogAgent-9B-20241220 model is based on GLM-4V-9B, a bilingual open-source VLM base model, and this new release achieves significant advancements in GUI perception, inference prediction accuracy, action space completeness, and task generalizability.

Hume has released OCTAVE, their next-generation 3B speech-language model, which can create voices and personalities:

With a prompt like “scholarly wizard mentor,” OCTAVE not only generates a high-quality voice, but a new personality, accent, expressions, and accompanying language—in less than 300 ms.

Hume's website demonstrates OCTAVE-based voice cloning from brief sound clips (for example, of Ilya Sutskever's voice) and a variety of their voice personalities.

OpenRouter added Web Search Grounding to their interface, enabling AI-based web search across the more than 300 models served in the OpenRouter chat interface.

xAI is testing a standalone iOS app for its chatbot Grok. The app integrates real-time data from the web and X, offering features like text rewriting, Q&A, image generation from text, and summarization. xAI is also preparing a Grok.com dedicated website for web access to Grok beyond X.com.

AI Research News

Our AI Research Review for this week covered these important recent research results:

DeepSeek shared further DeepSeek-V3 details in DeepSeek-V3 Technical Report

Qwen2.5 Technical Report

ModernBERT: A Fast, Memory Efficient, and Long Context Bidirectional Encoder for Finetuning and Inference

Coconut: Training LLMs to Reason in a Continuous Latent Space

Recent research shows that AI model quantization, a technique used to reduce computational demands by lowering bit precision, may limit LLM performance more than expected. This research was reported in the paper “Scaling Laws for Precision.” This finding could impact AI companies that rely on quantization to reduce inference costs for large models.
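To make the idea of "lowering bit precision" concrete, here is a minimal sketch of symmetric uniform quantization in plain Python. It is a generic textbook illustration, not the method or the experiments from the paper, and the sample weights and function names are invented for the example.

```python
def quantize(values: list[float], bits: int) -> list[float]:
    """Round each value to the nearest of a small set of evenly spaced levels.

    With `bits` bits of signed precision there are 2**(bits - 1) - 1 positive
    levels (127 for 8 bits, 7 for 4 bits). The result is mapped back to floats
    so it can be compared directly with the original values.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for signed quantization")
    levels = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return list(values)
    scale = max_abs / levels  # size of one quantization step
    return [round(v / scale) * scale for v in values]


def mean_abs_error(original: list[float], approx: list[float]) -> float:
    """Average absolute difference between original and quantized values."""
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -0.41, 0.07, -0.99, 0.33, 0.005]

if __name__ == "__main__":
    for bits in (8, 4, 2):
        err = mean_abs_error(weights, quantize(weights, bits))
        print(f"{bits}-bit mean absolute error: {err:.4f}")
```

On this toy example the rounding error grows as the bit width shrinks, which is the basic trade-off between inference cost and model quality that the research examines at scale.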

AI Business and Policy

OpenAI’s ChatGPT Search can be fooled into generating misleading summaries. The Guardian reports that by inserting hidden text into websites, they could make ChatGPT ignore negative reviews and generate entirely positive summaries or even produce malicious code.

OpenAI plans to transition to a Public Benefit Corporation to advance its mission on AGI. OpenAI aims to balance shareholder interests with public benefit through a Delaware PBC structure, allowing it to raise capital conventionally while its existing nonprofit receives shares in the new entity.

OpenAI co-founder Elon Musk and competitor Meta have argued in court against OpenAI’s transition, raising concerns about philanthropic integrity and the financial implications. The non-profit AI safety organization Encode filed an amicus brief supporting Elon Musk’s request for an injunction against OpenAI's transition to a for-profit company. Encode argues that this conversion would undermine OpenAI’s mission to prioritize AI safety over financial gains.

Microsoft and OpenAI have a profit-based definition of artificial general intelligence (AGI). According to an agreement between the two companies, OpenAI will be considered to have achieved AGI only once its AI systems can generate at least $100 billion in profits, a milestone it aims to achieve by 2029. This redefinition ensures Microsoft retains access to OpenAI’s technology for years, no matter how rapidly AI develops.

Elon Musk's xAI secured an additional $6 billion in new funding, cementing its rise as a significant player in the AI landscape. xAI started with around 12 employees in March 2023 but has since grown to over 100 staff members, and remarkably built their “Colossus” 100,000 Nvidia GPU super-cluster in just 122 days. xAI plans to train its models on data from various Musk companies like Tesla and SpaceX, while also leveraging its ties to the X (formerly Twitter) platform.

Two big defense tech players, Palantir and Anduril, are in talks with tech companies like SpaceX, OpenAI, Saronic, and Scale AI to form a consortium for Pentagon contracts. The goal is to challenge traditional contractors such as Lockheed Martin and Raytheon by offering more efficient ways to provide advanced technology to the government. Initial partnerships could be announced as soon as January.

OpenAI is considering developing its own humanoid robot, a venture it abandoned in 2021. OpenAI has also financially backed firms like Figure and Physical Intelligence, indicating renewed interest in merging AI advancements with robotics.

CES preview: Nvidia’s CEO Jensen Huang will kick off CES 2025 with a keynote that is expected to include announcements on the RTX 5000 series GPUs and discussions of AI, robotics, and more, leveraging the company's foundational role in the current AI boom. As Nvidia dominates the AI chip market, AMD must compete by presenting its next-generation GPU, potentially branded as the RX 8000 or RX 9000 series under its RDNA 4 lineup.

European AI startups are thriving, despite a flat year for venture funding in Europe. AI startups received 25% of VC funding in Europe this year, up from 15% four years ago, resulting in new unicorns like Poolside and Wayve.

Coralogix has acquired Aporia, an AI observability startup, integrating its technology into a new unified monitoring platform for both traditional and AI workloads.

President-elect Trump has appointed Sriram Krishnan as his AI advisor, with the official role of senior policy advisor for AI at the White House Office of Science and Technology Policy. Krishnan brings a background in venture capital and leadership roles at Microsoft and Twitter, and he will work with David Sacks, Trump’s crypto and AI czar, to shape and coordinate AI policies across government agencies.

AI Opinions and Articles

There has been a lot of reaction to the o3 model release and its stunning 87.5% score on the ARC-AGI benchmark, which matches human-level performance and has ignited debate about how close o3 gets us to AGI. Frank Andrade on X points out that the o3 model still gets some obvious problems wrong.

Finally, Michael Spencer at AI Supremacy covers the top 10 China AI Stories in 2024. He asks:

The Western media keeps underplaying the capabilities of Chinese Gen AI startups, but for how much longer can they keep doing that?

His article highlights areas where Chinese AI firms are competitive, such as AI video generation models and robotics. He notes the Qwen 2.5 models and DeepSeek-V3 as the latest and greatest examples of Chinese AI firms executing well on SOTA AI model development.

Chinese AI firms are improving on capable AI models very quickly, and they are embracing more open AI development, which is better for everyone in the long term and a challenge to proprietary AI firms. We should stop underestimating Chinese AI.