AI Week in Review 25.08.02

BFL's Flux.1 Krea [dev], Gemini Deep Think, GLM-4.5, Qwen3 Coder-Flash & 30B-A3B-Thinking, Cogito v2, Runway Aleph, Hunyuan World 1.0, Step-3, Wan2.2, Ideogram Character, Manus Wide Research.

Top Tools

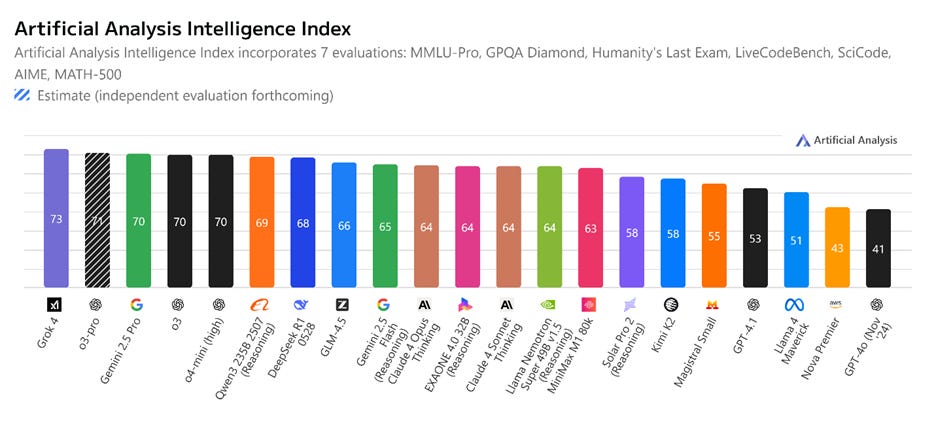

Google is releasing Deep Think in the Gemini app for Google AI Ultra subscribers, a productized version of the Deep Think model that recently scored at gold-medal level at the International Mathematical Olympiad. Deep Think uses parallel thinking techniques and longer thinking time to scale compute on reasoning. It crushes math and code benchmarks: 34.8% on HLE without external tools, 86.6% on LiveCodeBench, and 99.2% on AIME 2025. By these measures, Deep Think is the smartest AI model yet.

DeepMind is giving a special version of Deep Think to mathematicians to try out, but they point out its uses are much broader:

Deep Think’s strengths go beyond supercharging math discovery. It can also perform well at work that needs creativity and strategic planning, including tackling tough coding problems as well as iterative web design.
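To make the "parallel thinking" idea concrete, here is a minimal sketch of one well-known way to spend more compute on reasoning: run several independent attempts in parallel and keep the majority answer. This is not Google's method, which has not been published in detail; `sample_answer` is a hypothetical, deterministic stand-in for a real model call.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def sample_answer(attempt: int) -> int:
    """Hypothetical stand-in for one independent reasoning attempt.

    Toy behavior: 3 of every 5 attempts reach the answer 42,
    the other 2 go astray in different directions.
    """
    wrong = {0: 41, 1: 43}
    return wrong.get(attempt % 5, 42)


def parallel_think(n_attempts: int) -> int:
    """Run n_attempts in parallel and return the most common answer."""
    with ThreadPoolExecutor(max_workers=8) as pool:
        answers = list(pool.map(sample_answer, range(n_attempts)))
    # Majority vote: individual attempts can be wrong, but errors that
    # disagree with each other get outvoted by the consistent answer.
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    print(parallel_think(10))
```

In this toy setup a single attempt is wrong 40% of the time, yet the vote over ten attempts settles on the consistent answer. More attempts cost more compute, which is the trade-off being scaled.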

AI Tech and Product Releases

Chinese startup Zhipu AI (now Z.ai) released GLM-4.5, an open MoE AI reasoning model with 355B parameters and 32B active parameters, and GLM-4.5-Air, a smaller MoE with 106B total parameters and 12B active parameters. GLM-4.5 and GLM-4.5-Air were trained to support reasoning, coding and agentic applications, with a hybrid-reasoning architecture that supports both “thinking” and “non-thinking” modes. GLM-4.5 is available on HuggingFace. We wrote earlier this week on GLM-4.5 and the Rise of Chinese AI here.
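For readers new to MoE models, the gap between total and active parameters is the key number: only a subset of the weights is used for each token. The sketch below computes that share from the figures quoted above. The class and function names are illustrative, and treating per-token compute as proportional to active parameters is only a rough approximation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEModel:
    name: str
    total_b: float   # total parameters, in billions
    active_b: float  # parameters active per token, in billions

    @property
    def active_fraction(self) -> float:
        """Share of the weights used for any single token."""
        return self.active_b / self.total_b


# Figures as quoted in this newsletter.
MODELS = [
    MoEModel("GLM-4.5", 355, 32),
    MoEModel("GLM-4.5-Air", 106, 12),
]


def report(models):
    """Format one summary line per model."""
    return [
        f"{m.name}: {m.active_fraction:.1%} of weights active per token"
        for m in models
    ]


if __name__ == "__main__":
    for line in report(MODELS):
        print(line)
```

Both models activate roughly a tenth of their weights per token, which is why a large MoE can run with the per-token cost of a much smaller dense model while still needing memory for all of its parameters.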

The Alibaba Qwen team followed last week's Qwen3-Coder and Qwen3-2507 releases with two smaller coding and thinking models this week:

Qwen3-Coder-Flash-2507 is a coding-focused 30B parameter MoE model with 3B active parameters. It offers 256K context, high throughput for local use, and built-in support for agentic coding tasks. The FP8-quantized Qwen3-Coder-30B-A3B-Instruct-FP8 is available on HuggingFace and on Ollama for lower-memory local use.

Qwen3-30B-A3B-Thinking-2507 is the “thinking” variant of the 30B parameter MoE with 3.3B active parameters, boasting a 256K token context window and strong reasoning capabilities on benchmarks like MMLU and GPQA. An FP8-quantized Qwen3-30B-A3B-Thinking-2507-FP8 is available on HuggingFace.

Cogito has released the Cogito v2 open source hybrid reasoning models in 4 sizes: 70B, 109B MoE, 405B, and 671B MoE. The largest 671B MoE model is amongst the strongest open AI reasoning models in the world, on par with the latest DeepSeek R1 models but with shorter reasoning chains. Cogito trained reasoning on 4 non-reasoning base models (Llama 3.3 70B, Llama 4 109B MoE, Llama 3.1 405B, DeepSeek v3 671B MoE) using Iterated Distillation and Amplification (IDA) to internalize the reasoning process and scale model reasoning. They describe this as iterative self-improvement (AI systems improving themselves).

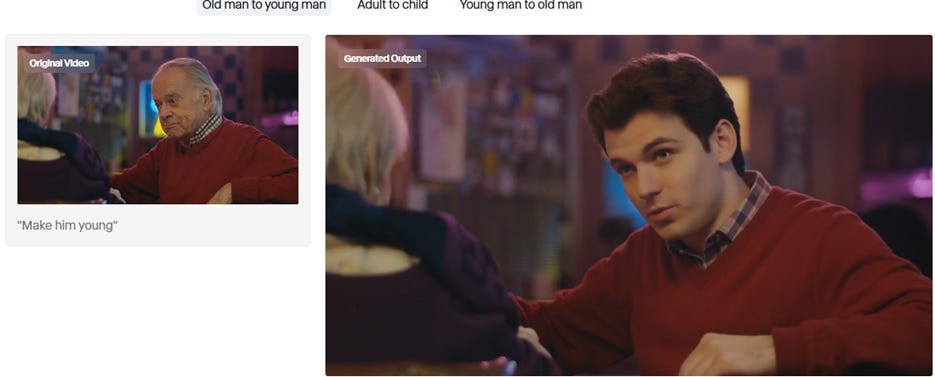

Runway introduced Runway Aleph, adding chat-based editing to its AI video model for scene manipulation. From just conversational prompts, users can remove, change, or add people and background scenery, as well as change effects and camera angles. This is next-level interactive video editing.

Blaine Brown used it to modify the Lil Yachty dancing on-stage meme and says: “I just got access to RunwayML Aleph & it's insane!”

Tencent released Hunyuan3D World Model 1.0, the first open-source generator of explorable 3D worlds from text or images, producing explorable meshes suitable for games and modeling.

Complex scenes require about 33 GB VRAM. HunyuanWorld 1.0 is a game-changer for game developers and digital content creators. HunyuanWorld-1 is available on HuggingFace, with more information on their Project Page and their published technical report: “HunyuanWorld 1.0: Generating Immersive, Explorable, and Interactive 3D Worlds from Words or Pixels.”

StepFun has released Step-3, a multimodal reasoning MoE model with 321B parameters and 38B active parameters, providing “cost-effective multimodal intelligence.” Step-3 scores 74% on MMMU and 64% on MathVision benchmarks. StepFun published a paper on Step-3’s design, describing how Step-3 achieves higher model inference efficiency via architecture features such as Multi-Matrix Factorization Attention (MFA). Step-3 is available as an open model (Apache 2.0 license) on HuggingFace.

Alibaba introduced Wan2.2, an open-source MoE video model that generates 5-second 720p videos from text and images on a single 4090 GPU. It supports both text2video and image2video pipelines with diffusers. Available on Hugging Face, Wan2.2 marks a major step up in the AI video generation quality available to consumers.

Black Forest Labs and Krea AI released FLUX.1 Krea [dev], a 12B parameter text-to-image transformer with open weights under a non-commercial license. It delivers distinctive “natural grain” outputs that look like real photographs, outperforms many open peers in prompt adherence, and supports LoRA-based fine-tuning. Black Forest Labs says of FLUX.1-Krea-dev:

FLUX.1 Krea [dev] overcomes the oversaturated 'AI look' to achieve new levels of photorealism with its distinctive aesthetic approach.

Ideogram launched Ideogram Character, a character consistency feature that is available free on Ideogram. Users upload a single reference image to generate unlimited consistent variants, with inpainting support.

Microsoft introduced Copilot Mode in Edge, infusing AI-enabled features into their browser. Copilot supports voice navigation and can see all your open tabs so it can understand the full context of what you’re exploring online. Microsoft CEO Satya Nadella says:

My favorite feature is multi-tab RAG. You can use Copilot to analyze your open tabs, like I do here with papers our team has published in @Nature journals over the last year.

AI agent startup Manus AI has introduced "Wide Research," an experimental feature for large-scale, high-volume tasks. It runs over 100 AI agents in parallel on a single goal, like comparing 100 sneakers or generating 50 design styles. In their “Wide Research” blog post, Manus said their agent is a “personal cloud computing platform” and they want to scale AI compute for users. Wide Research is rolling out to their Pro users.
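The fan-out pattern behind a feature like this can be sketched in a few lines: split one goal into many items, give each item to its own agent, and gather the results. This is an illustration of the general pattern only, not Manus's implementation. `run_agent`, `wide_research`, and `compare_sneakers` are hypothetical names, and the agent body is a placeholder for real model and tool calls.

```python
import asyncio


async def run_agent(item: str) -> dict:
    """Hypothetical sub-agent: researches one item and returns a record."""
    await asyncio.sleep(0)  # placeholder for real model/tool calls
    return {"item": item, "summary": f"notes on {item}"}


async def wide_research(items: list[str], max_parallel: int = 100) -> list[dict]:
    """Fan one goal out to many agents, capped at max_parallel at a time."""
    limit = asyncio.Semaphore(max_parallel)

    async def bounded(item: str) -> dict:
        async with limit:
            return await run_agent(item)

    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*(bounded(i) for i in items))


def compare_sneakers(n: int = 100) -> list[dict]:
    """Example goal from the text: one agent per sneaker."""
    return asyncio.run(wide_research([f"sneaker-{i}" for i in range(n)]))


if __name__ == "__main__":
    results = compare_sneakers()
    print(len(results), results[0])
```

The semaphore is the main design choice here: it keeps the number of simultaneous agents bounded, so the same code works whether the goal splits into 50 items or 5,000.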

CharmBracelet open-sourced Crush, a VSCode-inspired CLI coding agent with built-in subagent support. It integrates open-source LLMs directly into terminal workflows with discoverable commands and permission-controlled tool calls.

Embodied AI robotics company LimX Dynamics launched its full-size general-purpose humanoid robot, LimX Oli. Aimed at AI researchers, robotics developers, and solution integrators, LimX Oli features 31 degrees of freedom and is available in three versions: Lite, EDU, and Super, with prices starting at RMB 158,000 ($21,800).

Quora’s AI platform Poe released an API allowing developers to access diverse AI models and bots for their applications. This API supports over 100 multimodal models and uses Poe's existing point-based subscription plans.

AI Research News

Google DeepMind announced AlphaEarth Foundations, a new AI model that integrates petabytes of Earth observation data from dozens of sources (satellite images, climate data) to describe the Earth in sharp detail at 10-meter resolution. Google DeepMind published a paper describing the work. Its compact representations require 16 times less storage than those of other AI systems it was compared against, while accurately and efficiently characterizing the planet’s entire terrestrial land and coastal waters.

AI Business and Policy

Nvidia has placed a new order with TSMC for 300,000 H20 chips as China demand surges. At the same time, the U.S. Department of Commerce is delaying Nvidia's H20 AI chip sales to China, due to a backlog of licensing applications, staff losses, and poor communication within the department. The decision to allow H20 sales to China has faced criticism, with some national security experts urging restrictions.

The AI data center buildout comes to Europe: OpenAI, Nscale, and Aker are launching Stargate Norway, their first European AI data center, near Narvik. This renewable-powered facility will have 230MW capacity, using 100,000 Nvidia GPUs by 2026, and OpenAI will purchase capacity from the $2 billion joint venture.

More details emerged on the Google and Cognition acquisitions of Windsurf. Google paid Windsurf $2.4 billion for its technology and top talent, splitting funds between investors and approximately 40 hired employees, primarily co-founders. This deal left most Windsurf employees without an initial payout. Subsequently, Cognition acquired what remained of Windsurf for an estimated $250 million, ensuring all remaining staff received financial benefits.

Anthropic has become the top enterprise choice, surpassing OpenAI in AI model usage. Anthropic now holds 32% of the enterprise market share by usage, compared to OpenAI's 25%, a reversal from two years ago. Its Claude 3.5 and 3.7 Sonnet models fueled this surge, and Anthropic also holds 42% of the coding market.

AI startup fundraising news:

OpenAI recently raised an oversubscribed $8.3 billion, reaching a $300 billion valuation and reporting $13 billion in annualized revenue with projections of $20 billion by year-end.

AI storage platform Vast Data is reportedly seeking a new funding round that could value the startup at up to $30 billion. Vast Data, which develops efficient storage for AI data centers, last raised funds in 2023 at a $9.1 billion valuation.

Applied AI research company Fundamental Research Labs secured $33 million in funding. The company develops multiple AI applications, including the general-purpose consumer assistant Fairies and the spreadsheet-based agent Shortcut, both of which are already generating revenue.

AI startup C8 Health raised $12 million to address the issue of fragmented clinical knowledge in healthcare. Their AI-powered platform centralizes and instantly delivers hospital best practices and protocols to staff via mobile, desktop, and EMRs.

AI Opinions and Articles

Meta CEO Mark Zuckerberg published “Personal Superintelligence,” his latest AI manifesto, declaring that “superintelligence is now in sight” and outlining his vision for AI. He advocates for “personal superintelligence that empowers everyone,” contrasting it with centrally automating work, a subtle jab at OpenAI.

However, Zuckerberg’s new statement lacks his earlier clear support for open source AI. It would be unfortunate if Meta moved away from its strong position as a provider of open AI models.

The rest of this decade seems likely to be the decisive period for determining the path this technology will take, and whether superintelligence will be a tool for personal empowerment or a force focused on replacing large swaths of society. – Mark Zuckerberg