AI Week in Review 25.08.16

Gemma 3 270M, SuperFly & ChickBrain / Llama 3.1 8B Slim, LFM2-VL, Matrix-Game 2.0, Matrix-3D, Nvidia Cosmos & Omniverse, Sonnet 4 gets 1 million token context, Jan-v1, gpt-oss-20b-base, CoAct-1.

Top Tools

Several small, high-efficiency AI models have been released recently. These “smol AI models” can be fine-tuned and are small enough to power AI directly on smartphones and other edge devices.

Google introduced Gemma 3 270M, a “hyper-efficient” open-source compact AI model that can run offline on smartphones and be quickly fine-tuned for specialized applications. Gemma 3 270M boasts excellent instruction-following, with the highest IFEval scores among comparably sized AI models:

Gemma 3 270M embodies this "right tool for the job" philosophy. It's a high-quality foundation model that follows instructions well out of the box, and its true power is unlocked through fine-tuning. Once specialized, it can execute tasks like text classification and data extraction with remarkable accuracy, speed, and cost-effectiveness.

Gemma 3 270M is available on Hugging Face.

Spain-based Multiverse Computing released small efficient AI models “SuperFly” and “ChickBrain.” These small AI models were created by compressing larger AI models with CompactifAI, a quantum-inspired compression algorithm that drastically reduces existing AI model size while maintaining model performance. ChickBrain, or Llama 3.1 8B Slim, is a 3.2B model based on Llama 3.1 8B that’s faster and cheaper “without sacrificing intelligence.”

Tiny AI model SuperFly is a compressed version of SmolLM2-135M with only 94 million parameters, intended for use in smart home devices:

SuperFly is designed to be trained on very restricted data, like a device’s operations. Multiverse envisions it embedded into home appliances, allowing users to operate them with voice commands like “start quick wash” for a washing machine.
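The parameter counts quoted above translate into compression ratios with simple arithmetic. The sketch below assumes the nominal sizes from the text (8B to 3.2B for ChickBrain) and assumes the "135" in the SuperFly base model's name denotes 135 million parameters; the function and variable names are illustrative only.

```python
def size_reduction(original_params: float, compressed_params: float) -> float:
    """Return the fraction of parameters removed by compression."""
    return 1.0 - compressed_params / original_params


# Nominal parameter counts as quoted in the text (assumed, not measured).
chickbrain_cut = size_reduction(8e9, 3.2e9)    # Llama 3.1 8B -> 3.2B
superfly_cut = size_reduction(135e6, 94e6)     # 135M base -> 94M

print(f"ChickBrain: {chickbrain_cut:.0%} fewer parameters")
print(f"SuperFly:   {superfly_cut:.0%} fewer parameters")
```

On these nominal figures, ChickBrain drops roughly 60% of its base model's parameters and SuperFly roughly 30%.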

Liquid AI released LFM2-VL, 440M and 1.6B parameter vision-language models that are optimized for low-latency, high-accuracy vision-language tasks. Combining the LFM2 Liquid Foundation Model backbone with vision encoders, these models boast a 2x speedup for on-device inference.

The LFM2-VL models are available on Hugging Face.

AI Tech and Product Releases

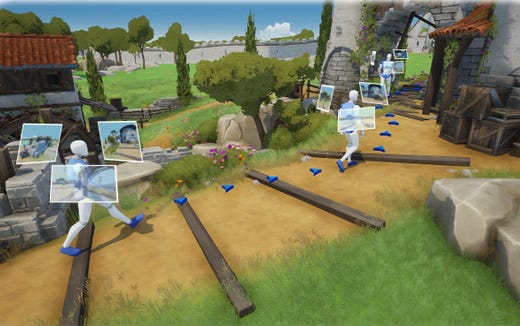

Skywork AI released two open-source world models as part of their Technology Release Week: Matrix-Game 2.0 and Matrix-3D. These models significantly advance interactive AI video and 3D world generation, pushing the boundaries of real-time, physics-aware simulations.

Matrix-Game 2.0 focuses on explicit user-driven interactivity and is fully open source, with technical documentation and the model downloadable from Hugging Face. Features include real-time distillation, frame-level action injection, and a massive interactive data pipeline.

Nvidia announced new Cosmos physical-AI models and new Omniverse robotics libraries. The Nvidia Omniverse™ libraries and Nvidia Cosmos™ world foundation models (WFMs) accelerate the development and deployment of robotics solutions, targeting perception-to-action workflows for sim, training and deployment across robots and industrial twins.

Anthropic’s Claude Sonnet 4 now supports a 1 million token context window, a five-fold increase over its previous limit. This enables Claude to process 75,000 lines of code in a single context. The company frames the jump as enabling entire codebases or dozens of papers per request. The larger window is available in public beta on the Anthropic API and Amazon Bedrock.
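The figures above imply two numbers worth making explicit: the size of the earlier context window and the average token budget per line of code. The short calculation below is a back-of-the-envelope sketch that uses only the values quoted in the text; the constant names are illustrative, and the per-line figure is an implied average that ignores room for instructions and output.

```python
# Values quoted in the text above.
NEW_CONTEXT_TOKENS = 1_000_000
INCREASE_FACTOR = 5
LINES_OF_CODE = 75_000

# Previous window implied by a five-fold increase.
previous_context_tokens = NEW_CONTEXT_TOKENS // INCREASE_FACTOR

# Average tokens available per line if the whole window held code.
tokens_per_line = NEW_CONTEXT_TOKENS / LINES_OF_CODE

print(f"Implied previous window: {previous_context_tokens:,} tokens")
print(f"Implied budget per line: {tokens_per_line:.1f} tokens")
```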

Anthropic's Claude Opus 4 and 4.1 can now end "persistently harmful or abusive" conversations as a last resort. Based on AI model welfare research, the feature applies to extreme cases like soliciting illegal or violent content and protects the AI model from harmful interactions or misaligned behavior.

Anthropic launched learning modes for Claude AI that guide users through step-by-step reasoning instead of providing direct answers. These new “learning modes” transform Claude into a teaching companion, emphasizing guided discovery over immediate solutions. Available for general Claude.ai and Claude Code, they aim to enhance human learning and provide an alternative to ChatGPT Study mode.

Menlo Research released Jan-v1, a fine-tuned version of Qwen3-4B-thinking that is optimized for SimpleQA tasks in local environments. Jan-v1 rivals Perplexity Pro on SimpleQA, operates locally and uses search data sources via MCP tools, effectively acting as an open-source local search assistant.

AI researcher Jack Morris created ‘gpt-oss-20b-base’ by reversing gpt-oss-20b alignment, yielding a “base” model that offers faster, uncensored responses. He shared his results on X and the model itself, gpt-oss-20b-base, on Hugging Face. It’s not a true base model but a LoRA fine-tune that undid alignment, making the model uncensored and useful for more tasks, while raising alignment and safety concerns.
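A LoRA fine-tune is cheap because it trains two small low-rank matrices per adapted weight instead of the full weight matrix. The sketch below counts the trainable parameters for one adapted layer. The dimensions and rank are hypothetical values chosen for illustration; they are not the settings used for gpt-oss-20b-base.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in one LoRA adapter.

    The adapter learns A (rank x d_in) and B (d_out x rank), so the
    update B @ A has the same shape as the frozen weight (d_out x d_in).
    """
    return rank * (d_in + d_out)


def full_param_count(d_in: int, d_out: int) -> int:
    """Parameters in the full (frozen) weight matrix."""
    return d_in * d_out


# Hypothetical layer size and rank, for illustration only.
d_in = d_out = 4096
rank = 16

adapter_params = lora_param_count(d_in, d_out, rank)
full_params = full_param_count(d_in, d_out)
trainable_fraction = adapter_params / full_params

print(f"Adapter parameters: {adapter_params:,}")
print(f"Full matrix:        {full_params:,}")
print(f"Trainable fraction: {trainable_fraction:.2%}")
```

With these assumed numbers, the adapter trains under 1% of the parameters in the layer it modifies, which is why a behavior change of this kind can be produced without retraining the whole model.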

Google is upgrading Gemini with personalization memory features, including Personal Context and Temporary Chat, which give Gemini controllable memory of prior interactions for improved personalization. Personal Context gives Gemini memory of prior chat interactions, while Temporary Chat lets users have one-off conversations that aren’t included in personalization memory. The features bring Gemini in line with Anthropic’s and OpenAI’s assistants on personalization and memory.

Google announced Flight Deals, a new AI-powered search tool within Google Flights. This tool uses a custom Gemini 2.5 to help users find cheaper fares via natural language queries like “week-long trip to a city with great food.”

In the week since GPT-5 release, OpenAI has made several post-release GPT-5 course corrections:

Responding to user blowback over losing access to legacy AI models, OpenAI reversed its deprecation decision and restored the ability to select legacy models such as GPT-4o via the model picker, which had been removed at GPT-5’s launch.

OpenAI discovered a GPT-5 routing bug that caused many users to be directed to a weaker GPT-5 variant. Once fixed, users could access the higher-performing "thinking" version.

OpenAI released a GPT-5 prompting guide for developers to craft more effective prompts for GPT-5, along with a prompt optimizer tool that refines complex instructions and provides explanations of its adjustments. This supports more precise and understandable prompt design in working with GPT-5’s reasoning capabilities.

OpenAI is expanding its compute fleet to address demand and adding customization options such as personality settings and third-party connectors (Dropbox, Gmail, Teams) for Plus and Pro subscribers.

Microsoft rolled GPT-5 into Copilot across platforms. The new “Smart mode” exposes GPT-5 capabilities to consumers and enterprises in Copilot on web, Windows, Mac and mobile.

AI Research News

A new technical paper from Meta, Efficient Speculative Decoding for Llama at Scale, outlines the challenges of deploying fast speculative decoding in production Llama systems and the solutions that deliver speedups at scale.
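For readers new to the idea, speculative decoding lets a small draft model guess several tokens ahead while the large target model only verifies them. The toy sketch below shows a generic greedy-verification variant, not Meta's production method: the two "models" are hypothetical stand-in functions, and the verification loop stands in for what a real system would do in one batched forward pass.

```python
from typing import Callable, List, Sequence, Tuple

NextToken = Callable[[Sequence[int]], int]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new_tokens: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, target verification rounds)."""
    tokens = list(prompt)
    goal = len(prompt) + max_new_tokens
    rounds = 0
    while len(tokens) < goal:
        # 1. The cheap draft model proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(tokens))):
            proposal.append(draft(tokens + proposal))
        # 2. The target checks each proposed position (one batched pass in practice).
        rounds += 1
        accepted: List[int] = []
        for guess in proposal:
            expected = target(tokens + accepted)
            if guess == expected:
                accepted.append(guess)
            else:
                accepted.append(expected)  # keep the target's token, drop the rest
                break
        else:
            # Every guess matched: the same pass also yields one extra target token.
            if len(tokens) + len(accepted) < goal:
                accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
    return tokens, rounds


def greedy_decode(target: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Baseline: one target call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(target(tokens))
    return tokens


# Hypothetical toy models: the target counts upward mod 10; the draft agrees
# except after token 2, where it guesses wrong.
def toy_target(ctx: Sequence[int]) -> int:
    return (ctx[-1] + 1) % 10


def toy_draft(ctx: Sequence[int]) -> int:
    return 0 if ctx[-1] == 2 else (ctx[-1] + 1) % 10


spec_tokens, spec_rounds = speculative_decode(toy_target, toy_draft, [0], max_new_tokens=12)
baseline_tokens = greedy_decode(toy_target, [0], max_new_tokens=12)
print(spec_tokens == baseline_tokens, spec_rounds)
```

The design point is that the output matches plain greedy decoding from the target model, while the number of target verification rounds is smaller than the number of generated tokens whenever the draft guesses well. Systems that sample instead of decoding greedily use a probabilistic accept/reject rule in place of the equality check.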

Researchers from Salesforce and USC developed CoAct-1, an AI agent combining GUI navigation with code execution. Presented in “CoAct-1: Computer-using Agents with Coding as Actions,” the CoAct-1 hybrid system achieves state-of-the-art results on benchmarks, efficiently completing complex tasks with fewer steps. CoAct-1 offers potential for robust enterprise automation, but it faces challenges regarding security and human oversight.

AI Business and Policy

DeepSeek’s next model launch has reportedly been delayed over Huawei chip issues. The FT-sourced report cites supply problems as a key factor in the schedule slip. Meanwhile, China’s government cautioned Chinese tech firms against purchases of Nvidia’s export-limited H20 AI chips, urging support for local AI chip makers.

ChatGPT’s mobile app is raking in the revenue. Since May 2023, it has generated $2 billion in consumer spending, approximately 30x its rivals like Claude and Grok. The app also leads in downloads with 690 million global installs, showing significant market dominance.

AI companion apps are growing in popularity, with category revenue up 64% YoY through July, hitting $120 million in revenue in 2025. There were at least 128 AI companion apps released in 2025, and over 60 million total downloads in the first half of 2025, including apps from Replika, Character.AI, PolyBuzz, Chai and others. The top 10% of apps capture nearly 90% of spend.

Anthropic has rapidly reached a $5 billion revenue run rate, driven by its dominance in AI coding applications. However, with Cursor and GitHub Copilot accounting for nearly a quarter of its income, Anthropic has a precarious dependence on AI coding customers. OpenAI's new GPT-5 model poses a threat by offering similar performance at significantly lower pricing, potentially undermining Anthropic's customer base.

Vibe coding startup Lovable aims to hit $1 billion in annual recurring revenue within 12 months, according to CEO Anton Osika. Founded in 2023, the European AI darling reached $100 million ARR in eight months and projects $250 million by year-end. The company raised $200 million at a $1.8 billion valuation this summer.

Enterprise AI company Cohere is valued at $6.8 billion after a recent $500 million funding round and appointed Joelle Pineau as Chief AI Officer to focus on developing practical, privacy-focused AI applications like their North AI agent platform.

xAI co-founder Igor Babuschkin, who helped launch the company and led engineering, has announced his departure to start Babuschkin Ventures. The new VC firm will focus on AI safety research and backing startups that advance humanity.

Anthropic acquired Humanloop, a prompt management and LLM observability platform, to bolster its enterprise AI tooling and compete with rivals like OpenAI. Humanloop's technology focuses on managing complex AI telemetry data by embedding context into logs and traces using the Model Context Protocol (MCP). This acquisition will enable Anthropic to deliver more proactive anomaly detection, root-cause analysis, and actionable insights for large enterprises.

AI Opinions and Articles

The Financial Times asks whether AI is “hitting a wall” after a tepid reception for GPT-5. The piece argues that scaling laws face data and compute limits and calls for fresh approaches like multimodal world models.

GPT-5’s changed ‘personality’ led to “chatbot grief” among users whose AI companions felt different after OpenAI’s update. A Guardian column recounts the user backlash and broader concerns over how tech firms are shaping digital intimacy.

This has been a relatively quiet week for AI model releases and news, after a whole slew of big releases lately. But there are rumors of a Gemini 3 release coming soon, and claims it will be very good, with high benchmark scores. Stay tuned!

I’ve never been more confident in Google than I am today. - Logan Kilpatrick

I just don’t get the point of 270M models because most phones are capable of running at least 1b-sized models. And most phones manufactured after 2024 can run qwen3-4b-2507 which is 100 times more powerful than that 270m model. For example, my iPhone 15 Pro can run it at a speed of 19 tps.