AI Week in Review 25.08.30

Gemini 2.5 Flash Image, Grok Code Fast 1, HeyGen Digital Twins, Google Translate live translation, LipSync 2 Pro, MAI Voice 1, MAI1 Preview, Claude Chrome, Perplexity Comet Plus, MCP-Universe.

Top Tools

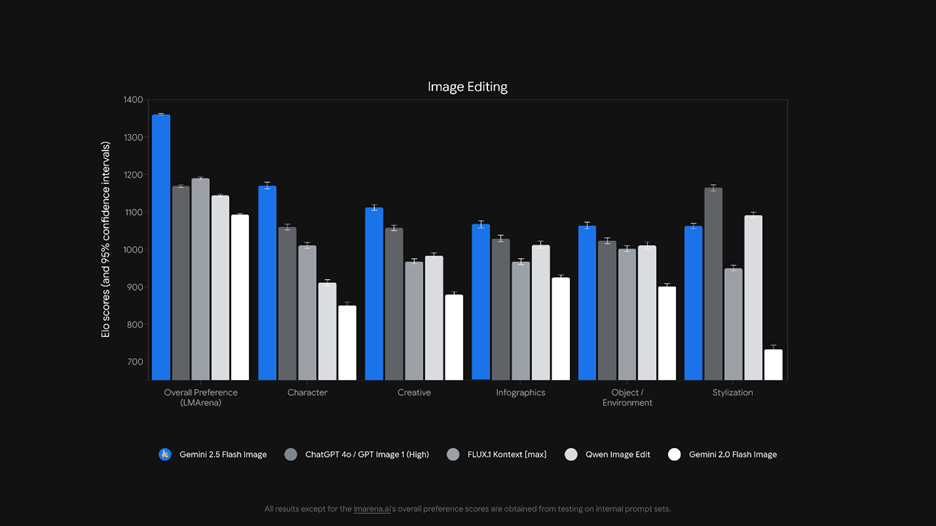

Google has officially released Gemini 2.5 Flash Image, the new SOTA image editor formerly known as Nano Banana, which allows for easy modification and annotation of images. It has been judged the best AI image generation model according to LM Arena Elo scores, with top scores on character consistency, infographics, and creative expression, and a total Elo score over 1300.
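To give a feel for what an Elo gap means, here is a minimal sketch of the standard Elo expected-score formula. The two ratings are hypothetical values chosen for illustration, not actual leaderboard entries, and LM Arena's own rating method may differ in detail from plain Elo.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected win rate of A over B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Hypothetical ratings for illustration only (not real leaderboard values).
leader_rating = 1300.0
rival_rating = 1200.0

win_rate = elo_expected_score(leader_rating, rival_rating)
print(f"Expected head-to-head win rate: {win_rate:.2f}")  # prints 0.64
```

Under this model, a 100-point lead corresponds to winning roughly 64% of head-to-head comparisons.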

Gemini 2.5 Flash Image / Nano Banana has great prompt adherence, enhanced control, consistent character generation, and faithful text rendering. This makes it a game-changer for creating and editing marketing content. It can create 3D wireframe mesh images and 3D visual representations from screenshots, restore and enhance old or damaged photographs, and convert regular photographs into isometric drawings.

Native image editing with Gemini 2.5 Flash Image is now in Google’s Gemini app, available both in the browser and on the Gemini mobile app. This enables powerful image transformations and editing for consumers. Gemini 2.5 Flash Image can also be tried for free via Google AI Studio.

In addition, Adobe has integrated Google's Gemini 2.5 Flash Image (aka Nano Banana) into Adobe Firefly and Adobe Express as its default image generation model, which can be seen in the Adobe Firefly interface. Maybe Nano Banana won’t be an Adobe Photoshop killer after all; Adobe is adopting AI features to keep its tools cutting-edge.

AI Tech and Product Releases

xAI launched Grok Code Fast 1, an agentic AI coding model that prioritizes speed and cost yet reports a 70.8% score on SWE-Bench-Verified. This does not appear to be a Grok 4 fine-tune; xAI states, “We built grok-code-fast-1 from scratch, starting with a brand-new model architecture.” There are few benchmarks from xAI, but vibe-check reactions are positive: “terse and focused”, “good for bite-sized things”, and even “insane.” But one negative review notes “there are many more models that perform better.”

This launch marks xAI’s first push into autonomous coding tools, and they look to be buying market share with limited-time free access through partners like GitHub Copilot, Windsurf, and Cursor. GitHub has a public preview of Grok Code Fast 1 inside Copilot. Long-term pricing is listed at $0.20/M input tokens and $1.50/M output tokens.
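To put the listed prices in concrete terms, the sketch below estimates the cost of a coding session from token counts. Only the two per-million-token prices come from the text above; the session size is a hypothetical example, and the calculation ignores any cached-token or promotional pricing.

```python
# Listed long-term prices in USD per million tokens (from the text above).
INPUT_PRICE_PER_M = 0.20
OUTPUT_PRICE_PER_M = 1.50


def session_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD for a given number of input and output tokens."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Hypothetical agentic session: 2M input tokens read, 200K output tokens written.
cost = session_cost(2_000_000, 200_000)
print(f"Estimated cost: ${cost:.2f}")  # prints $0.70
```

Agentic coding tends to be input-heavy because the model repeatedly re-reads files and tool output, so the low input price matters more than the headline output price.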

Google Translate has been updated with AI-powered live translation for real-time conversations. They also released a new language learning tool for practicing languages. These features are available in the Google Translate app.

HeyGen has released Digital Twins for Avatar 4, an upgrade that mirrors gestures and expressions to create a more realistic lip-synced avatar from an image and script or voice input:

For the first time, you can create videos that look, sound, and feel like the real you without the stress of filming or endless retakes.

Sync Labs has launched LipSync 2 Pro, a video model that can change the audio of a video and automatically adjust the lip-syncing to match. The tool is available at sync.so.

xAI has open-sourced its Grok 2.5 model, which is two generations behind its current Grok 4 model. It’s available on Hugging Face. Elon Musk adds:

“Grok 3 will be made open source in about 6 months.”

Microsoft has introduced two new in-house AI models: MAI Voice 1 for expressive speech generation and MAI1 Preview, a mixture-of-experts LLM. MAI Voice 1, which powers Copilot Daily, can generate a minute of audio in less than a second and is available in Copilot Labs. MAI1 Preview is on LM Arena.

NotebookLM has expanded its language support to 80 languages and expanded audio overviews, making both audio and video overviews accessible to a wider international audience.

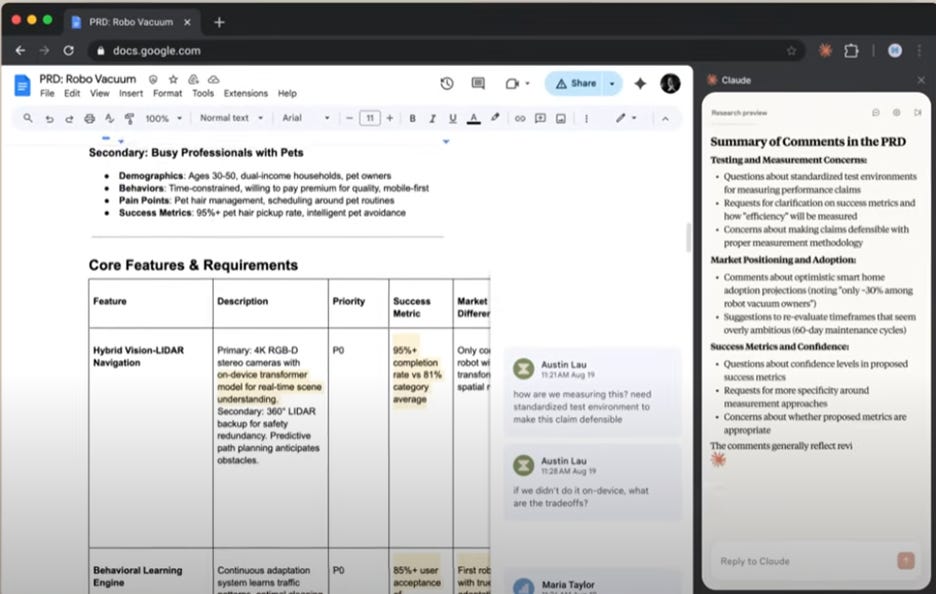

Anthropic is piloting a Claude Chrome extension that allows AI to directly control a user's Chrome browser for agentic tasks. The pilot is limited to 1,000 Max plan users.

Perplexity has launched Comet Plus, a subscription service that compensates publishers for content cited by the AI, with 80% of the revenue shared with them. Perplexity is attempting to create the right compensation model so that quality content providers on the internet are rewarded when their work is used:

Comet Plus is the first compensation model that allocates revenue to our partners based on three types of internet traffic: human visits, search citations, and agent actions.

OpenAI introduced GPT-Realtime and voice-agent API updates, a more advanced speech model for production-grade voice agents, allowing developers to integrate the conversational voice mode from the ChatGPT app into their own applications. The new API capabilities (MCP server support, image input, SIP phone calling) support a full real-time stack for AI voice agents and interfaces. The rollout also includes reusable prompts and improved latency. VentureBeat notes that this is OpenAI’s most capable real-time stack for multimodal AI yet and that it is aimed at enterprise adoption.

Nous Research has released Hermes 4, a family of frontier, hybrid-mode AI reasoning models based on Llama-3.1 70B and 405B models, and post-trained on 5M samples for improved reasoning. Hermes 4 offers unprecedented user control, minimal content restrictions, and transparent hybrid reasoning, allowing insight into its thought process.

Krea has unveiled their first real-time video generation model that allows for on-the-fly video manipulation, providing a demo of the unreleased model to announce a beta waitlist.

MathGPT.ai, an AI platform, provides an “anti-cheating” tutor for college students and a teaching assistant for professors by using Socratic questioning, never directly giving answers. After a successful pilot at 30 colleges, it's expanding to nearly double its availability this fall, with hundreds of instructors incorporating the tool.

Sakana AI’s M2N2 allows enterprises to create specialized AI models by efficiently merging existing ones without expensive retraining.

AI Research News

Salesforce AI Research introduced MCP-Universe, an open-source benchmark evaluating LLMs' performance in real-world enterprise interactions, revealing that even advanced models like GPT-5 struggle with complex tasks and long contexts.

OpenAI and Anthropic published a joint safety evaluation study, in which the two AI companies evaluated each other's leading foundation models for alignment and safety. Testing Anthropic’s Claude Opus 4 and Claude Sonnet 4, and OpenAI’s GPT‑4o, GPT‑4.1, o3, and o4-mini, the labs shared findings from cross-lab testing that highlighted both blind spots and the value of independent evaluation. Key findings were that reasoning models resisted jailbreaks and tended to give the strongest performance overall, while general chat models like GPT-4.1 and GPT-4o were susceptible to misuse and sycophancy, in some cases even detailing harmful acts.

AI Business and Policy

The AI investment boom is on track. Nvidia announced a $46.7 billion quarter with a 56% year-over-year increase in revenue, driven by strong demand in its AI data center business and the new Blackwell chips, and forecast a potential $3 to $4 trillion AI infrastructure market in coming years.
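As a quick sanity check on scale, the two figures above imply a rough value for the year-ago quarter. This is back-of-the-envelope arithmetic only: the 56% growth rate is a rounded figure, so the result is an approximation rather than a reported number.

```python
# Figures quoted above: quarterly revenue in billions of USD and rounded YoY growth.
current_quarter_revenue = 46.7
yoy_growth = 0.56

# Growth is defined as current / prior - 1, so prior = current / (1 + growth).
implied_prior_revenue = current_quarter_revenue / (1 + yoy_growth)
implied_increase = current_quarter_revenue - implied_prior_revenue

print(f"Implied year-ago quarter: ~${implied_prior_revenue:.1f}B")  # ~$29.9B
print(f"Implied increase: ~${implied_increase:.1f}B")  # ~$16.8B
```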

Meta's investment in Scale AI and its Superintelligence team shows signs of fraying. Several prominent researchers have left Meta's Superintelligence team, including both Meta veterans and new hires who are returning to OpenAI. Some Scale AI customers are turning to competitors like Mercor and Surge. The high-profile departures and internal chaos raise questions about the stability of Meta's AI initiative.

Anthropic has updated its data-use policy choices for consumers. Users are being asked to either opt out or share their chats for AI model training, a change from the prior policy, under which it never trained on customer queries and interactions. Anthropic’s post on the update pitches this as helping improve model safety and skills:

By participating, you’ll help us improve model safety, making our systems for detecting harmful content more accurate and less likely to flag harmless conversations. You’ll also help future Claude models improve skills like coding, analysis, and reasoning, ultimately leading to better models for all users.

It’s a convenient change for Anthropic; they are benefitting directly from the data if users give permission.

Meta is adding teen-safety safeguards to its AI experiences. Following a Reuters investigation into inappropriate chatbot behavior with minors, Meta says it’s tightening policies and retraining models to avoid risky topics with teens. Chatbots will no longer engage with teens on self-harm, suicide, disordered eating, or inappropriate romance. Teens will also be limited to educational AI characters, restricting access to previously available sexualized ones.

Reliance Industries is building India’s AI backbone with its new venture, Reliance Intelligence, through strategic partnerships with Google Cloud and Meta.

Estonia-based Vocal Image offers AI-powered coaching to improve voice and communication skills, inspired by its CEO's personal journey. The startup recently secured $3.6 million in seed funding and now claims $12 million in annual recurring revenue. With 4 million downloads, its unique dataset of over 1 million voice samples enables personalized AI feedback and supports future development.

Investors are clamoring for a stake in Swedish vibe-coding startup Lovable, making unsolicited offers valuing the company at over $4 billion, even though Lovable is not currently fundraising. The nine-month-old company has rapidly achieved over $100 million ARR and had a recent $200 million round at a $1.8 billion valuation.

Maisa AI raised $25 million in funding for deploying enterprise AI automation based on accountable AI agents. Maisa AI launched Maisa Studio, a model-agnostic self-serve platform for deploying digital workers that can be trained with natural language.

Aurelian has raised $14 million to deploy AI voice assistants in 911 call centers. The company’s AI triages non-emergency calls, like noise complaints, to reduce human dispatcher workload and improve efficiency.

YouTube has been secretly using AI to upscale and improve the clarity of uploaded videos. YouTube planned an opt-out for this experimental feature but has not released it. This has led to a backlash from content creators whose non-AI-generated content has been muddied with AI editing.

AI Opinions and Articles

TIME reports that 60 MPs in the U.K. have accused Google DeepMind of breaking its AI industry safety pledge in an open letter, intensifying regulatory scrutiny in the U.K. amid global AI Act enforcement ramp-ups.

Their complaint is that, by labelling releases as “experimental,” Google DeepMind is skirting pledges to fully test and report on AI safety and alignment concerns:

Yet when you released Gemini 2.5 Pro on 25 March, no safety evaluation report accompanied it. A month later, only a minimal "model card" appeared, lacking any substantive detail about external evaluations. Even when directly questioned by journalists, Google refused to confirm whether government agencies like the UK AI Security Institute participated in testing.

Intense competition between AI labs has led to faster releases, with AI safety testing and reporting taking a back seat. If an experimental AI model creates a serious AI safety problem, it will cause a backlash. Until then, the AI race is on!