AI Week in Review 25.11.01

Cursor 2.0, MiniMax M2, Hailuo 2.3, MiniMax Speech 2.6, Music 2.0, gpt-oss-safeguard, 1X NEO home robot, Granite 4.0 Nano, Ming-Flash-Omni, SWE-1.5, Aardvark, Odyssey-2, Sonic-3, Adobe goes Max AI.

Top Tools

Cursor released Cursor 2.0 and Composer, a new in-house AI coding model. Cursor 2.0 introduces a multi-agent coding interface where users can coordinate up to 8 agents working in parallel across a codebase to plan, edit, and implement changes. The browser for Agent, previously in beta, is now generally available (GA), and code review is smoother. Composer is built for low latency, and Cursor says it is about 4 times faster than comparable models.

The Cursor 2.0 upgrade marks Cursor’s push from “AI inside an IDE” toward a full-stack AI coding assistant platform, with AI taking on more of the software development flow and the Cursor 2.0 UI behaving more like an AI agent control panel than a traditional IDE.

AI Tech and Product Releases

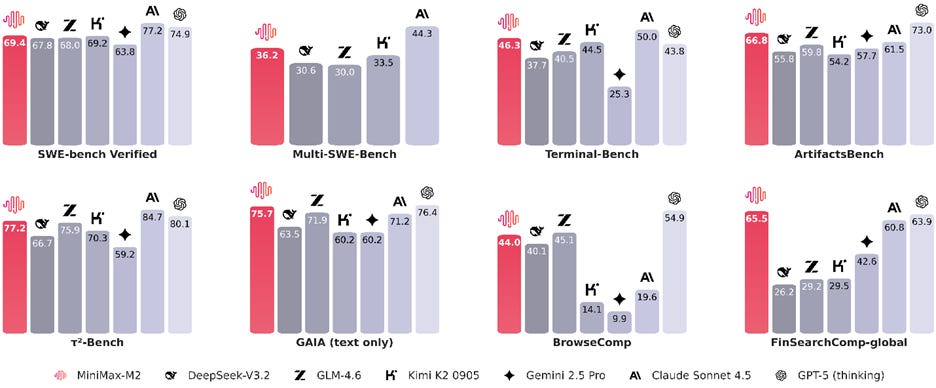

MiniMax released M2, an efficient open-weight AI model that scores near Claude Sonnet 4.5 on benchmarks at twice the speed and only 8% of the price. Designed for agentic workflows like code generation and complex task orchestration, M2 is a fine-grained Mixture-of-Experts (MoE) model with 200B total parameters, of which only 10B are active during inference, which keeps compute per token low. MiniMax notes:

By maintaining activations around 10B, the plan → act → verify loop in the agentic workflow is streamlined, improving responsiveness and reducing compute overhead.

The M2 model is available on HuggingFace (MIT license).

MiniMax shipped three other media models this week:

Hailuo 2.3, an updated video generation model that MiniMax describes as ‘cinema-grade’ AI video generation, with improvements in “physical actions, stylization, and character micro-expressions, while further optimizing its response to motion commands.” Creators can access Hailuo 2.3 directly or through other platforms such as VEED’s Hailuo-2.3 AI Playground.

MiniMax Speech 2.6, a low-latency speech synthesis model that delivers human-like voice responses in under 250 ms, useful for real-time interactive agents.

Music 2.0 expands MiniMax’s music generation stack, with MiniMax touting its musical range and qualities. “Music 2.0 produces a timbre that is incredibly close to the real human voice … capable of mastering a wide range of singing techniques and emotional styles.”

These AI model releases position MiniMax as a full multimodal AI lab spanning text, speech, video, and music.

OpenAI released gpt-oss-safeguard, fine-tuned versions of its previously released open-weight gpt-oss models aimed specifically at “safety reasoning” and policy enforcement for production apps. The models come in 20B and 120B parameter sizes. With gpt-oss-safeguard, developers can update moderation and safety policies in plain language instead of retraining a classifier, and can gate user content according to custom safety rules.
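To make the "policy in plain language" idea concrete, here is a minimal sketch of how an app might pair a written policy with the content to be checked. The message layout (policy as the system message, content as the user message), the function name, and the example policy text are my assumptions for illustration, not OpenAI's documented format; check the model card before relying on it.

```python
from typing import Dict, List

# Hypothetical policy text. Changing the rules means editing this string,
# not retraining a classifier.
EXAMPLE_POLICY = """\
Classify the user content as ALLOW or BLOCK.
BLOCK content that shares another person's home address or phone number.
ALLOW everything else.
Answer with the label followed by a one-sentence reason.
"""


def build_moderation_messages(policy: str, content: str) -> List[Dict[str, str]]:
    """Pair a plain-language policy with the content to be judged.

    Assumed layout: the policy goes in the system message and the content
    to classify goes in the user message.
    """
    policy = policy.strip()
    content = content.strip()
    if not policy or not content:
        raise ValueError("both policy and content must be non-empty")
    return [
        {"role": "system", "content": policy},
        {"role": "user", "content": content},
    ]


if __name__ == "__main__":
    for message in build_moderation_messages(EXAMPLE_POLICY, "Call me at my desk."):
        print(message["role"], "->", message["content"][:40])
```

The resulting list could be passed to whatever chat-style inference server hosts the model. The point of the sketch is only that a policy update is a text edit.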

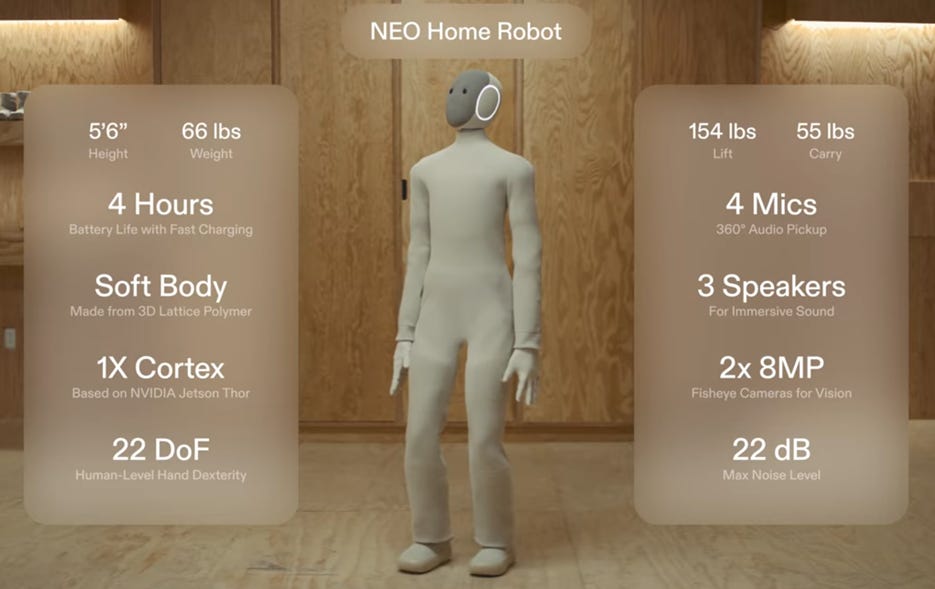

Humanoid robotics company 1X launched NEO in a video announcement, describing it as the first consumer humanoid robot meant for in-home use. The company also opened public pre-orders for NEO at a price of about $20,000, with delivery planned for early 2026. The robot is marketed for household chores like cleaning and laundry. NEO supports tele-operation: a remote 1X employee pilots the robot through tasks it hasn’t learned yet, while collecting data to eventually automate those tasks. The tele-operation feature raised concerns about privacy and about whether the robot is robust enough for consumer use in private homes.

IBM released Granite 4.0 Nano, an ultra-efficient tiny model with only 300 million parameters, meant for running useful language tasks locally on edge devices. IBM’s Granite model line is intended for on-device and enterprise-controlled inference rather than cloud-scale inference. Granite 4.0 Nano is available on HuggingFace.

Inclusion AI released Ming-Flash-Omni Preview, a sparse Mixture-of-Experts omni-modal model with 100B total parameters and only 6B active parameters. Ming-Flash-Omni is an upgraded version of Ming-Omni, which can handle speech, vision, and text modalities under one architecture rather than splitting across separate systems. The model is in early preview with weights available on HuggingFace.

Cognition announced SWE-1.5, a new frontier “coding agent model” that now powers its Windsurf environment. The company says SWE-1.5 was co-designed end-to-end with Cerebras hardware and a custom inference stack to achieve a blazing 950 tokens/second generation speed, while reaching near–state-of-the-art scores on SWE-Bench Pro.
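For a sense of what 950 tokens/second means in practice, here is a back-of-the-envelope sketch. The function name and the sample response sizes are mine, and the estimate deliberately ignores time-to-first-token, network latency, and tool-call round trips, so treat it as a lower bound on wall-clock time.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float = 950.0) -> float:
    """Time to stream num_tokens at a steady decode rate.

    The default rate is the figure Cognition quotes for SWE-1.5. Prompt
    processing and network overhead are not modeled.
    """
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Hypothetical response sizes, chosen only for illustration.
    for tokens in (500, 2_000, 10_000):
        print(f"{tokens:>6} tokens: {generation_seconds(tokens):.2f} s")
```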

OpenAI introduced Aardvark, a new GPT-5-powered “agentic security researcher” AI agent designed to identify, verify, and fix software vulnerabilities:

Aardvark represents a breakthrough in AI and security research: an autonomous agent that can help developers and security teams discover and fix security vulnerabilities at scale.

Adobe rolled out sweeping generative AI upgrades across Photoshop, Premiere Pro, Lightroom, Express, and Firefly at its MAX conference:

Newly released Firefly Image Model 5 improves realism, resolution, and controllability. Adobe will let customers train custom Firefly models, in some cases with as few as six reference images.

Adobe announced a browser-based Firefly video editor that merges multi-track video, audio, and AI-generated narration in one place.

New features Generate Speech and Generate Soundtrack can automatically generate voiceover and soundtrack audio for video.

Adobe’s new Photoshop AI Assistant, currently in private beta on the web, can follow natural-language instructions, generate selections, and walk users through complex edits.

Project Frame Forward lets an editor modify a single video frame, for example by removing an object or inserting a new one via a prompt, and then propagates that change across the entire video clip automatically.

Project Moonlight is a cross-app creative “director” that carries user style, brand context, and social publishing preferences between tools.

Adobe said it plans to surface these agentic features not only inside Adobe apps but eventually inside third-party chatbot platforms such as ChatGPT.

Moonshot AI released Kimi Linear, a 48B parameter linear attention model with a 1M token context window. The Kimi Linear architecture combines an MoE model with only 3B active parameters and an expressive linear attention to achieve superior performance for long-context tasks. It is aimed at handling large documents, codebases, or chat histories without chunking, useful for knowledge retrieval tasks.
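Three of the models in this issue are sparse Mixture-of-Experts designs, so only a fraction of their weights run for each token. As a rough illustration, the sketch below computes that active share from the total and active counts quoted in this issue. The dictionary and function names are my own, and the ratio is only a proxy for per-token compute since it says nothing about attention cost or routing overhead.

```python
from typing import Dict, Tuple

# (total, active) parameter counts in billions, as quoted in this issue.
MODELS: Dict[str, Tuple[float, float]] = {
    "MiniMax M2": (200, 10),
    "Ming-Flash-Omni Preview": (100, 6),
    "Kimi Linear": (48, 3),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used per token in a sparse MoE model."""
    if total_b <= 0 or not 0 <= active_b <= total_b:
        raise ValueError("expected 0 <= active_b <= total_b and total_b > 0")
    return active_b / total_b


def summarize(models: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Active share per model, as a percentage rounded to two decimals."""
    return {
        name: round(100 * active_fraction(total, active), 2)
        for name, (total, active) in models.items()
    }


if __name__ == "__main__":
    for name, pct in summarize(MODELS).items():
        print(f"{name}: {pct}% of parameters active per token")
```

All three land in the 5% to 6.25% range, which is why these models can be large on disk yet comparatively cheap to run per token.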

Odyssey ML introduced the Odyssey-2 real-time interactive AI video system, where generation starts streaming immediately instead of returning a finished clip after a long render. Users can prompt a scene and then live-edit attributes via the prompt, and the video updates in place. You can try Odyssey-2 here.

Cartesia announced Sonic-3, a real-time text-to-speech model built on State Space Models that achieves an incredible sub-50ms latency. Sonic-3 can generate expressive speech that includes laughs, emotion, and multilingual output across 42 languages. You can try Sonic-3 in Cartesia’s playground.

AI Research News

Google DeepMind unveiled the AI for Math Initiative, a new research program that partners with five elite research institutions to apply frontier AI systems directly to unsolved mathematics. This initiative builds collaborations between top mathematicians and AI researchers to accelerate discovery in areas like number theory and optimization. Math is ideal for AI reasoning because it is both a scientific target and a proving ground for AI systems that can reason symbolically.

By combining the profound intuition of world-leading mathematicians with the novel capabilities of AI, we believe new pathways of research can be opened, advancing human knowledge and moving toward new breakthroughs across the scientific disciplines. - Google DeepMind

AI Business and Policy

OpenAI and Microsoft formalized a sweeping restructuring deal, where Microsoft will acquire a 27% ownership stake in OpenAI and will receive continued access to OpenAI’s most advanced models, including AGI-level AI systems, through 2032. This agreement ends OpenAI’s unusual capped-profit structure and cements its shift into a conventional for-profit future, laying the groundwork for a potential IPO and removing uncertainty around governance.

In related news, OpenAI announced it has completed its restructuring, in which a nonprofit OpenAI Foundation now governs a for-profit OpenAI Group, with the foundation starting at about 26% equity and an initial commitment reportedly around $25B toward public-good initiatives such as curing disease and “AI resilience.”

Meta CEO Mark Zuckerberg told investors that capital expenditures on AI will climb sharply in 2026 as Meta invests more in AI Superintelligence and builds massive AI data centers. Meta is raising its 2025 capex guidance to as much as $72 billion and expects 2026 capex to be “notably larger” than that. Meta’s stock fell more than 6% after the quarterly report, as concerns about accelerating AI capital costs weighed on investors.

Nvidia has been on a tear announcing new deals at its GTC event in DC this week. CEO Jensen Huang said in Washington that Nvidia has about $500 billion in bookings for advanced AI chips and is striking deals in telecom, robotics, and autonomous vehicles:

Nvidia and Oracle are partnering with the U.S. Department of Energy to build the DOE’s largest AI supercomputer at Argonne National Laboratory. This AI supercomputer will contain 100,000 Nvidia Blackwell GPUs to accelerate scientific discovery.

Nvidia is investing $1 billion in Nokia while bringing AI solutions to 6G networks.

Hyundai Motor Group and Nvidia announced that they are building a dedicated AI factory, using tens of thousands of Blackwell GPUs and other Nvidia technology to accelerate in-vehicle intelligence, autonomous driving, factory automation, and robotics.

Nvidia announced expanded partnerships with South Korean firms such as Samsung, SK, Hyundai, and Naver, as well as with the Korean government.

Jensen Huang has framed AI infrastructure buildout as a national industrial project on the scale of the Apollo program, and he has stated that “AI will revolutionize every facet of every industry,” from car design and robotics to factory automation.

Former OpenAI researchers launched a new startup, Applied Compute, with $80 million in funding to develop custom AI models for enterprises.

Following a lawsuit alleging its chatbot played a role in a teenager’s suicide, Character.AI announced it will ban minors from ‘open-ended’ interactions with its chatbots. The company will also take other steps to ensure teen safety in AI interactions.

AI Opinions and Articles

A World Economic Forum roundup this week warned that AI is being deployed faster than governments can meaningfully govern it, calling this gap a systemic risk. The Forum cites fresh adoption data showing paid AI usage among U.S. businesses surging, but it also says that responsible AI practices remain immature and that rules are fragmented across the EU, U.S., and China.

In my view, the lack of a single set of global AI rules is a feature, not a problem. We don’t know enough about AI to know which regulations are actually beneficial. Different nations can try diverse approaches, and we can learn what works best from the varied experiences.

A rumor says that the Composer model is a Chinese model. And you should really put the MiniMax M2 first. It is such a great model. Better than full Sonnet 4, same as Opus 4.1. You should definitely try it out in Claude Code. It is wild.

Meta's announcement that 2026 capex will be 'notably larger' than the already staggering $72 billion for 2025 suggests they're doubling down despite the 6% stock drop, which actually signals conviction rather than concern. The market reaction seems short-sighted when you consider that AI infrastructure buildout is essentially a land grab for the next decade of compute dominance. What's interesting is how this spending compares to the metaverse investment cycle, where Zuckerberg faced similar pushback but ultimately the market questioned the product-market fit rather than just the spend itself. If Meta can demonstrate clearer AI monetization pathways than they did with VR, this capex cycle could look prescient in hindsight.