AI Week in Review 25.12.06

DeepSeek V3.2 & V3.2-Speciale, Mistral Large 3 & Ministral 3, Kling AI Video 2.6, O1, & Avatar 2.0, Runway Gen-4.5, Gemini 3 Deep Think, Nova 2 Lite, Pro, Sonic & Omni, Trinity Mini & Nano, Lux AI.

Top Tools

DeepSeek released DeepSeek V3.2 and DeepSeek V3.2-Speciale, 685B-parameter Mixture-of-Experts (MoE) open-weight models that achieve outstanding performance on reasoning and agentic tasks. DeepSeek V3.2-Speciale, trained with reinforcement learning (RL) for deep reasoning, achieves SOTA results on reasoning benchmarks, including gold-medal-level performance on the International Mathematical Olympiad (IMO), 96% on AIME, and 30% on Humanity’s Last Exam, outperforming Gemini 3.0 Pro and GPT-5-High on some benchmarks.

The standard DeepSeek V3.2 thinking model excels at agentic reasoning and instruction following, scoring 73.1% on SWE-Bench Verified and 80.3% on tau-bench (an agentic task benchmark). DeepSeek V3.2 is even more impressive because it performs on par with Gemini 3.0 Pro and GPT-5.1 but costs a fraction as much to run: on OpenRouter or via the DeepSeek API, it costs $0.28 per million input tokens and $0.40 per million output tokens.
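
To make the pricing concrete, here is a small cost calculator using the per-million-token rates quoted above. It assumes a flat rate for every token; real billing may differ (for example, cache-hit discounts), and the workload in the usage example is hypothetical.

```python
# Per-million-token prices quoted above for DeepSeek V3.2 (USD).
# Assumption: flat rates; actual billing may apply discounts such as cache hits.
INPUT_PRICE_PER_M = 0.28
OUTPUT_PRICE_PER_M = 0.40


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a workload at flat per-token rates."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    print(f"${request_cost(10_000_000, 2_000_000):.2f}")
```

At these rates, 10 million input tokens plus 2 million output tokens come to about $3.60.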

DeepSeek V3.2 is an important release because it shows that open-weight AI models can compete with current frontier models. DeepSeek achieved this through architectural innovations, such as DeepSeek Sparse Attention (DSA), a more efficient attention mechanism that reduces inference cost on long-context queries, and training improvements, such as specialist RL training combined with distillation.
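
The core idea behind sparse attention is that each query attends to only a small subset of tokens instead of the whole context. The sketch below illustrates generic top-k sparse attention for a single query. It is a simplification, not DeepSeek's implementation: DSA reportedly uses a separate lightweight indexer to choose tokens, whereas here the selection score is simply the same scaled dot product, and the function name `topk_sparse_attention` is hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(q, keys, values, k):
    """Attend from one query to only the k highest-scoring keys.

    Simplification (assumption): selection reuses the attention score itself.
    In a real sparse-attention system, a cheap indexer picks the tokens so that
    the expensive attention is computed only on the selected positions.
    """
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(d) for key in keys]
    # Keep the indices of the k largest scores; all other tokens are skipped.
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in selected])
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, selected


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(topk_sparse_attention([2.0, 0.0], keys, values, k=2))
```

With `k` much smaller than the context length, the softmax and the weighted sum over values touch only `k` positions per query, which is where the long-context savings come from.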

AI Tech and Product Releases

Mistral has launched the Mistral 3 model family, consisting of Mistral Large 3 plus three smaller Ministral 3 models. Mistral Large 3 is a Mixture-of-Experts (MoE) model with 675B total parameters and 41B active parameters, and it supports a 256K-token context window. With a competitive LMArena Elo score of 1418, it performs comparably to leading open models such as Qwen 3 and Kimi K2 in non-thinking mode. However, like Llama 4 Maverick, it is a non-thinking model, so it falls short on reasoning tasks.
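
The gap between total and active parameters comes from MoE routing: a small router picks a few experts per token, and only those experts run. Below is a toy sketch of generic top-k gating, assuming a linear router and experts that preserve vector length; it is not Mistral's code, and the expert count, gate weights, and `top_k` value are illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Toy top-k Mixture-of-Experts layer (generic scheme, not Mistral's).

    gate_weights: one weight vector per expert, forming a linear router.
    experts: callables mapping a vector to a vector of the same length.
    Only the top_k experts chosen by the router are evaluated.
    """
    logits = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in chosen])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        for j, yj in enumerate(experts[i](x)):
            out[j] += w * yj
    return out, chosen


if __name__ == "__main__":
    # Four hypothetical experts that scale the input by 1x..4x.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    out, chosen = moe_forward([1.0, 0.5], gate, experts, top_k=2)
    print("experts run:", chosen)  # 2 of 4 experts evaluated
    # Mistral Large 3's reported active share: 41B of 675B parameters.
    print(f"active fraction: {41 / 675:.1%}")
```

By the same arithmetic, Mistral Large 3 activates 41B of its 675B parameters, roughly 6.1%, for each token.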

The Ministral 3 models, sized at 3B, 8B, and 14B parameters for local use on edge devices, are multimodal (vision-capable) and available in reasoning variants. The Ministral 3 14B reasoning variant reaches 85% on AIME 2025 and 64.6% on LiveCodeBench, state-of-the-art for its size class. These models are well suited to local use, and the smallest 3B variant can even run in the browser via WebGPU.

Kling AI announced a flurry of new AI video releases this week. First, it released Kling Video 2.6, its first model with native audio generation. It produces synchronized speech and sound effects alongside 1080p video, joining Veo 3 as a full audio-visual AI generation model.

Kling AI also unveiled O1, a multimodal creative engine designed to streamline the AI video creation process. O1 provides a unified interface that accepts a variety of inputs (text prompts, reference images, and video clips) and can generate or edit audio-visual content using AI-powered tools and user controls in a single workflow. This particularly helps with character and object consistency and with long-form video content.

Finally, Kling AI introduced Avatar 2.0, which brings more expressive facial animation, more precise lip-sync, and more realistic likeness to generated digital characters. Avatar 2.0 supports long-form videos of up to 5 minutes for knowledge sharing, storytelling, and advertising. Kling AI claims Avatar 2.0 outperforms competitors such as HeyGen’s Avatar and OmniHuman-1.5.

Runway introduced Gen-4.5, its new flagship AI video generation model, which leads the Artificial Analysis text-to-video leaderboard and outperforms competitors such as Google’s Veo 3 on independent benchmarks. Runway touts Gen-4.5’s enhanced motion dynamics, better physical realism, and finer control over cinematic style:

Gen-4.5 achieves unprecedented physical accuracy and visual precision. Objects move with realistic weight, momentum and force. Liquids flow with proper dynamics. Surface details render at great fidelity. And fine details like hair strands and material weave remain coherent across motion and time.

Runway will bring its existing control modes (Image to Video, Keyframes, Video to Video, and more) to Gen-4.5 and will roll out the model over the coming days.

Google launched Gemini 3 Deep Think mode, which provides longer-horizon reasoning, more explicit chain-of-thought, and improved accuracy on multistep analytical tasks. Gemini 3 Deep Think uses parallel reasoning and achieves state-of-the-art results on reasoning benchmarks: 45.1% on ARC-AGI-2, 41% on Humanity’s Last Exam, and 93.8% on GPQA Diamond. It is rolling out to Google AI Ultra subscribers in the Gemini app.
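
Google has not published how Deep Think combines its parallel reasoning paths, so as a stand-in, here is a sketch of one well-known way to use parallel samples: self-consistency, which draws several independent answers and takes a majority vote. The `solve` callable is a hypothetical placeholder for one model call, and the paths run sequentially here for simplicity.

```python
import random
from collections import Counter


def parallel_reason(solve, problem, n_samples=8, seed=0):
    """Sample independent reasoning paths and return the majority answer.

    `solve(problem, rng)` is a hypothetical stand-in for one model call that
    returns a final answer. The paths run sequentially here; a real system
    would issue them concurrently.
    """
    rng = random.Random(seed)
    answers = [solve(problem, rng) for _ in range(n_samples)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n_samples  # majority answer and its vote share


if __name__ == "__main__":
    # Hypothetical noisy solver: right ("4") 60% of the time, else a wrong guess.
    def noisy_solver(problem, rng):
        return "4" if rng.random() < 0.6 else rng.choice(["3", "5", "22"])

    print(parallel_reason(noisy_solver, "2 + 2 = ?", n_samples=25))
```

Voting helps when individual paths are right more often than any single wrong answer; the vote share also gives a rough confidence signal.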

At its AWS re:Invent conference, Amazon announced the Nova 2 model family, including the Nova 2 Lite, Pro, Sonic, and Omni models. Nova 2 Pro scores strongly on agentic and coding benchmarks such as τ²-Bench and SWE-Bench Verified, comparable to Claude Sonnet 4.5. Nova 2 Lite is a small, fast, and cost-effective model, comparable to Gemini 2.5 Flash. Nova 2 Sonic is a speech-to-speech model with a 1.39-second time to first audio. Nova 2 Omni is a unified model that handles text, images, video, and speech with a 1M-token context window. Overall, Nova 2 is a strong improvement over previous Nova releases.

Amazon made other release announcements at re:Invent:

Nova Act, a service for building and deploying AI browser agents.

Nova Forge, a service for customizing and fine-tuning Nova AI models for user-specific needs.

New autonomous AI agents for software development and operations: the Kiro autonomous coding agent, AWS Security Agent, and AWS DevOps Agent for operational automation. These agents operate across AWS environments.

U.S.-based Arcee announced Trinity, a family of open-weight MoE models trained end-to-end in the U.S., and released two of them: Trinity-Mini (26B total, 3B active) and Trinity-Nano-Preview (6B total, 1B active). Trinity-Mini is a compact, cost-efficient model for agentic workloads. Arcee plans to release a larger model, Trinity-Large (420B total, 13B active), in January 2026.

AI startup OpenAGI announced its Lux AI agent, claiming state-of-the-art computer-use performance with a Mind2Web benchmark score of 83.6%, outperforming proprietary AI models at lower cost. Lux is designed for autonomous workflows and, unlike browser-only AI agents, can control Slack, Excel, and other desktop applications.

Microsoft released VibeVoice-Realtime-0.5B, a lightweight open-source text-to-speech model optimized for real-time applications. The model reportedly produces its first audio in under 300 milliseconds and can generate up to 10 minutes of speech in a single run.

AI Research News

At NeurIPS, Nvidia announced new open models, tools, and model updates for speech processing, AI safety evaluation, and autonomous driving workflows. These include DRIVE Alpamayo-R1 (AR1), which Nvidia calls the world’s first open reasoning vision-language-action (VLA) model for autonomous vehicle (AV) research; updates for customizing NVIDIA Cosmos for physical AI; tools for Nvidia DGX and automotive stacks; and Nvidia-authored research papers presented at NeurIPS.

Apple presented and released STARFlow-V, which it describes as the first end-to-end normalizing-flow model for high-quality video synthesis. The accompanying preprint is titled “STARFlow-V: End-to-End Video Generative Modeling with Normalizing Flows.” The model promises sharper frame coherence and faster sampling than diffusion-based approaches.
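
For readers unfamiliar with normalizing flows: they map data through invertible transforms to a simple base distribution, so the exact log-likelihood follows from the change-of-variables formula, log p(x) = log p_z(f(x)) + log|det J_f(x)|. The sketch below shows a generic RealNVP-style affine coupling layer in pure Python. This is a swapped-in textbook example, not STARFlow-V’s architecture, and the tiny conditioning function stands in for a learned network.

```python
import math


def shift_and_log_scale(x1):
    """Hypothetical stand-in for a learned network conditioned on the first half.

    Any function works here, because the coupling layer never inverts it.
    """
    return [0.5 * v for v in x1], [math.tanh(v) for v in x1]


def coupling_forward(x):
    """Affine coupling: transform the second half given the first.

    Returns (z, log|det J|); the Jacobian is triangular, so its
    log-determinant is just the sum of the log-scales.
    """
    assert len(x) % 2 == 0, "this sketch assumes an even dimension"
    half = len(x) // 2
    x1, x2 = x[:half], x[half:]
    shift, log_s = shift_and_log_scale(x1)
    z2 = [xi * math.exp(s) + t for xi, s, t in zip(x2, log_s, shift)]
    return x1 + z2, sum(log_s)


def coupling_inverse(z):
    """Exact inverse: recompute shift/scale from the unchanged first half."""
    half = len(z) // 2
    z1, z2 = z[:half], z[half:]
    shift, log_s = shift_and_log_scale(z1)
    x2 = [(zi - t) * math.exp(-s) for zi, s, t in zip(z2, log_s, shift)]
    return z1 + x2


def log_likelihood(x):
    """Exact log p(x) via change of variables with a standard normal base."""
    z, log_det = coupling_forward(x)
    log_base = sum(-0.5 * zi * zi - 0.5 * math.log(2 * math.pi) for zi in z)
    return log_base + log_det


if __name__ == "__main__":
    x = [0.3, -1.2, 0.7, 2.0]
    z, _ = coupling_forward(x)
    print("max reconstruction error:", max(abs(a - b) for a, b in zip(coupling_inverse(z), x)))
    print("log p(x):", log_likelihood(x))
```

Sampling runs the same layers in reverse from Gaussian noise, which is why flows can generate in a single pass rather than through the many denoising steps a diffusion model uses.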

AI Business and Policy

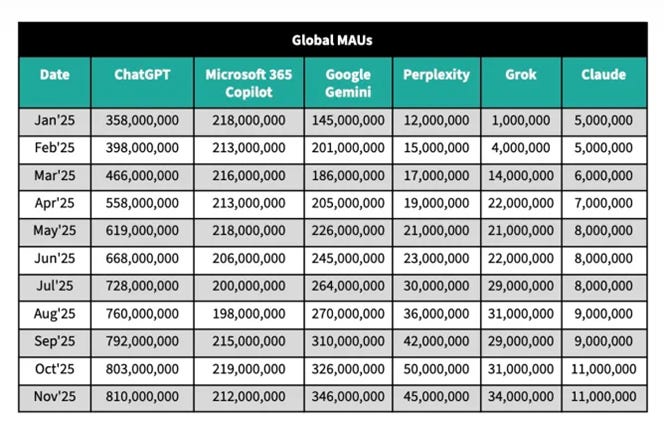

OpenAI CEO Sam Altman issued an internal “Code Red” memo in response to competitive pressure from Google’s rapid Gemini advances. New data shows Google gaining market share: ChatGPT’s user growth slowed to 5% from August to November, compared with a 30% rise for Gemini over the same period.

In the “Code Red” memo, Altman told OpenAI employees that ChatGPT needed to improve to keep pace with Google. In practice, this means refocusing on core model improvements and capabilities while putting less effort into new non-core features. Industry reports suggest OpenAI may release GPT-5.2 next week as a competitive response to Google’s Gemini 3; the new model is reportedly ahead of Gemini 3 Pro in overall intelligence.

Nick Turley, head of OpenAI’s ChatGPT team, posted a public statement on X the same day as the internal memo, marking three years of ChatGPT, touting its dominant 70% share of AI assistant usage worldwide, and declaring:

Our focus now is to keep making ChatGPT more capable, continue growing, and expand access around the world — while making it feel even more intuitive and personal. Thanks for an incredible three years. Lots more to do!

Amazon introduced its next-generation Trainium3 AI chip, along with a roadmap that extends compatibility with Nvidia’s ecosystem. AWS positions Trainium3 as a cost-efficient option for large-scale training. AWS also introduced AI Factory, a new on-premises system that combines Nvidia GPUs or Trainium chips to deliver enterprise AI clusters. The platform provides dedicated AI infrastructure for regulated industries and high-security use cases.

Anthropic acquired Bun, the JavaScript runtime, to support expanding and improving Claude Code and its developer infrastructure. Anthropic stated that Claude Code has surpassed $1B in annualized run-rate revenue.

The New York Times filed a lawsuit for copyright infringement against Perplexity, alleging that the company’s AI systems reproduced and redistributed copyrighted news content without authorization.

OpenAI purchased an equity stake in Thrive Holdings to expand its enterprise services footprint in accounting, IT support, and workflow automation. The deal deepens OpenAI’s move into verticalized enterprise solutions.

Nvidia and Synopsys announced a partnership to modernize engineering and chip-design workflows using accelerated computing and AI tooling. The partnership will integrate Synopsys’s EDA software with Nvidia’s full-stack AI hardware, in a collaboration spanning CUDA-accelerated computing, agentic and physical AI, and Omniverse digital simulation.

AI Opinions and Articles

Just in time for the NFL playoff race and college bowl season, Yahoo introduced an AI system that generates near-real-time football recaps that go beyond traditional box-score statistics. The tool contextualizes play-by-play information to produce narrative-style summaries.

Gearing up for Christmas, OpenAI added ChatGPT-powered interactions and Q&A features to NORAD’s annual “Tracks Santa” experience. The collaboration brings real-time AI storytelling and educational responses to a popular holiday tradition.

Hopefully, we will see more innovative and creative uses of AI this holiday season, and not just AI slop showing up on social media.
