AI Week In Review 25.01.13

CES Roundup: Nvidia's RTX 50 series, COSMOS World Foundation Models, Project Digits, R2X AI avatar, Mirumi furry bot, Halliday and Rokid Smart Glasses, Phi-4 weights released, rStar-Math, LatentSync.

AI Tech Product Releases

The biggest news this week is the AI products presented at CES, and the biggest CES announcements came from Nvidia CEO Jensen Huang’s keynote. Huang touted the Blackwell AI chips and systems that Nvidia is now delivering and announced several new products and releases:

Jensen Huang announced the Blackwell-based GeForce RTX 50 Series, including the RTX 5070, RTX 5080, and RTX 5090. The top-end RTX 5090 achieves 3,352 trillion AI operations per second and increases memory to 32GB. He also noted how AI is accelerating computer graphics with neural rendering of pixels.

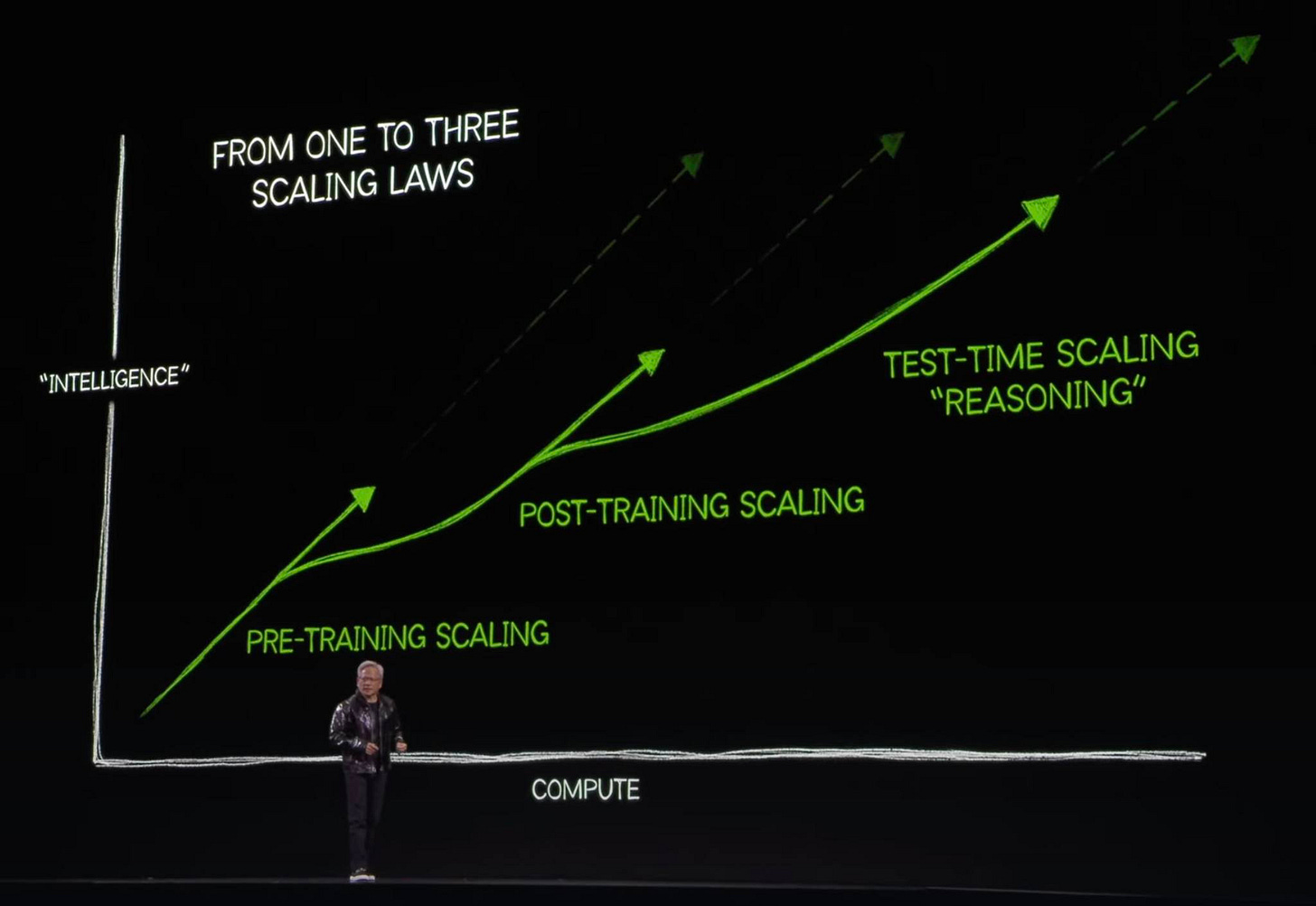

Jensen Huang claims his company’s AI chips are advancing faster than historical rates set by Moore’s Law. Blackwell runs some AI inference workloads 30 times faster than the previous generation.

Nvidia released COSMOS, a platform of pretrained world foundation models (WFMs) for Physical AI to help simulate world environments. These physics-aware models simulate real-world actions and environments to help train robots, autonomous vehicles, and industrial AI tools.

Nvidia introduced Isaac GR00T Blueprint, enabling synthetic motion generation for humanoid robot training.

Nvidia unveiled R2X, an AI avatar designed as a video game-like character that assists users on their desktop. The avatar can interact through text and voice, process files uploaded by the user, and view live screen activity, and it integrates with popular LLMs. Nvidia plans to open-source these avatars.

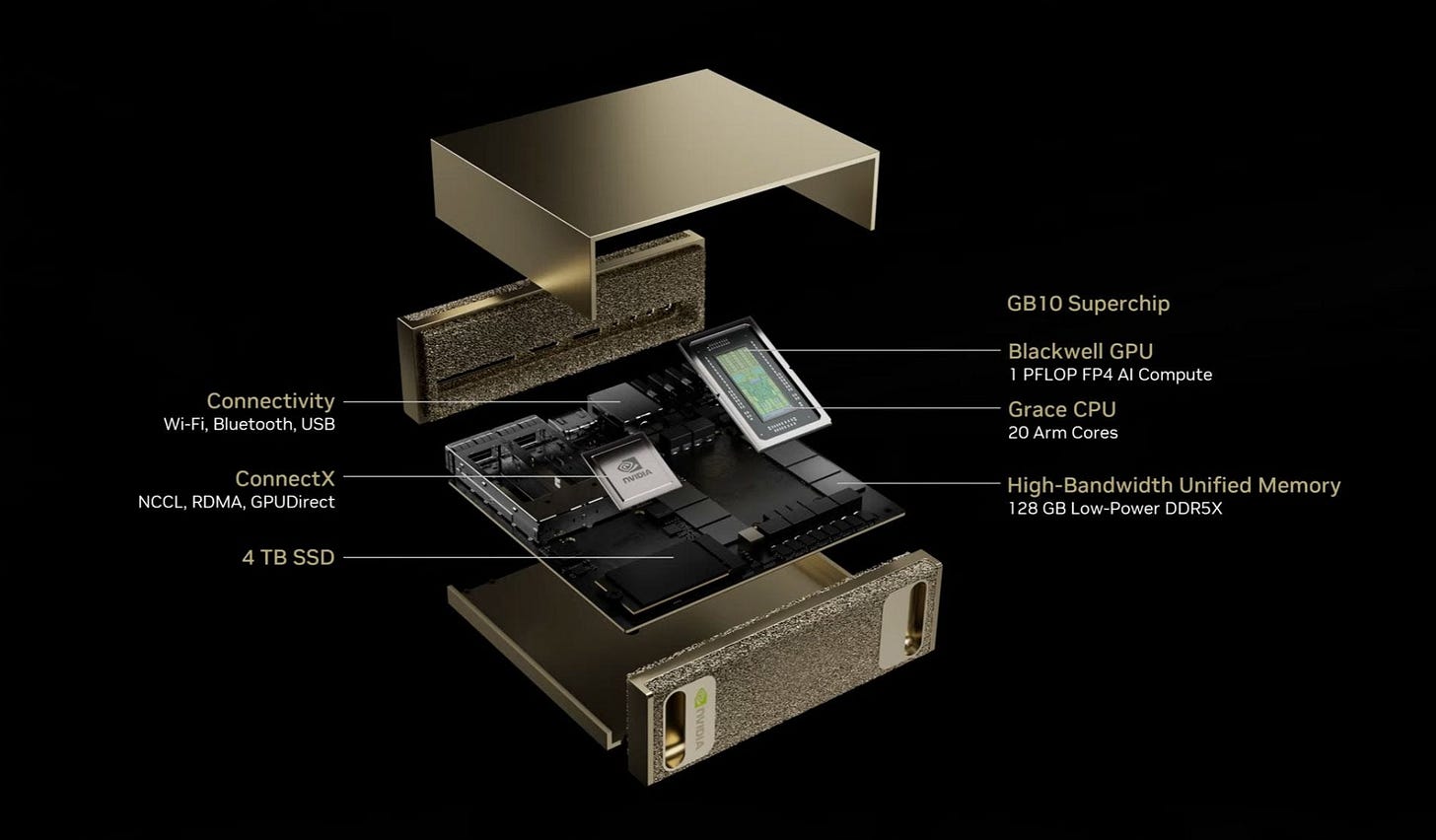

Finally, Huang announced "Project Digits," a $3,000 desktop supercomputer powered by the GB10 Grace Blackwell Superchip. It delivers 1 petaflop of FP4 compute, which is 1000x faster than your average laptop on AI workloads, and comes with 128GB RAM and a 4TB SSD, enough to run LLMs as big as 200 billion parameters. Best part: it runs Linux.
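The 200-billion-parameter figure lines up with the 128GB of memory if the weights are stored at 4 bits each. Here is a rough back-of-the-envelope check, assuming the weights dominate memory use and ignoring the KV cache, activations, and OS overhead (the helper name `weights_gb` is just for illustration):

```python
def weights_gb(params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


PARAMS = 200e9    # 200 billion parameters, the figure quoted for Project Digits
MEMORY_GB = 128   # quoted RAM

# Compare a few common weight precisions against the quoted memory.
for name, bits in [("FP4", 4), ("FP8", 8), ("FP16", 16)]:
    gb = weights_gb(PARAMS, bits)
    verdict = "fits" if gb <= MEMORY_GB else "does not fit"
    print(f"{name}: {gb:.0f} GB of weights, {verdict} in {MEMORY_GB} GB")
```

At 4 bits per weight, a 200B model needs about 100GB, which leaves some headroom in 128GB. The same model at 8-bit or 16-bit precision would not fit.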

While Nvidia won the crown for best AI announcements at CES 2025, the show featured many other AI-related products:

The furry Mirumi companion robot from Yukai Engineering looks like a tiny pink lemur and will look around at moving people, like a shy observant pet. The cute bot stole many hearts.

ChatGPT is arriving in Volkswagen EVs later this year, to provide AI assistance to drivers.

A robot vacuum that picks up socks and another robot cleaner that can go up small steps and obstacles were two of many household robots featured at CES 2025.

Samsung said their already-announced Ballie robot will finally be released in 2025. Maybe it will be, but don’t

AMD announced their next-gen Radeon RX 9070-series GPUs with AI-powered FSR 4 upscaling.

Halliday launched Smart Glasses with direct eye projection. The glasses feature a tiny DigiWindow module projecting a display directly into the user’s eye, offering real-time language translation, notifications, and AI assistance.

Based Hardware launched AI wearable Omi, a device worn as a necklace or attached to the head, which uses voice commands and purportedly a “brain interface” to assist with tasks like summarizing conversations and creating to-do lists. The company is aiming for a Q2 2025 release at $89.

The Verge’s take on the Best of CES 2025 included Rokid smart glasses and the aforementioned Mirumi furry bot, and gave kudos to Nvidia for dominating the AI conversation at CES.

CES 2025 also featured some unclear and dubious AI applications, such as Spicerr, an AI spice dispenser with limited utility, and ChefMaker 2, an AI air fryer that scans cookbooks. These hype-driven AI products often overlook actual technical limitations and genuine consumer needs.

Microsoft has released the weights for the Phi-4 model, announced last month, on HuggingFace. The Phi-4 models have an MIT license, so they are free to use, and boast the highest benchmark scores for their size.

Elon Musk has announced that Grok 3 has finished pre-training, which used 10 times the compute of Grok 2, and should be released in the next 3 or 4 weeks.

Qwen has a new web portal to access their models, at chat.qwenlm.ai.

OpenAI introduced new personalized interaction options with ChatGPT. Some users can now specify a preferred name, profession, and traits like “Chatty” or “Encouraging” in the updated custom instructions menu, though these options are not yet visible to all users.

Google is testing a new “Daily Listen” feature that automatically generates a podcast based on your Discover feed. This AI-powered audio experience is rolling out in the Google app for U.S. users who have opted into Search Labs, providing up to five minutes of personalized news summaries with links to related stories.

AI Research News

Researchers from UC Berkeley’s Sky Computing Lab have released an open-source AI reasoning model called Sky-T1 and shared a technical report on it called Sky-T1: Train your own O1 preview model within $450. Sky-T1 32B is based on the 32B Qwen 2.5 base model, which was then post-trained for reasoning on 17k synthetic data examples. It competes with o1-preview on key benchmarks and is available on HuggingFace. The training cost was only $450, suggesting AI reasoning model training could be quite accessible.

Another AI reasoning model, this one from Microsoft Research Asia, is “rStar-Math: Small LLMs Can Master Math Reasoning with Self-Evolved Deep Thinking.” The rStar-Math models are small LLMs (1.5B and 7B parameters) trained for reasoning using Monte Carlo Tree Search (MCTS) over a code-augmented chain-of-thought process, which is guided by a novel process reward model training method.

Through 4 rounds of self-evolution with millions of synthesized solutions for 747k math problems, rStar-Math boosts SLMs' math reasoning to state-of-the-art levels.

rStar-Math 7B, based on Qwen 2.5 7B, gets results comparable to o1-mini on benchmarks, including solving an average of 53.3% of problems on the American Invitational Mathematics Examination (AIME), a qualifying exam for the USA Math Olympiad.
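To put the 53.3% in concrete terms, here is a quick conversion to a problem count. It assumes the standard AIME format of 15 problems per exam, which is not stated above, and the names are illustrative only:

```python
AIME_PROBLEMS = 15  # assumption: a standard AIME exam has 15 problems


def problems_solved(rate_percent: float, total: int = AIME_PROBLEMS) -> float:
    """Convert an average solve rate (in percent) into an average problem count."""
    return rate_percent / 100 * total


# Average number of problems solved per exam at the reported rate.
print(round(problems_solved(53.3)))
```

Under that assumption, the reported rate works out to about 8 of 15 problems per exam on average.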

Researchers at Tencent have created an AI model that both upscales and colorizes low-resolution and black-and-white films, specifically improving the resolution of facial video. They published their work in SVFR: A Unified Framework for Generalized Video Face Restoration. They leveraged Stable Video Diffusion (SVD) in a unified face restoration framework to produce impressive results. Code and demo examples are on GitHub.

ByteDance released a lip-syncing AI model called LatentSync, which takes a voice and a video and creates a synced voiceover video. This is similar to HeyGen, but LatentSync is an open AI model, and the technical details are shared on GitHub and in a paper “LatentSync: Audio Conditioned Latent Diffusion Models for Lip Sync.” LatentSync can be tried out at Fal.AI.

AI Business and Policy

Microsoft has taken legal action against a group that developed tools to bypass safety measures in Azure OpenAI Service. The unnamed defendants allegedly stole customer credentials and used custom software to generate harmful content, violating multiple federal laws.

Published job listings indicate that OpenAI plans to develop its own robots. The listings point to general-purpose, adaptive robots that integrate high-level AI capabilities with physical robotic platforms.

Google is further integrating its AI teams under Google DeepMind to accelerate AI development. Logan Kilpatrick announced that the AI Studio team and Gemini API developers will join DeepMind, aiming to enhance collaboration and expedite research-to-developer processes.

A lawsuit filing claims Mark Zuckerberg gave Meta’s Llama team the OK to train AI models on copyrighted works, including pirated e-books and articles from LibGen, despite concerns within the company about potential copyright infringement.

François Chollet is co-founding a nonprofit, the ARC Prize Foundation, to develop benchmarks for "human-level" AI intelligence. The foundation will expand on ARC-AGI, a test designed by Chollet to evaluate an AI’s ability to solve novel problems it hasn’t been trained on.

Hippocratic AI has secured $141 million, at a valuation of $1.64 billion, to address healthcare professional shortages by automating non-diagnostic tasks such as patient monitoring and appointment preparation.

Another AI healthcare startup, Grove AI, plans to expedite clinical trial enrollment with generative AI, using a voice-based AI agent named Grace to streamline patient enrollment from weeks to minutes. The startup has raised $4.9 million in seed funding.

French startup Rounded is developing an orchestration platform for companies to build their own AI voice agents. They developed Donna, an AI voice agent for anesthesia secretaries, that has handled hundreds of thousands of conversations across 15 private hospitals.

AI Opinions and Articles

Elon Musk concurs with other AI experts that there’s little real-world data left to train AI models on. He is echoing concerns raised by former OpenAI chief scientist Ilya Sutskever about reaching "peak data" and suggests synthetic data generated by AI models themselves is the future path for training. Meanwhile, OpenAI is hiring to break the data wall.

Sam Altman shared his latest thoughts in a personal blog post called “Reflections,” and in it, he said that OpenAI knows how to build AGI and they are looking beyond that, to ASI – Artificial Superintelligence:

We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. We continue to believe that iteratively putting great tools in the hands of people leads to great, broadly-distributed outcomes.

We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future.

As if on cue from the boss, many OpenAI people have started talking more about superintelligence and ASI being imminent. Is it hype, hope, or reality that superintelligence is in sight already? Whichever it is, Noam Brown states that Altman’s view on AGI and ASI is the ‘median view’ of people working at OpenAI. The future is closer than you think.