AI Wins Global Coding Contest

OpenAI and DeepMind made history at the 2025 ICPC World Finals: Their AI models beat all humans in an elite global coding competition. AGI coding achievement unlocked!

A Personal Note

Thanks to Devansh for sharing our prior essay on Seemingly Conscious AI with his much larger Substack audience as “When Illusion Replaces Essence in the Age of AI.” This exposure has brought us quite a few new subscribers. To my new readers: I hope to earn your trust as a source of news and insight on AI.

I’ve been quiet this week dealing with a case of flu, but I’ve recovered and will return to my usual schedule of two or three articles per week. There is plenty to report, and AI progress continues thick and fast. Feel free to comment on items you’d like to hear more about.

Reasoning Milestone Met: Beating All Humans at Coding

One news item that can’t wait for the AI Weekly on Saturday is OpenAI and DeepMind making history at the 2025 ICPC World Finals this week.

The International Collegiate Programming Contest, or ICPC, is an elite competition in which participants from nearly 3,000 universities in over 103 countries compete to solve real-world algorithmic coding problems. In this contest, OpenAI’s reasoning system solved all 12 ICPC World Finals problems under contest rules, a result equivalent to first place among human teams.

Under the rigorous five-hour ICPC World Finals time limit, OpenAI’s system achieved a flawless score, solving all 12 complex algorithmic problems and beating all 139 university teams present. The perfect score marks a new milestone: performance beyond that of elite human teams in algorithmic coding.

DeepMind’s Gemini 2.5 Deep Think solved 10 out of 12 problems, which would have been a second-place finish among human teams and is a gold-medal-level result.

These results are a reminder of how far we have come. AI models have made rapid progress across the competition circuit this summer. OpenAI performed at gold-medal level at the International Mathematical Olympiad (IMO), and DeepMind’s Gemini Deep Think earned a gold there as well. OpenAI also placed 6th at the International Olympiad in Informatics (IOI) and achieved a second-place finish in the AtCoder World Tour Finals.

AI Wins with Ensemble and Multi-Agent Reasoning

OpenAI’s system eclipsed every human team, and Gemini would have outranked all but one. Both did so in a manner that signals a shift in the landscape of AI problem-solving.

Both models submitted solutions under standard contest conditions, with the same five-hour time budget, blind evaluation, and real-time judging as the human teams. The AI teams did not receive code-level hints or contest-specific tuning.

Both OpenAI and DeepMind relied on generally available, general-purpose models, not bespoke or specially trained ones, for most of the competition. Rather than being tuned for contests, these models were trained to provide robust general-purpose reasoning and code synthesis.

OpenAI used an ensemble approach that combined GPT-5 with an (unreleased) experimental AI reasoning agent; GPT-5 solved most of the problems:

We competed with an ensemble of general-purpose reasoning models; we did not train any model specifically for the ICPC. We had both GPT-5 and an experimental reasoning model generating solutions, and the experimental reasoning model selecting which solutions to submit. GPT-5 answered 11 correctly, and the last (and most difficult problem) was solved by the experimental reasoning model.

Eleven of the twelve problems were solved correctly on the very first attempt with GPT-5; the final, and hardest, problem was solved after nine attempts, a feat no human team matched.
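OpenAI has not published how its experimental model chose which solutions to submit. The general generate-then-select pattern can still be sketched in a few lines of Python. Everything here is an assumption made for illustration: candidate solutions are modelled as plain Python functions, the selection criterion is simply "passes every sample test from the problem statement," and the names `passes` and `select_submission` are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# Assumption: a candidate solution is a function from input text to output text.
Solution = Callable[[str], str]
# Sample tests are (input, expected_output) pairs, like the examples in a problem statement.
SampleTest = Tuple[str, str]


def passes(solution: Solution, tests: List[SampleTest]) -> int:
    """Count how many sample tests a candidate passes; a crash counts as a failure."""
    passed = 0
    for given, expected in tests:
        try:
            if solution(given).strip() == expected.strip():
                passed += 1
        except Exception:
            pass  # a runtime error is treated the same as a wrong answer
    return passed


def select_submission(candidates: List[Solution], tests: List[SampleTest]) -> Optional[Solution]:
    """Return the first candidate that passes every sample test, or None if none does."""
    for candidate in candidates:
        if passes(candidate, tests) == len(tests):
            return candidate
    return None


# Toy problem: read two integers and print their sum.
def buggy(inp: str) -> str:
    a, b = map(int, inp.split())
    return str(a * b)  # wrong operator, but happens to pass "2 2"


def crashing(inp: str) -> str:
    return str(int(inp))  # raises ValueError on input with two numbers


def correct(inp: str) -> str:
    a, b = map(int, inp.split())
    return str(a + b)


tests = [("2 2", "4"), ("1 5", "6")]
chosen = select_submission([buggy, crashing, correct], tests)
print(chosen.__name__ if chosen else "nothing to submit")
```

Filtering on sample tests before submitting matters in a contest because each wrong submission carries a penalty. A real selector would presumably weigh much more than sample tests, which is why this is only a sketch of the idea.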

Likewise, DeepMind’s Gemini 2.5 Deep Think achieved gold-medal level at ICPC, solving 10 out of 12 problems using an AI model that is available to Ultra subscribers. DeepMind attributes Deep Think’s success to parallel thinking, multi-step reasoning, and novel RL techniques in training.

DeepMind applied Deep Think in a collaborative, multi-agent way. Multiple agents “proposed, coded, and tested” solutions in a coordinated fashion, using parallel solution strategies and search made possible by scaled-up deep learning and inference infrastructure. This solution exploration process echoes the teamwork of top human competitors, but with a speed, breadth, and endurance unique to AI.

Gemini solved eight problems within just 45 minutes. It also delivered a breakthrough on “Problem C,” a new and exceptionally tough optimization challenge that no human team solved: Deep Think cracked it in 30 minutes using innovative reasoning based on the minimax theorem.

Efficiency and Speed Gains

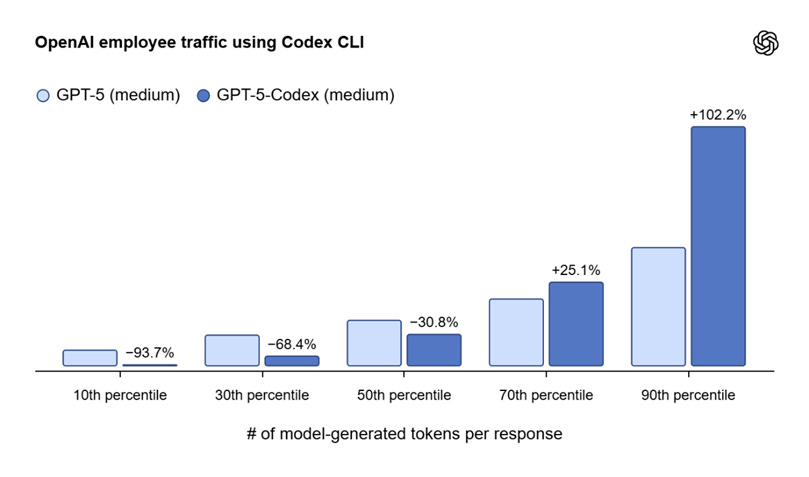

Another theme this news brings up is improvements in reasoning efficiency. As noted on X by Elvis:

We are witnessing an incredible level of efficiency in reasoning models. Faster and more efficient reasoning models are on the rise. First, GPT-5 (and GPT-5-Codex) with remarkably efficient token use, and now Gemini 2.5 Deep Think, achieving gold-medal level performance at the ICPC 2025 under the same five-hour time constraint. – Elvis (@omarsar0)

Weak AI reasoning models can churn through many tokens trying to reason, sometimes getting stuck in a rut. Being able to not ‘overthink’ a simple problem while solving difficult problems yields many benefits. Getting to a correct logical conclusion with fewer tokens means lower cost and better latency for reasoning.

GPT-5 is able to reason to correct conclusions with fewer tokens. GPT-5’s router helps triage problems somewhat, but the reasoning model itself is also being trained with RL for faster and more efficient reasoning.

OpenAI themselves showed off the trend in their GPT-5-Codex release update, saying:

GPT‑5-Codex adapts how much time it spends thinking more dynamically based on the complexity of the task. The model combines two essential skills for a coding agent: pairing with developers in interactive sessions, and persistent, independent execution on longer tasks. That means Codex will feel snappier on small, well-defined requests or while you are chatting with it, and will work for longer on complex tasks like big refactors.

The result is a remarkable capability: GPT-5-Codex responds much faster on easy tasks yet thinks longer to solve harder ones. This has significant implications: answers with fewer tokens are faster and cheaper, and the context window doesn’t get used up as quickly, so more can be done with a given memory budget.
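To make the token arithmetic concrete, here is a back-of-the-envelope sketch in Python. All of the numbers are made up for illustration: the price per million output tokens, the generation speed, the context window size, and the token counts of the two runs are assumptions, not figures from OpenAI or DeepMind. The point is only that cost, latency, and context use all scale linearly with tokens generated.

```python
from dataclasses import dataclass


@dataclass
class ReasoningRun:
    reasoning_tokens: int  # tokens spent "thinking" before the answer
    answer_tokens: int     # tokens in the final answer

    @property
    def output_tokens(self) -> int:
        return self.reasoning_tokens + self.answer_tokens


def cost_usd(run: ReasoningRun, price_per_million_usd: float) -> float:
    """Cost of the generated tokens at a flat per-token price."""
    return run.output_tokens * price_per_million_usd / 1_000_000


def latency_s(run: ReasoningRun, tokens_per_second: float) -> float:
    """Time to generate all tokens at a constant decoding speed."""
    return run.output_tokens / tokens_per_second


def context_fraction(run: ReasoningRun, context_window: int) -> float:
    """Share of the context window consumed by this run's output."""
    return run.output_tokens / context_window


# Hypothetical figures, chosen only to keep the arithmetic easy to follow.
PRICE_PER_MILLION_USD = 10.0
TOKENS_PER_SECOND = 100.0
CONTEXT_WINDOW = 200_000

verbose = ReasoningRun(reasoning_tokens=20_000, answer_tokens=500)
efficient = ReasoningRun(reasoning_tokens=5_000, answer_tokens=500)

for name, run in [("verbose", verbose), ("efficient", efficient)]:
    print(
        f"{name}: {run.output_tokens} tokens, "
        f"${cost_usd(run, PRICE_PER_MILLION_USD):.3f}, "
        f"{latency_s(run, TOKENS_PER_SECOND):.0f} s, "
        f"{context_fraction(run, CONTEXT_WINDOW):.1%} of context"
    )
```

Under these assumed numbers, cutting reasoning tokens from 20,000 to 5,000 reduces cost, latency, and context use by the same factor, since all three are proportional to total output tokens.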

Conclusion: Another AI Inflection Point

This triumph of OpenAI and DeepMind AI models at the 2025 ICPC marks a new inflection point for AI. AI is now besting humans fully on competitive programming; AI is superior in ultra-competitive, creative problem-solving. It’s an astounding demonstration of AI capabilities.

The most impressive part of it is that publicly available AI models, Gemini Deep Think and GPT-5, handled most problems.

Achieving these results with general AI reasoning models has important implications for using AI across a range of scientific and business applications. It suggests that fine-tuning or building bespoke AI models is not needed for most niche applications. General AI models with powerful reasoning can handle more difficult tasks than ever before.

If anyone thought there would be a pause on AI progress now, think again. AI is getting faster, more efficient, and more intelligent, at a rapid clip, and the Fall 2025 season of AI releases has just begun.