Apple gets Intelligence

Debrief on Apple WWDC's Apple Intelligence Announcement

Apple Intelligence - What Is It?

The centerpiece of this year’s Apple WWDC is Apple Intelligence, Apple’s take on putting AI throughout its product stack: hardware, OS, and applications.

Apple has billed it as “AI for the rest of us” and described Apple Intelligence (AI, get it?) in these terms:

a personal intelligence system integrated deeply into iOS 18, iPadOS 18, and macOS Sequoia.

The WWDC keynote covered many non-AI updates to iOS, apps, and more, but we focus here on the AI features, specifically the Apple Intelligence rollout.

The Apple Intelligence announcement highlighted AI-enabled features across Apple’s ecosystem of applications, powered by AI models running both on-device and on a private cloud built for AI workloads. However, the main highlight was Apple’s Siri upgrade, and its use of both Apple’s own AI models and OpenAI’s ChatGPT to deliver a real AI experience on Apple devices.

Apple Intelligence for Writing and Image Generation

The Apple Intelligence part of the keynote presented capabilities along several dimensions: language, images, actions, personal context, and privacy.

Language: Writing applications get an AI boost, with features such as rewriting text, changing tone, proofreading, and more. These capabilities become convenient native features in Apple’s apps.

Emails and notifications: AI improves notification management and email. Mail shows AI-generated summaries instead of the first few lines of each message, and time-sensitive emails (a dinner invitation for tonight, flight details) are prioritized at the top. The updated notification system uses Apple’s LLM to judge whether a notification is urgent or important and flags it accordingly.
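The following minimal Python sketch shows how an urgency classifier could feed notification prioritization. Apple has not published how its classifier works. Here a keyword heuristic is a hypothetical stand-in for the LLM, and `Notification`, `urgency_score`, and `prioritize` are illustrative names, not Apple APIs.

```python
from dataclasses import dataclass

@dataclass
class Notification:
    app: str
    text: str

# Hypothetical stand-in for Apple's on-device LLM: a simple keyword heuristic.
URGENT_HINTS = ("tonight", "today", "flight", "boarding", "gate", "urgent")

def urgency_score(n: Notification) -> int:
    """Count how many urgency hints appear in the notification text."""
    text = n.text.lower()
    return sum(hint in text for hint in URGENT_HINTS)

def prioritize(notifications: list[Notification]) -> list[Notification]:
    """Most urgent first; sorted() is stable, so ties keep arrival order."""
    return sorted(notifications, key=urgency_score, reverse=True)

inbox = [
    Notification("News", "Your weekly digest is ready"),
    Notification("Mail", "Dinner tonight at 7?"),
    Notification("Airline", "Flight UA12 is boarding at gate 4"),
]
for n in prioritize(inbox):
    print(n.app, urgency_score(n), n.text)
```

In a real system, only `urgency_score` would change: the model's judgment replaces the keyword count, and the sorting step stays the same.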

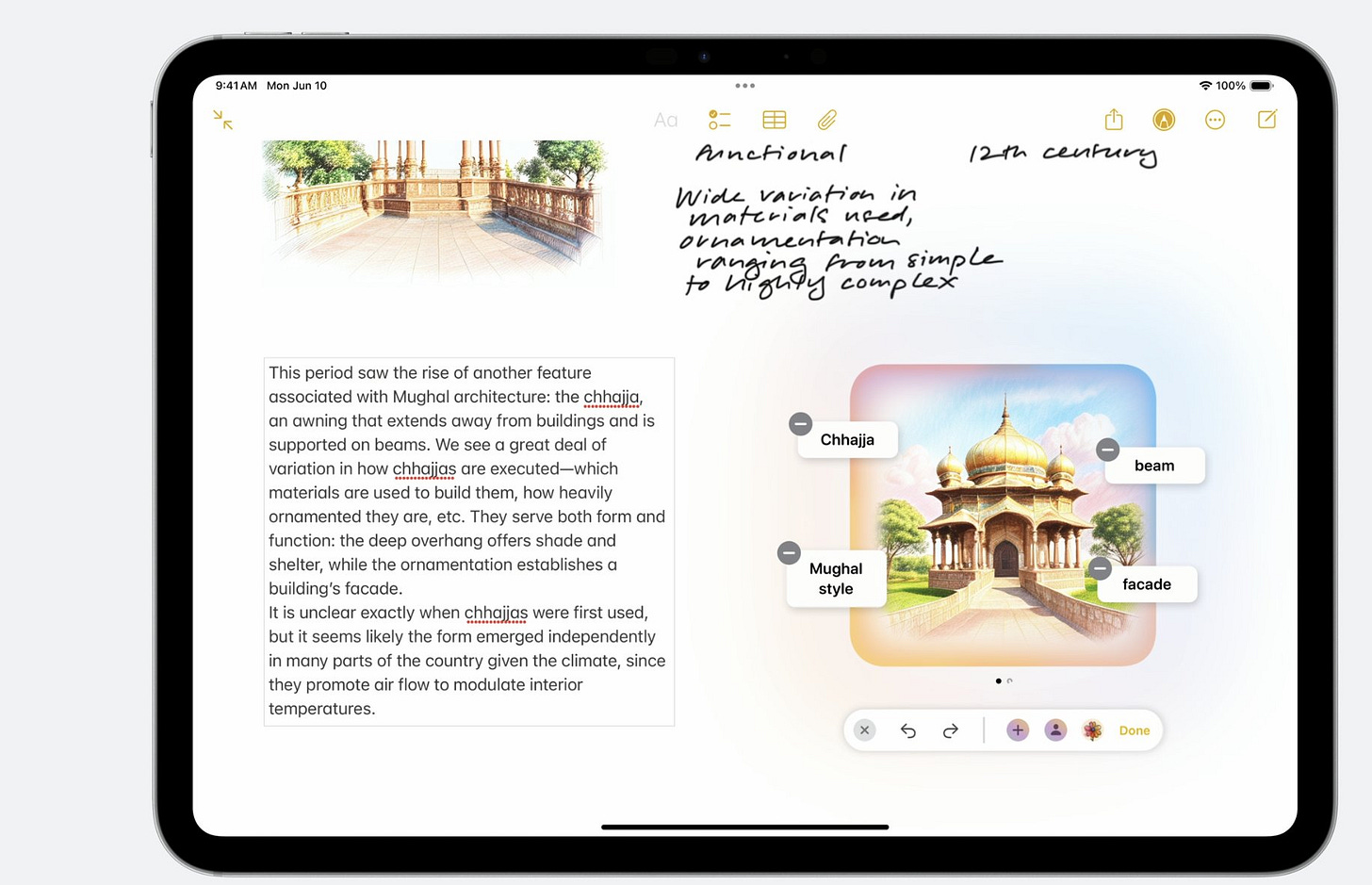

Images: Personalized image generation now appears in Messages, Notes, Freeform, and Keynote. Image Wand lets you sketch something and then turn it into a more complete picture. You can also auto-generate images from the text in a document.

Image Playground is a stand-alone app where users can create original images in several styles, such as sketch. Genmoji is an AI-based emoji generator that creates custom emoji you can share in Messages.

These image generation and writing features aren’t particularly novel. Meta offers similar custom emoji generation, many AI image generators already exist, and ChatGPT and other AI writing tools are widely available.

What is new is plugging these features directly into Apple’s apps and devices, plus Apple’s twist on how it delivers AI. Apple leans on local, on-device AI models to provide personal context and privacy, and connects Siri and apps together via actions (App Intents).

Privacy & Personal Context

Apple is touting a vision of personal AI that leads with privacy.

Personal context: Apple Intelligence can process the information on your device to understand your personal context. One way it does this is through an ‘on-device semantic index’ of your data. Relevant items are retrieved from this index and fed to the model as context, a form of retrieval-augmented generation (RAG).
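The retrieve-then-generate pattern behind a semantic index can be sketched in a few lines of Python. This is a toy under stated assumptions. Bag-of-words vectors stand in for the learned embeddings a real semantic index would use, and `SemanticIndex` and `build_prompt` are hypothetical names. Apple has not published its index design.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector.
    A real semantic index would use a learned embedding model (assumption)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticIndex:
    """Hypothetical on-device index over personal items (messages, notes, events)."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

def build_prompt(index: SemanticIndex, question: str) -> str:
    """RAG step: prepend the retrieved personal context to the user's question."""
    context = "\n".join(f"- {t}" for t in index.search(question))
    return f"Context:\n{context}\n\nQuestion: {question}"

index = SemanticIndex()
index.add("Mom's flight lands at 5:30 pm on Friday")
index.add("Dentist appointment moved to Tuesday")
index.add("Team lunch at the taco place")
print(build_prompt(index, "when does mom's flight land"))
```

Only the small retrieved context is passed to the model, not the whole index. That is why the approach fits the on-device, privacy-first design described above.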

Apple’s demos described its AI as a “personal intelligence system” and emphasized highly personalized use cases. At the same time, Apple believes that the Apple Intelligence ‘personal context’ goes hand-in-hand with privacy. Apple argues that servers can help with AI but can also store or misuse your data, so it leans into on-device AI capabilities.

Apple touts on-device AI processing, using Apple silicon such as its M-series chips to run its “highly capable LLMs and diffusion models” locally. Running on-device has several advantages if the local hardware can handle it: it has low latency, gives a better user experience, and enables privacy. An iPhone can assure privacy by keeping data on the device.

However, the most advanced AI models can’t run locally. To scale up AI models while protecting privacy, Apple extends privacy into the cloud with Private Cloud Compute, running on secure Apple servers. Apple says these servers run a subset of macOS plus dedicated AI tooling on Apple’s own silicon, and are used exclusively to run its AI model workloads.

Apple calls this a “brand new standard for privacy in AI.” It’s a smart move that plays to Apple’s strength: Apple controls its platform and ecosystem more completely than its competitors do.

Siri Gets AI

Apple presented a complete overhaul of Siri, with much better natural-language understanding and more capabilities, thanks to its new AI models:

With richer language-understanding capabilities, Siri is more natural, more contextually relevant, and more personal, with the ability to simplify and accelerate everyday tasks.

The new AI-enhanced Siri can now:

Follow along if users stumble over words.

Understand both text and voice, so users can communicate in the best way.

Have on-screen awareness. If a friend texts you an address, you can ask Siri to add it to your contacts.

Take actions across many apps, such as booking meetings or pulling up travel plans.

Provide device help, drawing on extensive knowledge of product features and settings.

Siri uses Apple’s own AI models by default, but it can bring in OpenAI’s ChatGPT when needed for some tasks.

Actions and App Intents

Actions are perhaps the most important upgrade to Siri and to Apple Intelligence overall. With actions, Siri can use apps as tools and work across them seamlessly, so users no longer have to navigate each app’s interface themselves.

Commands like “Show me photos that include so-and-so” or “Plan a hike at this park using Maps, Calendar, and Safari” require calling into one or more applications.

App Intents connect apps to Siri: App Intents is the API between Siri’s natural-language interface and the application being called. This turns Siri into an action model, one that can carry out requests by invoking apps rather than only answering in text.

Developers use the App Intents API to expose their apps’ actions to Siri. This enables tool use from Siri across both Apple’s native apps and third-party apps.
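The real App Intents framework is a Swift API: an app declares types conforming to the `AppIntent` protocol, each with a `perform()` method. The tool-use pattern it enables can be sketched language-neutrally as a registry of app actions plus a router that maps a request to one of them. The Python below is hypothetical throughout: `Intent`, `register`, `choose_intent`, and `handle` are not Apple APIs. A word-overlap score stands in for the language model that would pick the action and fill in its parameters.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Intent:
    """Hypothetical stand-in for an app-declared action (not Apple's AppIntents API)."""
    app: str
    name: str
    description: str
    handler: Callable[..., str]

REGISTRY: dict[str, Intent] = {}

def register(intent: Intent) -> None:
    """Apps register their actions so the assistant can discover them."""
    REGISTRY[f"{intent.app}.{intent.name}"] = intent

def choose_intent(utterance: str) -> Intent:
    """Toy router: pick the intent whose description shares the most words with the request.
    In Siri, a language model would make this choice and extract the parameters."""
    words = set(utterance.lower().split())
    return max(REGISTRY.values(), key=lambda i: len(words & set(i.description.lower().split())))

def handle(utterance: str, **params) -> str:
    """Route the request to an app action and run it with the given parameters."""
    return choose_intent(utterance).handler(**params)

register(Intent("Photos", "search", "show photos that include a person",
                lambda person: f"Showing photos of {person}"))
register(Intent("Calendar", "add_event", "add an event to my calendar",
                lambda title, day: f"Added '{title}' on {day}"))

print(handle("Show me photos that include Sam", person="Sam"))
print(handle("Add dinner to my calendar", title="Dinner", day="Friday"))
```

The key design point is that the assistant only needs each app’s declared actions and descriptions, not its user interface. That is what would let a single natural-language front end drive many apps.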

With App Intents, Siri could become the main interface for smartphone apps, with natural voice and text replacing individual app interfaces with a single Siri interface. This is the vision the Rabbit R1 promised but didn’t deliver; with App Intents, Siri eventually will.

ChatGPT and OpenAI’s Partnership

Alongside WWDC, OpenAI announced its partnership with Apple. ChatGPT is both an add-on integrated into Apple Intelligence features and built into Siri:

Apple is integrating ChatGPT into experiences within iOS, iPadOS, and macOS, allowing users to access ChatGPT’s capabilities—including image and document understanding—without needing to jump between tools.

Siri is the entry point: it suggests bringing in ChatGPT and asks the user before calling it. This is a loose integration, not a tight one.
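The three-tier flow described so far can be summarized as a routing policy. The tiers are the on-device model by default, Private Cloud Compute for heavier work, and ChatGPT only after the user agrees. The Python sketch below assumes task attributes (`needs_world_knowledge`, a numeric `complexity`) and a `local_limit` threshold that are purely hypothetical. Apple has not published its actual routing criteria.

```python
from enum import Enum
from typing import Callable

class Route(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "Private Cloud Compute"
    CHATGPT = "ChatGPT"
    DECLINED = "declined by user"

def route(needs_world_knowledge: bool, complexity: int,
          ask_user: Callable[[str], bool], local_limit: int = 3) -> Route:
    """Hypothetical policy: prefer the on-device model, escalate heavy work to
    Private Cloud Compute, and call ChatGPT only with explicit user consent."""
    if needs_world_knowledge:
        # Consent gate: nothing is sent to OpenAI unless the user says yes.
        if ask_user("Do you want me to use ChatGPT for that?"):
            return Route.CHATGPT
        return Route.DECLINED
    if complexity <= local_limit:
        return Route.ON_DEVICE
    return Route.PRIVATE_CLOUD

print(route(False, 1, ask_user=lambda prompt: True))   # simple task
print(route(False, 5, ask_user=lambda prompt: True))   # heavier task
print(route(True, 2, ask_user=lambda prompt: False))   # user declines ChatGPT
```

Passing the consent check in as a callback keeps the policy testable. It also makes the “loose integration” point concrete: the ChatGPT branch is only reachable through the user prompt.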

Since Apple has criticized cloud-based LLMs as less private, what about privacy? And what about ChatGPT’s preferences and “memory” feature? OpenAI says:

Privacy protections are built in when accessing ChatGPT within Siri and Writing Tools—requests are not stored by OpenAI, and users’ IP addresses are obscured. Users can also choose to connect their ChatGPT account, which means their data preferences will apply under ChatGPT’s policies.

OpenAI is providing the ChatGPT integration for Apple’s platforms, but it won’t be alone: Apple has confirmed plans to work with Google Gemini.

Apple’s Foundation AI Models for On-Device and Private Cloud AI

Apple also introduced the foundation AI models that power its AI features.

To get good performance and specialization from an LLM small enough to fit on an iPhone, Apple turned to fine-tuning with adapters.

The local model is a 3B-parameter LLM that uses adapters trained for each specific feature. The adapters use LoRA (low-rank adaptation), which specializes the foundation LLM with a small set of additional weights. This saves space, since each feature needs only a small fraction of the full model’s parameters. For the 3B LLM, each adapter’s parameter set requires tens of megabytes.
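The “tens of megabytes” figure follows from LoRA arithmetic. An adapter on a d_out × d_in weight adds two low-rank matrices, B (d_out × r) and A (r × d_in), so it costs r · (d_out + d_in) parameters. The Python sketch below assumes a hypothetical transformer shape: hidden size 3072, MLP size 8192, 28 layers, and rank 16, with adapters on all attention and MLP projections. Apple’s actual configuration may differ.

```python
def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """A LoRA adapter on a d_out x d_in weight adds B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)

# Hypothetical shape for a ~3B-parameter model; not Apple's published config.
HIDDEN, FFN, LAYERS, RANK = 3072, 8192, 28, 16

per_layer = (
    4 * lora_params(HIDDEN, HIDDEN, RANK)   # attention q, k, v, o projections
    + 2 * lora_params(FFN, HIDDEN, RANK)    # MLP up and gate projections
    + lora_params(HIDDEN, FFN, RANK)        # MLP down projection
)
adapter_params = LAYERS * per_layer
adapter_mb = adapter_params * 2 / 1e6       # 16-bit adapter weights, 2 bytes each
fraction = adapter_params / 3e9             # share of the 3B base model

print(f"{adapter_params:,} adapter params, ~{adapter_mb:.0f} MB, {fraction:.2%} of 3B")
```

Under these assumptions, each adapter is about 26 million parameters, roughly 52 MB at 16 bits and under 1% of the base model. That is why many feature-specific adapters can ship alongside one shared base model.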

To save space further, they compress models through quantization:

To maintain model quality, we developed a new framework using LoRA adapters that incorporates a mixed 2-bit and 4-bit configuration strategy — averaging 3.5 bits-per-weight — to achieve the same accuracy as the uncompressed models.
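The 3.5 bits-per-weight average pins down the mix. If weights use only 2 or 4 bits and a fraction f uses 4 bits, the average is 2 + 2f, so f = 0.75. The Python sketch below does that arithmetic and estimates the weight storage for a 3B-parameter model. It ignores quantization metadata such as per-group scales, which adds some overhead in practice.

```python
def mixed_bits(frac_high: float, low: int = 2, high: int = 4) -> float:
    """Average bits per weight when frac_high of weights use `high` bits, the rest `low`."""
    return frac_high * high + (1 - frac_high) * low

def frac_for_average(target: float, low: int = 2, high: int = 4) -> float:
    """Solve target = low + f * (high - low) for f."""
    return (target - low) / (high - low)

def weights_gb(params: float, bits: float) -> float:
    """Storage for the weights alone, in gigabytes (1e9 bytes)."""
    return params * bits / 8 / 1e9

f = frac_for_average(3.5)        # share of weights kept at 4 bits
print(f"4-bit share: {f:.0%}, average: {mixed_bits(f)} bits")
print(f"3B at 16-bit: {weights_gb(3e9, 16):.2f} GB, at 3.5-bit: {weights_gb(3e9, 3.5):.2f} GB")
```

Going from 16-bit to an average of 3.5 bits shrinks the base model’s weights from about 6 GB to about 1.3 GB, a size that fits comfortably in a phone’s memory.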

Their AI image generation diffusion model similarly uses an adapter for each style.
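Per-feature or per-style adapters work because the base weights stay fixed. Each adapter contributes a low-rank update, W′ = W + scale · (B A), that can be applied when a feature or style is selected and dropped afterward. The pure-Python sketch below shows this with a 2 × 2 base matrix and rank-1 adapters. The style names and values are hypothetical, and real models apply this to every adapted layer.

```python
Matrix = list[list[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x m) and b (m x p)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def apply_adapter(w: Matrix, b: Matrix, a: Matrix, scale: float = 1.0) -> Matrix:
    """LoRA update W' = W + scale * (B @ A); returns a new matrix, W is untouched."""
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]

# One shared base weight plus a small rank-1 adapter (B: 2x1, A: 1x2) per style.
BASE: Matrix = [[1.0, 0.0], [0.0, 1.0]]
ADAPTERS = {  # hypothetical style names and values
    "sketch": ([[1.0], [0.0]], [[0.0, 0.5]]),
    "animation": ([[0.0], [1.0]], [[0.5, 0.0]]),
}

def weights_for(style: str) -> Matrix:
    """Effective weights for a style: the shared base plus that style's adapter."""
    b, a = ADAPTERS[style]
    return apply_adapter(BASE, b, a)

print(weights_for("sketch"))
print(weights_for("animation"))
```

Swapping styles only means swapping the small B and A matrices. The large base weights are loaded once and shared across every style.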

Everything running locally or in Apple’s Private Cloud Compute is an Apple model. Even though these are not leading frontier AI models at this time, Apple has the infrastructure to develop and deploy in-house AI models that address most customer needs.

Thoughts & Summary

With the Apple Intelligence launch, Apple is officially in the AI game.

Some aspects exceeded expectations; others were less impressive. Apple is not leading in frontier AI models, but building the best AI assistant takes much more than having the best frontier model.

Apple’s key differentiators are their focus on personal data, privacy, practical user experience features, local AI models, devices for AI, and external integration (OpenAI) as needed.

Consumer AI is about the user experience, and on that score Apple delivered. Siri is getting much better, and with App Intents, Siri will evolve into an AI-enabled natural language assistant. The OpenAI integration is important, but long-term, actions and integration with apps may be more important to making the Siri AI assistant useful.

The Siri updates will come to iPads and Macs as well as iPhones, but only on recent OS versions and devices. This may drive device upgrades.

Apple’s push for local AI with its fine-tuned 3B models is a great strategy. In the long term, local AI models will become capable enough to take on most tasks as edge-device chips get better at handling AI workloads.

Apple is leading in edge-device chips for AI, with the latest M4 being the best edge-device chip for AI right now. Local AI models lean into Apple’s strengths.

Apple’s real advantage is its distribution: there are around two billion active Apple devices, which dwarfs most other consumer distribution channels. Apple’s customers will adopt the many ‘low-hanging fruit’ AI capabilities that Apple offers, and for most of them that will be enough.

Is being behind in frontier AI models a problem for Apple? No. Apple will build in-house AI models for use cases that don’t need bleeding-edge AI models. Where it needs frontier AI models, Apple can play the leading AI model makers off each other, since they would all love access to Apple’s billions of devices. For now, OpenAI is slotted in as Apple’s top-of-the-line AI model, a win for OpenAI.

Apple Intelligence will be in beta next month, while full release is this fall. Developers can integrate AI features in beta soon. Whether Apple Intelligence as a product lives up to the announcement remains to be seen.

Postscript: Elon Gets It Wrong

Elon Musk is threatening to ban iPhones from all his companies over Apple’s newly announced OpenAI integration with Siri. Musk wrote on X that if Apple integrates OpenAI at the OS level, Apple devices would be banned from his businesses, and visitors would have to check their Apple devices at the door, where they would be “stored in a Faraday cage.”

But he’s wrong. ChatGPT is a bolt-on to Siri, not deeply integrated into the OS, and it is called only when the user agrees. Only Apple’s own AI models are deeply integrated.