Better AI Models, New AI Evals, and Scaling RL Training

New AI benchmarks have emerged for useful work and agentic AI: GDPval, GAIA2, and SWE-Bench Pro. AI models are getting faster, cheaper, better, and more agentic by scaling RL post-training.

Trends in the News

My most recent AI weekly news report was packed with new AI models and new AI product releases. After a lull in August, the fall AI release season is in full swing. There was so much news that I had to skip noteworthy progress in AI evaluations and other items.

Looking only at the dizzying number of new AI products might cause us to miss the forest for the trees. Each new release is an incremental improvement on what came before, but taking a higher-level view helps us make out the overall trajectory and trends in AI.

This article shares two key trends and meta-stories behind recent AI news:

New evaluations are replacing old, saturated benchmarks and cover what matters now: useful work (GDPval) and agentic AI (GAIA2, SWE-Bench Pro).

AI models are getting faster, cheaper, better, and more useful for agentic AI – all at the same time. Scaling RL post-training is paying off.

Evaluating Useful AI: GDPval

Existing AI benchmarks are getting saturated; the latest example is the Qwen team's claim that Qwen3-Max-Thinking scores 100% on AIME 25. Most existing benchmarks don't capture the complexity and richness of the real-world, long-horizon tasks that are most useful, so they do a poor job of distinguishing among the latest frontier AI models.

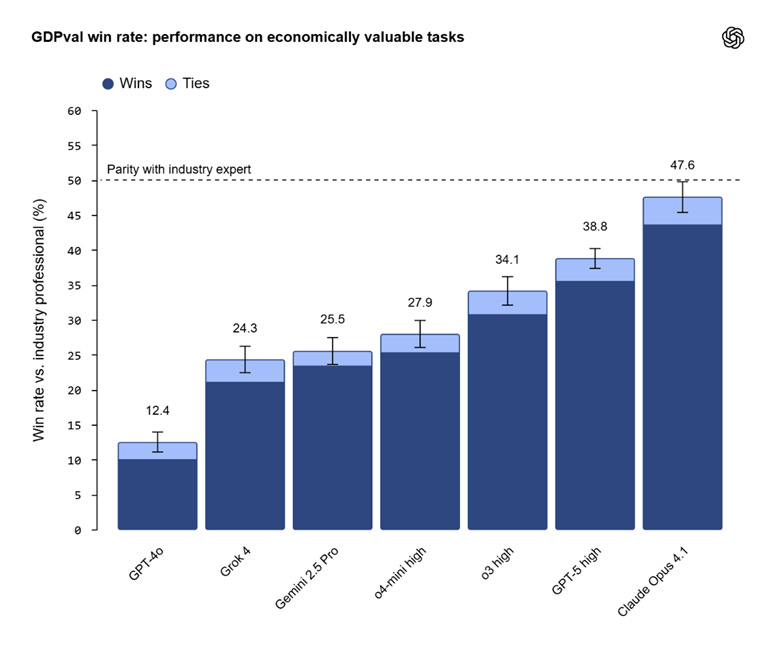

To better track AI models on real-world use cases, OpenAI introduced GDPval, a new evaluation that measures models on economically valuable tasks across 44 occupations. OpenAI sees GDPval as the next step in a progression of AI benchmarks toward increasing real-world complexity: each generation of benchmarks (MMLU, SWE-Bench, SWE-Lancer, and now GDPval) has captured more complex and valuable tasks.

GDPval differs from other benchmarks by being more complex in its inputs, outputs, and tasks. GDPval task inputs are not just text prompts; they include reference files and context. GDPval evaluates work-product outputs, which could be documents, slides, diagrams, spreadsheets, or multimedia. Specifically, GDPval measures over a thousand real-world tasks:

The GDPval full set includes 1,320 specialized tasks (220 in the gold open-sourced set), each meticulously crafted and vetted by experienced professionals with over 14 years of experience on average from these fields. Every task is based on real work products, such as a legal brief, an engineering blueprint, a customer support conversation, or a nursing care plan.

GDPval thus evaluates AI on tasks with practical relevance across diverse work contexts. OpenAI tested various frontier AI models and found, interestingly enough, that Claude Opus 4.1, not GPT-5, was the highest-performing AI model on this benchmark.

Sharing results, OpenAI notes that “frontier models can complete GDPval tasks roughly 100x faster and 100x cheaper than industry experts,” but that metric doesn’t include the overhead of human oversight.
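To see why the oversight caveat matters, here is a small worked calculation. It is a sketch with made-up numbers (the 10-hour task and the review times are hypothetical, not GDPval data): once a human has to review the model's output, the review time sits in the denominator alongside the model's run time, and the raw 100x figure shrinks quickly.

```python
# Sketch: how human review overhead erodes a raw "100x faster" speedup.
# All numbers below are hypothetical, chosen only to illustrate the arithmetic.

def effective_speedup(expert_hours: float, model_hours: float, review_hours: float) -> float:
    """Speedup of a model-drafts/human-reviews workflow vs. an expert working alone."""
    return expert_hours / (model_hours + review_hours)


if __name__ == "__main__":
    expert_hours = 10.0               # hypothetical: an expert needs 10 h for the task
    model_hours = expert_hours / 100  # the raw "100x faster" model time (0.1 h)
    for review_hours in (0.0, 0.5, 2.0):
        s = effective_speedup(expert_hours, model_hours, review_hours)
        print(f"review {review_hours:.1f} h -> {s:.1f}x speedup")
```

With no review the speedup is the full 100x, but just half an hour of review brings it below 17x, and two hours brings it under 5x. This simple model also ignores the time to redo work when a reviewer rejects the output, which would lower the effective speedup further.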

We see both good and bad outcomes from this massive output boost. On the plus side, AI is now good enough to tackle many economically useful tasks, if you can manage inputs, context, and expectations. On the negative side, AI can quickly produce vague, cliché-filled reports with little real value: AI 'workslop'.

GDPval may help separate AI 'slop' from genuinely useful output, since it relies on expert human graders who blindly compare model deliverables against those produced by industry professionals.
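OpenAI's headline GDPval numbers come from these blinded pairwise comparisons: for each task, a grader judges whether the model's deliverable is better than, as good as, or worse than the expert's, and results are reported as the share of tasks where the model wins or ties. The sketch below shows that tally; the label strings ("better", "as_good", "worse") and the sample grades are hypothetical, not GDPval's actual data format.

```python
from collections import Counter
from typing import Iterable

# Hypothetical grade labels from a blinded pairwise comparison: each entry
# records whether the model's deliverable was judged better than, as good as,
# or worse than the human expert's deliverable for one task.
VALID = {"better", "as_good", "worse"}


def win_rates(grades: Iterable[str]) -> dict[str, float]:
    """Return win, tie, and win-or-tie rates for the model vs. human experts."""
    counts = Counter(grades)
    unknown = set(counts) - VALID
    if unknown:
        raise ValueError(f"unexpected grade labels: {unknown}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no grades supplied")
    wins, ties = counts["better"], counts["as_good"]
    return {
        "win_rate": wins / total,
        "tie_rate": ties / total,
        "win_or_tie_rate": (wins + ties) / total,
    }


if __name__ == "__main__":
    sample = ["better", "worse", "as_good", "worse", "better", "worse", "worse", "as_good"]
    print(win_rates(sample))
```

Counting ties alongside wins reflects the question the benchmark asks: is the model's work at least as good as what a professional would hand in?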

GDPval can be considered a benchmark for AGI, since many define AGI as AI that can do as well as the average human on real-world tasks. Judging by the progress of AI models on this benchmark, AGI is not far off.

Updated Evaluations for Agentic AI: GAIA2 and SWE-Bench Pro

Hugging Face announced the GAIA2 agentic AI benchmark, an update to the original GAIA benchmark that adds a thousand new human-authored scenarios covering execution, search, ambiguity handling, temporal reasoning, agent collaboration, and adaptability.

GAIA2 runs on Meta's Agents Research Environments (ARE) platform, which can also be used beyond GAIA2 for evaluating and researching AI agent behavior.

The motivation for GAIA2 is the advance in AI agent capabilities:

In 2 years, the easiest levels have become too easy for models, and the community is coming close to solving the hardest questions, so it was time for an entirely new and harder agent benchmark!

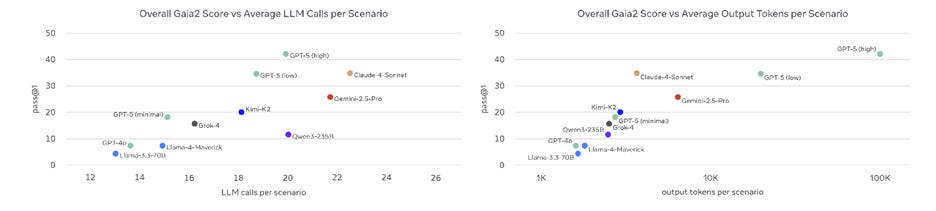

The GAIA2 benchmark includes time and budget constraints and forces agents to chain steps, making many tasks challenging and comparable to real-world use. Results show that AI models that plan, iterate, and handle long-horizon tasks well do best on GAIA2; GPT-5 (high) came out on top.

Scale AI published the SWE-Bench Pro benchmark and dataset with public and commercial leaderboards. Scale AI also shared a technical paper on SWE-Bench Pro.

SWE-Bench Pro updates the original SWE-Bench coding benchmark to be a “rigorous and realistic evaluation of AI agents for software engineering.” The benchmark “features long-horizon tasks that may require hours to days for a professional software engineer to complete.”

SWE-Bench Pro consists of 1,865 problems spanning diverse types of software engineering tasks. The benchmark is partitioned into a public set, a held-out set, and a commercial set drawn from proprietary repositories that are not publicly available (to avoid contamination). The SWE-Bench Pro public dataset card lists 731 test tasks with patches and problem statements.

The SWE-Bench Pro leaderboard shows AI models scoring much lower overall than on SWE-Bench; the top-scoring AI model on SWE-Bench Pro is GPT-5, at about 23%.
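Scores like that 23% are resolve rates. In SWE-Bench-style evaluation, a task counts as resolved only if, after the model's patch is applied, the "fail-to-pass" tests (the ones the reference fix makes pass) now pass and the "pass-to-pass" tests (existing behavior) still pass. Below is a simplified sketch of that rule; the `TaskResult` shape is hypothetical, and a real harness runs each repository's test suite in an isolated container rather than receiving a ready-made dictionary.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Test outcomes after applying a model-generated patch (hypothetical shape)."""
    test_status: dict[str, bool]  # test name -> did it pass?
    fail_to_pass: list[str]       # tests the reference fix makes pass
    pass_to_pass: list[str]       # tests that must keep passing (no regressions)


def is_resolved(r: TaskResult) -> bool:
    # Every required test must pass; a missing result counts as a failure.
    required = r.fail_to_pass + r.pass_to_pass
    return all(r.test_status.get(name, False) for name in required)


def resolve_rate(results: list[TaskResult]) -> float:
    """Percentage of tasks resolved, the usual SWE-Bench-style headline score."""
    if not results:
        return 0.0
    return 100.0 * sum(is_resolved(r) for r in results) / len(results)


if __name__ == "__main__":
    fixed = TaskResult({"test_bug": True, "test_old": True}, ["test_bug"], ["test_old"])
    regressed = TaskResult({"test_bug": True, "test_old": False}, ["test_bug"], ["test_old"])
    unfixed = TaskResult({"test_bug": False, "test_old": True}, ["test_bug"], ["test_old"])
    print(f"{resolve_rate([fixed, regressed, unfixed]):.1f}% resolved")
```

The all-or-nothing rule is why long-horizon tasks are so punishing: a patch that fixes the bug but breaks one existing test (the `regressed` case) earns no credit.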

Faster, Better, Cheaper

The story in AI models this month is a wave of strong releases showing advances in speed, quality, and cost-efficiency all at once. The story behind the story is that AI labs are scaling RL post-training aggressively, gaining a lot of ground in model performance without having to expand model footprints.

New AI models show this trend: Grok 4 Fast has broken records in price-performance; it's almost as good as Grok 4 but touts being 47x cheaper. Google's 2509 updates to Gemini 2.5 Flash and Flash-Lite also follow this trend.
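A headline like "47x cheaper" is a per-task figure, and cost per task depends on two things: the price per token and how many tokens the model spends on the task. The sketch below uses hypothetical prices and token counts (not published figures for any model) to show how the two factors multiply.

```python
# Sketch: cost per task = (tokens used per task) x (price per token).
# The prices and token counts below are hypothetical placeholders.

def cost_per_task(tokens_per_task: float, usd_per_million_tokens: float) -> float:
    """Dollar cost of one task at a given per-million-token price."""
    return tokens_per_task * usd_per_million_tokens / 1_000_000


def cost_ratio(big: tuple[float, float], small: tuple[float, float]) -> float:
    """How many times cheaper `small` is per task; each tuple is (tokens, $ per 1M tokens)."""
    return cost_per_task(*big) / cost_per_task(*small)


if __name__ == "__main__":
    big_model = (10_000, 10.00)  # hypothetical: 10k tokens per task at $10 per 1M
    fast_model = (5_000, 0.40)   # hypothetical: half the tokens at $0.40 per 1M
    print(f"{cost_ratio(big_model, fast_model):.0f}x cheaper per task")
```

Here a 25x lower token price combined with 2x better token efficiency yields a 50x lower cost per task. That compounding is how a model that is "almost as good" can win decisively on price-performance.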

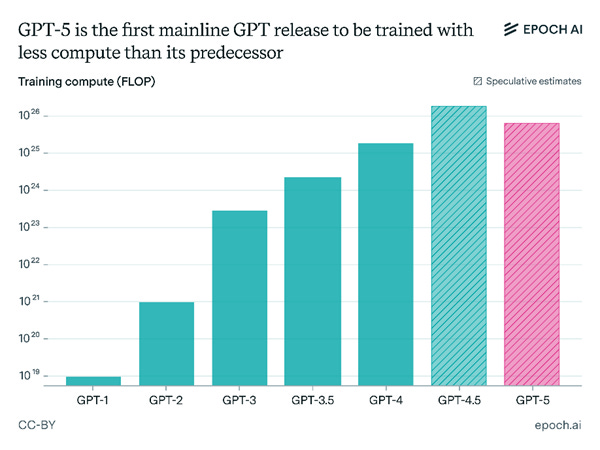

We are getting more with less thanks to RL post-training. As Epoch AI Research recently noted, it's why GPT-5 could outperform GPT-4.5 despite being trained with less compute:

The invention of reasoning models made it possible to greatly improve performance by scaling up post-training compute. This improvement is so great that GPT-5 outperforms GPT-4.5 despite having used less training compute overall.

Meta's Llama 4 disappointed largely because Meta failed to move into scaling RL post-training and relied too much on scaling pre-training; Meta's training process fell behind in the RL post-training era.

RL post-training has been the 'low-hanging fruit' since OpenAI's o1 model. xAI scaled RL post-training 10x from Grok 3 to Grok 4, to the point where RL training compute was on par with pre-training compute. The bet on RL training paid off.

RL post-training will likely scale by perhaps another order of magnitude, significantly improving AI model performance without increasing model size. At some point, though, RL training will consume far more compute than pre-training, and RL scaling will run out of steam.
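Simple arithmetic shows why RL scaling eventually runs out of steam. The sketch below holds pre-training compute fixed at 1.0 (relative units, not real FLOP counts) and starts from the RL-equals-pre-training parity point described above for Grok 4.

```python
# Sketch: share of total training compute spent on RL post-training as RL scales.
# Units are relative; pre-training compute is fixed at 1.0.

def rl_share(pretrain: float, rl: float) -> float:
    """Fraction of total training compute spent on RL post-training."""
    return rl / (pretrain + rl)


if __name__ == "__main__":
    pretrain = 1.0
    for rl in (0.1, 1.0, 10.0):  # 10x below parity, parity, 10x beyond parity
        total = pretrain + rl
        print(f"RL = {rl:>4}x pre-training -> total {total:>4.1f}, RL share {rl_share(pretrain, rl):.0%}")
```

Going from 0.1 to parity only doubles total compute (1.1 to 2.0), which is why RL was cheap to scale at first. Another 10x beyond parity makes RL about 91% of the budget and multiplies total compute by 5.5 (2.0 to 11.0); each further 10x then costs roughly 10x more total compute, with no pre-training share left to hide behind.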

… and More Agentic

RL post-training is now also being used to make AI models better for agentic AI use cases, not just reasoning. Every frontier AI model released in recent months (Claude Opus 4.1, GPT-5, gpt-oss, GLM-4.5, Kimi K2, and Qwen3) has been built for agentic AI use cases.

The makers of GLM-4.5 were transparent about their training process for this, as we shared in “GLM-4.5 and the rise of Chinese AI.”

Recently released Grok 4 Fast was explicitly trained for tool-use:

Grok 4 Fast was trained end-to-end with tool-use reinforcement learning (RL). It excels at deciding when to invoke tools like code execution or web browsing.
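Why would RL teach a model *when* to call tools? Here is a toy illustration, not xAI's actual method: suppose the reward pays 1.0 for a correct final answer and charges a small, hypothetical cost per tool call. Maximizing expected reward then means calling a tool only when it raises the chance of success by more than the call costs. An RL-trained policy doesn't compute this explicitly; it learns to behave as if it did. All probabilities and costs below are made up.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    p_correct_without_tool: float  # chance the model answers correctly unaided
    p_correct_with_tool: float     # chance of a correct answer after, e.g., running code


def expected_reward(p_correct: float, tool_calls: int, cost_per_call: float) -> float:
    # Hypothetical reward: 1.0 for a correct answer, minus a cost per tool call.
    return p_correct * 1.0 - tool_calls * cost_per_call


def should_call_tool(s: Situation, cost_per_call: float = 0.05) -> bool:
    """Call the tool only if it raises expected reward despite its cost."""
    with_tool = expected_reward(s.p_correct_with_tool, 1, cost_per_call)
    without_tool = expected_reward(s.p_correct_without_tool, 0, cost_per_call)
    return with_tool > without_tool


if __name__ == "__main__":
    easy_fact = Situation(0.98, 0.99)       # tool adds little: skip it
    big_arithmetic = Situation(0.40, 0.97)  # code execution helps a lot: call it
    print(should_call_tool(easy_fact), should_call_tool(big_arithmetic))
```

Without the per-call cost, a policy could learn to call tools reflexively; without the correctness reward, it would never call them. The balance between the two is what makes the tool-use decision learnable.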

The Qwen3-Max release announcement touted its SOTA Tau2-Bench score:

On Tau2-Bench — a rigorous evaluation of agent tool-calling proficiency — Qwen3-Max-Instruct delivers a breakthrough score of 74.8, surpassing both Claude Opus 4 and DeepSeek V3.1.

The trend is clear: AI labs are scaling RL post-training to enhance their models' agentic AI capabilities.

Conclusion

Agentic AI has been the focal point of AI progress this year. AI models are increasingly geared towards AI agent capabilities and use-cases. Every new frontier AI model release highlights the agentic capabilities of the model.

Driving this is the continued rapid scaling of RL post-training. It has enabled large gains in AI reasoning without increasing AI model sizes, a huge boost to efficiency. RL post-training has also broadened from reasoning to agentic capabilities such as tool calling.

New AI benchmarks are being developed to reflect these advances in AI models, replacing older saturated benchmarks and evaluating AI models on more agentic tasks. This includes GAIA2 and SWE-Bench Pro, but also GDPval, a benchmark geared towards complex, useful, real-world tasks.

Late to press: Anthropic has released Claude Sonnet 4.5. This article is long enough, so we will leave a detailed analysis of Claude Sonnet 4.5 for another day, but the top-line sales pitch for Claude Sonnet 4.5 is all about its agentic AI abilities:

Claude Sonnet 4.5 is the best coding model in the world. It’s the strongest model for building complex agents. It’s the best model at using computers.

Claude Sonnet 4.5 ran for 30 hours straight on an autonomous coding task. Agentic AI is reaching a whole new level, and scaling RL post-training is what's delivering those results.