Building Next-Gen AI Agents with OpenAI Tools and MCP

OpenAI releases Responses API, built-in tools, and Agents SDK. Anthropic’s Model Context Protocol (MCP) gains traction. The AI Agent ecosystem expands and improves.

The Ecosystem for Building AI Agents Expands

AI agents - AI systems that can plan and act autonomously - depend on the tools, frameworks, and standards used to build and orchestrate them.

In our previous article, “Manus and the New AI Agents,” we noted that we are beginning to see a flood of highly capable AI agents in 2025 because AI reasoning models, tools, and the overall ecosystem continue to improve. That ecosystem is now expanding to support more advanced AI agents.

AI application frameworks, such as LangChain (and its AI agent library LangGraph), AutoGen, and CrewAI, were first developed between 2022 and 2024 to integrate LLM-based workflows, connecting LLMs in prompt chains and integrating RAG (Retrieval Augmented Generation) and other capabilities (such as tool and function-calling). These frameworks enabled the first generation of LLM-based AI agents.

As AI models added capabilities such as multi-modality and tool-calling, some AI workflows have been streamlined, while more complex workflows have become possible. AI agent frameworks have moved ‘upstream’ and expanded in scope, enabling a new generation of more capable AI agents.

Some recent developments in this area include: Anthropic’s Model Context Protocol (MCP); Block’s Goose AI agent framework; and LangChain’s Agent Protocol and the AGNTCY interoperability standards. The most recent development is OpenAI’s release of new tools for building agents.

OpenAI Releases Responses API, Built-in Tools, and Agents SDK

OpenAI just released four key components to support building agents: Responses API, built-in tools (web search, file search, and computer use), Agents SDK, and observability tools. OpenAI’s release of these four components is opening up their technology stack for AI agent builders.

The Responses API is a new unified API for utilizing OpenAI’s built-in tools and models:

The Responses API is designed for developers who want to easily combine OpenAI models and built-in tools into their apps, without the complexity of integrating multiple APIs or external vendors.

With a single Responses API call, developers will be able to solve increasingly complex tasks using multiple tools and model turns.

The Responses API provides a superset of the Chat Completions API’s features: it supports single-turn chat completion and adds support for multi-turn and more complex flows. OpenAI plans to extend the Responses API so that it can replace the Assistants API, leaving a single interface schema for both simple and complex AI use cases.
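To make the "single call" idea concrete, here is a minimal sketch of what a Responses API request body might look like. It only builds the JSON and sends nothing. The endpoint URL, the `input` and `tools` field names, and the `web_search_preview` tool type reflect OpenAI's public documentation as we understand it at the time of writing; treat them as assumptions and check the current API reference. The helper name `build_responses_request` is our own.

```python
import json

# Assumed endpoint, per OpenAI's public docs at the time of writing.
RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_responses_request(prompt: str, model: str = "gpt-4o",
                            use_web_search: bool = False) -> dict:
    """Build the JSON body for one Responses API call (nothing is sent)."""
    body = {"model": model, "input": prompt}
    if use_web_search:
        # Built-in tools are requested by type; the tool type name here is
        # an assumption taken from the launch documentation.
        body["tools"] = [{"type": "web_search_preview"}]
    return body


if __name__ == "__main__":
    body = build_responses_request(
        "What changed in AI agent tooling this week?", use_web_search=True
    )
    print(f"POST {RESPONSES_URL}")
    print(json.dumps(body, indent=2))
```

The point of the sketch is that model choice, user input, and tool selection all travel in one request, rather than being spread across separate APIs.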

The Responses API includes three built-in tools: web search, file search, and computer use. By sharing the built-in tools behind agentic AI applications such as Deep Research and Operator, OpenAI is exposing capabilities that let developers build their own versions of Deep Research and Operator, as well as other AI agents:

Web Search provides up-to-date internet information, enabling flows for real-time fact-checking. OpenAI fine-tuned GPT-4o into GPT-4o-search preview to retrieve the right information from a search more accurately and cite it properly.

File Search lets an AI model leverage private data through a built-in RAG capability. It supports metadata filtering and custom reranking, and uses a fine-tuned version of GPT-4o to provide precise information.

Computer Use completes tasks for users by operating a computer. As with OpenAI Operator, it works based on screenshots; you send it a screenshot and it tells you what actions to take.
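The screenshot-in, action-out loop described for Computer Use can be sketched as follows. This is a toy illustration, not OpenAI's API: `FakeScreen`, `Action`, and `stub_model` are hypothetical stand-ins for a real environment and a real model call, and the "screenshot" is just a string.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str          # e.g. "click", "type", or "done"
    detail: str = ""


@dataclass
class FakeScreen:
    """Hypothetical stand-in for a real browser or desktop."""
    log: list = field(default_factory=list)

    def screenshot(self) -> str:
        return f"screenshot after {len(self.log)} actions"

    def execute(self, action: Action) -> None:
        self.log.append(f"{action.kind}:{action.detail}")


def stub_model(screenshot: str, plan: list) -> Action:
    """Hypothetical model: returns the next scripted action, then 'done'."""
    step = int(screenshot.split()[2])  # number of actions taken so far
    return plan[step] if step < len(plan) else Action("done")


def run_computer_use(screen: FakeScreen, plan: list, max_steps: int = 10) -> list:
    """Loop: take screenshot -> ask model for an action -> execute it."""
    for _ in range(max_steps):
        action = stub_model(screen.screenshot(), plan)
        if action.kind == "done":
            break
        screen.execute(action)
    return screen.log


if __name__ == "__main__":
    plan = [Action("click", "search box"), Action("type", "MCP")]
    print(run_computer_use(FakeScreen(), plan))
```

Note that the caller, not the model, executes each action; the `max_steps` cap is one simple way to keep such a loop from running away.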

The new Agents SDK orchestrates single-agent and multi-agent workflows. It builds on Swarm, the experimental framework for multi-agent systems that OpenAI released last year. Agents SDK comes with added support for configurable agents, types, built-in guardrails, and better tracing and observability. It is also open-source and presents itself as model-agnostic:

“The Agent SDK is open-source, allowing enterprises to mix and match different models. We don’t want to force anyone to use only OpenAI models.” - Olivier Godement.
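To illustrate the concepts the Agents SDK is said to cover (configurable agents, guardrails, handoffs between agents, and tracing), here is a toy sketch in plain Python. It deliberately does not use the Agents SDK's own classes or signatures; `ToyAgent`, `no_secrets_guardrail`, and `run` are hypothetical names, and keyword matching stands in for a model deciding when to hand off.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyAgent:
    name: str
    handle: Callable[[str], str]  # produces this agent's reply
    handoffs: Dict[str, "ToyAgent"] = field(default_factory=dict)  # keyword -> agent


def no_secrets_guardrail(text: str) -> bool:
    """Toy input guardrail: reject anything that mentions a password."""
    return "password" not in text.lower()


def run(agent: ToyAgent, user_input: str, trace: List[str]) -> str:
    """Run one agent, handing off when a keyword matches; record a trace."""
    if not no_secrets_guardrail(user_input):
        trace.append("guardrail:blocked")
        return "Request blocked by guardrail."
    for keyword, target in agent.handoffs.items():
        if keyword in user_input.lower():
            trace.append(f"handoff:{agent.name}->{target.name}")
            return run(target, user_input, trace)
    trace.append(f"answer:{agent.name}")
    return agent.handle(user_input)


billing = ToyAgent("billing", lambda q: "Billing agent: I can help with invoices.")
triage = ToyAgent("triage", lambda q: "Triage agent: how can I help?",
                  {"invoice": billing})

if __name__ == "__main__":
    trace: List[str] = []
    print(run(triage, "Where is my invoice?", trace))
    print(trace)
```

The trace list plays the role that tracing and observability tooling plays in a real system: a record of which agent handled what, and where a guardrail intervened.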

OpenAI also shared observability tools to trace and inspect agent workflow execution. These tools store the chat state (for 30 days) and provide a dashboard to see what happened, all to make AI agent debugging simpler.

OpenAI’s vision is a world where developers use OpenAI models and tools under the hood to build a diversity of AI agents. OpenAI monetizes its AI models and supporting tools via its APIs, reaping much of the economic value of such AI applications.

Anthropic MCP Gains Traction

Anthropic introduced Model Context Protocol (MCP) in late 2024, offering an interoperability protocol and a standardized connector between tools and LLMs to support complex AI applications. We discussed how Model Context Protocol changes AI integration when it first came out.

MCP is an open protocol that is model-agnostic and uses a straightforward client-server architecture to standardize how context and information are exchanged. Developers can run MCP servers that expose data from content repositories, databases, and applications. AI agent developers build MCP clients that connect to those servers. This allows agents to be built without having to develop custom connectors to leverage knowledge or tools beyond their training data.
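The client-server exchange can be sketched with a toy, in-process example. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification as we understand it; everything else here (`ToyMCPServer`, `ToyMCPClient`, the `search_docs` tool) is hypothetical, and the real protocol's initialization handshake and transports are omitted.

```python
import json


class ToyMCPServer:
    """In-process stand-in for an MCP server exposing one tool."""

    def __init__(self):
        self._tools = {
            "search_docs": {
                "description": "Search a (hypothetical) document repository.",
                "fn": lambda args: f"results for {args['query']}",
            }
        }

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        if req["method"] == "tools/list":
            result = {"tools": [{"name": n, "description": t["description"]}
                                for n, t in self._tools.items()]}
        elif req["method"] == "tools/call":
            tool = self._tools[req["params"]["name"]]
            text = tool["fn"](req["params"]["arguments"])
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # -32601 is the standard JSON-RPC "method not found" code.
            return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                               "error": {"code": -32601,
                                         "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


class ToyMCPClient:
    """Sends JSON-RPC requests to the server and unwraps the result."""

    def __init__(self, server: ToyMCPServer):
        self._server = server
        self._next_id = 0

    def request(self, method: str, params: dict = None) -> dict:
        self._next_id += 1
        msg = {"jsonrpc": "2.0", "id": self._next_id,
               "method": method, "params": params or {}}
        reply = json.loads(self._server.handle(json.dumps(msg)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


if __name__ == "__main__":
    client = ToyMCPClient(ToyMCPServer())
    print(client.request("tools/list"))
    print(client.request("tools/call",
                         {"name": "search_docs", "arguments": {"query": "agents"}}))
```

Because the client only depends on the message shapes, the same client code could talk to any server that speaks the protocol, which is the "no custom connectors" benefit described above.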

Many MCP servers have been stood up to integrate various tools, and hundreds of services and knowledge bases are now connected; a GitHub server is one example. This makes MCP especially valuable in applications where rich context or external knowledge is needed for decision-making. MCP can standardize automation of many tasks; for example, to automate authentication, your credentials can live in a private MCP server.

Model Context Protocol appears to be taking off. As the MCP ecosystem has grown, the momentum has led to increased buzz around MCP on AI YouTube channels, with explainer videos on Building anything with MCP Agents and how to supercharge your Cursor with MCP.

Block’s Goose and MCP

Recently, Block introduced Goose, an open‐source, modular AI agent framework designed to enable developers to build autonomous AI agents that can execute complex real-world tasks.

The focus of Goose is on automating engineering tasks, supporting software developers and engineering teams to build autonomous AI agents for tasks such as code migration, unit test generation, and debugging. The creators of Goose describe it as: “Your on-machine AI agent, automating engineering tasks seamlessly.”

Goose connects tools to LLMs using Anthropic’s open source Model Context Protocol (MCP) to facilitate connections between the agent and external systems. By leveraging MCP, Goose achieves interoperability across different user interfaces, language models, and systems, making it adaptable to various environments and use cases.

Goose is a useful AI agent framework. Because it is open-source, extensible, and built on standard protocols like MCP, there is no vendor lock-in and little downside to trying it out. Goose is validating MCP, demonstrating how standardized protocols enhance the AI agent ecosystem.

LangChain and AGNTCY

LangChain became one of the most popular AI application frameworks by offering a rich, open-source set of integrations for LLM-based workflows. LangChain has evolved into a modular, extensible architecture that supports building LLM-powered chatbots, assistants, and agentic AI applications.

LangChain proposed an Agent Protocol for interoperable AI Agents late last year. With it they offered their LangGraph Platform to deploy agents from CrewAI, AutoGen, and other frameworks.

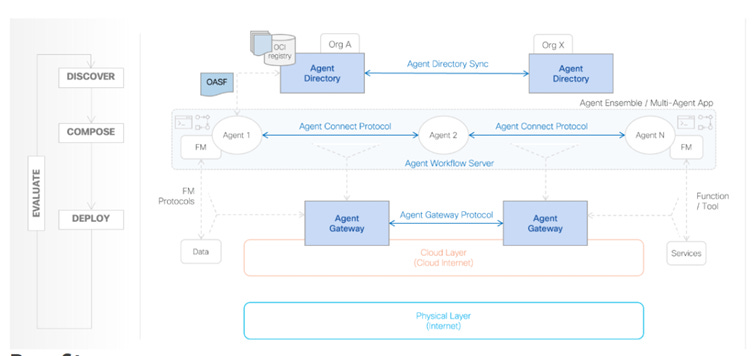

More recently, they joined an effort led by Cisco called AGNTCY. AGNTCY is trying to build “The Internet of Agents: an open, interoperable internet for agent-to-agent collaboration.” The AGNTCY effort aims to transform a fragmented landscape of how AI agents interact into a unified, open ecosystem.

To support this vision, they are proposing a set of common standards and protocols to discover, connect, compose, and deploy AI agents. LangChain’s existing Agent Protocol and integration tools will serve as a foundation layer for this interoperable Internet of Agents.

Conclusion

AI agents can perform tasks ranging from simple automations to complex interactions across domains such as customer service, financial analysis, and content creation. Their defining characteristic is persistence, maintaining awareness of context while pursuing objectives through changing environments.

AI agent capabilities are advancing rapidly thanks to innovations in supporting tools, frameworks, and standards.

With Computer Use, Web Search, and File Search, OpenAI brings more sophisticated awareness (vision for Computer Use) and more reliable grounding for web searches and document use. These tasks could be done before, but combining them with the improved problem-solving of AI reasoning models produces higher-quality, more reliable results. It yields better AI agents.

Model Context Protocol (MCP) is a critical component in the AI ecosystem because standardization of connections drastically simplifies glue code for AI agents.

It’s too early to say which interoperability standards will succeed, given the constant and rapid innovations in AI agents. However, interoperability is becoming critical, and standardization is a sign that the AI agent ecosystem is maturing.

One final thought: Open source is the way to go. Standards efforts, and even frameworks and tools, are more successful when they are open source. Anthropic’s MCP would go nowhere as a closed vendor offering. The success of LangChain, Goose, and the other frameworks mentioned relies on being open source. Even OpenAI’s Agents SDK is open source.

Much progress will be made in AI agents this year, and most of it will be out in the open.