ChatGPT Gets Vision and a Voice

Multi-modal AI has arrived: OpenAI's GPT-4V can see, hear and speak.

“ChatGPT Can See, Hear and Speak”

Just a few days after announcing DALL-E 3, a greatly improved text-to-image generative model, OpenAI dropped another important shoe. Declaring “ChatGPT Can See, Hear and Speak,” OpenAI announced GPT-4V, the multi-modal version of GPT-4, which can read and understand images, answer questions about them, and do it all via audio input and output.

This was both stunning and long-expected. We have known since March that GPT-4 was developed as a multi-modal model and trained on image inputs, but OpenAI never opened up that feature to the general public. Now it has.

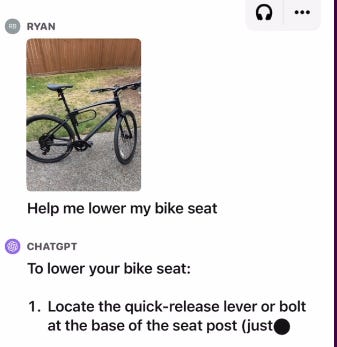

You can feed it images and ask questions about them. OpenAI has described a range of useful consumer applications for this, from taking a picture of a grill to ask “Why is my grill not working?” to snapping a picture of the contents of a fridge to get recipe ideas. In one example use case, a user shared an image of a bike seat extender and asked for advice on it.

OpenAI has written a technical paper, the GPT-4V System Card, to describe the refinement process and safety features of GPT-4V. In March of this year, OpenAI opened up GPT-4’s vision capabilities to help blind and visually impaired people. Working with an organization called Be My Eyes, it created Be My AI and let visually impaired users try out the AI and act as beta testers:

Be My Eyes piloted Be My AI from March to early August 2023 with a group of nearly 200 blind and low vision beta testers to hone the safety and user experience of the product. By September, the beta test group had grown to 16,000 blind and low vision users requesting a daily average of 25,000 descriptions. This testing determined that Be My AI can provide its 500,000 blind and low-vision users with unprecedented tools addressing informational, cultural, and employment needs.

Despite hallucinations and errors (for example, noting a menu item that wasn’t there), the AI proved very beneficial to these users, and OpenAI judged it ready for release.

That’s the vision part, but what about “hear and speak”? OpenAI has already developed the Whisper speech recognition tool, and you can speak your input to ChatGPT in the ChatGPT Android and iPhone apps. Now ChatGPT can output speech as well, using human-sounding synthetic AI voices at an ElevenLabs level of quality.

Putting this together, OpenAI has made its AI model more general, more useful, and, with audio input and output, more accessible.

Creative people will surely extend GPT-4V to many uses. From household advice to healthcare and wellness, equipment servicing, and e-commerce, the possibilities for image-to-answer are enormous. Snap a picture of a broken piece of equipment and ask “what’s wrong with it? Find and order a replacement part.” Or ask “where can I buy that?” of a dress or jacket you see someone wearing.

For “AI Safety” reasons, OpenAI warns us that GPT-4V is not to be trusted for medical questions:

Given the model’s imperfect performance in this domain and the risks associated with inaccuracies, we do not consider the current version of GPT-4V to be fit for performing any medical function or substituting professional medical advice, diagnosis, or treatment, or judgment.

Yet it’s obvious that analyzing images could be extremely useful in offering medical advice. For example, take a picture of a rash and ask “What is this? What’s a good treatment?”

Amazon’s Response

With its audio interface alone, ChatGPT now presents much smarter AI competition to Alexa and Siri. How are Amazon and Apple responding?

Apple has been working on improving Siri, and Amazon recently announced AI improvements to Alexa:

A new feature called Let’s Chat mimics the ChatGPT experience by allowing you to have a fluid conversation with Alexa, asking questions about everything from the voice assistant’s football team allegiance to recipes.

The demo wasn’t perfect and reviews of it were mixed, but I wouldn’t count Amazon out.

To catch up with the progress made by others, it would make sense for Amazon to ‘buy in’ to a leading AI model maker and gain a foothold. That’s what it just did: Amazon committed up to $4 billion to Anthropic in exchange for Anthropic’s commitment to use AWS and offer Anthropic’s Claude to AWS customers. This is similar to the commitment Microsoft made to OpenAI several years ago.

Anthropic also made a “long-term commitment” to offer its AI models to AWS customers, Amazon said, and promised to give AWS users early access to features such as the ability to adapt Anthropic models for specific use cases.

So a future version of Alexa might indeed be a Claude-powered AI chatbot.

What’s Easy and What’s Hard

There was a prediction not long ago (five years ago) that “Interpreting what is going on in a photograph” was ‘nowhere near being solved.’ We can put that on the “solved by AI” pile now.

Looking back on past predictions of the future, we see that some things we thought were easy turned out harder than expected, and some things we thought were hard turned out easier. One perspective on AI from 2018 held that we were nowhere near solving certain problems.

Yet most of the ‘nowhere near solved’ problems have been solved by the breakthroughs in scaling LLMs over the past few years:

“Human-level automated translation” has been solved by ever-better LLMs that can now translate across a number of languages, and do it multi-modally, i.e., with speech audio or text input or output.

“Understanding a story and answering questions about it” is now handled well by LLMs such as GPT-4 and Llama 2 70B. AI can handle more than short texts; Claude 2 can take an entire book into its context window and answer detailed questions about it.

“Interpreting what is going on in a photograph” is now solved by Meta’s “Segment Anything Model” and OpenAI’s GPT-4V. These are able to dissect and interpret what is going on in a scene in real time. They can also take a crack at “interpreting a work of art.”

“Writing interesting stories.” While ‘interesting’ is in the eye of the beholder, Amazon is now flooded with AI-generated content from ChatGPT and GPT-4.

We are basically left with “driverless cars” and “human-level general intelligence” as not yet solved, and for both of those, we can say we are making ‘real progress’ and will get to both in just a few years.

What’s Solved

The rise of LLMs has solved many language-related tasks or gotten us to the point where AI achieving human-level performance on language tasks is within reach. Scale may be enough to get us there.

The rise of multi-modal AI models with vision capabilities is now tackling another realm of human performance. Image recognition was solved some years ago, but the precision, speed and capability of interpreting images and videos continue to improve. We are now at the level of being able to describe scenes in detail via AI, almost at or even beyond human capabilities.

A third avenue of progress has been in audio, both speech-to-text recognition of human speech and text-to-speech voice synthesis. We are witnessing great strides in on-command AI generation of speech, music, sounds and other forms of audio.

The rise of generative AI models was unexpected for many of us because generation was deemed quite hard. Yet now that AI developers know the techniques of GANs (generative adversarial networks) and diffusion models (which perform directed de-noising), they have been able to develop generative AI across multiple modalities: images, speech, music, texts and documents. Creative AI applications now mix those modalities for even more complex creations.

This adds up to a range of capabilities and a level of utility beyond what a pure LLM can offer. Multi-modal AI models will be more powerful, flexible and useful than their text-only LLM kin.

If all this is in the process of getting solved, what’s still hard for AI?

Multi-stage reasoning, the kind of complex reasoning tasks required to build reliable autonomous agents, is still elusive. This week reminded us of those limitations with a paper showing gaps in the reasoning powers of LLMs; they can’t grasp a reversed logical connection. That’s a topic for another article.

‘The difficult we do immediately, the impossible takes a little longer.’ - US Army Corps of Engineers slogan during WWII

Wow. Leaps and bounds.