Context Engineering

Context Engineering is the new Prompt Engineering. It’s all about setting up your AI model or AI agent for success.

Context Engineering, the Latest Buzzword

I really like the term “context engineering” over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM. – Tobi Lutke, CEO of Shopify

A new AI term has been gaining traction: context engineering.

Context engineering means setting your AI agent up for success by feeding it the right prompts, data, tools, and environment so that it understands its task and executes it well. The term captures the essence of an especially important task in using AI: providing the full informational context the AI model or AI agent needs to do its job.

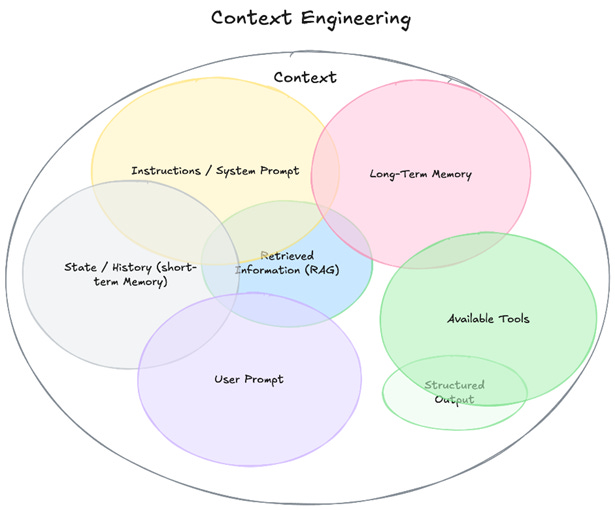

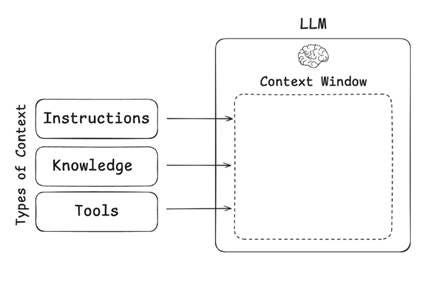

Context engineering joins a litany of prior AI buzzwords, like RAG, fine-tuning, and prompt engineering, that also relate to making AI work better. Context engineering also encompasses prompt engineering and RAG, covering everything you need to feed the AI model: prompt instructions (both system and user instructions), retrieved data and information, long-term and short-term memory, and available tools. All of it is context.
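To make "all of it is context" concrete, here is a minimal sketch, assuming a plain-text prompt, of how those pieces might be assembled into a single context for one model call. `ContextParts`, `assemble_context`, and the section titles are hypothetical names for illustration, not any framework’s API.

```python
from dataclasses import dataclass, field


@dataclass
class ContextParts:
    """Hypothetical container for the kinds of context listed above."""
    system_instructions: str
    user_instructions: str
    retrieved: list[str] = field(default_factory=list)
    long_term_memory: list[str] = field(default_factory=list)
    short_term_memory: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)


def assemble_context(parts: ContextParts) -> str:
    """Join every non-empty piece of context into one labeled prompt string."""
    # One plausible ordering: stable material first, the user's request last.
    sections = [
        ("System instructions", [parts.system_instructions]),
        ("Available tools", parts.tools),
        ("Long-term memory", parts.long_term_memory),
        ("Retrieved information", parts.retrieved),
        ("Conversation so far", parts.short_term_memory),
        ("User request", [parts.user_instructions]),
    ]
    blocks = []
    for title, items in sections:
        items = [item for item in items if item]  # skip empty entries
        if items:  # omit sections with nothing to say
            blocks.append(f"## {title}\n" + "\n".join(f"- {item}" for item in items))
    return "\n\n".join(blocks)
```

Empty sections are dropped rather than sent as blank headers, in keeping with the goal of giving the model only the context it needs.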

The New Skill of Context Engineering

Of course, context engineering is just a label for a set of activities we are already doing, so what is special about the term or the activity? As AI models and AI systems have improved and evolved, so have the best practices for interacting with them. Context engineering answers the question:

What’s the best way to run an AI agent?

When AI models were embedded in AI chatbots, the interface to them was mostly a (text-only) prompt. The term “prompt engineering” came to denote best practices for crafting prompts that elicit the best responses from AI models.

AI models are now trained to interpret prompts better as part of their reasoning, so they are less sensitive to the exact wording of a prompt. At the same time, their more complex capabilities, and their ability to absorb more context through larger context windows, mean that getting the most out of an AI model or AI agent takes a lot more than feeding it a good prompt.

Context engineering for AI agents is what prompt engineering was for AI chatbots.

You need to set up the entire context – the AI model’s memories, prompt instructions, supporting knowledge and information, and relevant tools – to help the AI model complete the task. Hence, Phil Schmid says: “The New Skill in AI is Not Prompting, It's Context Engineering.”

Rules for Context Engineering

[Context engineering is the] “…delicate art and science of filling the context window with just the right information for the next step.” – LangChain “Context Engineering”

While some of what is now called context engineering is what we called prompt engineering before, and much of it is common sense, this term helps organize the rules and best practices to keep in mind when setting up and running the latest AI models and AI agents.

What are those best practices in context engineering for today’s AI agents?

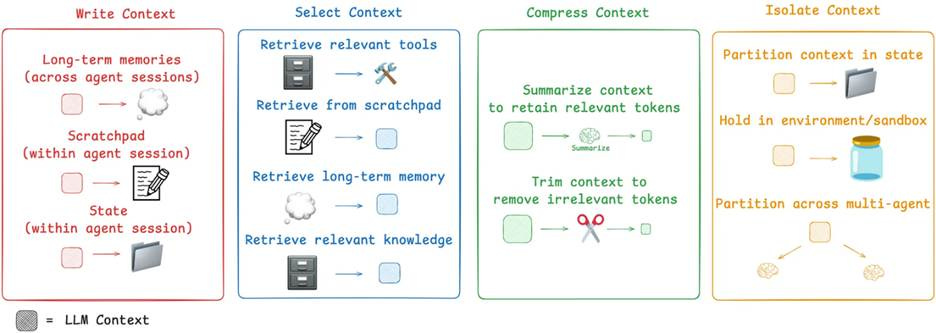

LangChain has written a blog post on context engineering for AI agents. It breaks the tasks needed for managing context into four categories: write context, select context, compress context, and isolate context.

Managing context is about making sure the AI model doesn’t fall into failure modes caused by problematic context. LangChain references a Drew Breunig post on how long contexts can fail:

Overloading your context can cause your agents and applications to fail in surprising ways. Contexts can become poisoned, distracting, confusing, or conflicting. This is especially problematic for agents, which rely on context to gather information, synthesize findings, and coordinate actions.

You don’t want the AI model to be overwhelmed by excessive context, be distracted by irrelevant or superfluous information, exhaust its context window, or invoke the wrong tools because it has too many to choose from. The trick is to give the AI agent the context it needs, no more, no less.

Managing Context: Writing and Selecting Context

The essential task of managing context is to give the AI agent the information it needs to do its job. For an informational question or a summarization task, you need to give it the source materials or documents it needs to answer the query or produce the summary. To that end, selecting context from information sources is vital.

In selecting context, LangChain mentions the scratchpad, long-term memory, and relevant knowledge (such as via a web search) as sources of information to retrieve from. In these cases, an operation such as RAG can be performed to insert the memory or knowledge into context.
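As a rough illustration of selecting context, the sketch below ranks snippets from several sources (scratchpad, long-term memory, web results) by word overlap with the query and keeps the top `k`. Word overlap is a deliberately crude stand-in for the embedding similarity a real RAG pipeline would typically use; `select_context` and the source names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_context(query: str, sources: dict[str, list[str]], k: int = 2) -> list[str]:
    """Return up to k snippets, from any source, sharing the most words with the query."""
    query_words = tokenize(query)
    scored = []
    for source_name, snippets in sources.items():
        for snippet in snippets:
            overlap = len(query_words & tokenize(snippet))
            if overlap > 0:  # drop snippets with nothing in common
                scored.append((overlap, f"[{source_name}] {snippet}"))
    # Python's sort is stable, so ties keep their original source order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]
```

Tagging each snippet with its source lets the model (and a human debugging the agent) see where a piece of context came from.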

The other context selection operation is retrieving the tools relevant to the task, so that they are available for the AI agent to use. Selecting only the tools plausibly needed for a task, instead of all possible tools, can improve the AI agent’s tool selection accuracy.
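Tool selection can be sketched the same way: match the task against each tool’s description and expose only the best matches. The tool names and descriptions in `TOOLS`, the stopword list, and `select_tools` are all hypothetical; a production system might instead embed tool descriptions and retrieve them like documents.

```python
import re

STOPWORDS = {"a", "an", "the", "of", "to", "for", "and", "in", "on", "with"}

# Hypothetical tool registry: name -> natural-language description.
TOOLS = {
    "web_search": "Search the web for current news and facts",
    "calculator": "Evaluate arithmetic expressions and numbers",
    "send_email": "Send an email message to a recipient",
    "calendar": "Create or look up calendar events",
}


def keywords(text: str) -> set[str]:
    """Lowercase content words, ignoring a few common stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def select_tools(task: str, tools: dict[str, str], k: int = 2) -> list[str]:
    """Return names of up to k tools whose descriptions share words with the task."""
    task_words = keywords(task)
    ranked = sorted(tools, key=lambda name: len(task_words & keywords(tools[name])), reverse=True)
    # Keep only tools with at least one matching word; the rest stay out of context.
    return [name for name in ranked[:k] if task_words & keywords(tools[name])]
```

For example, `select_tools("Search the latest news about interest rates", TOOLS)` would expose only `web_search` to the agent, rather than all four tools.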

For any long-term memory or scratchpad to work, the information must be recorded in the first place, so writing context is an important operation. The ‘scratchpad’ helps an AI agent maintain memory within a multi-turn session. Writing to a scratchpad could be done via a tool call or via some persistent object that stores relevant information.
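Here is a minimal sketch of the tool-call route, assuming the model emits tool calls as JSON objects with `name` and `arguments` fields. That JSON shape, the tool names `write_note`/`read_notes`, and the `Scratchpad` class are hypothetical; real function-calling APIs differ in their exact formats.

```python
import json


class Scratchpad:
    """Hypothetical session-scoped notes that the agent writes via tool calls."""

    def __init__(self) -> None:
        self.notes: list[str] = []

    def write(self, note: str) -> str:
        self.notes.append(note)
        return f"Saved note {len(self.notes)}"  # confirmation returned to the model

    def read(self) -> str:
        return "\n".join(f"{i}. {note}" for i, note in enumerate(self.notes, start=1))


def handle_tool_call(pad: Scratchpad, call_json: str) -> str:
    """Dispatch a JSON tool call such as {"name": "write_note", "arguments": {"note": "..."}}."""
    call = json.loads(call_json)
    if call["name"] == "write_note":
        return pad.write(call["arguments"]["note"])
    if call["name"] == "read_notes":
        return pad.read()
    raise ValueError(f"unknown tool: {call['name']}")
```

Because the notes live outside the context window, the agent can record intermediate findings and pull them back in later turns only when needed.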

Writing out long-term memory helps to maintain memory across different sessions. That way, what the AI agent learned or did in a prior session can be used to inform more correct behavior in the current or future one. There are distinct kinds of memory, which can guide AI agent actions in diverse ways:

Semantic memory or factual information; facts about a user support customization and personalization.

Episodic memory or experiences; these record past AI agent actions and events to guide repetition of actions or tasks.

Procedural memory or instructions; these record AI agent prompt instructions and can direct AI agent behavior.

The latter category (procedural memory) is typically fixed by the system instructions, but one can record, maintain, customize or partition instructions to, for example, execute specific desired sub-tasks in a multi-turn or multi-agent AI system.
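The three kinds of memory can be sketched as a small file-backed store that outlives a single session. This assumes a local JSON file as the persistence layer; `LongTermMemory` and its methods are hypothetical names, and real systems often use a database or vector store instead.

```python
import json
from pathlib import Path

MEMORY_KINDS = ("semantic", "episodic", "procedural")


class LongTermMemory:
    """Hypothetical file-backed memory that survives across agent sessions."""

    def __init__(self, path: Path) -> None:
        self.path = path
        if path.exists():  # a later session reloads what earlier sessions wrote
            self.data = json.loads(path.read_text())
        else:
            self.data = {kind: [] for kind in MEMORY_KINDS}

    def remember(self, kind: str, item: str) -> None:
        """Write a memory of the given kind and persist it immediately."""
        if kind not in MEMORY_KINDS:
            raise ValueError(f"unknown memory kind: {kind}")
        self.data[kind].append(item)
        self.path.write_text(json.dumps(self.data, indent=2))

    def recall(self, kind: str) -> list[str]:
        """Return a copy of all memories of one kind."""
        return list(self.data[kind])
```

Keeping the kinds separate lets an agent, for example, load procedural memories into its instructions while pulling semantic facts about the user only when a task calls for personalization.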

We can also write out the AI agent’s state from the context, to track and manage that state in a multi-turn setting.
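One way to picture this, as a sketch under our own assumptions: keep the agent’s state in a structured object outside the context window, update it each turn, and render only a compact view back into the context. `AgentState` and its fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Hypothetical agent state carried from turn to turn outside the context window."""
    goal: str
    completed_steps: list[str] = field(default_factory=list)
    raw_tool_outputs: list[str] = field(default_factory=list)  # stored, but not shown to the model

    def record_step(self, step: str, tool_output: str) -> None:
        """Write the outcome of one turn into state."""
        self.completed_steps.append(step)
        self.raw_tool_outputs.append(tool_output)

    def to_context(self) -> str:
        """Render only the fields the model needs for its next step."""
        done = "; ".join(self.completed_steps) or "none"
        return f"Goal: {self.goal}\nCompleted steps: {done}"
```

The bulky raw tool outputs remain available to the application code without consuming context window space on every turn.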

Managing Context: Compressing and Isolating Context

Managing context is not just putting the right information in front of the AI model. Just as important is avoiding putting the wrong information in the context window, which can degrade reasoning, cause hallucinations or confusion, or overwhelm the context window.

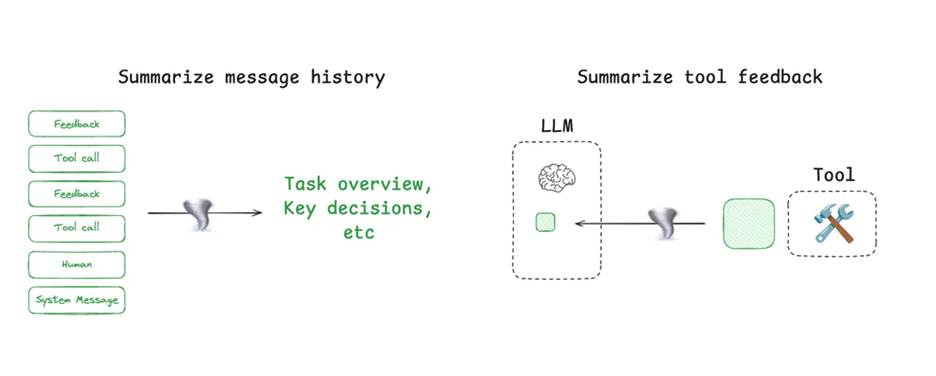

To that end, the contents of the context window need to be compressed. There are two methods: summarization, which condenses the context so that essential information stays while its size remains within bounds; and trimming, which removes irrelevant information from the context.
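A minimal sketch combining both methods on a chat history: keep the system prompt and as many recent messages as fit a token budget, and replace the older ones with a summary. Several assumptions are built in: tokens are approximated by whitespace-separated words, recency is used as the relevance heuristic for trimming, and `summarize` is a hypothetical placeholder where a real system would ask an LLM for a summary.

```python
def count_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (not a real tokenizer)."""
    return len(text.split())


def summarize(messages: list[str]) -> str:
    """Hypothetical placeholder; a real system would call an LLM here."""
    return f"[Summary of {len(messages)} earlier messages]"


def compress(system: str, messages: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the newest messages that fit; summarize the rest."""
    used = count_tokens(system)
    kept: list[str] = []
    for msg in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    dropped = messages[: len(messages) - len(kept)]
    # Note: in this sketch the summary line itself is not counted against the budget.
    prefix = [summarize(dropped)] if dropped else []
    return [system] + prefix + kept
```

Stopping at the first message that does not fit keeps the retained history contiguous, so the model never sees a recent message with a gap before it.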

Isolating context, in turn, is about splitting up the context to perform a task. If you split a complex task among sub-agents, it makes sense to split the context as well (notwithstanding Cognition’s arguments about the need to preserve a unitary context). Isolating context means parceling out the parts of the full context that each sub-agent needs to complete its sub-tasks.
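A minimal sketch of this parceling, assuming each sub-task declares up front which named slices of the shared context it needs. `SubTask`, `isolate_context`, and the context keys are hypothetical; how sub-agents are actually invoked is left out.

```python
from dataclasses import dataclass


@dataclass
class SubTask:
    """Hypothetical sub-task: what to do and which context keys it needs."""
    name: str
    instructions: str
    needs: tuple[str, ...]


def isolate_context(full_context: dict[str, str], task: SubTask) -> dict[str, str]:
    """Give a sub-agent only the slices of the shared context it declared."""
    missing = [key for key in task.needs if key not in full_context]
    if missing:  # fail loudly rather than run a sub-agent on incomplete context
        raise KeyError(f"{task.name} needs unavailable context: {missing}")
    sub_context = {key: full_context[key] for key in task.needs}
    sub_context["instructions"] = task.instructions
    return sub_context
```

For example, a flight-booking sub-agent given `needs=("user_profile", "flight_options")` would never see hotel options, which keeps its context small and focused; the trade-off, per Cognition’s argument, is that it also cannot see decisions made elsewhere.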

Conclusion: AI Users as AI Agent Orchestrators

Control of an AI agent occurs via an interface, and for AI users, the prompt is still the interface. So, isn’t context engineering just an updated name for prompt engineering? Yes and no.

While the underlying intention is the same (obtaining the best AI outputs through better inputs), context engineering goes beyond writing better prompts. AI users can control not just the direct prompt instructions (the essence of prompt engineering) but also memory, external information, and tool use.

For example, to use an AI system for personalization, you need to leverage its memory features to store prompts, personalized information, and prior actions. This improves how the AI system responds to new requests and tasks. But to benefit from it, users need to feed memories to the system and control them. As OpenAI has put it: “You’re in control of ChatGPT’s memory.”

For AI users, the takeaway is that as AI system builders add memory and tool features, AI users can use them to better manage context and produce ever better results. This adds capability to the AI system but requires greater context management, aka context engineering. The skills, techniques, and best practices for context engineering will evolve as AI agents evolve.

It would be interesting to develop a perspective on the best UI for engineering context.