Customizing AI with Claude Projects and Mistral Agents

Claude Projects and Teams, Mistral Agents, OpenAI GPTs, Gemini Flash context caching, Poe.com create bot.

Introduction

ChatGPT became the ‘killer app’ for AI in late 2022 by putting a simple text-based chatbot interface on top of GPT-3.5. The chatbot interface proved sufficiently powerful because it is the ultimate in customization: Ask it any question in the text window, and the instruction-tuned LLM chatbot will answer in most cases (except when limited by AI safety guardrails), and most answers will be useful (except when it hallucinates or lacks knowledge).

As we noted in our article “AI UI/UX, User Experience & Screen AI,” the prompt is the interface for LLMs; the chatbot is the simplest and most direct interface with LLMs. We also noted the efforts to move beyond the basic chatbot interface to make AI more useful.

Leading AI model providers are moving beyond standard AI chatbots in several directions. They are supporting customization of AI for specific use cases, and they are adding features to AI models, systems and interfaces to make the AI model or AI application more flexible, capable and easy to use.

Why Customize AI?

A general AI model with a generic chatbot interface can do many things, but many of them not well. AI becomes a tedious knowledge-work partner when users need to manually repeat prompts for similar tasks. Without current knowledge, a stand-alone LLM cannot answer current-events queries. Without personal understanding, the AI can’t fit responses to the user’s needs.

These challenges motivate customizing AI models and applications to enhance their usability and effectiveness. The primary reasons for AI customization include:

User Adaptation: Implementing an AI system that learns and remembers user preferences, allowing for personalized interactions and improved efficiency over time.

Stylistic Adjustments: Modifying the language model's output to conform to specific writing styles or tones, tailoring communication to the target audience or brand voice.

Domain Specialization: Optimizing the AI for particular industries or fields (e.g., healthcare, finance, or specific business sectors) to improve accuracy and relevance of responses within that domain.

Knowledge Enhancement: Augmenting the AI's knowledge base with domain-specific information to provide more accurate and contextually appropriate responses.

Persona Creation: Developing distinct AI personalities or characters for specific use cases, such as virtual assistants or interactive storytelling applications.

Customizing AI can be achieved through various technical approaches, including fine-tuning, prompt engineering, in-context personalization, and retrieval-augmented generation (RAG), each offering different levels of AI model adaptation and performance improvements.

Customization Features in Leading LLMs

Fine-tuning has been the traditional way to customize an AI model. Supervised fine-tuning (SFT) adjusts the weights of a base AI model by training it on instruction-response examples. Parameter-efficient fine-tuning (PEFT) methods such as low-rank adaptation (LoRA) can reduce the overhead, but fine-tuning is still an involved, costly way to personalize AI for individual or business use cases.
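To give a sense of why LoRA reduces overhead, here is a rough arithmetic sketch. It assumes a single weight matrix of hypothetical size 4096 x 4096 and a hypothetical rank of 8; real models contain many such matrices, so the numbers are illustrative only.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix W (d_out x d_in) is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters when W is frozen and only the low-rank
    factors B (d_out x rank) and A (rank x d_in) are trained."""
    return rank * (d_in + d_out)


# Hypothetical layer size and rank, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 8

full = full_finetune_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"full: {full:,}  LoRA: {lora:,}  share trained: {lora / full:.2%}")
```

Under these assumptions, LoRA trains well under 1% of the parameters in that layer. The data preparation and training run are still required, which is why fine-tuning remains the heavier option.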

There is an easier way: Import personal information or needed domain knowledge into the context window, and use custom (system) prompts to shape how the AI model behaves.
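A minimal sketch of that idea follows. The request layout (a `system` string plus a `messages` list) and the `<document>` wrapper are assumptions made for illustration, not any particular provider's API; the point is only that customization here is prompt assembly, with no change to model weights.

```python
def build_request(system_prompt: str, documents: dict[str, str], question: str) -> dict:
    """Assemble a chat-style request: instructions go in the system prompt,
    reference documents are placed ahead of the user's question."""
    doc_sections = [
        f'<document name="{name}">\n{text}\n</document>'
        for name, text in documents.items()
    ]
    user_content = "\n\n".join(doc_sections + [question])
    return {
        "system": system_prompt,
        "messages": [{"role": "user", "content": user_content}],
    }


# Hypothetical example content, echoing the board-game idea.
request = build_request(
    system_prompt="You are a board game advisor. Answer only from the supplied rules.",
    documents={"house-rules.txt": "Players landing on Free Parking receive nothing."},
    question="How does Free Parking work?",
)
print(request["messages"][0]["content"])
```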

The first major customized AI model framework that took this approach was OpenAI’s GPTs, introduced in November 2023. GPT builders define the GPT’s purpose (‘board game advisor’), upload information files on topics the GPT should know (board-game rules), and seed a few queries (“How does ‘Free Parking’ work in Monopoly?”). Voila, a new custom GPT such as Board Game GPT (yes, it’s real).

Recently, new customization features were released by other leading AI model makers:

Anthropic’s Claude Projects, Artifacts, and Teams were released in June to support more productive use of AI.

Mistral Agents and a platform to customize Mistral AI models were released in early August.

Google offers Gemini fine-tuning and context caching.

Claude Projects

Anthropic’s Claude Projects feature is aimed at enhancing AI-assisted workflows and collaboration. Combined with Claude 3.5 Sonnet and Artifacts, it is a helpful enhancement to the typical chatbot workflow. Some of the key features in Projects:

Context-based Memory: The project knowledge for a given Project is loaded into Claude’s context window. Claude’s large context window of up to 200,000 tokens, or about 500 pages of text, leaves room for a substantial set of relevant documents or other data, but it’s not unlimited. This use of the context window is distinct from how OpenAI GPTs work, which retrieve knowledge via a RAG system.
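Because project knowledge has to fit in the context window, it can help to estimate a token budget before uploading. The sketch below uses the 200,000-token figure from the text; the four-characters-per-token ratio and the amount reserved for the conversation are rough assumptions, and real tokenizers will give different counts.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # figure quoted in the text
CHARS_PER_TOKEN = 4              # rough rule of thumb (assumption); tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(documents: list[str], reserved_for_chat: int = 20_000) -> tuple[bool, int]:
    """Return (fits, estimated_tokens_used), leaving room for the conversation itself.

    reserved_for_chat is a hypothetical allowance, not a documented limit.
    """
    used = sum(estimate_tokens(doc) for doc in documents)
    return used <= CONTEXT_WINDOW_TOKENS - reserved_for_chat, used


# Placeholder strings standing in for a style guide and a codebase dump.
style_guide = "x" * 120_000
codebase = "y" * 900_000
print(fits_in_context([style_guide]))
print(fits_in_context([style_guide, codebase]))
```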

Custom Instructions: Users can define project-specific instructions to tailor Claude's responses, including adjusting tone, language, and style for specific roles, industries, or project needs. This flexibility allows Claude to adapt to different writing styles and tones, enhancing its utility for diverse writing tasks.

By allowing users to ground Claude's outputs in internal knowledge bases, such as style guides, codebases, or technical documents, the Projects feature enables more contextually relevant and domain-specific outputs, reducing the "cold start" problem in AI assistance.

Artifacts provide a dedicated window for viewing, editing, and building on content generated by Claude, including code snippets, text documents, graphics, diagrams, and website designs. For developers, Artifacts offer an enhanced coding experience with a larger code window and live frontend previews.

Teams: The Claude Teams feature lets users share snapshots of their conversations in a shared project activity feed, fostering knowledge sharing and skill improvement across the organization. Teams users can share their best Claude conversations and work products with others on their Team.

All of these Claude features (Projects, Artifacts, and Teams) can work together and are powered by Claude 3.5 Sonnet. The combination of a leading AI model and these capabilities enables Claude to provide targeted assistance across various tasks.

Unlike GPTs, which can be published and shared, Projects as a feature is more focused on individual and team use on specific AI workflows. With Teams aiding collaboration, this should improve productivity, development speed and cross-organizational knowledge for teams using these features.

Mistral Agents - “Build, Tweak, Repeat”

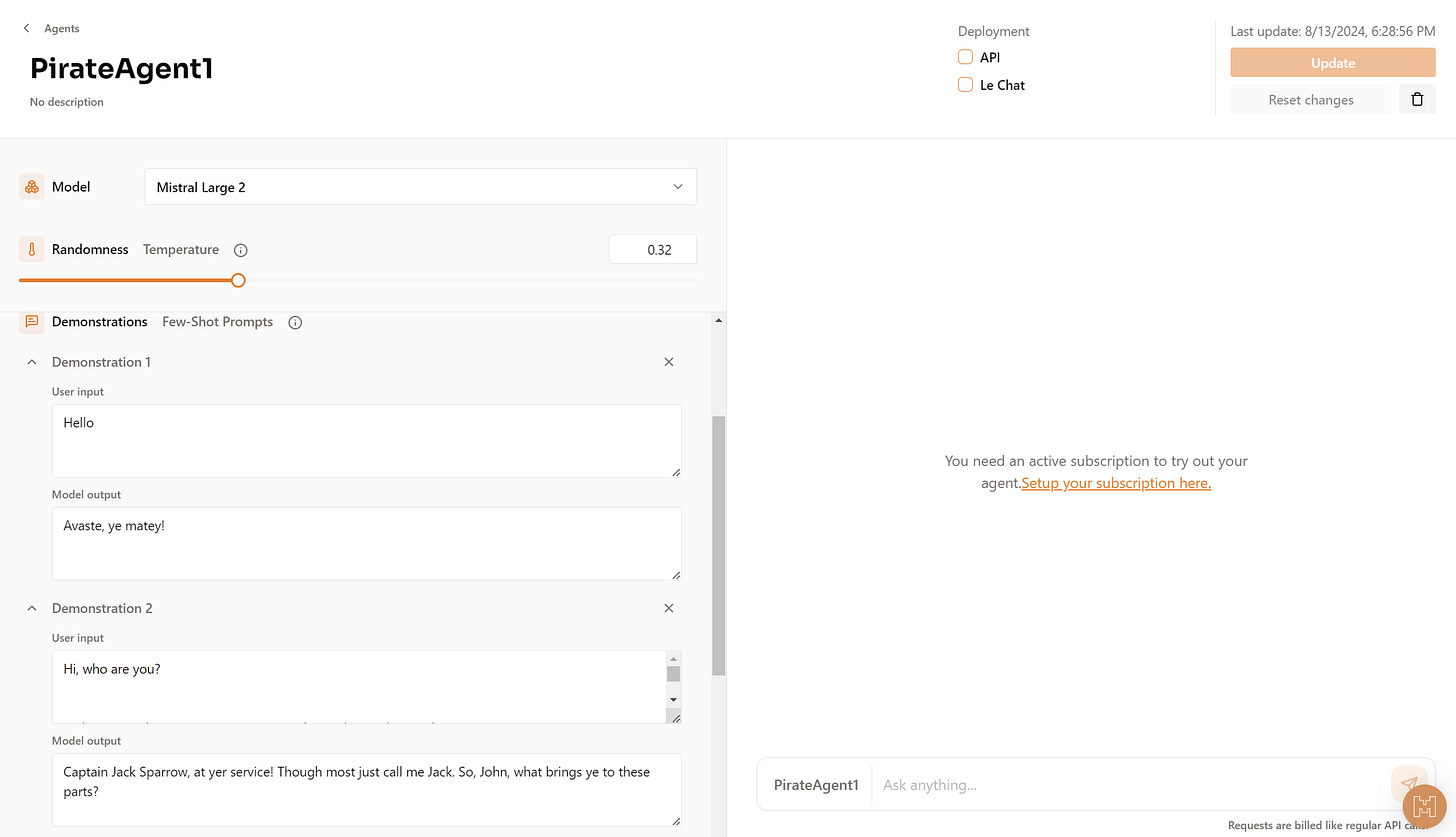

Mistral last week released their own AI customization features to tweak generative AI applications for specific user needs.

Their model customization features are for now fairly modest:

Models can be customized using a base prompt, few-shot prompting, or fine-tuning, and you can bring your own dataset.

The Mistral fine-tuning interface asks for a base model, a dataset of instruction-response pairs for fine-tuning, and validation files.
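As a sketch of what preparing such a dataset might look like, the snippet below turns instruction-response pairs into JSON Lines and holds some back for validation. The chat-style `messages` layout is a common convention and is assumed here; check Mistral's documentation for the exact schema it expects. The example pairs and the 20% validation split are made up for illustration.

```python
import json


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize (instruction, response) pairs, one JSON object per line."""
    lines = []
    for instruction, response in pairs:
        record = {
            "messages": [
                {"role": "user", "content": instruction},
                {"role": "assistant", "content": response},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


def split_train_validation(pairs: list, validation_fraction: float = 0.2) -> tuple[list, list]:
    """Hold back the last portion of the pairs (at least one) for validation."""
    n_val = max(1, int(len(pairs) * validation_fraction))
    return pairs[:-n_val], pairs[-n_val:]


# Made-up examples; a real dataset would be far larger.
pairs = [
    ("Summarize: The meeting moved to Friday.", "The meeting is now on Friday."),
    ("Translate to French: Good morning.", "Bonjour."),
    ("Give a synonym for 'fast'.", "Quick."),
    ("What is 2 + 2?", "4."),
    ("Name a primary color.", "Red."),
]
train, validation = split_train_validation(pairs)
print(to_jsonl(train), end="")
```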

Mistral also introduced an early version of Agents, AI applications that wrap AI models with additional context and instruction to define custom behavior. For now, the Mistral agents are defined via a custom system prompt and few-shot prompting.
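Conceptually, an agent of this kind is little more than a saved system prompt plus example exchanges that are prepended to each new request. The sketch below illustrates that idea with a hypothetical `AgentSpec` class and a generic chat-message layout; it is not Mistral's API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    """Hypothetical container for an agent: a system prompt and few-shot examples."""
    name: str
    system_prompt: str
    few_shot: list[tuple[str, str]] = field(default_factory=list)

    def messages_for(self, user_input: str) -> list[dict]:
        """Build the message list sent to the model for one new user input."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for example_in, example_out in self.few_shot:
            messages.append({"role": "user", "content": example_in})
            messages.append({"role": "assistant", "content": example_out})
        messages.append({"role": "user", "content": user_input})
        return messages


# Made-up agent definition for illustration.
agent = AgentSpec(
    name="release-notes-writer",
    system_prompt="Rewrite commit messages as one-line, user-facing release notes.",
    few_shot=[("fix: npe in login handler", "Fixed a crash that could occur during login.")],
)
for message in agent.messages_for("feat: add dark mode toggle"):
    print(message["role"], "->", message["content"])
```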

Mistral Agents are not (yet) fully agentic AI systems, making the name Agents a misnomer, at least for now. However, they have ambitions to add to their AI agent capabilities over time, saying “we’re working on connecting Agents to tools and data sources.” When they do, these should be a lot more powerful, able to conquer more complex workflows and tasks.

Context Caching with Gemini

There are ways to fine-tune Gemini in Google AI Studio:

Tuning in Google AI Studio uses a technique called Parameter Efficient Tuning (PET) to produce higher-quality customized models with lower latency compared to few-shot prompting and without the additional costs and complexity of traditional fine-tuning.

Even with PET, fine-tuning has costly overhead. If you are not an AI model developer or coder, can you get a customized AI model experience with lower overhead?

This is why I am excited about context caching, a feature Google announced in May at their I/O event, and for which they recently announced very competitive pricing.

Context caching works fairly simply: Users create a context cache of the prompts and/or documentation to be cached, then later explicitly reference the cache when sending a prompt. Gemini is multi-modal, so context caching supports document files (PDF, text) as well as image, audio, and video files.
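The create-then-reference pattern can be modeled with a toy in-memory cache. This is a conceptual stand-in only: the `ContextCache` class, its method names, and the expiry behavior are assumptions for illustration and do not reproduce the Gemini API, where the cached content lives on the provider's side.

```python
import hashlib
import time


class ContextCache:
    """Toy in-memory stand-in for a provider-side context cache."""

    def __init__(self) -> None:
        self._entries: dict[str, tuple[str, float]] = {}

    def create(self, content: str, ttl_seconds: float = 3600.0) -> str:
        """Store the large context once and return a short handle for later use."""
        handle = hashlib.sha256(content.encode("utf-8")).hexdigest()[:16]
        self._entries[handle] = (content, time.monotonic() + ttl_seconds)
        return handle

    def build_prompt(self, handle: str, question: str) -> str:
        """Combine the cached context with a short new question."""
        content, expires_at = self._entries[handle]  # KeyError if the handle is unknown
        if time.monotonic() > expires_at:
            del self._entries[handle]
            raise KeyError(f"cache entry {handle} has expired")
        return f"{content}\n\n{question}"


cache = ContextCache()
handle = cache.create("...full text of a long product manual...")
print(cache.build_prompt(handle, "How do I reset the device?"))
```

The caller sends only the handle and the short question on each later request, which is what keeps repeated queries over the same material cheap.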

As their Gemini documentation notes:

Context caching is particularly well suited to scenarios where a substantial initial context is referenced repeatedly by shorter requests.

This happens to cover many of the use-cases, such as querying some set of documents or operating over a very specific knowledge domain, that users build custom AI models for. So for many tasks, context caching is all you need.
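A quick token count shows why this pattern benefits from caching. The sketch below compares how many input tokens are freshly sent with and without a cache; the context size, question size, and request count are hypothetical, and it counts tokens only. Actual billing for cached tokens and cache storage depends on the provider's pricing, which is not modeled here.

```python
def tokens_without_cache(context_tokens: int, question_tokens: int, num_requests: int) -> int:
    """Every request resends the full context along with its question."""
    return num_requests * (context_tokens + question_tokens)


def tokens_with_cache(context_tokens: int, question_tokens: int, num_requests: int) -> int:
    """The context is sent once; each request then sends only its question."""
    return context_tokens + num_requests * question_tokens


# Hypothetical workload: a large document set queried by many short questions.
context_tokens, question_tokens, num_requests = 100_000, 100, 50

resent = tokens_without_cache(context_tokens, question_tokens, num_requests)
fresh = tokens_with_cache(context_tokens, question_tokens, num_requests)
print(f"without cache: {resent:,} tokens sent; with cache: {fresh:,} tokens sent")
```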

However, there is still the hurdle that you need to be a developer using Vertex AI to get Gemini context caching to work. It may be easier and lower-overhead than fine-tuning, but as of now, it’s not a no-code solution like OpenAI GPTs or Claude Projects.

Customization for Open-source AI Models

I have been asked a question: Are there open source alternatives to OpenAI GPTs and Claude Projects?

The open source world has really embraced fine-tuning as an AI customization method. Developers can fine-tune open-weight AI models using fine-tuning frameworks like Axolotl. Thousands of fine-tuned versions of top AI models can be found on Hugging Face.

There is also the option of using RAG systems and AI agents, and customizing those systems for particular work tasks. However, these options, like fine-tuning, require developer or hacker skills and effort.
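To show the kind of developer effort involved, here is a deliberately minimal RAG sketch: it ranks text chunks against a query and places the best matches in the prompt. Word-count cosine similarity stands in for the embedding models and vector stores a real system would use, and all function names and sample chunks are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, Counter(tokenize(c))), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, chunks: list[str], top_k: int = 2) -> str:
    """Place the retrieved chunks ahead of the question in the prompt."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query, chunks, top_k))
    return f"Use only the context below to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


# Made-up knowledge base for illustration.
knowledge_base = [
    "Beethoven wrote nine symphonies.",
    "Monopoly players collect money when passing Go.",
    "Bach composed the Brandenburg Concertos.",
]
print(build_prompt("How many symphonies did Beethoven write?", knowledge_base, top_k=1))
```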

Is there a no-code option to build custom AI bots like GPTs? Yes.

One no-code custom AI bot builder that allows you to build a custom AI on a variety of AI models is the Create bot interface at poe.com. The AI customization interface lets users define the system prompt and import documents into a knowledge base.

These features are basic, but enough to make for a good custom AI. Poe also has an app that runs on Android, iOS, macOS and Windows, so you can build custom AI bots, then run them from your own devices. I made a classical music AI bot based on Claude 3.5 Sonnet and was able to run it from the Poe app on my own device.

Conclusion

Customizing AI models and applications can make AI more adaptable, easier to use, and directly helpful for specific tasks.

Fine-tuning an AI model is one way to customize an AI model, but other techniques can customize an AI application with less overhead and without changing the underlying model: Customizing the system prompt; adding specific information either to the context window or via RAG; putting information in a context cache.

All major AI model providers have some custom AI capabilities, with many of the features overlapping. None has every needed feature. As AI interfaces, reasoning, and memory management improve, AI customization features will improve along with them.

As with everything AI, there’s more to come.

It seems to me that the big players are constantly playing catch-up. All these things have been available through API-driven apps before they come to their app. By the time they figure it out, I’ve already got my own solution!

I've been asked: Are there open source alternatives to OpenAI GPTs and Claude Projects?

The best answer I came up with was the Poe create bot feature, which allows you to use many AI models (GPT-4o, Gemini 1.5 Pro, Claude 3.5 Sonnet, etc.) as a base model for customization via knowledge addition and system prompt tuning. However, while it is open with respect to the AI models it can use, it's not open-source. So is there a purely open source project that does the same? I haven't found it. If you know of one, I'm all ears; I would like to try it.