Deeply Useful AI Models – Gemini 2.0 and o3-mini

Better-than-ever AI models arrive with o3-mini, Gemini 2.0 Pro, and Gemini 2.0 Flash Thinking.

More Models

Another week, another AI freakout. It was only last week that Silicon Valley VCs were fretting over DeepSeek reportedly training R1's underlying model for about $5 million, and over claims that China would eat America’s lunch in AI. This week, Sam Altman, speaking in Japan, declared that OpenAI’s new Deep Research can do “a single-digit percentage of all economically valuable tasks.”

The hype around o3-enabled Deep Research has amped up:

“Deep research can be seen as a new interface for the internet, in addition to being an incredible agent.”

“OpenAI's "Deep Research" produces research results of higher quality than a human PhD! In their field! Today!”

However, this article is not about the Deep Research tool, but about the great new AI models.

On Wednesday, Google released more Gemini 2.0 models, and I finally got time to actually use them along with the recently released o3-mini. I’ll share the details below, but in a nutshell, these AI models are powerful, fast, and deeply useful, with capabilities that go beyond all prior AI models.

Harnessing these AI reasoning models in agentic systems like Deep Research will amplify their power, but even on their own, these AI models can do more than ever.

Google Gemini 2.0 Update

Google’s update builds on its mid-December Gemini 2.0 announcement. It released Gemini 2.0 Pro as an experimental model, made Gemini 2.0 Flash generally available, and gave its AI reasoning model, Gemini 2.0 Flash Thinking Experimental, access to apps.

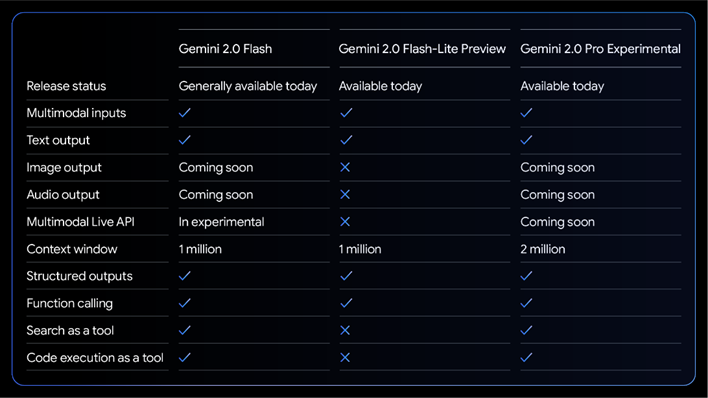

Gemini 2.0 Pro Experimental: Although Google released Gemini 2.0 Pro as an experimental version, it is available across Gemini platforms, including Google AI Studio, Vertex AI (Google Cloud’s AI platform), and the Gemini app for Gemini Advanced subscribers. Gemini 2.0 Pro is particularly strong at coding and at handling complex prompts, boasting improved reasoning and a massive context window of 2 million tokens.

Gemini 2.0 Flash (GA): Google updated Gemini 2.0 Flash and made the updated version generally available in Google AI Studio, Vertex AI, and the Gemini app.

Gemini 2.0 Flash-Lite (Public Preview): A new, cost-efficient model called Gemini 2.0 Flash-Lite was introduced in public preview. It offers Gemini 1.5 Flash-level performance at improved speed and lower cost. Flash-Lite is cheaper than OpenAI's GPT-4o mini.

Gemini 2.0 Flash Thinking Experimental with apps: Available to Gemini app users, this model is designed for logic and reasoning. What’s most interesting is that it has access to the web, so you can ask grounded, fact-based questions, even about today’s news.

Access: Paid subscribers to Gemini Advanced can access the 2.0 Pro Experimental model within the Gemini app on web and mobile. Free tier users can access the updated Gemini 2.0 Flash, but you can also use all the latest Gemini 2.0 models on Google’s AI Studio chat interface. For developers, there are more options, including experimental Gemini 2.0 Pro and the generally available Gemini 2.0 Flash through Google AI Studio and Vertex AI.

Features of Gemini 2.0 Models: Gemini 2.0 Flash and Pro models accept multimodal input, with audio and image output “coming soon,” and offer long context windows (1 million tokens for Flash and 2 million for Pro). They also have features that ground them factually (search and code execution) and that support their use in AI agent flows (function calling and structured outputs).
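To put 1 million and 2 million token context windows in perspective, here is a rough Python sketch for checking whether a document would fit. It assumes the common rule of thumb of about 4 characters per token for English text (real counts depend on each model's tokenizer); the 8,192-token output reserve is an arbitrary illustrative budget, and the model names are plain labels, not API model IDs.

```python
# ASSUMPTION: about 4 characters per token, a common rule of thumb for English
# text. Real counts depend on each model's tokenizer.
CHARS_PER_TOKEN = 4

# Context window sizes as stated in the article (labels, not API model IDs).
CONTEXT_WINDOWS = {
    "Gemini 2.0 Flash": 1_000_000,
    "Gemini 2.0 Pro": 2_000_000,
}


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str, output_reserve: int = 8_192) -> bool:
    """True if the estimated prompt plus an output budget fits the window.

    output_reserve is an arbitrary illustrative budget for the model's reply.
    """
    return estimate_tokens(text) + output_reserve <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # A hypothetical 5-million-character corpus, about 1.25M tokens here.
    corpus = "x" * 5_000_000
    for model in CONTEXT_WINDOWS:
        print(model, estimate_tokens(corpus), fits_in_context(corpus, model))
```

By this estimate, a 5-million-character corpus (about 1.25 million tokens) overflows Flash's 1 million token window but fits within Pro's 2 million. For real workloads, count tokens with the model's own tokenizer rather than a character ratio.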

Benchmark performance: Gemini 2.0 models improve on their predecessors across a range of benchmarks. For example, Gemini 2.0 Flash-Lite is on par with Gemini 1.5 Flash, and Gemini 2.0 Flash is better than Gemini 1.5 Pro.

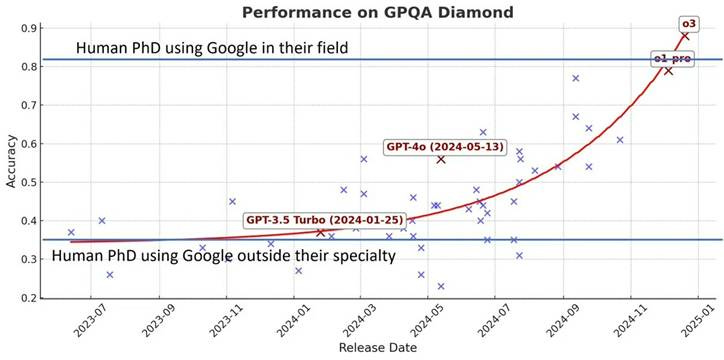

While Gemini 2.0 Pro beats GPT-4o and Claude 3.5 Sonnet on benchmarks, the world-leading AI reasoning models o3-mini and R1 do better still, suggesting that AI reasoning models will be the leading AI models going forward. However, Google did not report benchmarks for Gemini 2.0 Flash Thinking, its own reasoning model.

Gemini 2.0 Pro and Flash Thinking Experimental with apps – First Use

I got off to an unpromising start with Gemini 2.0 Pro in the Gemini app, where it wouldn’t analyze a YouTube video, saying: “I am sorry, but I do not have the capability to access external websites, including YouTube.” However, in Google AI Studio it does have that capability, via grounding in Google Search, and more. If you don’t pay for Gemini Advanced, just go to Google AI Studio – it’s free!

Because Gemini 2.0 Pro in Gemini Advanced lacks a connection to web search (as of now), I found 2.0 Flash Thinking Experimental with apps more useful in practice, and I have been impressed with the speed and quality of its results. Having Google Search built in is incredibly useful: you get grounded answers that have also been reasoned over, so they are more thoughtful.

Combining AI reasoning and web search in one AI model is a more tactical version of the Deep Research AI agent flow. It won’t give you a multi-page report, but you can get a thorough answer to a specific question.

Like the Perplexity tool, this can end up replacing search, because combining AI reasoning with search lets it think, analyze, and then report back a factual answer. Once you start using it, there’s no going back; it’s simply more time-efficient. Deeply useful.

I used it to find, collate, and report on benchmark numbers, which was helpful and saved me grunt work. However, it’s not perfect and its output still needs checking: it hallucinated GPT-4o benchmark numbers in one query. Also, while it’s great at reading text in images, it cannot read PDF documents.

o3-mini – First Use

OpenAI’s Deep Research, based on the o3 model, is only available to ChatGPT Pro users willing to pay $200 a month. But as a Plus user, I have access to o3-mini, and I have started using it. My experience with o3-mini so far has been next-level.

The o3-mini model is the world’s highest-performing AI reasoning model currently available. While being especially powerful in mathematical reasoning and coding, o3-mini also conveys impressive general knowledge and factuality, making it highly versatile and useful for a wide range of tasks.

o3-mini offers a potent combination of speed, affordability, features, and enhanced reasoning, delivering responses 24% faster than o1-mini at a 63% lower price.

Via the API, o3-mini costs $1.10 per million input tokens ($0.55 per million cached input tokens) and $4.40 per million output tokens; developers can access it through OpenAI or Azure. That’s not costly for the top AI reasoning model, but it’s more than the open-source DeepSeek R1 or Gemini 2.0 Flash Thinking costs.
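For a feel of what per-million-token pricing means per request, here is a back-of-the-envelope Python sketch. The $1.10 input and $4.40 output prices come from this article and $0.55 is OpenAI's listed o3-mini cached-input price at launch; the o1-mini prices ($3.00 input, $12.00 output) are an assumption based on its launch list prices, used only to show where the 63% figure comes from. The request sizes are hypothetical. One design point worth knowing: reasoning models bill their hidden reasoning tokens as output, so output token counts can be much larger than the visible answer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD."""
    input_per_m: float
    output_per_m: float
    cached_input_per_m: Optional[float] = None  # None: no cache discount


# $1.10 input and $4.40 output come from the article; $0.55 cached input is
# OpenAI's listed o3-mini price at launch.
O3_MINI = Pricing(input_per_m=1.10, output_per_m=4.40, cached_input_per_m=0.55)
# ASSUMPTION: o1-mini's launch list prices, used only for comparison.
O1_MINI = Pricing(input_per_m=3.00, output_per_m=12.00)


def request_cost(p: Pricing, input_tokens: int, output_tokens: int,
                 cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one API request.

    cached_tokens is the part of input_tokens served from the prompt cache.
    For reasoning models, output_tokens should include the hidden reasoning
    tokens, since those are billed as output.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    cached_rate = (p.cached_input_per_m if p.cached_input_per_m is not None
                   else p.input_per_m)
    uncached_tokens = input_tokens - cached_tokens
    total = (uncached_tokens * p.input_per_m
             + cached_tokens * cached_rate
             + output_tokens * p.output_per_m)
    return total / 1_000_000


def percent_cheaper(new: Pricing, old: Pricing) -> float:
    """Per-token price reduction of `new` vs `old`, in percent (input rate)."""
    return 100 * (1 - new.input_per_m / old.input_per_m)


if __name__ == "__main__":
    # Hypothetical request: 10,000 uncached input tokens and 5,000 output
    # tokens (visible answer plus reasoning tokens).
    print(f"o3-mini: ${request_cost(O3_MINI, 10_000, 5_000):.4f}")
    print(f"o1-mini: ${request_cost(O1_MINI, 10_000, 5_000):.4f}")
    print(f"o3-mini per-token price is "
          f"{percent_cheaper(O3_MINI, O1_MINI):.0f}% lower")
```

With these assumed prices, the hypothetical request costs $0.033 on o3-mini versus $0.09 on o1-mini. Both the input and output rates work out to roughly a 63% reduction, since 1.10/3.00 and 4.40/12.00 are each about 0.37.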

A key feature of o3-mini in ChatGPT is web search. As with Gemini 2.0 Flash Thinking with apps, this feature is essential for making it useful on most tasks. Lacking it is like having a Ferrari stuck in the driveway. With it, you have a wizard research assistant (a Deep Research-mini) that can quickly and efficiently analyze and report on any question or problem.

Aside from the quality of the responses, I was most impressed by the speed of o3-mini. Unlike the much slower o1 model, o3-mini will, within seconds, both reason and generate an on-target, detailed, and reasoned response.

Deeply Useful AI Research – My Health

While preparing this article, I thought I’d try out the options at my disposal (o3-mini, Gemini 2.0 Flash Thinking, and Google Deep Research) to help with an actual medical issue I’m facing: persistently high ferritin levels in my blood.

I have gotten ferritin numbers as high as 700 ng/mL on blood tests, well over the 400 ng/mL upper limit deemed normal. Ferritin, an iron-storage protein measured in the blood, can be elevated by inflammation, infection, or iron overload. But multiple tests to sort out what is going on have found my other iron levels normal and inflammation low, while my red blood cell and platelet counts were low, almost at anemia levels. I have no symptoms.

It’s an atypical enough medical situation that the doctors ordered more blood tests, but the cause is still elusive. Google searches and primers only left me confused and puzzled by the myriad possible conditions.

Enter AI. I first posed the question to Google Deep Research. It initially refused to answer, clearly censored to avoid giving direct medical advice. I recast it as a general query and, within minutes, got a detailed multi-page report on the conditions, diagnosis, and causes of elevated ferritin, citing over 30 web sources, several of them highly relevant.

Follow-up requests yielded more detail, until I had 13 pages of explanation of possible conditions.

I followed up by asking o3-mini more pointed questions, giving it my specific biomarkers and asking about possible conditions (such as Gaucher disease and thalassemia).

After multiple queries, o3-mini gave me a specific explanation that matched all my biomarkers. It referenced web sources and generated detailed answers, quickly. The money quote from its summary:

“In summary, when ferritin is moderately elevated (around 480 ng/mL) with normal iron biomarkers, no inflammation, and a normal-to-high reticulocyte count plus a mildly enlarged spleen, the picture is most consistent with a chronic, compensated process such as a mild hemoglobinopathy (like thalassemia trait) or a mild hemolytic condition (such as hereditary spherocytosis). These conditions lead to increased iron absorption (or recycling) secondary to ineffective erythropoiesis or low-grade extravascular hemolysis, even in the absence of overt inflammation or iron overload by conventional markers.”

Yes, once you wade through the jargon, it all makes sense. This likely explains what is going on with me, and it figured this out in under 15 minutes. It took longer for me to read and absorb the AI-generated reports than for the AI models to generate their answers in the first place.

I’m beyond impressed. This is really useful. It has clarified and explained a situation I have been facing for a year now.

Could it be wrong? Sure, but the report also shared other possibilities, and the doctor hasn’t drawn any conclusion yet anyway. It’s research, not a diagnosis, and you don’t need the AI research assistant to be perfect for it to be helpful.

I now know why there is hype and excitement around Deep Research and these new AI reasoning models. We have reached a new level of utility with these more powerful AI models and unlocked a LOT of value. These give us a 10X acceleration in many tasks, from the mundane, like shopping for shoes, to the vital, like medical advice for treating cancer. You can feel the AGI.

Conclusion

I am extremely impressed with Gemini 2.0 Flash Thinking and o3-mini. Both are useful, fast, and high-performing AI reasoning models. Try them out.

This advice was for Deep Research, but it applies to these AI models as well:

“Rebuild your strategy and business model from scratch on a greenfield basis and check how much of your legacy world you can carry over into the (near) future.”

I don’t have a PhD-level quest for the AI models to complete right now; most people won’t have that on their daily to-do lists. Still, we need to think about which tasks and workflows can leverage these AI models and tools, and be aggressive in applying them to more tasks for our own productivity.

These new AI models are deeply useful, next-level AI reasoning engines that can do more than ever before. So, use the AI tools to do more. Leverage AI. The future is closer than you think.

I need to add a coda and a warning: I was so impressed by o3-mini helping me research a health issue in depth that I’ve gone down a rabbit hole of asking it many detailed medical questions related to my issue. It’s been going great … until o3-mini said this in its own reasoning trail:

“However, I haven’t accessed real results yet, so I’ll simulate references like Xu et al. (2015), where rapamycin helped a patient with refractory warm AIHA.”

WHAT? It will just invent references when it doesn’t have them? (And yes, its final answer cited this fake reference, linking to a website that made no mention of a “Xu et al.” paper.)

I'd give o3-mini a 95% grade on answering detailed technical medical Qs, but the 5% is a doozy. Trust but verify, folks.

What's your experience with o3-mini, DeepSeek R1 or Gemini 2.0 models? Is it as positive as my experience? Will you be expanding how and what you use AI for?