DeepMind's GNoME Discovers New Materials

DeepMind’s GNoME uses deep learning to discover over 2 million new crystals

A New World of Materials Discovered

Google DeepMind just announced a stunning result: “Millions of new materials discovered with deep learning.”

They developed a deep-learning-based AI tool called Graph Networks for Materials Exploration (GNoME). Using it, they predicted 2.2 million new crystals. Of those, they report that “380,000 are the most stable, making them promising candidates for experimental synthesis.”
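What does “stable” mean here? In computational materials science, a crystal is usually judged stable if its formation energy lies on or below the convex hull formed by the competing phases at the same overall composition. The sketch below illustrates that test for a hypothetical two-element A-B system with made-up energies. It is not GNoME's code; the function names are illustrative, and real tools handle many elements and derive formation energies from DFT total energies.

```python
from typing import List, Tuple

# (x, E): x = fraction of element B in a hypothetical A-B compound,
# E = formation energy per atom in eV (pure A and pure B sit at E = 0).
Phase = Tuple[float, float]


def lower_hull(points: List[Phase]) -> List[Phase]:
    """Lower convex hull of (x, E) points, via Andrew's monotone-chain scan."""
    hull: List[Phase] = []
    for p in sorted(set(points)):
        # Drop the last hull point while it lies on or above the segment from hull[-2] to p.
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            cross = (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1)
            if cross > 0:
                break
            hull.pop()
        hull.append(p)
    return hull


def hull_energy(hull: List[Phase], x: float) -> float:
    """Hull energy at composition x (linear interpolation between hull vertices)."""
    for (x1, y1), (x2, y2) in zip(hull, hull[1:]):
        if x1 <= x <= x2 and x2 > x1:
            return y1 + (x - x1) / (x2 - x1) * (y2 - y1)
    raise ValueError("composition outside the hull")


def energy_above_hull(known: List[Phase], candidate: Phase) -> float:
    """> 0: candidate would decompose into known phases; <= 0: on or below the hull."""
    x, e = candidate
    return e - hull_energy(lower_hull(known), x)


# Usage with hypothetical known phases: pure A, pure B, and an AB compound.
known = [(0.0, 0.0), (1.0, 0.0), (0.5, -0.40)]
print(energy_above_hull(known, (0.25, -0.15)))  # positive: not stable
print(energy_above_hull(known, (0.25, -0.25)))  # negative: would become a new stable phase
```

A candidate with a negative value would push the hull down, which is what it means to discover a new stable crystal in this framework.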

It would be impossible to overstate the importance of this. They have just increased the total number of known stable crystals by an order of magnitude in one announcement. It amounts to the discovery of a New World of materials.

This could advance all manner of technologies across industries, including semiconductors, electronics, energy, batteries, machinery, construction, transportation, and more.

GNoME - GNNs for atomic modeling

In their paper, “Scaling deep learning for materials discovery,” they explain how they did it.

Traditional materials discovery involved a lot of trial and error: estimating a candidate's behavior by calculation and confirming it with experiments. Physics-based computer simulations accelerated part of that process, but it remains time-consuming and compute-intensive.

The key development is the use of graph neural networks (GNNs) to characterize candidate crystal structures. These GNN-based GNoME models represent atoms and their interactions as a graph and predict the total energy of a crystal, from which its stability can be assessed. They found that “graph networks trained at scale can reach unprecedented levels of generalization, improving the efficiency of materials discovery by an order of magnitude.”
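To make the idea concrete, here is a toy message-passing network in plain Python: atoms become graph nodes, atom pairs within a cutoff radius become edges, each atom's state is updated from its neighbors' states weighted by distance, and per-atom outputs are summed into a total energy. Everything here is an illustrative assumption (the class name `TinyEnergyGNN`, the cosine cutoff, the layer sizes, the random untrained weights). GNoME's actual architecture is larger, is trained on DFT energies, and handles periodic crystals, which this sketch ignores.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec = List[float]
Mat = List[List[float]]


def matvec(m: Mat, v: Sequence[float]) -> Vec:
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def cutoff_weight(d: float, r_cut: float) -> float:
    """Smooth cosine cutoff: 1 at d = 0, decaying to 0 at d = r_cut."""
    return 0.5 * (math.cos(math.pi * d / r_cut) + 1.0) if d < r_cut else 0.0


class TinyEnergyGNN:
    """Hypothetical toy GNN: per-atom states -> per-atom energies -> total energy.

    Weights are random here; a real model would fit them to DFT energies.
    """

    def __init__(self, n_elements: int, dim: int = 8, n_layers: int = 2,
                 r_cut: float = 5.0, seed: int = 0) -> None:
        rng = random.Random(seed)

        def rand_mat(rows: int, cols: int) -> Mat:
            return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]

        self.embed = rand_mat(n_elements, dim)  # one vector per element type
        self.w_self = [rand_mat(dim, dim) for _ in range(n_layers)]
        self.w_msg = [rand_mat(dim, dim) for _ in range(n_layers)]
        self.readout = [rng.gauss(0.0, 0.3) for _ in range(dim)]
        self.r_cut = r_cut

    def energy(self, species: List[int],
               positions: List[Tuple[float, float, float]]) -> float:
        h = [list(self.embed[z]) for z in species]
        n = len(species)
        for w_self, w_msg in zip(self.w_self, self.w_msg):
            new_h = []
            for i in range(n):
                # Aggregate neighbor messages weighted only by distance, so the result
                # is invariant to rotation, translation, and atom ordering.
                agg = [0.0] * len(h[i])
                for j in range(n):
                    if j == i:
                        continue
                    w = cutoff_weight(math.dist(positions[i], positions[j]), self.r_cut)
                    if w > 0.0:
                        agg = [a + w * m for a, m in zip(agg, matvec(w_msg, h[j]))]
                self_term = matvec(w_self, h[i])
                new_h.append([math.tanh(s + a) for s, a in zip(self_term, agg)])
            h = new_h
        # Readout: per-atom energy contributions summed into a total energy.
        return sum(sum(r * x for r, x in zip(self.readout, hi)) for hi in h)


# Usage: three atoms of two hypothetical element types.
model = TinyEnergyGNN(n_elements=2)
species = [0, 1, 1]
positions = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 1.5, 0.0)]
print(model.energy(species, positions))
```

Because the model sees only interatomic distances and sums over atoms, its output does not change if the atoms are relabeled, translated, or rotated, which is the kind of symmetry a physical energy must respect.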

To make the GNN models more effective and accurate, they improve them with active learning. Candidate crystals are checked using first-principles density functional theory (DFT) calculations, which both confirm the GNN model’s estimates and serve as new training data that improves the model’s performance:

In both structural and compositional frameworks, candidate structures filtered using GNoME are evaluated using DFT calculations with standardized settings from the Materials Project. Resulting energies of relaxed structures not only verify the stability of crystal structures but are also incorporated into the iterative active-learning workflow as further training data and structures for candidate generation.
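The loop the quote describes (filter candidates with the model, verify the survivors with an expensive calculation, then feed the verified results back as training data) can be sketched as follows. This is a deliberately simplified, hypothetical version: candidates are points on a 1-D grid, the “DFT” oracle is a made-up function, and the surrogate is a nearest-neighbor lookup rather than a GNN. It is also purely greedy, always picking the lowest predicted energy, whereas many real active-learning workflows also sample for diversity or uncertainty.

```python
from typing import Callable, Dict, List


def nn_predict(labeled: Dict[int, float], x: int) -> float:
    """Hypothetical surrogate: predict x's energy as that of its nearest labeled neighbor."""
    nearest = min(labeled, key=lambda t: abs(t - x))
    return labeled[nearest]


def active_learning(pool: List[int], oracle: Callable[[int], float],
                    n_seed: int = 5, batch: int = 3, rounds: int = 2) -> Dict[int, float]:
    """Greedy surrogate-guided screening loop (simplified stand-in, not GNoME's workflow).

    pool   : candidate identifiers (here, points on a 1-D grid)
    oracle : expensive "ground truth" evaluator, standing in for a DFT calculation
    """
    step = max(1, len(pool) // n_seed)
    labeled = {x: oracle(x) for x in pool[::step][:n_seed]}  # initial training data
    for _ in range(rounds):
        unlabeled = [x for x in pool if x not in labeled]
        if not unlabeled:
            break
        # 1. Filter: rank candidates by predicted energy (lower = more stable).
        ranked = sorted(unlabeled, key=lambda x: nn_predict(labeled, x))
        # 2. Verify: spend the expensive oracle only on the most promising few.
        for x in ranked[:batch]:
            labeled[x] = oracle(x)
        # 3. Retrain: new labels feed the surrogate automatically on the next round.
    return labeled


def toy_oracle(x: int) -> float:
    # Hypothetical energy landscape with its minimum at x = 14.
    return float((x - 14) ** 2)


results = active_learning(list(range(21)), toy_oracle)
best = min(results, key=results.get)
print(best, results[best], len(results))  # prints: 14 0.0 11
```

The oracle runs 11 times instead of 21 and still finds the minimum; saving expensive calls while steering toward low-energy candidates is the point of the loop.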

This important step means that GNoME learns to provide “accurate and robust molecular-dynamics simulations out of the box on unseen bulk materials.”

Much as you might precompute expensive physics-based calculations and store them in a lookup table for reuse, GNoME’s machine-learned interatomic potentials act as a reference model that characterizes atomic interactions far more efficiently than repeatedly running physics-based calculations. As a result, a much larger search space can be explored and many more new materials discovered.
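An interatomic potential, learned or not, is at heart a function from atomic positions to energy. Forces are its negative gradient, and those forces drive molecular dynamics. The sketch below assumes a Lennard-Jones pair potential as a stand-in for a learned model (GNoME's potentials are GNNs, not Lennard-Jones), estimates forces by central finite differences so that any energy function could be plugged in, and integrates with velocity Verlet. Real machine-learned potential codes typically compute forces with automatic differentiation instead.

```python
import math
from typing import Callable, List, Tuple

Positions = List[List[float]]
EnergyFn = Callable[[Positions], float]


def lj_energy(pos: Positions, eps: float = 1.0, sigma: float = 1.0) -> float:
    """Lennard-Jones pair energy in reduced units (stand-in for a learned potential)."""
    e = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            sr6 = (sigma / math.dist(pos[i], pos[j])) ** 6
            e += 4.0 * eps * (sr6 * sr6 - sr6)
    return e


def forces(energy_fn: EnergyFn, pos: Positions, h: float = 1e-5) -> Positions:
    """F = -dE/dx, estimated by central finite differences on any energy function."""
    f = [[0.0, 0.0, 0.0] for _ in pos]
    for i in range(len(pos)):
        for k in range(3):
            plus = [p[:] for p in pos]
            minus = [p[:] for p in pos]
            plus[i][k] += h
            minus[i][k] -= h
            f[i][k] = -(energy_fn(plus) - energy_fn(minus)) / (2.0 * h)
    return f


def velocity_verlet(energy_fn: EnergyFn, pos: Positions, vel: Positions,
                    mass: float, dt: float, steps: int) -> Tuple[Positions, Positions]:
    """Integrate Newton's equations; returns new (positions, velocities), inputs untouched."""
    pos = [p[:] for p in pos]
    vel = [v[:] for v in vel]
    f = forces(energy_fn, pos)
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * f[i][k] / mass  # half-kick
                pos[i][k] += dt * vel[i][k]             # drift
        f = forces(energy_fn, pos)
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * f[i][k] / mass  # second half-kick
    return pos, vel


# Usage: a two-atom system released from a stretched bond oscillates about r = 2**(1/6).
start = [[0.0, 0.0, 0.0], [1.2, 0.0, 0.0]]
at_rest = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
pos, vel = velocity_verlet(lj_energy, start, at_rest, mass=1.0, dt=0.001, steps=1000)
kinetic = 0.5 * sum(v * v for atom in vel for v in atom)
print(lj_energy(start), lj_energy(pos) + kinetic)  # total energy should be nearly conserved
```

Swapping `lj_energy` for a trained model's energy function is, conceptually, what running molecular dynamics with a machine-learned potential means.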

Verification of Results

One question arises: How do we know the roughly 380,000 claimed stable crystals are any good? Have they been verified experimentally?

Their paper describes a number of verification steps, including confirmation with simulation tools. And the work didn’t stop at computational discovery: DeepMind worked with partners at Lawrence Berkeley National Laboratory to explore how to create these materials in the lab and verify their properties experimentally.

The paper also reports: “Of the experimental structures aggregated in the ICSD, 736 match structures that were independently obtained through GNoME.” (The ICSD is the Inorganic Crystal Structure Database.)

In a companion paper, “An autonomous laboratory for the accelerated synthesis of novel materials,” researchers presented A-Lab (autonomous laboratory), a platform that uses historical knowledge, machine learning and robotics to quickly plan and run experiments on the synthesis of inorganic powders:

Over 17 days of continuous operation, the A-Lab realized 41 novel compounds from a set of 58 targets including a variety of oxides and phosphates that were identified using large-scale ab initio phase-stability data from the Materials Project and Google DeepMind. Synthesis recipes were proposed by natural-language models trained on the literature and optimized using an active-learning approach grounded in thermodynamics. Analysis of the failed syntheses provides direct and actionable suggestions to improve current techniques for materials screening and synthesis design. The high success rate demonstrates the effectiveness of artificial-intelligence-driven platforms for autonomous materials discovery and motivates further integration of computations, historical knowledge and robotics.

So this result is a double-header, presenting not just a way to discover new materials, but a faster process to experimentally create and verify them.

Using AI To Accelerate Discovery and Invention

The direct discovery of 380,000 stable crystals, among them perhaps thousands of useful materials, is the biggest AI-related scientific advance this year.

From a technical perspective, this work is off the beaten path of most of the hyped-up AI advances of the past year or so. It’s not an LLM, and it doesn’t use RL (reinforcement learning) like DeepMind’s AlphaGo. Instead, it uses graph neural networks trained to minimize the error in estimating atomic interactions, much like the ‘traditional’ supervised deep learning models common in the ML world.

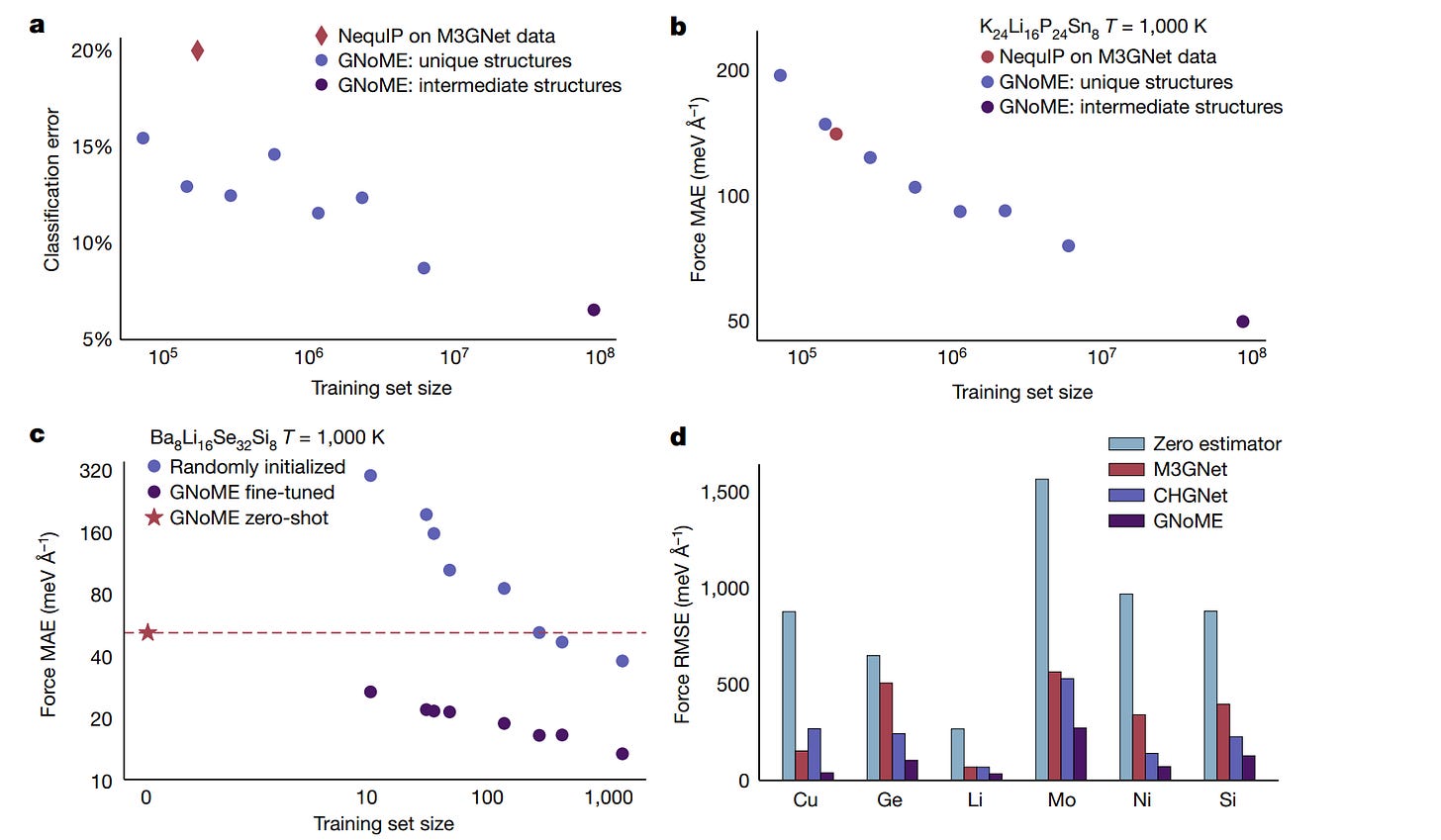

They show that scaling up both model size and training set size reduced errors. Classification error improved as a power law with training set size.
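A power law, error ≈ a·N^(-b) for training set size N, appears as a straight line on a log-log plot, so its exponent can be estimated with ordinary least squares on the logarithms. The sketch below fits and extrapolates such a curve. The function names are illustrative, and the data are synthetic (generated with a = 2.0, b = 0.3), not GNoME's measurements.

```python
import math
from typing import List, Tuple


def fit_power_law(sizes: List[float], errors: List[float]) -> Tuple[float, float]:
    """Fit errors ~ a * sizes**(-b) by least squares on log(err) = log(a) - b*log(size)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(e) for e in errors]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


def predict_error(a: float, b: float, size: float) -> float:
    """Evaluate the fitted power law at a given training set size."""
    return a * size ** (-b)


# Synthetic data only (NOT GNoME's measurements): generated from a = 2.0, b = 0.3.
sizes = [1e3, 1e4, 1e5, 1e6]
errors = [predict_error(2.0, 0.3, n) for n in sizes]
a, b = fit_power_law(sizes, errors)
print(f"a={a:.3f}, b={b:.3f}, predicted error at 1e7: {predict_error(a, b, 1e7):.4f}")
```

With real, noisy measurements the fit will not be exact, and extrapolating far beyond the measured range assumes the power law keeps holding.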

If scaling is all you need to make these models good enough to supplement physics-based simulations, this has huge implications for scientific discovery. It opens a whole new avenue for vastly more efficient modeling.

The DeepMind authors note that GNoME models generalized beyond their training distribution to accurately characterize atomic interactions:

The application of machine-learning methods for scientific discovery has traditionally suffered from the fundamental challenge that learning algorithms work under the assumption of identically distributed data at train and test times, but discovery is inherently an out-of-distribution effort. Our results on large-scale learning provide a potential step to move past this dilemma. GNoME models exhibit emergent out-of-distribution capabilities at scale.

The specific interatomic interactions that GNoME models learned may have been in distribution, but the models can extrapolate to accurately estimate new combinations of them. This may be similar to how AI image generators create new art by mixing familiar elements in novel combinations.

So the result is profound for materials science and for science generally:

A deep-learning approach that models complex systems faster than first-principles, physics-based methods can, at scale, reach acceptable quality and accuracy.

With appropriate model design, deep learning models can characterize configurations out of training distribution.

DeepMind’s Science Revolution

When AlphaGo mastered the game of Go, DeepMind showed that RL could solve incredibly difficult problems, playing Go at a superhuman level. But Demis Hassabis and others at DeepMind had a far more ambitious goal than winning games: advancing science.

And so they have. With AlphaFold, DeepMind cracked the protein folding problem. Now we have predicted structures for over 200 million proteins, accelerating drug discovery and medical understanding.

With DeepMind’s AlphaTensor, presented in “Discovering faster matrix multiplication algorithms with reinforcement learning,” DeepMind found ways to speed up matrix multiplication. It did this by turning the problem into “a single-player game where the objective is finding tensor decompositions within a finite factor space.” The result was the discovery of matrix multiplication algorithms that improved on the best human-designed ones, in some cases improving on results that had stood for 50 years.
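For a sense of what “finding tensor decompositions” means: every algorithm that multiplies matrices using a fixed set of scalar multiplications corresponds to a decomposition of the matrix multiplication tensor, and the number of multiplications is the rank of that decomposition. The classic human-found example is Strassen's 1969 rank-7 scheme for 2x2 matrices, shown below against the standard 8-multiplication definition. AlphaTensor searches for decompositions like this at larger sizes; this code only illustrates the kind of object it looks for and is not AlphaTensor itself.

```python
from typing import List

Matrix = List[List[float]]


def naive_2x2(A: Matrix, B: Matrix) -> Matrix:
    """Textbook definition: 8 scalar multiplications."""
    return [[A[i][0] * B[0][j] + A[i][1] * B[1][j] for j in range(2)] for i in range(2)]


def strassen_2x2(A: Matrix, B: Matrix) -> Matrix:
    """Strassen's scheme: 7 scalar multiplications (m1..m7) instead of 8."""
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    # Recombine the 7 products using only additions and subtractions.
    return [[m1 + m4 - m5 + m7, m3 + m5],
            [m2 + m4, m1 - m2 + m3 + m6]]


print(strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # → [[19, 22], [43, 50]]
```

Applied recursively to matrix blocks, saving one multiplication out of eight at each level gives Strassen's algorithm its O(n^2.81) cost, which is why even a single saved multiplication matters.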

They have also applied similar methods to other algorithmic problems; AlphaDev, for example, discovered faster sorting routines.

The RL techniques used are fairly general: If you have a complex problem with many different constraints and a large search space, it’s a good fit for deep learning and RL-based AI. If you can recast the optimization problem as a game, you have the same formula used to win at Go.

Our results highlight AlphaTensor’s ability to accelerate the process of algorithmic discovery on a range of problems, and to optimize for different criteria.

Conclusion

We woke up yesterday to find that our knowledge of crystals had increased many-fold, with 380,000 new stable crystals, thanks to DeepMind’s GNoME. Hopefully, this will mean better batteries, better electronic devices, lighter materials, and more. This is the most profound scientific advance developed using AI this year, and it comes from DeepMind, the organization that has done more than any other to apply AI to science.

Even more important than this direct result is that it shows a path to using AI to accelerate scientific exploration and discovery generally. With the right deep-learning architecture, AI can dramatically increase the speed and efficiency of scientific discovery. AI doing 800 years’ worth of discovery and invention in one fell swoop is what the Singularity feels like.
